Not long ago, the data analytics world relied on monolithic infrastructures: tightly coupled systems that were difficult to scale, maintain, and adapt to changing needs. These legacy setups often resulted in operational bottlenecks, delayed insights, and high maintenance costs. To overcome these challenges, the industry shifted toward what became known as the Modern Data Stack (MDS), a suite of focused tools optimized for specific stages of the data engineering lifecycle.

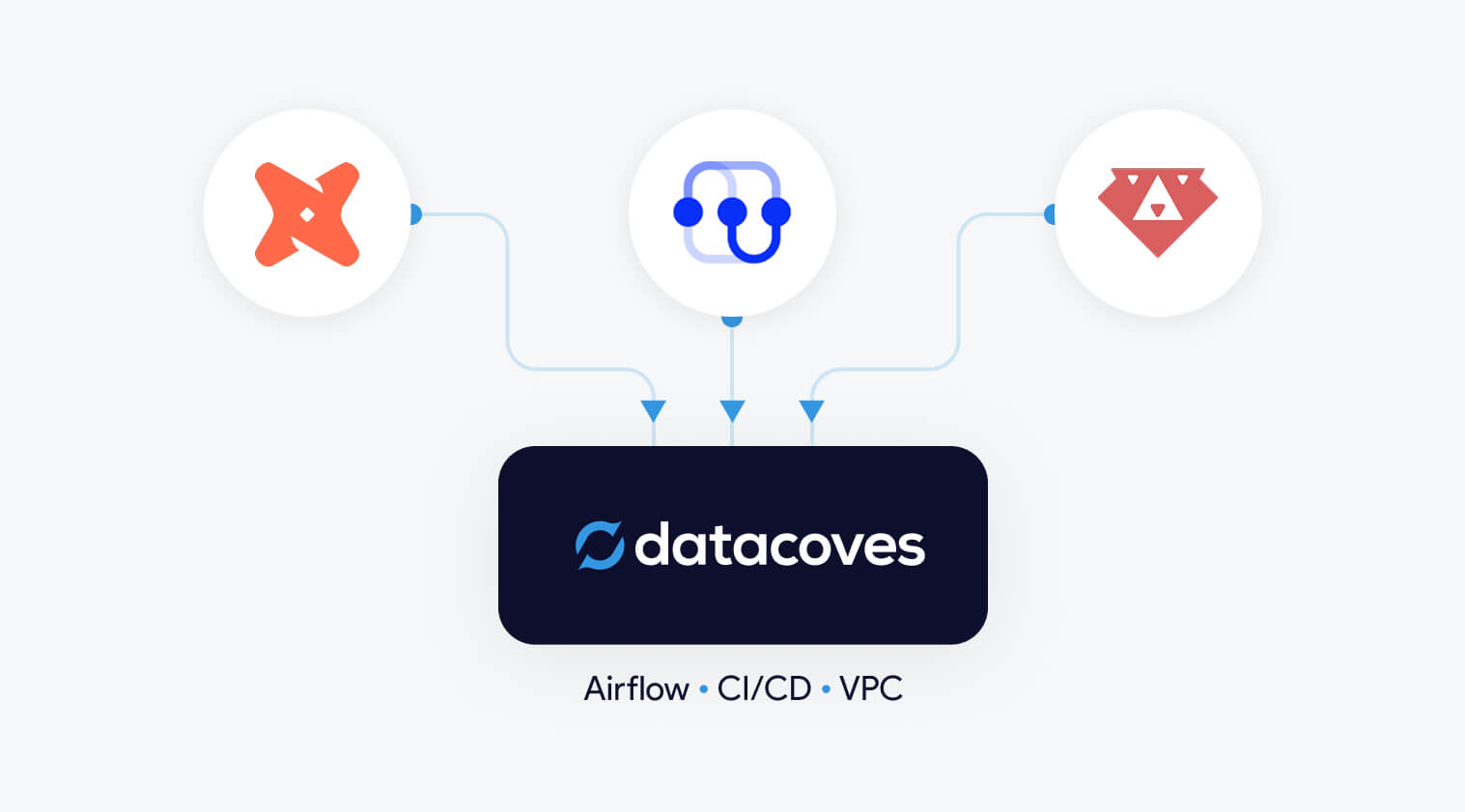

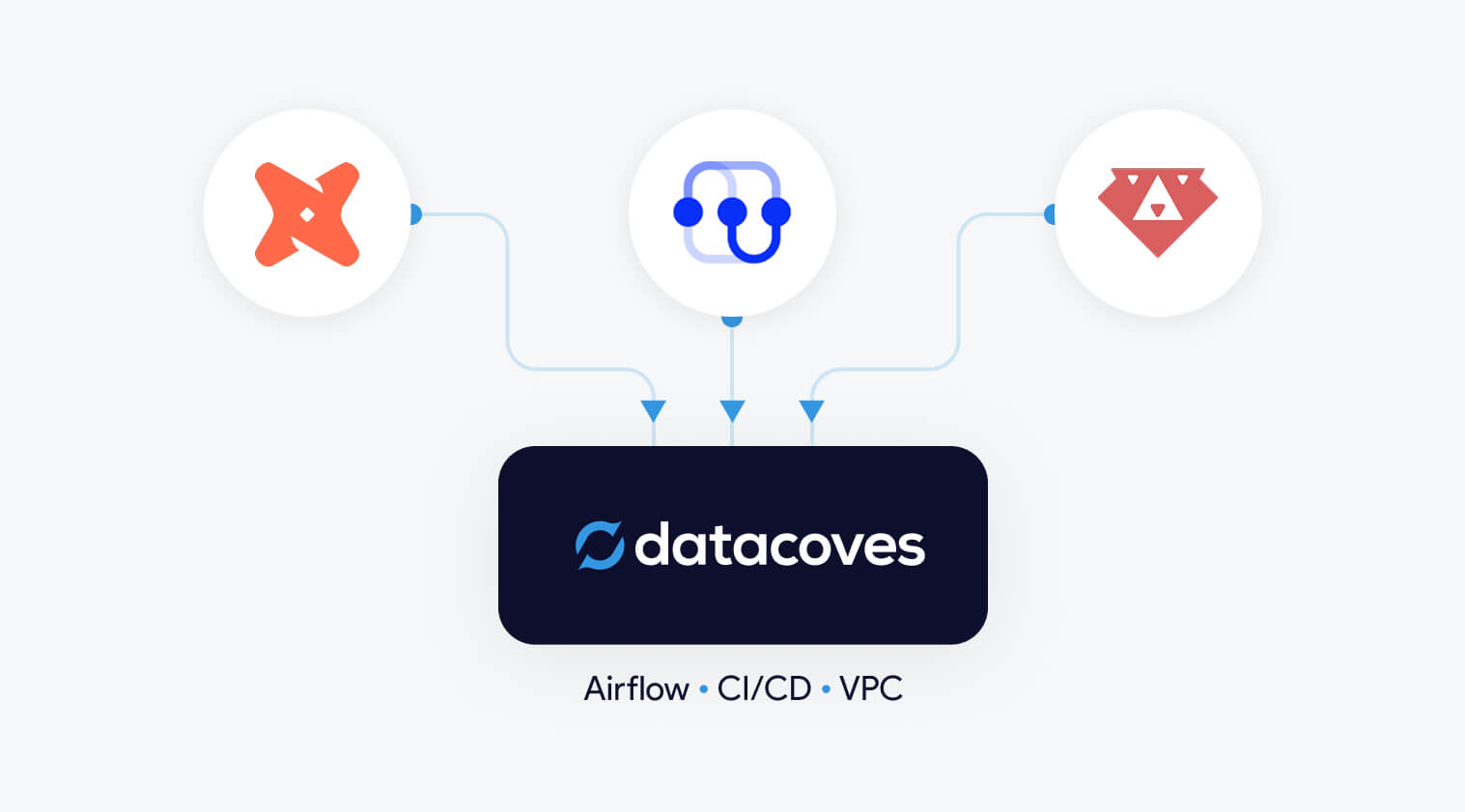

This modular approach was revolutionary, allowing organizations to select best-in-class tools like Airflow for Orchestration or a managed version of Airflow from Astronomer or Amazon without the need to build custom solutions. While the MDS improved scalability, reduced complexity, and enhanced flexibility, it also reshaped the build vs. buy decision for analytics platforms. Today, instead of deciding whether to create a component from scratch, data teams face a new question: Should they build the infrastructure to host open-source tools like Apache Airflow and dbt Core, or purchase their managed counterparts? This article focuses on these two components because pipeline orchestration and data transformation lie at the heart of any organization’s data platform.

When we say build in terms of open-source solutions, we mean building infrastructure to self-host and manage mature open-source tools like Airflow and dbt. These two tools are popular because they have been vetted by thousands of companies! In addition to hosting and managing, engineers must also ensure these tools interoperate within their stack and handle security, scalability, and reliability. Needless to say, building is a huge undertaking that should not be taken lightly.

dbt and Airflow both started out as open-source tools, which were freely available to use due to their permissive licensing terms. Over time, cloud-based managed offerings of these tools were launched to simplify the setup and development process. These managed solutions build upon the open-source foundation, incorporating proprietary features like enhanced user interfaces, automation, security integration, and scalability. The goal is to make the tools more convenient and reduce the burden of maintaining infrastructure while lowering overall development costs. In other words, paid versions arose out of the pain points of self-managing the open-source tools.

This raises an important question: Should you self-manage or pay for your open-source analytics tools?

As with most things, both options come with trade-offs, and the “right” decision depends on your organization’s needs, resources, and priorities. By understanding the pros and cons of each approach, you can choose the option that aligns with your goals, budget, and long-term vision.

A team building Airflow in-house may spend weeks configuring a Kubernetes-backed deployment, managing Python dependencies, and setting up DAG file synchronization via S3 or Git. While the outcome can be tailored to their needs, the time and expertise required represent a significant investment.
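To give a sense of what even one piece of that work involves, here is a minimal Python sketch of DAG file synchronization. It assumes a local checkout of a Git repository (`source_dir`) and the folder Airflow reads DAGs from (`dags_dir`); both names, and the `sync_dags` helper itself, are hypothetical and not part of Airflow. Production setups more commonly rely on a git-sync sidecar or an S3 sync job, so treat this only as an illustration of the moving parts.

```python
import hashlib
import shutil
from pathlib import Path
from typing import List


def file_digest(path: Path) -> str:
    """Return a SHA-256 digest of the file's contents."""
    return hashlib.sha256(path.read_bytes()).hexdigest()


def sync_dags(source_dir: Path, dags_dir: Path) -> List[str]:
    """Copy new or changed .py files from source_dir into dags_dir.

    Returns the relative paths that were copied. Files deleted from the
    source are NOT removed from dags_dir in this sketch.
    """
    copied = []
    for src in sorted(source_dir.rglob("*.py")):
        rel = src.relative_to(source_dir)
        dest = dags_dir / rel
        # Skip files whose contents already match the deployed copy.
        if dest.exists() and file_digest(dest) == file_digest(src):
            continue
        dest.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(src, dest)
        copied.append(rel.as_posix())
    return copied
```

Even this small helper leaves open questions a self-hosting team would have to answer, such as how deletions are handled, how often the sync runs, and what happens when a copied file fails to parse.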

Before moving on to the buy tradeoffs, it is important to set the record straight. You may have noticed that we did not include “the tool is free to use” as one of our pros for building with open-source. You might have guessed by reading the title of this section, but many people incorrectly believe that building their MDS using open-source tools like dbt is free. In reality, many factors contribute to the true dbt pricing, and the same is true for Airflow.

How can that be? Well, setting up everything you need and managing infrastructure for Airflow and dbt isn’t necessarily plug and play. The day-to-day work of managing Python virtual environments, keeping dependencies in check, and tackling scaling challenges requires ongoing expertise and attention. Hiring a team to handle this will be critical, particularly as you scale. High salaries and benefits are needed to avoid costly mistakes; this can easily cost anywhere from $5,000 to $26,000+/month depending on the size of your team.
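As a quick back-of-the-envelope check, the monthly range above can be annualized. The Python sketch below only multiplies the article's figures by twelve; it assumes the monthly cost stays flat all year and ignores infrastructure and tooling spend, and the function names are made up for the example.

```python
from typing import Tuple

# Monthly staffing range quoted in the article (USD).
MONTHLY_COST_LOW = 5_000
MONTHLY_COST_HIGH = 26_000


def annualize(monthly_cost: int) -> int:
    """Convert a flat monthly cost into a yearly cost."""
    return monthly_cost * 12


def annual_range(low: int, high: int) -> Tuple[int, int]:
    """Return the (low, high) yearly cost for a monthly range."""
    return annualize(low), annualize(high)


low, high = annual_range(MONTHLY_COST_LOW, MONTHLY_COST_HIGH)
print(f"${low:,} to ${high:,}+ per year")
# prints: $60,000 to $312,000+ per year
```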

In addition to the cost of salaries, let’s look at other possible hidden costs that come with using open-source tools.

The time it takes to configure, customize, and maintain a complex open-source solution is often underestimated. It’s not until your team is deep in the weeds, resolving issues, figuring out integrations, and troubleshooting configurations, that the actual costs start to surface. With each passing day your ROI is threatened. You want to start gathering insights from your data as soon as possible. Datacoves helped Johnson and Johnson set up their data stack in weeks, and when issues arise, you will need expertise to accelerate the time to resolution.

And then there’s the learning curve. Not all engineers on your team will be seniors, and turnover is inevitable. New hires will need time to get up to speed before they can contribute effectively. This is the human side of technology: while the tools themselves might move fast, people don’t. That ramp-up period, filled with training and trial-and-error, represents a hidden cost.

Security and compliance add another layer of complexity. With open-source tools, your team is responsible for implementing best practices—like securely managing sensitive credentials with a solution like AWS Secrets Manager. Unlike managed solutions, these features don’t come prepackaged and need to be integrated with the system.
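As one illustration of that integration work, the sketch below reads warehouse credentials from AWS Secrets Manager instead of hard-coding them. It assumes the secret is stored as a JSON string; the `load_warehouse_credentials` helper and any secret name passed to it are hypothetical. The client is passed in as an argument so the function can be exercised without AWS access.

```python
import json
from typing import Any, Dict


def load_warehouse_credentials(client: Any, secret_id: str) -> Dict[str, str]:
    """Fetch a JSON secret and return it as a dict.

    `client` is expected to behave like boto3.client("secretsmanager").
    Assumes the secret was stored as a JSON string, not as binary.
    """
    # get_secret_value returns the secret text under "SecretString".
    response = client.get_secret_value(SecretId=secret_id)
    return json.loads(response["SecretString"])
```

With boto3 installed and AWS credentials configured, the call might look like `load_warehouse_credentials(boto3.client("secretsmanager"), "analytics/warehouse")`, where the secret name is invented for this example. Rotation, IAM permissions, and auditing still have to be set up separately.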

Compliance is no different. Ensuring your solution meets enterprise governance requirements takes time, research, and careful implementation. It’s a process of iteration and refinement, and every hour spent here is another hidden cost. If it is not done correctly, it also puts security at risk.

Scaling open-source tools is where things often get complicated. Beyond everything already mentioned, your team will need to ensure the solution can handle growth. For many organizations, this means deploying on Kubernetes. But with Kubernetes come steep learning curves and operational challenges. Making sure you always have a knowledgeable engineer available to handle unexpected issues and downtime can become a challenge. Extended downtime is another hidden cost, because business users who have come to rely on your insights are directly affected.

A managed solution for Airflow and dbt can solve many of the problems that come with building your own solution from open-source tools, offering hassle-free maintenance, an improved UI/UX, and integrated functionality. Let’s take a look at the pros.

Using a solution like MWAA, teams can leverage managed Airflow and eliminate most infrastructure worries. However, additional configuration and development will be needed to use it with dbt and to troubleshoot infrastructure issues such as containers running out of memory.

For data teams, the allure of a custom-built solution often lies in its promise of complete control and customization. However, building this requires significant time, expertise, and ongoing maintenance. Datacoves bridges the gap between custom-built flexibility and the simplicity of managed services, offering the best of both worlds.

With Datacoves, teams can leverage managed Airflow and pre-configured dbt environments to eliminate the operational burden of infrastructure setup and maintenance. This allows data teams to focus on what truly matters—delivering insights and driving business decisions—without being bogged down by tool management.

Unlike other managed solutions for dbt or Airflow, which often compromise on flexibility for the sake of simplicity, Datacoves retains the adaptability that custom builds are known for. By combining this flexibility with the ease and efficiency of managed services, Datacoves empowers teams to accelerate their analytics workflows while ensuring scalability and control.

Datacoves doesn’t just run the open-source solutions; through real-world implementations, the platform has been molded to handle enterprise complexity while simplifying project onboarding. With Datacoves, teams don’t have to compromise on features like Datacoves-Mesh (aka dbt-mesh), column-level lineage, GenAI, Semantic Layer, etc. Best of all, the company’s goal is to make you successful and remove hosting complexity without introducing vendor lock-in. What Datacoves does, you can do yourself if given enough time, experience, and money. Finally, for security-conscious organizations, Datacoves is the only solution on the market that can be deployed in your private cloud with white-glove enterprise support.

Datacoves isn’t just a platform—it’s a partnership designed to help your data team unlock their potential. With infrastructure taken care of, your team can focus on what they do best: generating actionable insights and maximizing your ROI.

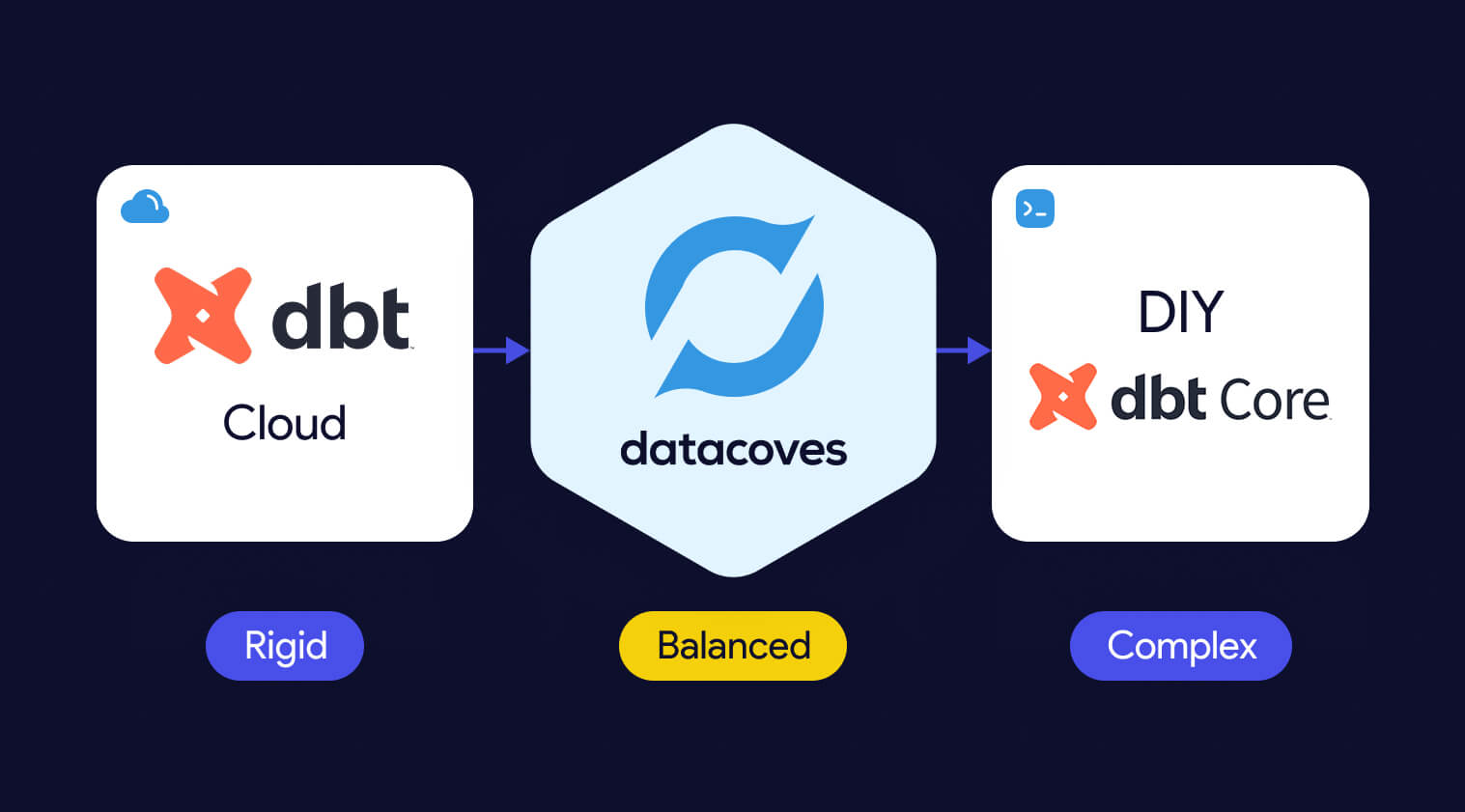

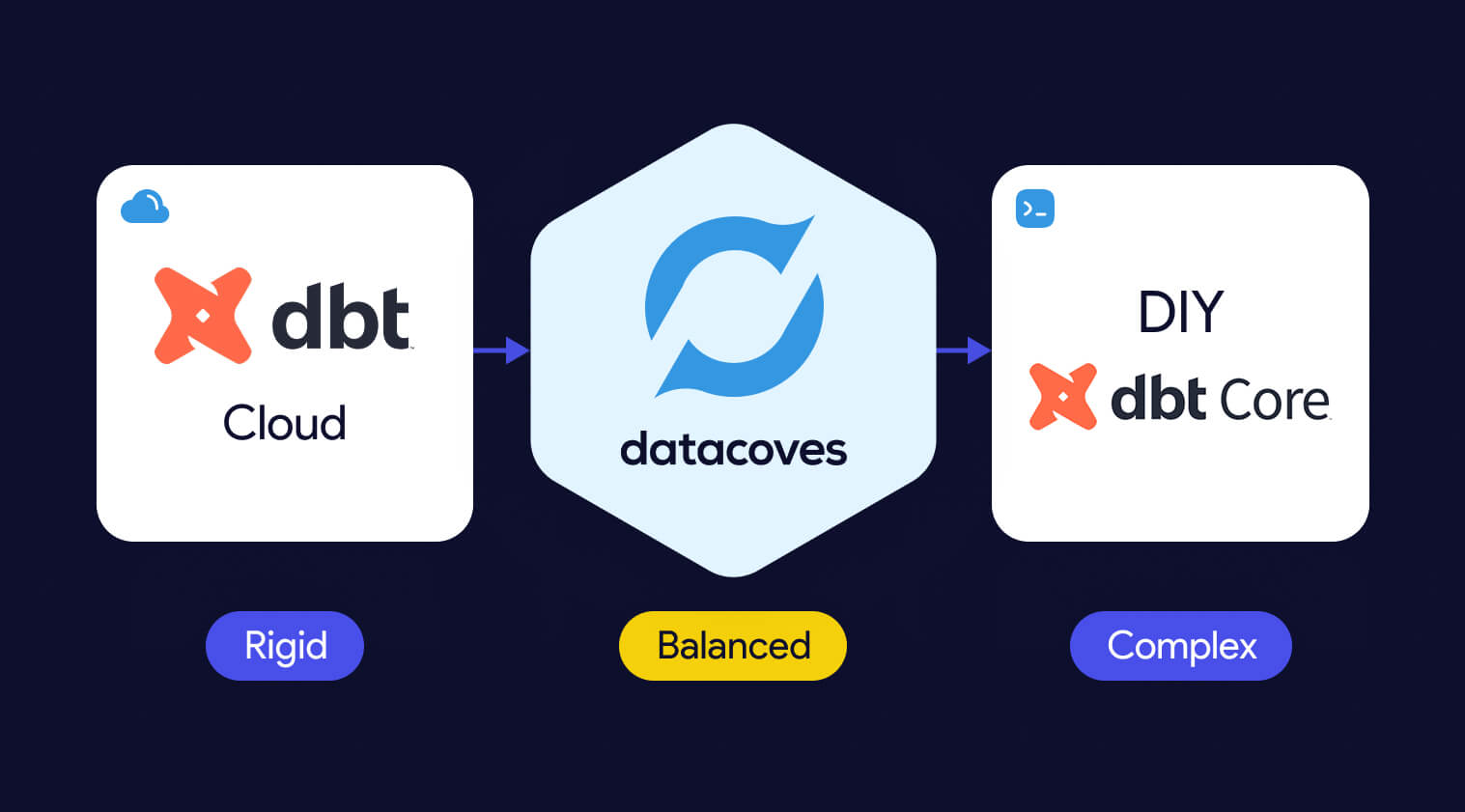

The build vs. buy debate has long been a challenge for data teams, with building offering flexibility at the cost of complexity, and buying sacrificing flexibility for simplicity. As discussed earlier in the article, solutions like dbt and Airflow are powerful, but managing them in-house requires significant time, resources, and expertise. On the other hand, managed offerings like dbt Cloud and MWAA simplify operations but often limit customization and control.

Datacoves bridges this gap, providing a managed platform that delivers the flexibility and control of a custom build without the operational headaches. By eliminating the need to manage infrastructure, scaling, and security, Datacoves enables data teams to focus on what matters most: delivering actionable insights and driving business outcomes.

As highlighted in Fundamentals of Data Engineering, data teams should prioritize extracting value from data rather than managing the tools that support them. Datacoves embodies this principle, making the argument to build obsolete. Why spend weeks—or even months—building when you can have the customization and adaptability of a build with the ease of a buy? Datacoves is not just a solution; it’s a rethinking of how modern data teams operate, helping you achieve your goals faster, with fewer trade-offs.

The top dbt alternatives include Datacoves, SQLMesh, Bruin Data, Dataform, and visual ETL tools such as Alteryx, Matillion, and Informatica. Code-first engines offer stronger rigor, testing, and CI/CD, while GUI platforms emphasize ease of use and rapid prototyping. Teams choose these alternatives when they need more security, governance, or flexibility than dbt Core or dbt Cloud provide.

Teams explore dbt alternatives when they need stronger governance, private deployments, or support for Python and code-first workflows that go beyond SQL. Many also prefer GUI-based ETL tools for faster onboarding. Recent market consolidation, including Fivetran acquiring SQLMesh and merging with dbt Labs, has increased concerns about vendor lock-in, which makes tool neutrality and platform flexibility more important than ever.

Teams look for dbt alternatives when they need stronger orchestration, consistent development environments, Python support, or private cloud deployment options that dbt Cloud does not provide.

Organizations evaluating dbt alternatives typically compare tools across three categories. Each category reflects a different approach to data transformation, development preferences, and organizational maturity.

Organizations consider alternatives to dbt Cloud when they need more flexibility, stronger security, or support for development workflows that extend beyond dbt. Teams comparing platform options often begin by evaluating the differences between dbt Cloud vs dbt Core.

Running enterprise-scale ELT pipelines often requires a full orchestration layer, consistent development environments, and private deployment options that dbt Cloud does not provide. Costs can also increase at scale (see our breakdown of dbt pricing considerations), and some organizations prefer to avoid features that are not open source to reduce long-term vendor lock-in.

This category includes platforms that deliver the benefits of dbt Cloud while providing more control, extensibility, and alignment with enterprise data platform requirements.

Datacoves provides a secure, flexible platform that supports dbt, SQLMesh, and Bruin in a unified environment with private cloud or VPC deployment.

Datacoves is an enterprise data platform that serves as a secure, flexible alternative to dbt Cloud. It supports dbt Core, SQLMesh, and Bruin inside a unified development and orchestration environment, and it can be deployed in your private cloud or VPC for full control over data access and governance.

Benefits

Flexibility and Customization:

Datacoves provides a customizable in-browser VS Code IDE, Git workflows, and support for Python libraries and VS Code extensions. Teams can choose the transformation engine that fits their needs without being locked into a single vendor.

Handling Enterprise Complexity:

Datacoves includes managed Airflow for end-to-end orchestration, making it easy to run dbt and Airflow together without maintaining your own infrastructure. It standardizes development environments, manages secrets, and supports multi-team and multi-project workflows without platform drift.

Cost Efficiency:

Datacoves reduces operational overhead by eliminating the need to maintain separate systems for orchestration, environments, CI, logging, and deployment. Its pricing model is predictable and designed for enterprise scalability.

Data Security and Compliance:

Datacoves can be deployed fully inside your VPC or private cloud. This gives organizations complete control over identity, access, logging, network boundaries, and compliance with industry and internal standards.

Reduced Vendor Lock-In:

Datacoves supports dbt, SQLMesh, and Bruin Data, giving teams long-term optionality. This avoids being locked into a single transformation engine or vendor ecosystem.

Running dbt Core yourself is a flexible option that gives teams full control over how dbt executes. It is also the most resource-intensive approach. Teams choosing DIY dbt Core must manage orchestration, scheduling, CI, secrets, environment consistency, and long-term platform maintenance on their own.

Benefits

Full Control:

Teams can configure dbt Core exactly as they want and integrate it with internal tools or custom workflows.

Cost Flexibility:

There are no dbt Cloud platform fees, but total cost of ownership often increases as the system grows.

Considerations

High Maintenance Overhead:

Teams must maintain Airflow or another orchestrator, build CI pipelines, manage secrets, and keep development environments consistent across users.

Requires Platform Engineering Skills:

DIY dbt Core works best for teams with strong Kubernetes, CI, Python, and DevOps expertise. Without this expertise, the environment becomes fragile over time.

Slow to Scale:

As more engineers join the team, keeping dbt environments aligned becomes challenging. Onboarding, upgrades, and platform drift create operational friction.

Security and Compliance Responsibility:

Identity, permissions, logging, and network controls must be designed and maintained internally, which can be significant for regulated organizations.

Teams that prefer code-first tools often look for dbt alternatives that provide strong SQL modeling, Python support, and seamless integration with CI/CD workflows and automated testing. These are part of a broader set of data transformation tools. Code-based ETL tools give developers greater control over transformations, environments, and orchestration patterns than GUI platforms. Below are four code-first contenders that organizations should evaluate.

Code-first dbt alternatives like SQLMesh, Bruin Data, and Dataform provide stronger CI/CD integration, automated testing, and more control over complex transformation workflows.

SQLMesh is an open-source framework for SQL and Python-based data transformations. It provides strong visibility into how changes impact downstream models and uses virtual data environments to preview changes before they reach production.

Benefits

Efficient Development Environments:

Virtual environments reduce unnecessary recomputation and speed up iteration.

Considerations

Part of the Fivetran Ecosystem:

SQLMesh was acquired by Fivetran, which may influence its future roadmap and level of independence.

Dataform is a SQL-based transformation framework built specifically for BigQuery. It enables teams to create table definitions, manage dependencies, document models, and configure data quality tests inside the Google Cloud ecosystem. It also provides version control and integrates with GitHub and GitLab.

Benefits

Centralized BigQuery Development:

Dataform keeps all modeling and testing within BigQuery, reducing context switching and making it easier for teams to collaborate using familiar SQL workflows.

Considerations

Focused Only on the GCP Ecosystem:

Because Dataform is geared toward BigQuery, it may not be suitable for organizations that use multiple cloud data warehouses.

AWS Glue is a serverless data integration service that supports Python-based ETL and transformation workflows. It works well for organizations operating primarily in AWS and provides native integration with services like S3, Lambda, and Athena.

Benefits

Python-First ETL in AWS:

Glue supports Python scripts and PySpark jobs, making it a good fit for engineering teams already invested in the AWS ecosystem.

Considerations

Requires Engineering Expertise:

Glue can be complex to configure and maintain, and its Python-centric approach may not be ideal for SQL-first analytics teams.

Bruin is a modern SQL-based data modeling framework designed to simplify development, testing, and environment-aware deployments. It offers a familiar SQL developer experience while adding guardrails and automation to help teams manage complex transformation logic.

Benefits

Modern SQL Modeling Experience:

Bruin provides a clean SQL-first workflow with strong dependency management and testing.

Considerations

Growing Ecosystem:

Bruin is newer than dbt and has a smaller community and fewer third-party integrations.

While code-based transformation tools provide the most flexibility and long-term maintainability, some organizations prefer graphical user interface (GUI) tools. These platforms use visual, drag-and-drop components to build data integration and transformation workflows. Many of these platforms fall into the broader category of no-code ETL tools. GUI tools can accelerate onboarding for teams less comfortable with code editors and may simplify development in the short term. Below are several GUI-based options that organizations often consider as dbt alternatives.

GUI-based dbt alternatives such as Matillion, Informatica, and Alteryx use drag-and-drop interfaces that simplify development and accelerate onboarding for mixed-skill teams.

Matillion is a cloud-based data integration platform that enables teams to design ETL and transformation workflows through a visual, drag-and-drop interface. It is built for ease of use and supports major cloud data warehouses such as Amazon Redshift, Google BigQuery, and Snowflake.

Benefits

User-Friendly Visual Development:

Matillion simplifies pipeline building with a graphical interface, making it accessible for users who prefer low-code or no-code tooling.

Considerations

Limited Flexibility for Complex SQL Modeling:

Matillion’s visual approach can become restrictive for advanced transformation logic or engineering workflows that require version control and modular SQL development.

Informatica is an enterprise data integration platform with extensive ETL capabilities, hundreds of connectors, data quality tooling, metadata-driven workflows, and advanced security features. It is built for large and diverse data environments.

Benefits

Enterprise-Scale Data Management:

Informatica supports complex data integration, governance, and quality requirements, making it suitable for organizations with large data volumes and strict compliance needs.

Considerations

High Complexity and Cost:

Informatica’s power comes with a steep learning curve, and its licensing and operational costs can be significant compared to lighter-weight transformation tools.

Alteryx is a visual analytics and data preparation platform that combines data blending, predictive modeling, and spatial analysis in a single GUI-based environment. It is designed for analysts who want to build workflows without writing code and can be deployed on-premises or in the cloud.

Benefits

Powerful GUI Analytics Capabilities:

Alteryx allows users to prepare data, perform advanced analytics, and generate insights in one tool, enabling teams without strong coding skills to automate complex workflows.

Considerations

High Cost and Limited SQL Modeling Flexibility:

Alteryx is one of the more expensive platforms in this category and is less suited for SQL-first transformation teams who need modular modeling and version control.

Azure Data Factory (ADF) is a fully managed, serverless data integration service that provides a visual interface for building ETL and ELT pipelines. It integrates natively with Azure storage, compute, and analytics services, allowing teams to orchestrate and monitor pipelines without writing code.

Benefits

Strong Integration for Microsoft-Centric Teams:

ADF connects seamlessly with other Azure services and supports a pay-as-you-go model, making it ideal for organizations already invested in the Microsoft ecosystem.

Considerations

Limited Transformation Flexibility:

ADF excels at data movement and orchestration but offers limited capabilities for complex SQL modeling, making it less suitable as a primary transformation engine.

Talend provides an end-to-end data management platform with support for batch and real-time data integration, data quality, governance, and metadata management. Talend Data Fabric combines these capabilities into a single low-code environment that can run in cloud, hybrid, or on-premises deployments.

Benefits

Comprehensive Data Quality and Governance:

Talend includes built-in tools for data cleansing, validation, and stewardship, helping organizations improve the reliability of their data assets.

Considerations

Broad Platform, Higher Operational Complexity:

Talend’s wide feature set can introduce complexity, and teams may need dedicated expertise to manage the platform effectively.

SQL Server Integration Services is part of the Microsoft SQL Server ecosystem and provides data integration and transformation workflows. It supports extracting, transforming, and loading data from a wide range of sources, and offers graphical tools and wizards for designing ETL pipelines.

Benefits

Strong Fit for SQL Server-Centric Teams:

SSIS integrates deeply with SQL Server and other Microsoft products, making it a natural choice for organizations with a Microsoft-first architecture.

Considerations

Not Designed for Modern Cloud Data Warehouses:

SSIS is optimized for on-premises SQL Server environments and is less suitable for cloud-native architectures or modern ELT workflows.

Recent consolidation, including Fivetran acquiring SQLMesh and merging with dbt Labs, has increased concerns about vendor lock-in and pushed organizations to evaluate more flexible transformation platforms.

Organizations explore dbt alternatives when dbt no longer meets their architectural, security, or workflow needs. As teams scale, they often require stronger orchestration, consistent development environments, mixed SQL and Python workflows, and private deployment options that dbt Cloud does not provide.

Some teams prefer code-first engines for deeper CI/CD integration, automated testing, and strong guardrails across developers. Others choose GUI-based tools for faster onboarding or broader integration capabilities. Recent market consolidation, including Fivetran acquiring SQLMesh and merging with dbt Labs, has also increased concerns about vendor lock-in.

These factors lead many organizations to evaluate tools that better align with their governance requirements, engineering preferences, and long-term strategy.

DIY dbt Core offers full control but requires significant engineering work to manage orchestration, CI/CD, security, and long-term platform maintenance.

Running dbt Core yourself can seem attractive because it offers full control and avoids platform subscription costs. However, building a stable, secure, and scalable dbt environment requires significantly more than executing dbt build on a server. It involves managing orchestration and CI/CD, keeping development environments consistent, and handling long-term platform maintenance, all of which require mature DataOps practices.
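For context, the part that is simple looks roughly like the sketch below: assembling and running a dbt build command from Python. The helper names, the project path, and the `prod` target are hypothetical; `--project-dir`, `--target`, and `--select` are standard dbt CLI flags. Orchestration, CI/CD, environment consistency, and maintenance all sit outside this snippet.

```python
import subprocess
from typing import List, Optional


def build_dbt_command(
    project_dir: str,
    target: str,
    select: Optional[str] = None,
) -> List[str]:
    """Assemble a `dbt build` invocation as an argument list."""
    cmd = ["dbt", "build", "--project-dir", project_dir, "--target", target]
    if select:
        # Restrict the run to a subset of models, e.g. "tag:daily".
        cmd += ["--select", select]
    return cmd


def run_dbt(cmd: List[str]) -> int:
    """Run the command and return its exit code (non-zero means failure).

    Requires the dbt CLI to be installed and on PATH.
    """
    return subprocess.run(cmd, check=False).returncode
```

A call such as `run_dbt(build_dbt_command("/opt/analytics", "prod", select="tag:daily"))` would execute one build. Scheduling it, retrying it, alerting on failure, and supplying credentials safely are where the platform work begins.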

The true question for most organizations is not whether they can run dbt Core themselves, but whether it is the best use of engineering time. This is essentially a question of whether to build vs buy your data platform. DIY dbt platforms often start simple and gradually accumulate technical debt as teams grow, pipelines expand, and governance requirements increase.

For many organizations, DIY works in the early stages but becomes difficult to sustain as the platform matures.

The right dbt alternative depends on your team’s skills, governance requirements, pipeline complexity, and long-term data platform strategy.

Selecting the right dbt alternative depends on your team’s skills, security requirements, and long-term data platform strategy. Each category of tools solves different problems, so it is important to evaluate your priorities before committing to a solution.

If these are priorities, a platform with secure deployment options or multi-engine support may be a better fit than dbt Cloud.

Recent consolidation in the ecosystem has raised concerns about vendor dependency. Organizations that want long-term flexibility often look for:

Consider platform fees, engineering maintenance, onboarding time, and the cost of additional supporting tools such as orchestrators, IDEs, and environment management.

dbt remains a strong choice for SQL-based transformations, but it is not the only option. As organizations scale, they often need stronger orchestration, consistent development environments, Python support, and private deployment capabilities that dbt Cloud or DIY dbt Core may not provide. Evaluating alternatives helps ensure that your transformation layer aligns with your long-term platform and governance strategy.

Code-first tools like SQLMesh, Bruin Data, and Dataform offer strong engineering workflows, while GUI-based tools such as Matillion, Informatica, and Alteryx support faster onboarding for mixed-skill teams. The right choice depends on the complexity of your pipelines, your team’s technical profile, and the level of security and control your organization requires.

Datacoves provides a flexible, secure alternative that supports dbt, SQLMesh, and Bruin in a unified environment. With private cloud or VPC deployment, managed Airflow, and a standardized development experience, Datacoves helps teams avoid vendor lock-in while gaining an enterprise-ready platform for analytics engineering.

Selecting the right dbt alternative is ultimately about aligning your transformation approach with your data architecture, governance needs, and long-term strategy. Taking the time to assess these factors will help ensure your platform remains scalable, secure, and flexible for your future needs.

The reason companies fail at leveraging analytics stems from the fact that people tend to focus on the destination instead of the journey that will lead to the solutions that will have the most impact on the business. Time and time again, I see people focus on the so-called shiny objects, like new tools, new techniques, or even new people, that appear to be the silver bullet everyone needs. The truth is, if you go back to the first principles and start with true alignment, good data processes, and user-centric experiences, project success and satisfaction are achievable.

Every project I have been a part of started with a sense of optimism and excitement. The honeymoon phase was great. Everyone was united; we had gotten the funding, selected vendor partners, and purchased whatever technology was part of the solution. We all spoke the same language, everyone got to work, management started getting progress updates, and everyone thought we were off to a great start.

It wasn't until real decisions needed to be made that we realized the honeymoon was over. In every single instance, an excessive amount of time was spent in meetings arguing and reaching some level of consensus until the next decision. This happened because we didn't really spend the time to get on the same page. People assumed that we were aligned because, at a high level, we were all talking about the key points of the given initiative: digital transformation, self-service analytics, customer mastering, data lakes, etc.

But we were not really thinking the same things. Everyone had different backgrounds and had expertise on different parts of the solution: regulatory requirements, technology limitations, end-user needs, etc. There were also things no one knew at the start, and we didn't have a north star to guide these decisions. We all appeared to be saying the same things, but we were thinking very differently.

I have seen the pressure to get started on a project and show progress lead to delays and ultimate dissatisfaction with the end result. On projects where we have spent a couple of weeks getting aligned using a structured approach to product discovery, we ended up with better estimates and better overall satisfaction.

In any analytics-related project, the same things apply: the team needs to understand the business objectives, the current state (so the new process isn't worse), and the risks, and it needs to prioritize the high-level features. Most importantly, the team needs to align on what's NOT in the new solution and on the prioritizing criteria, such as quality, feature completeness, or usability, that will be used when making decisions. Agile does not mean no planning.

Trust starts by listening to people and creating a shared vision that sets the right expectations from day one. You can create an achievable plan if everyone knows what you are trying to achieve.

Let's face it, your data processes get no love. This is usually because they are seen as "too technical." Your users don't care about databases, schemas, tables, or columns, let alone the process of converting raw facts into business-ready insights. It's easy for management to see a fancy dashboard and get excited about the possibility of machine learning, but talk about data and people's eyes glaze over.

It kind of makes sense; most people don't understand how the power grid works. We all take it for granted. We flip a switch, the light turns on, and we move forward. No one cares about electricity until something goes wrong. In a lot of organizations, things go wrong with data more often than you would think. Sometimes people notice right away, but other times failures are silent. When something does go wrong, everyone goes into firefighting mode. Meetings are held, issues are discovered, and patches to "prevent" the failure are put in place. The time to think about the inevitable is not once things break; you need to anticipate failure and design for resilience.
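One way to make a silent failure loud is a freshness check that runs after every load. The sketch below is a minimal Python illustration, not a prescription; the `assert_fresh` helper, the `StaleDataError` name, and whatever threshold you pass in are all assumptions made for the example.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


class StaleDataError(Exception):
    """Raised when a table has not been refreshed recently enough."""


def assert_fresh(
    last_loaded_at: datetime,
    max_age: timedelta,
    now: Optional[datetime] = None,
) -> timedelta:
    """Return the data's age, or raise StaleDataError if it exceeds max_age.

    Assumes timezone-aware datetimes; mixing naive and aware values
    raises a TypeError.
    """
    # Allow the caller to pass `now` so the check is easy to test.
    current = now if now is not None else datetime.now(timezone.utc)
    age = current - last_loaded_at
    if age > max_age:
        raise StaleDataError(f"data is {age} old; allowed maximum is {max_age}")
    return age
```

Raising an exception, rather than logging and moving on, means the pipeline stops and someone is told before a business user finds the stale numbers on a dashboard.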

The issue here is that we don't think of the process of going from raw data to insights as a single system. It is all interconnected and needs to be treated as such. When it comes to analytics, sometimes it feels like companies want to build a mansion on a foundation atop quicksand. Initially, all seems fine, and everyone is in the house decorating until someone notices that a corner of the house is sinking. Everyone goes outside, props up the corner, and they happily go back inside to decide what color to paint the next room.

You can't build a house on quicksand; you need to set up repeatable processes with quality built in from the start. If we want collaboration, we have to build it in. If you want to be able to do impact analysis, guess what? You can't retrofit that later if you didn't do it from the start. Having documented analytics is not magic; you need this to be part of the culture and part of the process. The good thing is that many smart people have faced the same issues, and there are examples we can see where people are doing things right.

If you want users to trust data analytics, they need to trust the data, and they need to believe in a solid process that is built on a solid foundation.

When you try to please everyone, you please no one, and in many companies, technical teams try to do everything they are asked. They jump through hoops to deliver projects, but it is very common for people to be dissatisfied with the end results. I have also seen new tools used like old ones. Teams sometimes take the approach that the new process is just affecting some part of the current broken process, so they only incrementally change it. I have seen Tableau dashboards that are essentially Excel on the web with some automation.

Instead of asking users what they want, we need to understand what they need and why. What are they trying to accomplish? What's wrong with how they do things today? Is the new process / tool you are putting in place better than what they already have? Sometimes it makes more sense to leave a current process as-is until other parts of the system are improved.

When you understand the real need for an omni-channel dashboard or a sales dashboard, you design the solution to help you achieve that goal. If your users need to quickly get in and out of the tool, you can find ways to reduce the number of clicks it takes them to get there. You simplify access, and you surface the most important information first. You build the solution around them, and more importantly, you are able to justify your decisions and why certain things need to be de-prioritized. When users see that you empathize with them, they trust you. They don't push back on every choice because they know you have their best interests at heart; you have demonstrated time and again that you do care.

Getting decision-makers to trust data analytics is no different than getting anyone to trust anything. You need to start with alignment and set the right expectations; you need to build end-to-end processes that are robust; and you need to deliver the tools that facilitate the job users do.

Any experienced data engineer will tell you that efficiency and resource optimization are always top priorities. One powerful feature that can significantly optimize your dbt CI/CD workflow is dbt Slim CI. However, despite its benefits, some limitations have persisted. Fortunately, the recent addition of the --empty flag in dbt 1.8 addresses these issues. In this article, we will share a GitHub Actions workflow and demonstrate how the new --empty flag can save you time and resources.

dbt Slim CI is designed to make your continuous integration (CI) process more efficient by running only the models that have been changed and their dependencies, rather than running all models during every CI build. In large projects, this feature can lead to significant savings in both compute resources and time.
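To make the idea concrete, here is a minimal sketch of "changed models plus their downstream dependencies." It is a simplification: it assumes each model is represented by a single checksum and that the dependency graph is a plain parent-to-children mapping. The model names and the `modified_with_downstream` helper are hypothetical, and dbt's real state comparison considers more than file checksums.

```python
from collections import deque

def modified_with_downstream(prod_nodes, branch_nodes, child_map):
    """Return models whose checksum differs from production, plus all descendants."""
    # A model counts as modified if it is new or its checksum changed.
    changed = {
        name for name, checksum in branch_nodes.items()
        if prod_nodes.get(name) != checksum
    }
    selected = set(changed)
    queue = deque(changed)
    # Breadth-first walk to pick up everything downstream of a changed model.
    while queue:
        node = queue.popleft()
        for child in child_map.get(node, []):
            if child not in selected:
                selected.add(child)
                queue.append(child)
    return selected

# Hypothetical project: only stg_orders changed on the feature branch.
prod = {"stg_orders": "a1", "stg_customers": "b1", "fct_orders": "c1", "dim_customers": "d1"}
branch = {"stg_orders": "a2", "stg_customers": "b1", "fct_orders": "c1", "dim_customers": "d1"}
children = {
    "stg_orders": ["fct_orders"],
    "stg_customers": ["dim_customers", "fct_orders"],
}

to_build = modified_with_downstream(prod, branch, children)
print(sorted(to_build))  # ['fct_orders', 'stg_orders']
```

In this toy project, two of the four models are rebuilt; the untouched `stg_customers` and `dim_customers` are skipped, which is where the compute savings come from.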

dbt Slim CI is implemented efficiently using these flags:

--select state:modified: The state:modified selector picks the models whose "state" has changed (been modified) relative to a previous run so that they are included in the run/build. Adding a trailing + (state:modified+) tells dbt to run the modified models and their downstream dependencies.

--state <path to production manifest>: The --state flag specifies the directory where the artifacts from a previous dbt run are stored, i.e., the production dbt manifest. By comparing the current branch's manifest with the production manifest, dbt can identify which models have been modified.

--defer: The --defer flag tells dbt to pull upstream models that have not changed from a different environment (database). Why rebuild something that exists somewhere else? For this to work, dbt will need access to the dbt production manifest.

There is one additional flag that is often included in a Slim CI command.

--fail-fast: The --fail-fast flag is an example of an optimization flag that is not essential to a barebones Slim CI but can provide powerful cost savings. This flag stops the build as soon as an error is encountered instead of allowing dbt to continue building downstream models, therefore reducing wasted builds. To learn more about these arguments, have a look at our dbt cheatsheet.
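As an illustration, the flags described above can be combined into a single `dbt build` invocation. The sketch below only assembles and prints the command; the `slim_ci_command` helper and the `prod-artifacts` directory name are assumptions for the example, not part of any published workflow.

```python
import shlex

def slim_ci_command(state_dir, fail_fast=True):
    """Assemble a Slim CI build command from the flags discussed above."""
    args = [
        "dbt", "build",
        "--select", "state:modified+",  # modified models plus downstream dependencies
        "--defer",                       # resolve unchanged upstream models elsewhere
        "--state", state_dir,            # directory holding the production manifest
    ]
    if fail_fast:
        args.append("--fail-fast")       # stop at the first error
    return args

cmd = slim_ci_command("prod-artifacts")  # hypothetical artifact directory
print(shlex.join(cmd))
# dbt build --select state:modified+ --defer --state prod-artifacts --fail-fast
```

Building the command as a list rather than a single string keeps each argument separate, which is convenient if it is later passed to `subprocess.run`.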

The sample GitHub Actions workflow below is executed when a pull request is opened, i.e., you have a feature branch that you want to merge into main.

Checkout Branch: The workflow begins by checking out the branch associated with the pull request to ensure that the latest code is being used.

Set Secure Directory: This step ensures the repository directory is marked as safe, preventing potential issues with Git operations.

List of Files Changed: This command lists the files changed between the PR branch and the base branch, which provides context for the changes and helps with debugging.

Install dbt Packages: This step installs all required dbt packages, ensuring the environment is set up correctly for the dbt commands that follow.

Create PR Database: This step creates a dedicated database for the PR, isolating the changes and tests from the production environment.

Get Production Manifest: Retrieves the production manifest file, which will be used for deferred runs and governance checks in the following steps.

Run dbt Build in Slim Mode or Run dbt Build Full Run: If a manifest is present in production, dbt will be run in slim mode with deferred models. This build includes only the modified models and their dependencies. If no manifest is present in production, we will do a full run.

Grant Access to PR Database: Grants the necessary access to the new PR database for end user review.

Generate Docs Combining Production and Branch Catalog: If a dbt test is added to a YAML file, the model will not be run, meaning it will not be present in the PR database. However, governance checks (dbt-checkpoint) will need the model in the database for some checks and if not present this will cause a failure. To solve this, the generate docs step is added to merge the catalog.json from the current branch with the production catalog.json.

Run Governance Checks: Executes governance checks such as SQLFluff and dbt-checkpoint.
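The slim-or-full decision in the build step can be sketched as a simple check for the production manifest. This is a minimal illustration under stated assumptions: the `choose_build_command` helper is hypothetical, and it assumes the "Get Production Manifest" step writes `manifest.json` into a local directory when one exists.

```python
import tempfile
from pathlib import Path

SLIM_FLAGS = ["--select", "state:modified+", "--defer"]

def choose_build_command(state_dir):
    """Return a slim build if a production manifest was retrieved, else a full run."""
    manifest = Path(state_dir) / "manifest.json"
    if manifest.is_file():
        return ["dbt", "build", "--fail-fast", *SLIM_FLAGS, "--state", str(state_dir)]
    # No production manifest yet (for example, the very first deployment).
    return ["dbt", "build", "--fail-fast"]

# Demonstrate both branches using a temporary directory as the artifact folder.
with tempfile.TemporaryDirectory() as tmp:
    full_run = choose_build_command(tmp)
    (Path(tmp) / "manifest.json").write_text("{}")
    slim_run = choose_build_command(tmp)

print("no manifest:", " ".join(full_run))
print("manifest found: slim mode is", "--defer" in slim_run)
```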

As mentioned at the beginning of the article, there is a limitation to this setup. In the existing workflow, governance checks need to run after the dbt build step. This is because dbt-checkpoint relies on the manifest.json and catalog.json. However, if these governance checks fail, the dbt build step will need to run again once the governance issues are fixed. As shown in the diagram below, after running our dbt build, we proceed with governance checks. If these checks fail, we need to resolve the issue and re-trigger the pipeline, leading to another dbt build. This cycle can lead to unnecessary model builds even when leveraging dbt Slim CI.

The solution to this problem is the --empty flag in dbt 1.8. This flag allows dbt to perform schema-only dry runs without processing large datasets. It's like building the wooden frame of a house—it sets up the structure, including the metadata needed for governance checks, without filling it with data. The framework is there, but the data itself is left out, enabling you to perform governance checks without completing an actual build.

Let’s see how we can rework our GitHub Actions workflow:

Checkout Branch: The workflow begins by checking out the branch associated with the pull request to ensure that the latest code is being used.

Set Secure Directory: This step ensures the repository directory is marked as safe, preventing potential issues with Git operations.

List of Files Changed: This step lists the files changed between the PR branch and the base branch, which provides context for the changes and helps with debugging.

Install dbt Packages: This step installs all required dbt packages, ensuring the environment is set up correctly for the dbt commands that follow.

Create PR Database: This command creates a dedicated database for the PR, isolating the changes and tests from the production environment.

Get Production Manifest: Retrieves the production manifest file, which will be used for deferred runs and governance checks in the following steps.

*NEW* Governance Run of dbt (Slim or Full) with EMPTY Models: If there is a manifest in production, this step runs dbt in slim mode with the --empty flag; otherwise it does a full run with the --empty flag. The models will be built in the PR database with no data inside, and we can now use the catalog.json to run our governance checks. Since the models are empty and we have everything we need to run our checks, we have saved on compute costs as well as run time.

Generate Docs Combining Production and Branch Catalog: If a dbt test is added to a YAML file, the model will not be run, meaning it will not be present in the PR database. However, governance checks (dbt-checkpoint) will need the model in the database for some checks and if not present this will cause a failure. To solve this, the generate docs step is added to merge the catalog.json from the current branch with the production catalog.json.

Run Governance Checks: Executes governance checks such as SQLFluff and dbt-checkpoint.

Run dbt Build: Runs dbt build using either slim mode or full run after passing governance checks.

Grant Access to PR Database: Grants the necessary access to the new PR database for end user review.

By leveraging the dbt --empty flag, we can materialize models in the PR database without the computational overhead, as the actual data is left out. We can then use the metadata that was generated during the empty build. If any checks fail, we can repeat the process without worrying about wasting computational resources on an actual build. The cycle still exists, but we have moved our real build outside of this cycle and replaced it with an empty or fake build. Once all governance checks have passed, we can proceed with the real dbt build of the dbt models as seen in the diagram below.
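The reordered pipeline can be sketched as a list of steps that stops at the first failure, so the real build only runs once governance has passed. This is an illustration rather than the actual workflow: the `pr_pipeline` helper and the injected `run` callable are hypothetical, the --defer and --state flags are omitted for brevity, and `pre-commit run --all-files` is assumed as the way the governance hooks are invoked.

```python
def pr_pipeline(run, select=("--select", "state:modified+")):
    """Run the steps in order and stop at the first failure.

    `run` is any callable that takes a command list and returns True on success.
    """
    steps = [
        ("empty build", ["dbt", "build", "--empty", *select]),
        ("generate docs", ["dbt", "docs", "generate"]),
        ("governance checks", ["pre-commit", "run", "--all-files"]),  # assumed invocation
        ("real build", ["dbt", "build", *select]),
    ]
    executed = []
    for name, cmd in steps:
        executed.append(name)
        if not run(cmd):
            break
    return executed

# Simulated runners so the sketch can be executed without a dbt project.
def governance_fails(cmd):
    return cmd[0] != "pre-commit"

def everything_passes(cmd):
    return True

failed_attempt = pr_pipeline(governance_fails)
clean_attempt = pr_pipeline(everything_passes)
print(failed_attempt)  # the real build is never reached
print(clean_attempt)   # all four steps run
```

When governance fails, only the inexpensive empty build has been paid for; the real build is attempted once, on the passing run.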

dbt Slim CI is a powerful addition to the dbt toolkit, offering significant benefits in terms of speed, resource savings, and early error detection. However, we still faced the issue of wasted model builds when governance checks failed. By incorporating dbt 1.8’s --empty flag into your CI/CD workflows, you can reduce wasted model builds to zero, improving the efficiency and reliability of your data engineering processes.

🔗 Watch the video where Noel explains the --empty flag implementation in GitHub Actions:

Jinja templating in dbt offers flexibility and expressiveness that can significantly improve SQL code organization and reusability. There is a learning curve, but this cheat sheet is designed to be a quick reference for data practitioners, helping to streamline the development process and reduce common pitfalls.

Whether you're troubleshooting a tricky macro or just brushing up on syntax, bookmark this page. Trust us, it will come in handy and help you unlock the full potential of Jinja in your dbt projects.

If you find this cheat sheet useful, be sure to check out our Ultimate dbt Jinja Functions Cheat Sheet. It covers the specialized Jinja functions created by dbt, designed to enhance versatility and expedite workflows.

This is the foundational syntax of Jinja, from how to comment to the difference between statements and expressions.

Define and assign variables in different data types such as strings, lists, and dictionaries.

Jinja allows fine-grained control over white spaces in compiled output. Understand how to strategically strip or maintain spaces.

In dbt, conditional structures guide the flow of transformations. Grasp how to integrate these structures seamlessly.

Discover how to iterate over lists and dictionaries. Learn simple loop syntax and how to access loop properties.

These logical and comparison operators come in handy, especially when defining tests or setting up configurations in dbt.

Within dbt, you may need to validate whether a variable is defined or whether a value is odd or even. These Jinja variable tests allow you to validate with ease.

Macros are the backbone of advanced dbt workflows. Review how to craft these reusable code snippets and also how to enforce data quality with tests.

Fine-tune your dbt data models with these transformation and formatting utilities.

Please contact us with any errors or suggestions.

Implementing dbt (data build tool) can revolutionize your organization's data maturity. However, if your organization is not ready to take advantage of the benefits of dbt, it might not be the right time to start. Why? Because the success of data initiatives often hinges on aspects beyond the tooling itself.

Many companies rush into implementing dbt without assessing their organization’s maturity, and this leads to poor implementation. A poorly implemented dbt initiative can leave the organization frustrated and overwhelmed with technical debt, with resources wasted along the way. To avoid these pitfalls and ensure your organization is truly ready for dbt, you should complete an assessment of your organization's readiness by answering the questions presented later in this article.

Before diving into the maturity assessment questions, it’s important to understand what data maturity means. Data maturity is the extent to which an organization can effectively leverage its data to drive business value. It encompasses multiple areas, including:

Data-Driven Culture: Fostering an environment where data is integral to decision-making processes.

Data Quality: Ensuring data is accurate, consistent, and reliable.

Data Governance: Implementing policies and procedures to manage data assets.

Data Integration: Seamlessly combining data from various sources for a unified view.

A mature data organization not only ensures data accuracy and consistency but also embeds data-driven decision-making into its core operations.

By leveraging dbt's features, organizations can significantly enhance their data maturity, leading to better decision-making, improved data quality, robust governance, and seamless integration. For example:

Data-Driven Culture: By using dbt, you can improve many aspects that contribute to creating a data-driven culture within an organization. One way is by encouraging business users to be involved in providing or reviewing accurate model and column descriptions, which are embedded in dbt. You can also involve them in defining what data to test with dbt. Better data quality will improve trust in the data, and more trust in the data leads to more frequent use of and reliance on it.

Data Quality and Observability: dbt enables automated testing and validation of data transformations. This ensures data quality by catching issues like schema changes or data anomalies early in the pipeline. As your data quality and data observability needs grow you can assess where you are on the data maturity curve. For example, in a sales data model, we can write tests to ensure there are no negative order quantities and that each order has a valid customer ID. With dbt you can also understand data lineage and this can improve impact and root cause analysis when insights don’t seem quite right.

Data Governance: dbt facilitates version control and documentation for all transformations, enhancing transparency and accountability. Organizations can track changes to data models ensuring compliance with data governance policies.

Data Integration: dbt supports the integration of data from multiple sources by providing a framework for consistent and reusable transformations. This allows for the creation of unified data models that provide a holistic view of business operations.
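To make the sales example from the data quality point concrete, here is a minimal sketch of the two rules it mentions: no negative order quantities, and every order references a valid customer ID. In a dbt project these rules would normally be declared as tests on the model (for instance, dbt's built-in relationships test covers the customer ID rule); the Python below only illustrates the logic, and the `check_orders` helper and sample rows are hypothetical.

```python
def check_orders(orders, valid_customer_ids):
    """Return a list of (order_id, reason) for every rule violation found."""
    failures = []
    for order in orders:
        if order["quantity"] < 0:
            failures.append((order["order_id"], "negative quantity"))
        if order["customer_id"] not in valid_customer_ids:
            failures.append((order["order_id"], "unknown customer"))
    return failures

# Hypothetical sample data: order 2 has a negative quantity, order 3 an unknown customer.
customers = {"C1", "C2"}
orders = [
    {"order_id": 1, "customer_id": "C1", "quantity": 3},
    {"order_id": 2, "customer_id": "C2", "quantity": -1},
    {"order_id": 3, "customer_id": "C9", "quantity": 5},
]

failures = check_orders(orders, customers)
print(failures)  # [(2, 'negative quantity'), (3, 'unknown customer')]
```

Like a dbt test, the check returns the failing rows rather than a bare pass/fail, which makes root cause analysis easier.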

Now that we understand what data maturity is and how dbt can help improve it, you might be ready to jump on the dbt bandwagon. But first, we encourage you to assess your organization’s readiness for dbt. The journey to data maturity involves not only choosing the right tools but also ensuring that your organization is philosophically and operationally prepared to take full advantage of these tools. It is important to recognize that dbt’s approach requires a shift in mindset towards modern data practices, emphasizing transparency, collaboration, and automation.

To determine if your organization is mature enough for dbt or if dbt is the right fit, consider the following assessment questions:

dbt requires a philosophical alignment with its principles, such as ELT (Extract, Load, Transform) instead of the traditional ETL (Extract, Transform, Load) approach. dbt is also based on idempotency meaning that given the same input, you will always get the same output. This is different than traditional ETL that may use incompatible constructs like Auto-Incrementing Primary Keys. If your organization prefers processes that are incompatible with dbt’s methodology, you will face challenges fighting the dbt framework to make it do something it was not intended to do.
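The idempotency point can be illustrated with a small sketch that contrasts a key derived from the data with a key handed out by a sequence. It assumes an MD5 hash over the business key columns, which is similar in spirit to the hashed surrogate keys commonly used in dbt projects; the helper names and sample rows are hypothetical.

```python
import hashlib
from itertools import count

def surrogate_key(*parts):
    """Derive a key from the row's own values, so reruns give the same key."""
    joined = "-".join(str(p) for p in parts)
    return hashlib.md5(joined.encode("utf-8")).hexdigest()

def load_with_hash_keys(rows):
    return {surrogate_key(r["source"], r["order_id"]): r for r in rows}

def load_with_sequence(rows, sequence):
    # Mimics an auto-incrementing primary key: the key depends on load history.
    return {next(sequence): r for r in rows}

rows = [
    {"source": "web", "order_id": 1},
    {"source": "store", "order_id": 1},
]

# Hash keys: same input, same output, regardless of row order or rerun count.
first = load_with_hash_keys(rows)
second = load_with_hash_keys(list(reversed(rows)))
print(first == second)  # True

# Sequence keys: rerunning the same load produces different keys.
seq = count(1)
run1 = load_with_sequence(rows, seq)
run2 = load_with_sequence(rows, seq)
print(sorted(run1), sorted(run2))  # [1, 2] [3, 4]
```

With the sequence, dropping and rebuilding a table changes every key, which breaks anything downstream that stored the old values. That is why auto-incrementing keys fit poorly with dbt's rebuild-from-source approach.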

Simply migrating existing processes and code to dbt without rethinking them won’t leverage dbt’s full potential. Assess whether you’re ready to redesign your workflows to take advantage of dbt’s capabilities such as incremental tables, snapshots, seeds, etc.

dbt offers excellent features for data quality and documentation. Evaluate if your team is prepared to prioritize the utilization of these features to enhance transparency and trust in your data. Tests and model descriptions will not write themselves. When it comes to good descriptions, they shouldn't come from a data engineering team that does not know how the data is used or the best data quality rules to implement. Good descriptions must involve business user review at a minimum.

The goal of dbt is to empower various teams including IT and business users by using the same tooling. Consider if your organization is ready to foster this cross-functional collaboration. When you implement dbt correctly, you will empower anyone who knows SQL to contribute. You can have multiple teams contribute to the insight delivery process and still ensure proper governance and testing before updating production.

Automation is key to achieving efficiency with dbt. Implementing automated deployment, testing, and CI/CD pipelines can significantly improve your workflows. If you aren’t ready to automate, the benefits of dbt may not be fully realized. If you simply put in dbt without thinking about the end-to-end process and the failure points, you will miss opportunities to prevent errors. The spaghetti code you have today didn't happen just because you were not using dbt.

dbt is a framework, not a silver bullet. Merely changing tools without altering your underlying processes will not solve existing issues. This is a huge issue with organizations that have not done the work to create a data-driven culture. Assess if your team is ready to adopt better naming conventions and more structured processes to make data more understandable.

Data immaturity might manifest as a reliance on manual processes, lack of data quality controls, or poor documentation practices. These factors can derail the effective implementation of dbt since dbt thrives in environments where data practices are robust and standardized. In other words, dbt alone will not solve these problems.

Ensuring your organization is ready for the changes that come with implementing dbt is not just best practice, it is essential for success. By thoroughly assessing your readiness, you can avoid technical debt, optimize your workflows, and fully harness the power of dbt. Remember, dbt is a powerful tool, but its effectiveness depends on the readiness of your organization to improve data practices and its alignment with dbt’s philosophy.

Digital transformation is often seen through the lens of technological advancement and process optimization. Most blog posts and guides out there revolve around implementing new software, automating tasks, and digitizing operations. Yet, there's a pivotal element that's frequently overlooked in these discussions, especially when it comes to an enterprise: the mindset and culture within an organization. This article aims to shed light on why this is crucial in achieving true digital transformation. But first, let's investigate what digital transformation is and why it is important.

Digital transformation is the integration of digital technology into all areas of a business, fundamentally changing how it operates and delivers value to customers. It is more than just a technological upgrade; it is a cultural shift that requires organizations to continually challenge the status quo, experiment, and get comfortable with failure. This often means walking away from long-standing business processes that companies were built upon to embrace new ways of working. Most organizations find this part the most challenging.

To achieve digital transformation in an enterprise, 9 times out of 10 there must be a change in company culture. However, changing a company's culture is a formidable task. It is rare to hear statements like, “We need to fundamentally change our problem-solving approach.” This became clear to me through my past experiences, as I noticed that managers often lacked the influence to drive change at the highest organizational levels. Additionally, the pressure to deliver quick results within budget cycles frequently hindered genuine cultural transformation.

During my tenure at various companies, under numerous managers, the consistent message was the need for improvement. However, I have come to understand that organizations, much like fireflies, develop their own rhythms. It is this unique rhythm that sets apart innovative and transformative companies from those that merely follow without achieving similar success. What do I mean by this? Let’s turn to nature for an explanation.

Nature is fascinating, especially when observing how hundreds or thousands of fireflies can synchronize their flashes.

In organizations, a similar phenomenon occurs. People will sync up and follow the status quo, even if it is not what is best for the organization. This dramatically hinders digital transformation because the loudest are not always right and yet they cause others to sync up with them. This will cause innovation to be stopped in its tracks.

In addition to this firefly phenomenon, often action differs from ambition. I recall a staff meeting with a former CIO discussing a future less dependent on Microsoft and more open to non-Windows devices. It was clear that iPhones were going to change the corporate landscape. Despite this, every new tool implemented was still optimized for Internet Explorer. This discrepancy between ambition and action often drives analytical people like me to frustration. To effect change, persistence is key. I have had ideas initially dismissed as “not my job,” only to see one later turn into a patented invention.

This manifests itself in other ways as well; have you ever seen a company advocate for fewer meetings while simultaneously criticizing those who do not include “everyone” in decision-making? I have been in such situations and can attest that decision-making by committee is not inherently superior. In fact, the more people involved in an initiative, the less effective it tends to be. This, I believe, is due to the Dunning-Kruger effect.

The more people you involve in a transformation initiative, the more likely the discussions will deteriorate into bikeshedding. When there is a disconnect between what is said and what is done, people take notice, and it breeds discontent.

Even in my most successful transformation initiatives, the radius of transformation has been limited to my sphere of influence. Sure, some of my tools and processes got global and cross-functional acceptance, but the underlying principles never took hold because they were too radical for the organization at the time. I was not part of the IT organization so the things I did were typically seen as shadow IT. Instead of focusing on what I should not be doing, it would have been more progressive for them to see how I was practicing Agile principles. They could have inquired about how my project was doing DevOps before that was in style, or how it was that this non-sanctioned product was extremely well received and people sought me out to help them improve their processes.

This means if you want the organization to be more innovative, you need to find the obstacles that hold people back from being innovative. Often politics and bureaucracy impact an initiative more than the solution itself. If you force everyone to comply with existing tools and processes, then you are imposing a constraint on the team that will limit innovation.

A typical way this manifests itself is leadership pushing the idea that one platform or process can solve every need. This can come in the form of imposing that a particular group do data transformation, or a visualization tool be the way that everyone can do analytics. I have never seen one tool that is good at everything, and you end up balancing the single solution with an unmanageable array of tools and processes. A healthy organization is a learning organization that is always open to improvement. When management encourages pushing boundaries and not taking anything as fact then the company can innovate.

A great example of driving innovation is seen in the approach of Steve Jobs, co-founder of Apple Inc. Jobs was known for his ability to challenge conventional wisdom and existing standards in the technology industry. He emphasized the importance of understanding the fundamental principles underlying a problem to innovate and create groundbreaking solutions. One notable instance was the development of the iPhone, which revolutionized the smartphone industry. Jobs and his team did not just improve on existing phones; they rethought what a phone could be, focusing on user experience and simplicity. This approach led to a product that dramatically altered how people interact with technology.

As a leader, you need to look for the fireflies who are using first principles like Steve Jobs to deliver innovative solutions and nurture, or create, a corporate culture that truly challenges what has been done without artificial constraints.

Reasoning by first principles removes the impurity of assumptions and conventions. What remains is the essentials. It’s one of the best mental models you can use to improve your thinking because the essentials allow you to see where reasoning by analogy might lead you astray.

The transformative and innovative thinkers will either comply or leave, both of which are undesirable. In my case I tended to leave. In every organization where I have worked, I have managed to make a significant impact, often through sheer determination. During my time at one such company, our goal was to introduce a data catalog. By analyzing the problem, I was able to discern what was essential for our organization versus an elaborate and idealistic vision of a catalog capable of doing everything. While the IT organization felt it would be better to create a home-grown catalog, I understood that our biggest obstacle was getting people to use a catalog in the first place, so time to market was critical. I found that Alation met the needs we had, while IT kept to their vision of building an all-encompassing catalog. In 3 months I had deployed Alation, and 1.5 years later, the home-grown solution was a tenth as good. This approach of breaking down the problem to its basic elements and building up from there was critical. It is often underestimated how challenging it is to develop and maintain custom software. This experience highlights the effectiveness of first principles thinking in deploying practical and efficient solutions.

The reality is that not everyone possesses the tenacity to advocate for change, especially in the face of substantial resistance. Not only that, but I have also witnessed people being ostracized for thinking differently, while others were promoted for fitting in. It is crucial to seek out divergent thinkers and consider the validity of their perspectives, instead of forcing them to conform. This is why true digital transformation necessitates a shift in culture.

When an individual, much like a firefly that does not flash in unison with the rest, finds themselves out of sync with the collective rhythm, they face a decision: conform and synchronize with the group or venture out to find a new collective that resonates with their unique spark.

True transformational change must come from the top. Achieving enterprise digital transformation requires a deep and bold questioning of the status quo. We must critically assess our processes: Is a particular task truly necessary for a certain group? Can we identify and eliminate inefficiencies? Will adding another layer of approval or inspection genuinely enhance outcomes? It is essential to remember that human behavior often has a more profound impact than any technology or process we implement. When decision-making is centralized within one group, solutions are inevitably skewed to reflect their viewpoint. Too often, I have witnessed decisions justified by cost considerations that, upon closer inspection, proved detrimental in the broader context. An effective strategy involves analyzing the entire system, recognizing that optimizing the whole may require accepting lower efficiency in some areas.

The key is to align with the needs of users and the organization and engage leadership in this journey. With a united front, tackling the 'corporate dragons' becomes a more manageable endeavor. One practical approach is employing methodologies like the 'Jobs to be Done' framework.

Company culture and change management are frequently overlooked in the pursuit of process improvement. Employees operate within their limitations, while management ponders the lack of innovation and agility compared to other companies. The simpler path might seem to be increasing staff or updating technology, but the heart of transformation lies in the mindset of the organization. Leaders aiming for a lasting impact must embrace first principles thinking, ready to scrutinize and challenge established norms. Transformational change rarely stems from incremental improvements; truly innovative companies are those that dare to think and act differently. The organization thus faces a pivotal choice: will it adapt to a new rhythm, or compel its 'new fireflies' to fall in line with the existing order?

The top dbt alternatives include Datacoves, SQLMesh, Bruin Data, Dataform, and visual ETL tools such as Alteryx, Matillion, and Informatica. Code-first engines offer stronger rigor, testing, and CI/CD, while GUI platforms emphasize ease of use and rapid prototyping. Teams choose these alternatives when they need more security, governance, or flexibility than dbt Core or dbt Cloud provide.

The top dbt alternatives include Datacoves, SQLMesh, Bruin Data, Dataform, and GUI-based ETL tools such as Alteryx, Matillion, and Informatica.

Teams explore dbt alternatives when they need stronger governance, private deployments, or support for Python and code-first workflows that go beyond SQL. Many also prefer GUI-based ETL tools for faster onboarding. Recent market consolidation, including Fivetran acquiring SQLMesh and merging with dbt Labs, has increased concerns about vendor lock-in, which makes tool neutrality and platform flexibility more important than ever.

Teams look for dbt alternatives when they need stronger orchestration, consistent development environments, Python support, or private cloud deployment options that dbt Cloud does not provide.

Organizations evaluating dbt alternatives typically compare tools across three categories. Each category reflects a different approach to data transformation, development preferences, and organizational maturity.

Organizations consider alternatives to dbt Cloud when they need more flexibility, stronger security, or support for development workflows that extend beyond dbt. Teams comparing platform options often begin by evaluating the differences between dbt Cloud vs dbt Core.

Running enterprise-scale ELT pipelines often requires a full orchestration layer, consistent development environments, and private deployment options that dbt Cloud does not provide. Costs can also increase at scale (see our breakdown of dbt pricing considerations), and some organizations prefer to avoid features that are not open source to reduce long-term vendor lock-in.

This category includes platforms that deliver the benefits of dbt Cloud while providing more control, extensibility, and alignment with enterprise data platform requirements.

Datacoves provides a secure, flexible platform that supports dbt, SQLMesh, and Bruin in a unified environment with private cloud or VPC deployment.

Datacoves is an enterprise data platform that serves as a secure, flexible alternative to dbt Cloud. It supports dbt Core, SQLMesh, and Bruin inside a unified development and orchestration environment, and it can be deployed in your private cloud or VPC for full control over data access and governance.

Benefits

Flexibility and Customization:

Datacoves provides a customizable in-browser VS Code IDE, Git workflows, and support for Python libraries and VS Code extensions. Teams can choose the transformation engine that fits their needs without being locked into a single vendor.

Handling Enterprise Complexity:

Datacoves includes managed Airflow for end-to-end orchestration, making it easy to run dbt and Airflow together without maintaining your own infrastructure. It standardizes development environments, manages secrets, and supports multi-team and multi-project workflows without platform drift.

Cost Efficiency:

Datacoves reduces operational overhead by eliminating the need to maintain separate systems for orchestration, environments, CI, logging, and deployment. Its pricing model is predictable and designed for enterprise scalability.

Data Security and Compliance:

Datacoves can be deployed fully inside your VPC or private cloud. This gives organizations complete control over identity, access, logging, network boundaries, and compliance with industry and internal standards.

Reduced Vendor Lock-In:

Datacoves supports dbt, SQLMesh, and Bruin Data, giving teams long-term optionality. This avoids being locked into a single transformation engine or vendor ecosystem.

Running dbt Core yourself is a flexible option that gives teams full control over how dbt executes. It is also the most resource-intensive approach. Teams choosing DIY dbt Core must manage orchestration, scheduling, CI, secrets, environment consistency, and long-term platform maintenance on their own.

Benefits

Full Control:

Teams can configure dbt Core exactly as they want and integrate it with internal tools or custom workflows.

Cost Flexibility:

There are no dbt Cloud platform fees, but total cost of ownership often increases as the system grows.

Considerations

High Maintenance Overhead:

Teams must maintain Airflow or another orchestrator, build CI pipelines, manage secrets, and keep development environments consistent across users.

Requires Platform Engineering Skills:

DIY dbt Core works best for teams with strong Kubernetes, CI, Python, and DevOps expertise. Without this expertise, the environment becomes fragile over time.

Slow to Scale:

As more engineers join the team, keeping dbt environments aligned becomes challenging. Onboarding, upgrades, and platform drift create operational friction.

Security and Compliance Responsibility:

Identity, permissions, logging, and network controls must be designed and maintained internally, which can be significant for regulated organizations.
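To make the CI part of that maintenance burden concrete, here is a minimal sketch of the kind of helper a DIY team ends up writing and owning. It assembles a dbt Core command that builds only modified models and defers unchanged parents to production artifacts. The function name, the `ci` target, and the `prod-artifacts` directory are hypothetical; the flags used (`--target`, `--select state:modified+`, `--defer`, `--state`) are standard dbt CLI options, but how you store and fetch production artifacts is an assumption that depends on your setup.

```python
import shlex
from typing import List, Optional


def build_dbt_ci_command(
    target: str = "ci",               # hypothetical target name from profiles.yml
    state_dir: Optional[str] = None,  # hypothetical folder holding production manifest.json
) -> List[str]:
    """Return a dbt CLI invocation for a CI run as an argument list.

    Without state_dir, the whole project is built. With state_dir, only
    modified models and their downstream children are selected, and
    unchanged upstream models are deferred to the production state.
    """
    cmd = ["dbt", "build", "--target", target]
    if state_dir:
        cmd += ["--select", "state:modified+", "--defer", "--state", state_dir]
    return cmd


def render(cmd: List[str]) -> str:
    """Render the argument list as a shell-safe string for CI logs."""
    return shlex.join(cmd)
```

A CI job could pass the returned list to `subprocess.run(cmd, check=True)` so a failed model or test fails the pipeline. Fetching production artifacts, injecting warehouse credentials, and keeping the dbt version identical across developers and CI are the parts this sketch leaves out, and they are where most of the ongoing effort goes.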

Teams that prefer code-first tools often look for dbt alternatives that provide strong SQL modeling, Python support, and seamless integration with CI/CD workflows and automated testing. These are part of a broader set of data transformation tools. Code-based ETL tools give developers greater control over transformations, environments, and orchestration patterns than GUI platforms. Below are four code-first contenders that organizations should evaluate.

Code-first dbt alternatives like SQLMesh, Bruin Data, and Dataform provide stronger CI/CD integration, automated testing, and more control over complex transformation workflows.

SQLMesh is an open-source framework for SQL and Python-based data transformations. It provides strong visibility into how changes impact downstream models and uses virtual data environments to preview changes before they reach production.

Benefits

Efficient Development Environments:

Virtual environments reduce unnecessary recomputation and speed up iteration.

Considerations

Part of the Fivetran Ecosystem:

SQLMesh was acquired by Fivetran, which may influence its future roadmap and level of independence.

Dataform is a SQL-based transformation framework built specifically for BigQuery. It enables teams to create table definitions, manage dependencies, document models, and configure data quality tests inside the Google Cloud ecosystem. It also provides version control and integrates with GitHub and GitLab.

Benefits

Centralized BigQuery Development:

Dataform keeps all modeling and testing within BigQuery, reducing context switching and making it easier for teams to collaborate using familiar SQL workflows.

Considerations

Focused Only on the GCP Ecosystem:

Because Dataform is geared toward BigQuery, it may not be suitable for organizations that use multiple cloud data warehouses.

AWS Glue is a serverless data integration service that supports Python-based ETL and transformation workflows. It works well for organizations operating primarily in AWS and provides native integration with services like S3, Lambda, and Athena.

Benefits

Python-First ETL in AWS:

Glue supports Python scripts and PySpark jobs, making it a good fit for engineering teams already invested in the AWS ecosystem.

Considerations

Requires Engineering Expertise:

Glue can be complex to configure and maintain, and its Python-centric approach may not be ideal for SQL-first analytics teams.

Bruin is a modern SQL-based data modeling framework designed to simplify development, testing, and environment-aware deployments. It offers a familiar SQL developer experience while adding guardrails and automation to help teams manage complex transformation logic.

Benefits

Modern SQL Modeling Experience:

Bruin provides a clean SQL-first workflow with strong dependency management and testing.

Considerations

Growing Ecosystem:

Bruin is newer than dbt and has a smaller community and fewer third-party integrations.

While code-based transformation tools provide the most flexibility and long-term maintainability, some organizations prefer graphical user interface (GUI) tools. These platforms use visual, drag-and-drop components to build data integration and transformation workflows. Many of these platforms fall into the broader category of no-code ETL tools. GUI tools can accelerate onboarding for teams less comfortable with code editors and may simplify development in the short term. Below are several GUI-based options that organizations often consider as dbt alternatives.

GUI-based dbt alternatives such as Matillion, Informatica, and Alteryx use drag-and-drop interfaces that simplify development and accelerate onboarding for mixed-skill teams.

Matillion is a cloud-based data integration platform that enables teams to design ETL and transformation workflows through a visual, drag-and-drop interface. It is built for ease of use and supports major cloud data warehouses such as Amazon Redshift, Google BigQuery, and Snowflake.

Benefits

User-Friendly Visual Development:

Matillion simplifies pipeline building with a graphical interface, making it accessible for users who prefer low-code or no-code tooling.

Considerations

Limited Flexibility for Complex SQL Modeling:

Matillion’s visual approach can become restrictive for advanced transformation logic or engineering workflows that require version control and modular SQL development.

Informatica is an enterprise data integration platform with extensive ETL capabilities, hundreds of connectors, data quality tooling, metadata-driven workflows, and advanced security features. It is built for large and diverse data environments.

Benefits

Enterprise-Scale Data Management:

Informatica supports complex data integration, governance, and quality requirements, making it suitable for organizations with large data volumes and strict compliance needs.

Considerations

High Complexity and Cost:

Informatica’s power comes with a steep learning curve, and its licensing and operational costs can be significant compared to lighter-weight transformation tools.

Alteryx is a visual analytics and data preparation platform that combines data blending, predictive modeling, and spatial analysis in a single GUI-based environment. It is designed for analysts who want to build workflows without writing code and can be deployed on-premises or in the cloud.

Benefits

Powerful GUI Analytics Capabilities:

Alteryx allows users to prepare data, perform advanced analytics, and generate insights in one tool, enabling teams without strong coding skills to automate complex workflows.

Considerations

High Cost and Limited SQL Modeling Flexibility:

Alteryx is one of the more expensive platforms in this category and is less suited for SQL-first transformation teams who need modular modeling and version control.

Azure Data Factory (ADF) is a fully managed, serverless data integration service that provides a visual interface for building ETL and ELT pipelines. It integrates natively with Azure storage, compute, and analytics services, allowing teams to orchestrate and monitor pipelines without writing code.

Benefits

Strong Integration for Microsoft-Centric Teams:

ADF connects seamlessly with other Azure services and supports a pay-as-you-go model, making it ideal for organizations already invested in the Microsoft ecosystem.

Considerations

Limited Transformation Flexibility:

ADF excels at data movement and orchestration but offers limited capabilities for complex SQL modeling, making it less suitable as a primary transformation engine.

Talend provides an end-to-end data management platform with support for batch and real-time data integration, data quality, governance, and metadata management. Talend Data Fabric combines these capabilities into a single low-code environment that can run in cloud, hybrid, or on-premises deployments.

Benefits

Comprehensive Data Quality and Governance:

Talend includes built-in tools for data cleansing, validation, and stewardship, helping organizations improve the reliability of their data assets.

Considerations

Broad Platform, Higher Operational Complexity:

Talend’s wide feature set can introduce complexity, and teams may need dedicated expertise to manage the platform effectively.

SQL Server Integration Services (SSIS) is part of the Microsoft SQL Server ecosystem and provides data integration and transformation workflows. It supports extracting, transforming, and loading data from a wide range of sources, and offers graphical tools and wizards for designing ETL pipelines.

Benefits

Strong Fit for SQL Server-Centric Teams:

SSIS integrates deeply with SQL Server and other Microsoft products, making it a natural choice for organizations with a Microsoft-first architecture.

Considerations

Not Designed for Modern Cloud Data Warehouses:

SSIS is optimized for on-premises SQL Server environments and is less suitable for cloud-native architectures or modern ELT workflows.

Recent consolidation, including Fivetran's acquisition of SQLMesh and its merger with dbt Labs, has increased concerns about vendor lock-in and pushed organizations to evaluate more flexible transformation platforms.

Organizations explore dbt alternatives when dbt no longer meets their architectural, security, or workflow needs. As teams scale, they often require stronger orchestration, consistent development environments, mixed SQL and Python workflows, and private deployment options that dbt Cloud does not provide.

Some teams prefer code-first engines for deeper CI/CD integration, automated testing, and strong guardrails across developers. Others choose GUI-based tools for faster onboarding or broader integration capabilities. Recent market consolidation, including Fivetran's acquisition of SQLMesh and its merger with dbt Labs, has also increased concerns about vendor lock-in.

These factors lead many organizations to evaluate tools that better align with their governance requirements, engineering preferences, and long-term strategy.

DIY dbt Core offers full control but requires significant engineering work to manage orchestration, CI/CD, security, and long-term platform maintenance.