Datacoves links & resources

Find links and resources for Datacoves, your hub for data management and analytics!

See it in Action

Datacoves Overview

Datacoves is an enterprise DataOps platform with hosted VS Code for dbt development and Airflow. Used by hundreds of users at Fortune 100 enterprises, it enables you to implement data management best practices without compromising data security.

GenAI on Datacoves

Using GenAI to Generate Airflow DAGs to run dbt

In this use case we show how an agent can be used to create a new DAG.

Using GenAI to Expand Star in Select Statement

This video demonstrates how GenAI along with MCP servers can be used in Datacoves to simplify dbt development.

Hidden Dangers of AI: Why LLMs Still Need You

Large Language Models (LLMs) like ChatGPT and Claude are becoming common in modern data workflows.

dbt Cheat Sheets

Case Studies

Choosing dbt Cloud or Core

Build vs Buy Analytics Platform: Hosting Open-Source Tools

Not long ago, the data analytics world relied on monolithic infrastructures—tightly coupled systems that were difficult to scale, maintain, and adapt to changing needs. These legacy setups often resulted in operational bottlenecks, delayed insights, and high maintenance costs. To overcome these challenges, the industry shifted toward what was deemed the Modern Data Stack (MDS)—a suite of focused tools optimized for specific stages of the data engineering lifecycle.

This modular approach was revolutionary, allowing organizations to select best-in-class tools like Airflow for Orchestration or a managed version of Airflow from Astronomer or Amazon without the need to build custom solutions. While the MDS improved scalability, reduced complexity, and enhanced flexibility, it also reshaped the build vs. buy decision for analytics platforms. Today, instead of deciding whether to create a component from scratch, data teams face a new question: Should they build the infrastructure to host open-source tools like Apache Airflow and dbt Core, or purchase their managed counterparts? This article focuses on these two components because pipeline orchestration and data transformation lie at the heart of any organization’s data platform.

What does it mean to build vs buy?

Build

When we say build in terms of open-source solutions, we mean building infrastructure to self-host and manage mature open-source tools like Airflow and dbt. These two tools are popular because they have been vetted by thousands of companies! In addition to hosting and managing these tools, engineers must also ensure they interoperate within their stack and handle security, scalability, and reliability. Needless to say, building is a huge undertaking that should not be taken lightly.

Buy

dbt and Airflow both started out as open-source tools, which were freely available to use due to their permissive licensing terms. Over time, cloud-based managed offerings of these tools were launched to simplify the setup and development process. These managed solutions build upon the open-source foundation, incorporating proprietary features like enhanced user interfaces, automation, security integration, and scalability. The goal is to make the tools more convenient and reduce the burden of maintaining infrastructure while lowering overall development costs. In other words, paid versions arose out of the pain points of self-managing the open-source tools.

This raises the important question: Should you self-manage or pay for your open-source analytics tools?

Comparing build vs. buy: Key tradeoffs

As with most things, both options come with trade-offs, and the “right” decision depends on your organization’s needs, resources, and priorities. By understanding the pros and cons of each approach, you can choose the option that aligns with your goals, budget, and long-term vision.

Building In-House

Pros:

- Customization: The biggest advantage of building in-house is the flexibility to customize the tool to fit your exact use case. You maintain full control, allowing you to align configurations with your organization’s unique needs. However, with great power comes great responsibility—your team must have a deep understanding of the tools, their options, and best practices.

- Control: Owning the entire stack gives your team the ability to integrate deeply with existing systems and workflows, ensuring seamless operation within your ecosystem.

- Cost Perception: Without licensing fees, building in-house may initially appear more cost-effective, particularly for smaller-scale deployments.

Cons:

- High Upfront Investment: Setting up infrastructure requires a considerable time commitment from developers. Tasks like configuring environments, integrating tools like Git or S3 for Airflow DAG syncing, and debugging can consume weeks of developer hours.

- Operational Complexity: Ongoing maintenance—such as managing dependencies, handling upgrades, and ensuring reliability—can be overwhelming, especially as the system grows in complexity.

- Skill Gaps: Many teams underestimate the level of expertise needed to manage Kubernetes clusters, Python virtual environments, and secure credential storage systems like AWS Secrets Manager.

- Experimentation: Your organization ends up relying on the first iteration of whatever the team builds, which can lead to unintended consequences, unhandled edge cases, and security issues.

Example:

A team building Airflow in-house may spend weeks configuring a Kubernetes-backed deployment, managing Python dependencies, and setting up DAG file synchronization via S3 or Git. While the outcome can be tailored to their needs, the time and expertise required represent a significant investment.

Building with open-source is not free. Cons Continued

Before moving on to the buy tradeoffs, it is important to set the record straight. You may have noticed that we did not include “the tool is free to use” as one of our pros for building with open-source. You might have guessed by reading the title of this section, but many people incorrectly believe that building their MDS using open-source tools like dbt is free. In reality, many factors contribute to the true dbt pricing, and the same is true for Airflow.

How can that be? Well, setting up everything you need and managing infrastructure for Airflow and dbt isn’t necessarily plug and play. There is day-to-day work from managing Python virtual environments, keeping dependencies in check, and tackling scaling challenges which require ongoing expertise and attention. Hiring a team to handle this will be critical particularly as you scale. High salaries and benefits are needed to avoid costly mistakes; this can easily cost anywhere from $5,000 to $26,000+/month depending on the size of your team.

In addition to the cost of salaries, let’s look at other possible hidden costs that come with using open-source tools.

Time and expertise

The time it takes to configure, customize, and maintain a complex open-source solution is often underestimated. It’s not until your team is deep in the weeds (resolving issues, figuring out integrations, and troubleshooting configurations) that the actual costs start to surface. With each passing day your ROI is threatened. You want to start gathering insights from your data as soon as possible. Datacoves helped Johnson and Johnson set up their data stack in weeks, and when issues arise, you will need expertise to accelerate the time to resolution.

And then there’s the learning curve. Not all engineers on your team will be seniors, and turnover is inevitable. New hires will need time to get up to speed before they can contribute effectively. This is the human side of technology: while the tools themselves might move fast, people don’t. That ramp-up period, filled with training and trial-and-error, represents a hidden cost.

Security and compliance

Security and compliance add another layer of complexity. With open-source tools, your team is responsible for implementing best practices—like securely managing sensitive credentials with a solution like AWS Secrets Manager. Unlike managed solutions, these features don’t come prepackaged and need to be integrated with the system.

Compliance is no different. Ensuring your solution meets enterprise governance requirements takes time, research, and careful implementation. It’s a process of iteration and refinement, and every hour spent here is another hidden cost, with the added risk of security gaps if it is not done correctly.

Scaling complexities

Scaling open-source tools is where things often get complicated. Beyond everything already mentioned, your team will need to ensure the solution can handle growth. For many organizations, this means deploying on Kubernetes. But with Kubernetes comes steep learning curves and operational challenges. Making sure you always have a knowledgeable engineer available to handle unexpected issues and downtimes can become a challenge. Extended downtime due to this is a hidden cost since business users are impacted as they become reliant on your insights.

Buying a managed solution

A managed solution for Airflow and dbt can solve many of the problems that come with building your own solution from open-source tools by offering hassle-free maintenance, an improved UI/UX, and integrated functionality. Let’s take a look at the pros.

Pros:

- Faster Time to Value: With a managed solution, your team can get up and running quickly without spending weeks—or months—on setup and configuration.

- Reduced Operational Overhead: Managed providers handle infrastructure, maintenance, and upgrades, freeing your team to focus on business objectives rather than operational minutiae.

- Predictable Costs: Managed solutions typically come with transparent pricing models, which can make budgeting simpler compared to the variable costs of in-house built tooling.

- Reliability: Your team is using version 1000+ of a managed solution vs the 1st version of your self-managed solution. This provides reliability and peace of mind that edge cases have been handled and security has been addressed.

Cons:

- Potentially Less Flexibility: This is the biggest con and reason why many teams choose to build. Managed solutions may not allow for the same level of customization as building in-house, which could limit certain niche use cases. Care must be taken to choose a provider that is built for enterprise level flexibility.

- Dependency on a Vendor: Relying on a vendor for your analytics stack introduces some level of risk, such as service disruptions or limited migration paths if you decide to switch providers. Some managed solution providers simply offer the service, but leave it up to your team to “make it work” and troubleshoot. A good provider will have a vested interest in your success, because they can’t afford for you to fail.

Example:

Using a solution like MWAA, teams can leverage managed Airflow and eliminate most infrastructure worries. However, additional configuration and development will be needed for teams to use it with dbt and to troubleshoot infrastructure issues such as containers running out of memory.

Datacoves for Airflow and dbt: The buy that feels like a build

For data teams, the allure of a custom-built solution often lies in its promise of complete control and customization. However, building this requires significant time, expertise, and ongoing maintenance. Datacoves bridges the gap between custom-built flexibility and the simplicity of managed services, offering the best of both worlds.

With Datacoves, teams can leverage managed Airflow and pre-configured dbt environments to eliminate the operational burden of infrastructure setup and maintenance. This allows data teams to focus on what truly matters—delivering insights and driving business decisions—without being bogged down by tool management.

Unlike other managed solutions for dbt or Airflow, which often compromise on flexibility for the sake of simplicity, Datacoves retains the adaptability that custom builds are known for. By combining this flexibility with the ease and efficiency of managed services, Datacoves empowers teams to accelerate their analytics workflows while ensuring scalability and control.

Datacoves doesn’t just run the open-source solutions; through real-world implementations, the platform has been molded to handle enterprise complexity while simplifying project onboarding. With Datacoves, teams don’t have to compromise on features like Datacoves-Mesh (aka dbt-mesh), column level lineage, GenAI, Semantic Layer, etc. Best of all, the company’s goal is to make you successful and remove hosting complexity without introducing vendor lock-in. What Datacoves does, you can do yourself if given enough time, experience, and money. Finally, for security-conscious organizations, Datacoves is the only solution on the market that can be deployed in your private cloud with white-glove enterprise support.

Datacoves isn’t just a platform—it’s a partnership designed to help your data team unlock their potential. With infrastructure taken care of, your team can focus on what they do best: generating actionable insights and maximizing your ROI.

Conclusion

The build vs. buy debate has long been a challenge for data teams, with building offering flexibility at the cost of complexity, and buying sacrificing flexibility for simplicity. As discussed earlier in the article, solutions like dbt and Airflow are powerful, but managing them in-house requires significant time, resources, and expertise. On the other hand, managed offerings like dbt Cloud and MWAA simplify operations but often limit customization and control.

Datacoves bridges this gap, providing a managed platform that delivers the flexibility and control of a custom build without the operational headaches. By eliminating the need to manage infrastructure, scaling, and security, Datacoves enables data teams to focus on what matters most: delivering actionable insights and driving business outcomes.

As highlighted in Fundamentals of Data Engineering, data teams should prioritize extracting value from data rather than managing the tools that support them. Datacoves embodies this principle, making the argument to build obsolete. Why spend weeks—or even months—building when you can have the customization and adaptability of a build with the ease of a buy? Datacoves is not just a solution; it’s a rethinking of how modern data teams operate, helping you achieve your goals faster, with fewer trade-offs.

Get Our Free Data Platform Evaluation Worksheet

Get Our Free ebook dbt Cloud vs dbt Core

Comparisons

Datacoves vs dbt Cloud

A helpful comparison of Datacoves and dbt Cloud.

Datacoves vs Alteryx

A helpful comparison of Datacoves and Alteryx.

Datacoves vs Matillion

A helpful comparison of Datacoves and Matillion.

dbt Cloud vs dbt Core vs Managed dbt Core

A helpful comparison of dbt Cloud and dbt Core.

Useful Resources

Microsoft Fabric: 10 Reasons It's Still Not the Right Choice in 2025

There's a lot of buzz around Microsoft Fabric these days. Some people are all-in, singing its praises from the rooftops, while others are more skeptical, waving the "buyer beware" flag. After talking with the community and observing Fabric in action, we're leaning toward caution. Why? Well, like many things in the Microsoft ecosystem, it's a jack of all trades but a master of none. Many of the promises seem to be more marketing hype than substance, leaving you with "marketecture" instead of solid architecture. While the product has admirable, lofty goals, Microsoft has many wrinkles to iron out.

In this article, we'll dive into 10 reasons why Microsoft Fabric might not be the best fit for your organization in 2025. By examining both the promises and the current realities of Microsoft Fabric, we hope to equip you with the information needed to make an informed decision about its adoption.

What is Microsoft Fabric?

Microsoft Fabric is marketed as a unified, cloud-based data platform developed to streamline data management and analytics within organizations. Its goal is to integrate various Microsoft services into a single environment and to centralize and simplify data operations.

This means that Microsoft Fabric is positioning itself as an all-in-one analytics platform designed to handle a wide range of data-related tasks. A place to handle data engineering, data integration, data warehousing, data science, real-time analytics, and business intelligence. A one stop shop if you will. By consolidating these functions, Fabric hopes to provide a seamless experience for organizations to manage, analyze, and gather insights from their data.

Core Components of Microsoft Fabric

- OneLake: OneLake is the foundation of Microsoft Fabric, serving as a unified data lake that centralizes storage across Fabric services. It is built on Delta Lake technology and leverages Azure Blob Storage, similar to how Apache Iceberg is used for large-scale cloud data management.

- Synapse Data Warehouse: Similar to Amazon Redshift, this provides storage and management for structured data. It supports SQL-based querying and analytics, aiming to facilitate data warehousing needs.

- Synapse Data Engineering: A compute engine built on Apache Spark, similar to Databricks' offering. It is intended to support tasks such as data cleaning, transformation, and feature engineering.

- Azure Data Factory: A tool for pipeline orchestration and data loading, which is also part of Synapse Data Engineering.

- Synapse Data Science: Similar to Jupyter Notebooks, but able to run only on Azure Spark. It is designed to support data scientists in developing predictive analytics and AI solutions by leveraging Azure ML and Azure Spark services.

- Synapse Real-Time Analytics: Enables the analysis of streaming data from various sources including Kafka, Kinesis, and CDC sources.

- Power BI: A BI (business intelligence) tool, like Tableau, designed to create data visualizations and dashboards.

Fabric presents itself as an all-in-one solution, but is it really? Let’s break down where the marketing meets reality.

10 Reasons It’s Still Not the Right Choice in 2025

While Microsoft positions Fabric as an innovative step forward, much of it is clever marketing and repackaging of existing tools. Here’s what’s claimed and the reality behind these claims:

1. Fragmented User Experience, Not True Unification

Claim: Fabric combines multiple services into a seamless platform, aiming to unify and simplify workflows, reduce tool sprawl, and make collaboration easier with a one-stop shop.

Reality:

- Rebranded Existing Services: Fabric is mainly repackaging existing Azure services under a new brand. For example, Fabric bundles Azure Data Factory (ADF) for pipeline orchestration, Azure Synapse Analytics for traditional data warehousing needs, and Azure Spark for distributed workloads. While there are some enhancements to Synapse to synchronize data from OneLake, the core functionalities remain largely unchanged. Power BI is also part of Fabric, and this tool has existed for years, as have notebooks under the Synapse Data Science umbrella.

- Steep Learning Curve and Complexity: Fabric claims to create a unified experience that doesn’t exist in other platforms, but it just bundles a wide range of services, from data engineering to analytics, and introduces new concepts (like KQL, a proprietary query language used only in the Azure ecosystem). Some tools are geared to different user personas such as ADF for data engineers and Power BI for business analysts, but to “connect” an end-to-end process, users would need to interact with different tools. This can be overwhelming, particularly for teams without deep Microsoft expertise. Each tool has its own quirks, and even services with overlapping functionality don’t do the same thing in exactly the same way. This just complicates the learning process and reduces overall efficiency.

2. Performance Bottlenecks & Throttling Issues

Claim: Fabric offers a scalable and flexible platform.

Reality: In practice, managing scalability in Fabric can be difficult. Scaling isn’t a one‑click, all‑services solution—instead, it requires dedicated administrative intervention. For example, you often have to manually pause and un-pause capacity to save money, a process that is far from ideal if you’re aiming for automation. Although there are ways to automate these operations, setting up such automation is not straightforward. Additionally, scaling isn’t uniform across the board; each service or component must be configured individually, meaning that you must treat them on a case‑by‑case basis. This reality makes the promise of scalability and flexibility a challenge to realize without significant administrative overhead.

3. Capacity-Based Pricing Creates Cost Uncertainty

Claim: Fabric offers predictable, cost-effective pricing.

Reality: While Fabric's pricing structure appears straightforward, several hidden costs and adoption challenges can impact overall expenses and efficiency:

- Cost uncertainty: Microsoft Fabric uses a capacity-based pricing model that requires organizations to purchase predefined Capacity Units (CUs). Organizations need to carefully assess their workload requirements to optimize resource allocation and control expenses. Although a pay-as-you-go (PAYG) option is available, it often demands manual intervention or additional automation to adjust resources dynamically. This means organizations often need to overprovision compute power to avoid throttling, leading to inefficiencies and increased costs. The problem is that you pay up front for what you think you will use in exchange for a 40% discount. If you don’t use all of the capacity, the unused portion is wasted. If you go over capacity, you can configure PAYG, but it’s at full price. Unlike true serverless solutions, you pay for allocated capacity regardless of actual usage. This isn’t flexible like the cloud was intended to be. 👎

- Throttling and Performance Degradation: Exceeding purchased capacity can result in throttling, causing degraded performance. To prevent this, organizations might feel compelled to purchase higher capacity tiers, further escalating costs.

- Visibility and Cost Management: Users have reported challenges in monitoring and predicting costs due to limited visibility into additional expenses. This lack of transparency necessitates careful monitoring and manual intervention to manage budgets effectively.

- Adoption and Training Time: It’s important to note that implementing Fabric requires significant time investment in training and adapting existing workflows. While this is the case with any new platform, Microsoft is notorious for complexity in their tooling and this can lead to longer adoption periods, during which productivity may temporarily decline.

All this to say that the pricing model is not good unless you can predict with great accuracy exactly how much you will spend every single day, and who knows that? Check out this article on the hidden cost of Fabric, which goes into detail and cost comparisons.
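To see why capacity planning is hard under this model, consider a minimal sketch of the arithmetic described above. The 40% reservation discount comes from the text; the price per CU-hour, the demand profile, and the function name `capacity_cost` are hypothetical values chosen for illustration, not Microsoft's actual rates or billing rules.

```python
RESERVED_DISCOUNT = 0.40       # discount for reserved capacity, as cited above
PAYG_RATE_PER_CU_HOUR = 0.20   # hypothetical price, NOT a real Microsoft rate


def capacity_cost(reserved_cus, hourly_demand_cus):
    """Return (reserved_cost, overage_cost, wasted_cu_hours) for one period.

    reserved_cus: capacity units bought up front (billed whether used or not)
    hourly_demand_cus: CUs the workload actually needed in each hour
    """
    reserved_rate = PAYG_RATE_PER_CU_HOUR * (1 - RESERVED_DISCOUNT)
    hours = len(hourly_demand_cus)

    # Reserved capacity is billed for every hour, busy or idle.
    reserved_cost = reserved_cus * hours * reserved_rate

    # Demand above the reservation is billed at the full pay-as-you-go rate.
    overage_cu_hours = sum(max(0, d - reserved_cus) for d in hourly_demand_cus)
    overage_cost = overage_cu_hours * PAYG_RATE_PER_CU_HOUR

    # Demand below the reservation is capacity paid for but never used.
    wasted_cu_hours = sum(max(0, reserved_cus - d) for d in hourly_demand_cus)

    return reserved_cost, overage_cost, wasted_cu_hours


# A spiky day (hypothetical): quiet for 20 hours, then a 4-hour burst.
demand = [8] * 20 + [64] * 4

for reserved in (8, 32, 64):
    reserved_cost, overage_cost, wasted = capacity_cost(reserved, demand)
    total = reserved_cost + overage_cost
    print(f"reserve {reserved:>2} CUs: total ${total:7.2f}, wasted {wasted} CU-hours")
```

In this made-up profile, reserving for the peak leaves most of the purchased capacity idle, while reserving for the baseline pushes the burst onto full-price PAYG. The real numbers depend entirely on your own workload, which is exactly what makes them hard to forecast.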

4. Limited Compatibility with Non-Microsoft Tools

Claim: Fabric supports a wide range of data tools and integrations.

Reality: Fabric is built around tight integration with other Fabric services and Microsoft tools such as Office 365 and Power BI, making it less ideal for organizations that prefer a “best‑of‑breed” approach. If you rely on tools like Tableau, Looker, open-source solutions like Lightdash, or other non‑Microsoft solutions, this can severely limit flexibility and complicate future migrations.

While third-party connections are possible, they don’t integrate as smoothly as those in the MS ecosystem like Power BI, potentially forcing organizations to switch tools just to make Fabric work.

5. Poor DataOps & CI/CD Support

Claim: Fabric simplifies automation and deployment for data teams by supporting modern DataOps workflows.

Reality: Despite some scripting support, many components remain heavily UI‑driven. This hinders full automation and integration with established best practices for CI/CD pipelines (e.g., using Terraform, dbt, or Airflow). Organizations that want to mature data operations with agile DataOps practices find themselves forced into manual workarounds and struggle to integrate Fabric tools into their CI/CD processes. Unlike tools such as dbt, there is no built-in data quality or unit testing, so additional tools would need to be added to Fabric to achieve this functionality.

6. Security Gaps & Compliance Risks

Claim: Microsoft Fabric provides enterprise-grade security, compliance, and governance features.

Reality: While Microsoft Fabric offers robust security measures like data encryption, role-based access control, and compliance with various regulatory standards, there are some concerns organizations should consider.

One major complaint is that access permissions do not always persist consistently across Fabric services, leading to unintended data exposure.

For example, users can still retrieve restricted data from reports due to how Fabric handles permissions at the semantic model level. Even when specific data is excluded from a report, built-in features may allow users to access the data, creating compliance risks and potential unauthorized access. Read more: Zenity - Inherent Data Leakage in Microsoft Fabric.

While some of these security risks can be mitigated, they require additional configurations and ongoing monitoring, making management more complex than it should be. Ideally, these protections should be unified and work out of the box rather than requiring extra effort to lock down sensitive data.

7. Lack of Maturity & Changes that Disrupt Workflow

Claim: Fabric is presented as a mature, production-ready analytics platform.

Reality: The good news for Fabric is that it is still evolving. The bad news is, it's still evolving. That evolution impacts users in several ways:

- Frequent Updates and Unstable Workflows: Many features remain in preview, and regular updates can sometimes disrupt workflows or introduce unexpected issues. Users have noted that the platform’s UI/UX is continually changing, which can impact consistency in day-to-day operations. Just when you figure out how to do something, the buttons change. 😤

- Limited Features: Several functionalities are still in preview or implementation is still in progress. For example, dynamic connection information, Key Vault integration for connections, and nested notebooks are not yet fully implemented. This restricts the platform’s applicability in scenarios that rely on these advanced features.

- Bugs and Stability Issues: A range of known issues—from data pipeline failures to problems with Direct Lake connections—highlights the platform’s instability. These bugs can make Fabric unpredictable for mission-critical tasks. One user lost 3 months of work!

8. Black Box Automation & Limited Customization

Claim: Fabric automates many complex data processes to simplify workflows.

Reality: Fabric is heavy on abstractions and this can be a double‑edged sword. While at first it may appear to simplify things, these abstractions lead to a lack of visibility and control. When things go wrong it is hard to debug and it may be difficult to fine-tune performance or optimize costs.

For organizations that need deep visibility into query performance, workload scheduling, or resource allocation, Fabric lacks the granular control offered by competitors like Databricks or Snowflake.

9. Limited Resource Governance and Alerting

Claim: Fabric offers comprehensive resource governance and robust alerting mechanisms, enabling administrators to effectively manage and troubleshoot performance issues.

Reality: Fabric currently lacks fine-grained resource governance features making it challenging for administrators to control resource consumption and mitigate issues like the "noisy neighbor" problem, where one service consumes disproportionate resources, affecting others.

The platform's alerting mechanisms are also underdeveloped. While some basic alerting features exist, they often fail to provide detailed information about which processes or users are causing issues. This can make debugging an absolute nightmare. For example, users have reported challenges in identifying specific reports causing slowdowns due to limited visibility in the capacity metrics app. This lack of detailed alerting makes it difficult for administrators to effectively monitor and troubleshoot performance issues, often requiring third-party tools for more granular governance and alerting capabilities. In other words, not so all-in-one after all.

10. Missing Features & Gaps in Functionality

Claim: Fabric aims to be an all-in-one platform that covers every aspect of data management.

Reality: Despite its broad ambitions, key features are missing such as:

- Geographical Availability: Fabric's data warehousing does not support multiple geographies, which could be a constraint for global organizations seeking localized data storage and processing.

- Garbage Collection: Parquet files that are no longer needed are not automatically removed from storage, potentially leading to inefficient storage utilization.

While these are just a couple of examples, it's important to note that missing features will compel users to seek third-party tools to fill the gaps, introducing additional complexities. Integrating external solutions is not always straightforward with Microsoft products and often introduces a lot of overhead. Alternatively, users will have to go without the features and create workarounds or add more tools, which we know will lead to issues down the road.

Conclusion

Microsoft Fabric promises a lot, but its current execution falls short. Instead of an innovative new platform, Fabric repackages existing services, often making things more complex rather than simpler.

That’s not to say Fabric won’t improve—Microsoft has the resources to refine the platform. But as of 2025, the downsides outweigh the benefits for many organizations.

If your company values flexibility, cost control, and seamless third-party integrations, Fabric may not be the best choice. There are more mature, well-integrated, and cost-effective alternatives that offer the same features without the Microsoft lock-in.

Time will tell if Fabric evolves into the powerhouse it aspires to be. For now, the smart move is to approach it with a healthy dose of skepticism.

👉 Before making a decision, thoroughly evaluate how Fabric fits into your data strategy. Need help assessing your options? Check out this data platform evaluation worksheet.

Event-Driven Airflow: Using Datasets for Smarter Scheduling

In Apache Airflow, scheduling workflows has traditionally been managed using the schedule_interval parameter, which accepts definitions such as cron expressions or timedelta objects to establish time-based intervals for DAG (Directed Acyclic Graph) executions. Airflow was already a powerful scheduler, but it became even more efficient in version 2.4 with the introduction of Datasets into scheduling. This advancement enables data-driven DAG execution, allowing workflows to be triggered by specific data updates rather than relying on predetermined time intervals.

In this article, we'll dive into the concept of Airflow datasets, explore their transformative impact on workflow orchestration, and provide a step-by-step guide to schedule your DAGs using Datasets!

Understanding Airflow Scheduling (Pre-Datasets)

DAG scheduling in Airflow was primarily time-based, relying on parameters like schedule_interval and start_date to define execution times. With this setup there were three ways to schedule your DAGs: cron expressions, presets, or timedelta objects. Let's examine each one.

- Cron Expressions: These expressions allowed precise scheduling. For example, to run a DAG daily at 4:05 AM, you would set schedule_interval='5 4 * * *'.

- Presets: Airflow provided string presets for common intervals:

  - @hourly: Runs the DAG at the beginning of every hour.
  - @daily: Runs the DAG at midnight every day.
  - @weekly: Runs the DAG at midnight on the first day of the week.
  - @monthly: Runs the DAG at midnight on the first day of the month.
  - @yearly: Runs the DAG at midnight on January 1st.

- Timedelta Objects: For intervals not easily expressed with cron, a timedelta object could be used. For instance, schedule_interval=timedelta(hours=6) would schedule the DAG every six hours.
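
To compare the three styles side by side, here is a minimal sketch that uses only the standard library. The cron strings are the equivalents Airflow documents for its presets. PRESET_TO_CRON and next_runs are illustrative names for this example, not Airflow APIs, and the helper only shows the spacing of a fixed interval, not Airflow's exact run timing.

```python
from datetime import datetime, timedelta

# Cron equivalents of Airflow's string presets (as listed in the Airflow docs).
PRESET_TO_CRON = {
    "@hourly": "0 * * * *",
    "@daily": "0 0 * * *",
    "@weekly": "0 0 * * 0",
    "@monthly": "0 0 1 * *",
    "@yearly": "0 0 1 1 *",
}


def next_runs(start: datetime, interval: timedelta, count: int) -> list[datetime]:
    """Return `count` timestamps spaced by a fixed interval, starting at `start`."""
    return [start + i * interval for i in range(count)]


# A timedelta(hours=6) interval gives four evenly spaced slots per day.
runs = next_runs(datetime(2025, 3, 31), timedelta(hours=6), 4)
print([r.strftime("%H:%M") for r in runs])  # ['00:00', '06:00', '12:00', '18:00']
```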

Limitations of Time-Based Scheduling

While effective for many jobs, time-based scheduling had some limitations:

Fixed Timing: DAGs ran at predetermined times regardless of data readiness, which is the gap Datasets address. If data wasn't available at the scheduled time, tasks could fail or process incomplete data.

Sensors and Polling: To handle data dependencies, sensors were employed to wait for data availability. However, sensors often relied on continuous polling, which could be resource-intensive and lead to inefficiencies.
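
To make the polling cost concrete, here is a toy model of a sensor-style wait loop. It is a sketch, not Airflow's sensor implementation: wait_by_polling is a hypothetical helper, and the parameter names poke_interval and timeout are borrowed from Airflow sensors only for familiarity. The sleep function is stubbed so the example runs instantly.

```python
import time
from typing import Callable


def wait_by_polling(
    is_ready: Callable[[], bool],
    poke_interval: float,
    timeout: float,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Poll `is_ready` until it returns True and return the number of checks made.

    Raises TimeoutError once `timeout` seconds of waiting have accumulated.
    """
    waited = 0.0
    checks = 0
    while True:
        checks += 1
        if is_ready():
            return checks
        if waited >= timeout:
            raise TimeoutError(f"data not ready after {checks} checks")
        sleep(poke_interval)
        waited += poke_interval


# Simulate data that lands after 5 minutes while we poll every 60 seconds.
clock = {"now": 0.0}


def fake_sleep(seconds: float) -> None:
    clock["now"] += seconds


checks = wait_by_polling(lambda: clock["now"] >= 300, 60, 3600, sleep=fake_sleep)
print(checks)  # 6 checks, five of which found nothing
```

Every unsuccessful check is work done only to learn that nothing has changed, which is the inefficiency an event-driven trigger avoids.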

Airflow Datasets were created to overcome these scheduling limitations.

Intro to Airflow Datasets

A Dataset is a way to represent a specific set of data. Think of it as a label or reference to a particular data resource. This can be anything: a CSV file, an S3 bucket, or a SQL table. A Dataset is defined by passing a string path to the Dataset() object. This path acts as an identifier: it doesn't have to be a real file or URL, but it should be consistent, unique, and ideally composed of ASCII characters (plain English letters, numbers, slashes, underscores, etc.).

from airflow.datasets import Dataset

my_dataset = Dataset("s3://my-bucket/my-data.csv")
# or
my_dataset = Dataset("my_folder/my_file.txt")

When using Airflow Datasets, remember that Airflow does not monitor the actual contents of your data. It doesn’t check if a file or table has been updated.

Instead, it tracks task completion. When a task that lists a Dataset in its outlets finishes successfully, Airflow marks that Dataset as “updated.” This means the task doesn’t need to actually modify any data — even a task that only runs a print() statement will still trigger any Consumer DAGs scheduled on that Dataset. It’s up to your task logic to ensure the underlying data is actually being modified when necessary. Even though Airflow isn’t checking the data directly, this mechanism still enables event-driven orchestration because your workflows can run when upstream data should be ready.

For example, if one DAG has a task that generates a report and writes it to a file, you can define a Dataset for that file. Another DAG that depends on the report can be triggered automatically as soon as the first DAG’s task completes. This removes the need for rigid time-based scheduling and reduces the risk of running on incomplete or missing data.
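
The behavior described above can be modeled in a few lines of plain Python. This is a toy stand-in for the scheduler's bookkeeping, not Airflow code: schedule_on and run_task are hypothetical helpers, and the URI and DAG id are made up for illustration.

```python
from collections import defaultdict
from typing import Callable

# Toy bookkeeping: dataset URI -> ids of consumer DAGs scheduled on it.
consumers: dict[str, list[str]] = defaultdict(list)
triggered: list[str] = []


def schedule_on(dag_id: str, uri: str) -> None:
    """Register a consumer DAG against a dataset URI."""
    consumers[uri].append(dag_id)


def run_task(task: Callable[[], None], outlets: list[str]) -> None:
    """Run a task; on success, mark each outlet as updated and queue its consumers."""
    task()  # an exception here propagates, so no update is recorded
    for uri in outlets:
        triggered.extend(consumers[uri])


schedule_on("report_consumer", "/path/to/report.csv")

# The task never touches the file, yet the dataset still counts as "updated".
run_task(lambda: print("no data written"), outlets=["/path/to/report.csv"])
print(triggered)  # ['report_consumer']
```

The update signal comes from task success alone, so it is the task's job to make sure the data really changed.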

Datasets give you a new way to schedule your DAGs: based on when upstream tasks complete, not just on a time interval. Instead of relying on schedule_interval, Airflow introduced the schedule parameter to support both time-based and dataset-driven workflows. When a producer task finishes and "updates" a dataset, any DAGs that depend on that dataset can be triggered automatically. And if you want even more control, you can update your Dataset externally using the Airflow API.
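
As a rough sketch of an external update, the snippet below builds (but does not send) a request against Airflow's stable REST API. It assumes Airflow 2.9 or later, where the API accepts POST /api/v1/datasets/events with a dataset_uri field, and it assumes basic authentication is enabled. The host and credentials are placeholders, and build_dataset_event_request is a hypothetical helper. Check the API reference for your Airflow version before relying on it.

```python
import base64
import json
import urllib.request


def build_dataset_event_request(
    base_url: str, dataset_uri: str, username: str, password: str
) -> urllib.request.Request:
    """Build (but do not send) a request that records an update for `dataset_uri`."""
    body = json.dumps({"dataset_uri": dataset_uri}).encode("utf-8")
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/api/v1/datasets/events",
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {token}",
        },
    )


request = build_dataset_event_request(
    "https://airflow.example.com",  # placeholder host
    "/path/to/your_dataset.csv",    # must match the URI used by the consumer DAG
    "api_user",                     # placeholder credentials
    "api_password",
)
print(request.get_method(), request.full_url)
# To actually send it: urllib.request.urlopen(request)
```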

When using Datasets in Airflow, you'll typically work with two types of DAGs: Producer and Consumer DAGs.

What is a Producer DAG?

A DAG responsible for defining and "updating" a specific Dataset. We say "updating" because Airflow considers a Dataset "updated" simply when a task that lists it in its outlets completes successfully — regardless of whether the data was truly modified.

A Producer DAG:

✅ Must have the Dataset variable defined or imported

✅ Must include a task with the outlets parameter set to that Dataset

What is a Consumer DAG?

A DAG that is scheduled to execute once the Producer DAG's task completes successfully and the Dataset is marked as updated.

A Consumer DAG:

✅ Must reference the same Dataset using the schedule parameter

It’s this producer-consumer relationship that enables event-driven scheduling in Airflow — allowing workflows to run as soon as the data they're dependent on is ready, without relying on fixed time intervals.
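
Because the link between a producer and a consumer is nothing more than a matching URI string, a small typo can break it without raising an error. The sketch below shows one way to catch that with plain Python. unmatched_consumers is a hypothetical helper, not part of Airflow, and the DAG ids and URIs are illustrative.

```python
def unmatched_consumers(
    producer_outlets: dict[str, list[str]],
    consumer_schedules: dict[str, list[str]],
) -> dict[str, list[str]]:
    """Map each consumer DAG id to the scheduled URIs that no producer lists as an outlet."""
    produced = {uri for uris in producer_outlets.values() for uri in uris}
    missing: dict[str, list[str]] = {}
    for dag_id, uris in consumer_schedules.items():
        orphaned = [uri for uri in uris if uri not in produced]
        if orphaned:
            missing[dag_id] = orphaned
    return missing


producers = {"producer_dag": ["/path/to/your_dataset.csv"]}
consumer_dags = {
    "data_aware_consumer_dag": ["/path/to/your_dataset.csv"],
    "typo_consumer_dag": ["/path/to/your-dataset.csv"],  # hyphen instead of underscore
}
print(unmatched_consumers(producers, consumer_dags))
# {'typo_consumer_dag': ['/path/to/your-dataset.csv']}
```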

Tutorial: Scheduling with Datasets

Create a producer DAG

1. Define your Dataset.

In a new DAG file, define a variable using the Dataset object and pass in the path to your data as a string. In this example, it’s the path to a CSV file.

# producer.py
from airflow.datasets import Dataset

# Define the dataset representing the CSV file
csv_dataset = Dataset("/path/to/your_dataset.csv")

2. Create a DAG with a task that updates the CSV dataset.

We’ll use the @dag and @task decorators for a cleaner structure. The key part is passing the outlets parameter to the task. This tells Airflow that the task updates a specific dataset. Once the task completes successfully, Airflow will consider the dataset "updated" and trigger any dependent DAGs.

We’re also using csv_dataset.uri to get the path to the dataset—this is the same path you defined earlier (e.g., "/path/to/your_dataset.csv").

# producer.py
from airflow.decorators import dag, task
from airflow.datasets import Dataset
from datetime import datetime
import pandas as pd
import os

# Define the dataset representing the CSV file
csv_dataset = Dataset("/path/to/your_dataset.csv")

@dag(
    dag_id='producer_dag',
    start_date=datetime(2025, 3, 31),
    schedule='@daily',
    catchup=False,
)
def producer_dag():

    @task(outlets=[csv_dataset])
    def update_csv():
        data = {'column1': [1, 2, 3], 'column2': ['A', 'B', 'C']}
        df = pd.DataFrame(data)
        file_path = csv_dataset.uri

        # Check if the file exists to append or write
        if os.path.exists(file_path):
            df.to_csv(file_path, mode='a', header=False, index=False)
        else:
            df.to_csv(file_path, index=False)

    update_csv()

producer_dag()

Create a Consumer DAG

Now that we have a producer DAG that updates a Dataset, we can create the consumer DAG that depends on it. This is where the magic happens, since this DAG will no longer be time-dependent but Dataset-dependent.

1. Instantiate the same Dataset used in the Producer DAG

In a new DAG file (the consumer), start by defining the same Dataset that was used in the Producer DAG. This ensures both DAGs are referencing the exact same dataset path.

# consumer.py
from airflow.datasets import Dataset

# Define the dataset representing the CSV file
csv_dataset = Dataset("/path/to/your_dataset.csv")

2. Set the schedule to the Dataset

Create your DAG and set the schedule parameter to the Dataset you instantiated earlier (the one being updated by the producer DAG). This tells Airflow to trigger this DAG only when that dataset is updated—no need for time-based scheduling.

# consumer.py
import datetime
from airflow.decorators import dag, task
from airflow.datasets import Dataset

csv_dataset = Dataset("/path/to/your_dataset.csv")

@dag(
    default_args={
        "start_date": datetime.datetime(2024, 1, 1, 0, 0),
        "owner": "Mayra Pena",
        "email": "mayra@example.com",
        "retries": 3
    },
    description="Sample Consumer DAG",
    schedule=[csv_dataset],
    tags=["transform"],
    catchup=False,
)
def data_aware_consumer_dag():

    @task
    def run_consumer():
        print("Processing updated CSV file")

    run_consumer()

dag = data_aware_consumer_dag()

That's it! 🎉 Now this DAG will run whenever the Producer DAG's task completes (updates the file).

DRY Principles for Datasets

When using Datasets, you may reference the same Dataset across multiple DAGs and therefore have to define it many times. There is a simple DRY (Don't Repeat Yourself) way to overcome this.

1. Create a central datasets.py file

To follow DRY (Don't Repeat Yourself) principles, centralize your dataset definitions in a utility module.

Simply create a utils folder and add a datasets.py file.

If you're using Datacoves, your Airflow-related files typically live in a folder named orchestrate, so your path might look like: orchestrate/utils/datasets.py

2. Import the Dataset object

Inside your datasets.py file, import the Dataset class from Airflow:

from airflow.datasets import Dataset

3. Define your Dataset in this file

Now that you’ve imported the Dataset object, define your dataset as a variable. For example, if your DAG writes to a CSV file:

from airflow.datasets import Dataset

# Define the dataset representing the CSV file
CSV_DATASET = Dataset("/path/to/your_dataset.csv")

Notice we’ve written the variable name in all caps (CSV_DATASET). This follows Python convention for constants, signaling that the value shouldn’t change. This makes your code easier to read and maintain.

4. Import the Dataset in your DAG

In your DAG file, simply import the dataset you defined in your utils/datasets.py file and use it as needed.

from airflow.decorators import dag, task
from orchestrate.utils.datasets import CSV_DATASET
from datetime import datetime
import pandas as pd
import os

@dag(
    dag_id='producer_dag',
    start_date=datetime(2025, 3, 31),
    schedule='@daily',
    catchup=False,
)
def producer_dag():

    @task(outlets=[CSV_DATASET])
    def update_csv():
        data = {'column1': [1, 2, 3], 'column2': ['A', 'B', 'C']}
        df = pd.DataFrame(data)
        file_path = CSV_DATASET.uri

        # Check if the file exists to append or write
        if os.path.exists(file_path):
            df.to_csv(file_path, mode='a', header=False, index=False)
        else:
            df.to_csv(file_path, index=False)

    update_csv()

producer_dag()

Now you can reference CSV_DATASET in your DAG's schedule or as a task outlet, keeping your code clean and consistent across projects.🎉

Visualizing Dataset Dependencies in the UI

You can visualize your Datasets, as well as the events they trigger, in the Airflow UI. Three tabs are helpful for implementing and debugging your event-triggered pipelines:

Dataset Events

The Dataset Events sub-tab shows a chronological list of recent events associated with datasets in your Airflow environment. Each entry details the dataset involved, the producer task that updated it, the timestamp of the update, and any triggered consumer DAGs. This view helps you monitor the flow of data, confirm that dataset updates occur as expected, and quickly identify and resolve issues within your data pipelines.

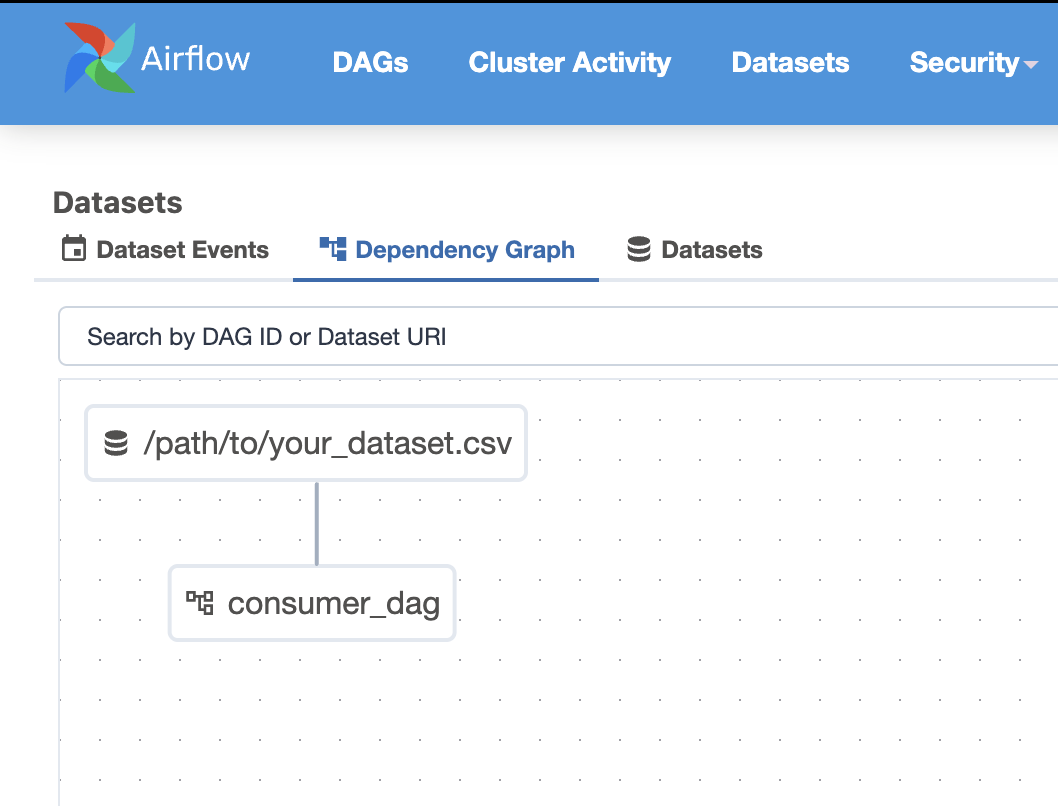

Dependency Graph

The Dependency Graph is a visual representation of the relationships between datasets and DAGs. It illustrates how producer tasks, datasets, and consumer DAGs interconnect, providing a clear overview of data dependencies within your workflows. This graphical depiction helps visualize the structure of your data pipelines to identify potential bottlenecks and optimize your pipeline.

Datasets

The Datasets sub-tab provides a list of all datasets defined in your Airflow instance. For each dataset, it shows important information such as the dataset's URI, associated producer tasks, and consumer DAGs. This centralized view provides efficient management of datasets, allowing users to track dataset usage across various workflows and maintain organized data dependencies.

Best Practices & Considerations

When working with Datasets, there are a few things to take into consideration to keep your pipelines readable and manageable.

Naming datasets meaningfully: Ensure your names are verbose and descriptive. This will help the next person who is looking at your code and even future you.

Avoid overly granular datasets: While Datasets are a great tool, too many of them become hard to manage. Try to strike a balance.

Monitor for dataset DAG execution delays: It is important to keep an eye out for delays since this could point to an issue in your scheduler configuration or system performance.

Task Completion Signals Dataset Update: It’s important to understand that Airflow doesn’t actually check the contents of a dataset (like a file or table). A dataset is considered “updated” only when a task that lists it in its outlets completes successfully. So even if the file wasn’t truly changed, Airflow will still assume it was. At Datacoves, you can also trigger a DAG externally using the Airflow API and an AWS Lambda Function to trigger your DAG once data lands in an S3 Bucket.
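
For the S3-to-Airflow pattern mentioned above, a Lambda function can translate the S3 notification into a call to Airflow's stable REST API (POST /api/v1/dags/{dag_id}/dagRuns). The sketch below is one possible shape, not the Datacoves implementation: the host, the DAG id, and the bearer-token authentication are all assumptions, and build_trigger_request is a hypothetical helper. Object keys arrive URL-encoded in S3 events, so the key is decoded first.

```python
import json
import urllib.parse
import urllib.request

AIRFLOW_URL = "https://airflow.example.com"  # placeholder host
DAG_ID = "data_aware_consumer_dag"           # DAG to trigger (assumed name)
API_TOKEN = "replace-me"                     # placeholder; auth method is an assumption


def build_trigger_request(event: dict) -> urllib.request.Request:
    """Turn an S3 "object created" event into a request that starts a DAG run."""
    record = event["Records"][0]["s3"]
    conf = {
        "bucket": record["bucket"]["name"],
        "key": urllib.parse.unquote_plus(record["object"]["key"]),
    }
    return urllib.request.Request(
        url=f"{AIRFLOW_URL}/api/v1/dags/{DAG_ID}/dagRuns",
        data=json.dumps({"conf": conf}).encode("utf-8"),
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {API_TOKEN}",
        },
    )


def lambda_handler(event: dict, context: object) -> dict:
    """Lambda entry point: send the trigger request and report the HTTP status."""
    with urllib.request.urlopen(build_trigger_request(event)) as response:
        return {"statusCode": response.status}
```

Passing the bucket and key in conf lets the triggered DAG know which object arrived without having to poll for it.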

Datacoves provides a scalable Managed Airflow solution and handles Airflow upgrades for you. This alleviates the stress of managing Airflow infrastructure so data teams can focus on their pipelines. Check out how Datadrive saved 200 hours yearly by choosing Datacoves.

Conclusion

The introduction of data-aware scheduling with Datasets in Apache Airflow is a big advancement in workflow orchestration. By enabling DAGs to trigger based on data updates rather than fixed time intervals, Airflow has become more adaptable and efficient in managing complex data pipelines.

By adopting Datasets, you can enhance the maintainability and scalability of your workflows, ensuring that tasks are executed exactly when the upstream data is ready. This not only optimizes resource utilization but also simplifies dependency management across DAGs.

Give it a try! 😎

The Hidden Costs of no code ETL Tools: 10 Reasons They Don’t Scale


"It looked so easy in the demo…"

— Every data team, six months after adopting a drag-and-drop ETL tool

If you lead a data team, you’ve probably seen the pitch: Slick visuals. Drag-and-drop pipelines. "No code required." Everything sounds great — and you can’t wait to start adding value with data!

At first, it does seem like the perfect solution: non-technical folks can build pipelines, onboarding is fast, and your team ships results quickly.

But our time in the data community has revealed the same pattern over and over: What feels easy and intuitive early on becomes rigid, brittle, and painfully complex later.

Let’s explore why no-code ETL tools can lead to serious headaches for your data preparation efforts.

What Is ETL (and Why It Matters)?

Before jumping into the why and the how, let’s start with the what.

When data is created in its source systems it is never ready to be used for analysis as is. It always needs to be massaged and transformed for downstream teams to gather any insights from the data. That is where ETL comes in. ETL stands for Extract, Transform, Load. This is the process of moving data from multiple sources, reshaping (transforming) it, and loading it into a system where it can be used for analysis.

At its core, ETL is about data preparation:

- Extracting raw data from different systems

- Transforming it — cleaning, standardizing, joining, and applying business logic

- Loading the refined data into a centralized destination like a data warehouse
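
The three steps above can be sketched in a few lines of Python using only the standard library. This is a toy illustration, not a production pipeline: an in-memory CSV stands in for a source system, SQLite stands in for the warehouse, and the column names are made up.

```python
import csv
import io
import sqlite3

# Extract: read raw rows (an in-memory CSV stands in for a source system).
raw = io.StringIO("order_id,amount,country\n1, 19.99 ,us\n2,5.00,DE\n3,,us\n")
rows = list(csv.DictReader(raw))

# Transform: drop rows without an amount, trim whitespace, standardize country codes.
clean = [
    (int(r["order_id"]), float(r["amount"]), r["country"].strip().upper())
    for r in rows
    if r["amount"].strip()
]

# Load: write the refined rows into a warehouse table (SQLite here).
warehouse = sqlite3.connect(":memory:")
warehouse.execute("CREATE TABLE orders (order_id INTEGER, amount REAL, country TEXT)")
warehouse.executemany("INSERT INTO orders VALUES (?, ?, ?)", clean)

# Downstream users can now query consistent, analysis-ready data.
countries = warehouse.execute(
    "SELECT country, COUNT(*) FROM orders GROUP BY country ORDER BY country"
).fetchall()
print(countries)  # [('DE', 1), ('US', 1)]
```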

Without ETL, you’re stuck with messy, fragmented, and unreliable data. Good ETL enables better decisions, faster insights, and more trustworthy reporting. Think of ETL as the foundation that makes dashboards, analytics, data science, machine learning, GenAI, and ultimately data-driven decision-making possible.

Now the real question is: how do we get from raw data to insights? That is where tooling comes into the picture. At a very high level, we group tools into two categories: code-based and no-code/low-code. Let’s look at each in a little more detail.

What Are Code-Based ETL Tools?

Code-based ETL tools require analysts to write scripts or code to build and manage data pipelines. This is typically done with languages like SQL or Python, often with specialized frameworks such as dbt that are tailored for data workflows.

Instead of clicking through a UI, users define the extraction, transformation, and loading steps directly in code — giving them full control over how data moves, changes, and scales.

Common examples of code-based ETL tooling include dbt (data build tool), SQLMesh, Apache Airflow, and custom-built Python scripts designed to orchestrate complex workflows.

While code-based tools often come with a learning curve, they offer serious advantages:

- Greater flexibility to handle complex business logic

- Better scalability as data volumes and pipeline complexity grow

- Stronger maintainability through practices like version control, testing, and modular development

Most importantly, code-based systems allow teams to treat pipelines like software, applying engineering best practices that make systems more reliable, auditable, and adaptable over time.
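
As a small illustration of what "treating pipelines like software" can look like, here is a transformation written as a plain function with an automated check next to it. The function name and the two-letter country rule are made up for this example. In a real project the test would usually live in its own file and run in CI on every change.

```python
def standardize_country(code: str) -> str:
    """Normalize a raw country value to an upper-case two-letter code."""
    cleaned = code.strip().upper()
    if len(cleaned) != 2 or not cleaned.isalpha():
        raise ValueError(f"unexpected country code: {code!r}")
    return cleaned


def test_standardize_country() -> None:
    """Checks that run automatically, so a bad change is caught before it ships."""
    assert standardize_country(" us ") == "US"
    try:
        standardize_country("USA")
    except ValueError:
        pass
    else:
        raise AssertionError("expected ValueError for a three-letter code")


test_standardize_country()
print("all checks passed")
```

Because the logic is a small, named unit, it can be reviewed in a pull request, reused across pipelines, and versioned like any other code.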

Building and maintaining robust ETL pipelines with code requires up-front work to set up CI/CD and developers who understand SQL or Python. Because of this investment in expertise, some teams are tempted to explore whether the grass is greener on the other side with no-code or low-code ETL tools that promise faster results with less engineering complexity. No hard-to-understand code, just drag and drop via nice-looking UIs. This is certainly less intimidating than seeing a SQL query.

What Are No-Code ETL Tools?

As you might have already guessed, no-code ETL tools let users build data pipelines without writing code. Instead, they offer visual interfaces—typically drag-and-drop—that “simplify” the process of designing data workflows.

These tools aim to make data preparation accessible to a broader audience, reducing complexity by removing coding. They create the impression that you don't need skilled engineers to build and maintain complex pipelines, allowing users to define transformations through menus, flowcharts, and configuration panels with no technical background required.

However, this perceived simplicity is misleading. No-code platforms often lack essential software engineering practices such as version control, modularization, and comprehensive testing frameworks. This can lead to a buildup of technical debt, making systems harder to maintain and scale over time. As workflows become more complex, the initial ease of use can give way to a tangled web of dependencies and configurations, challenging to untangle without skilled engineering expertise. Additional staff is needed to maintain data quality, manage growing complexity, and prevent the platform from devolving into a disorganized state. Over time, team velocity decreases due to layers of configuration menus.

Popular no-code ETL tools include Matillion, Talend, Azure Data Factory (ADF), Informatica, and Alteryx. They promise minimal coding while supporting complex ETL operations. However, it's important to recognize that while these tools can accelerate initial development, they may introduce challenges in long-term maintenance and scalability.

To help explain why best-in-class organizations typically avoid no-code tools, we've come up with 10 reasons that highlight their limitations.

🔟 Reasons GUI-Based ETL Tools Don’t Scale

1. Version control is an afterthought

Most no-code tools claim Git support, but it's often limited to unreadable exports like JSON or XML. This makes collaboration clunky, audits painful, and coordinated development nearly impossible.

Bottom Line: Scaling a data team requires clean, auditable change management — not hidden files and guesswork.

2. Reusability is limited

Without true modular design, teams end up recreating the same logic across pipelines. Small changes become massive, tedious updates, introducing risk and wasting your data team’s time. $$$

Bottom Line: When your team duplicates effort, innovation slows down.

3. Debugging is frustrating

When something breaks, tracing the root cause is often confusing and slow. Error messages are vague, logs are buried, and troubleshooting feels like a scavenger hunt. Again, wasting your data team’s time.

Bottom Line: Operational complexity gets hidden behind a "simple" interface — until it’s too late and it starts costing you money.

4. Testing is nearly impossible

Most no-code tools make it difficult (or impossible) to automate testing. Without safeguards, small changes can ripple through your pipelines undetected. Users will notice it in their dashboards before your data teams have their morning coffee.

Bottom Line: If you can’t trust your pipelines, you can’t trust your dashboards or reports.

5. They eventually require code anyway

As requirements grow, "no-code" often becomes "some-code." But now you’re writing scripts inside a platform never designed for real software development. This leads to painful uphill battles to scale.

Bottom Line: You get the worst of both worlds: the pain of code, without the power of code.

6. Poor team collaboration

Drag-and-drop tools aren’t built for teamwork at scale. Versioning, branching, peer review, and deployment pipelines (the basics of team productivity) are often afterthoughts. This makes it difficult for your teams to onboard, develop, and collaborate. Less innovation, fewer insights, and more money to deliver insights!

Bottom Line: Without true team collaboration, scaling people becomes as hard as scaling data.

7. Vendor lock-in is real

Your data might be portable, but the business logic that transforms it often isn't. Migrating away from a no-code tool can mean rebuilding your entire data stack from scratch. Want to switch tooling for best-in-class tools as the data space changes? Good luck.

Bottom Line: Short-term convenience can turn into long-term captivity.

8. Performance problems sneak up on you

When your data volume grows, you often discover that what worked for a few million rows collapses under real scale. Because the platform abstracts how work is done, optimization is hard and costly to fix later. Your data team will struggle to lower that bill more than they would with fine-tuned, code-based tools.

Bottom Line: You can’t improve what you can’t control.

9. Developers don’t want to touch them

Great analysts prefer tools that allow precision, performance tuning, and innovation. If your environment frustrates them, you risk losing your most valuable technical talent. Onboarding new people is expensive; you want to keep and cultivate the talent you do have.

Bottom Line: If your platform doesn’t attract builders, you’ll struggle to scale anything.

10. They trade long-term flexibility for short-term ease

No-code tools feel fast at the beginning. Setup is quick, results come fast, and early wins are easy to showcase. But as complexity inevitably grows, you’ll face rigid workflows, limited customization, and painful workarounds. These tools are built for simplicity, not flexibility, and that becomes a real problem when your needs evolve. Simple tasks like moving a few fields or renaming columns stay easy, but once you need complex business logic, large transformations, or multi-step workflows, it is a different matter. What once sped up delivery now slows it down, as teams waste time fighting platform limitations instead of building what the business needs.

Bottom Line: Early speed means little if you can’t sustain it. Scaling demands flexibility, not shortcuts.

Conclusion

No-code ETL tools often promise quick wins: rapid deployment, intuitive interfaces, and minimal coding. While these features can be appealing, especially for immediate needs, they can introduce challenges at scale.

As data complexity grows, the limitations of no-code solutions—such as difficulties in version control, limited reusability, and challenges in debugging—can lead to increased operational costs and hindered team efficiency. These factors not only strain resources but can also impact the quality and reliability of your data insights.

It's important to assess whether a no-code ETL tool aligns with your long-term data strategy. Always consider the trade-offs between immediate convenience and future scalability. Engaging with your data team to understand their needs and the potential implications of tool choices can provide valuable insights.

What has been your experience with no-code ETL tools? Have they met your expectations, or have you encountered unforeseen challenges?

Noel Gomez

Datacoves Co-founder | 15+ Years Data Platform Expert. Solving enterprise data challenges quickly with dbt & Airflow.
