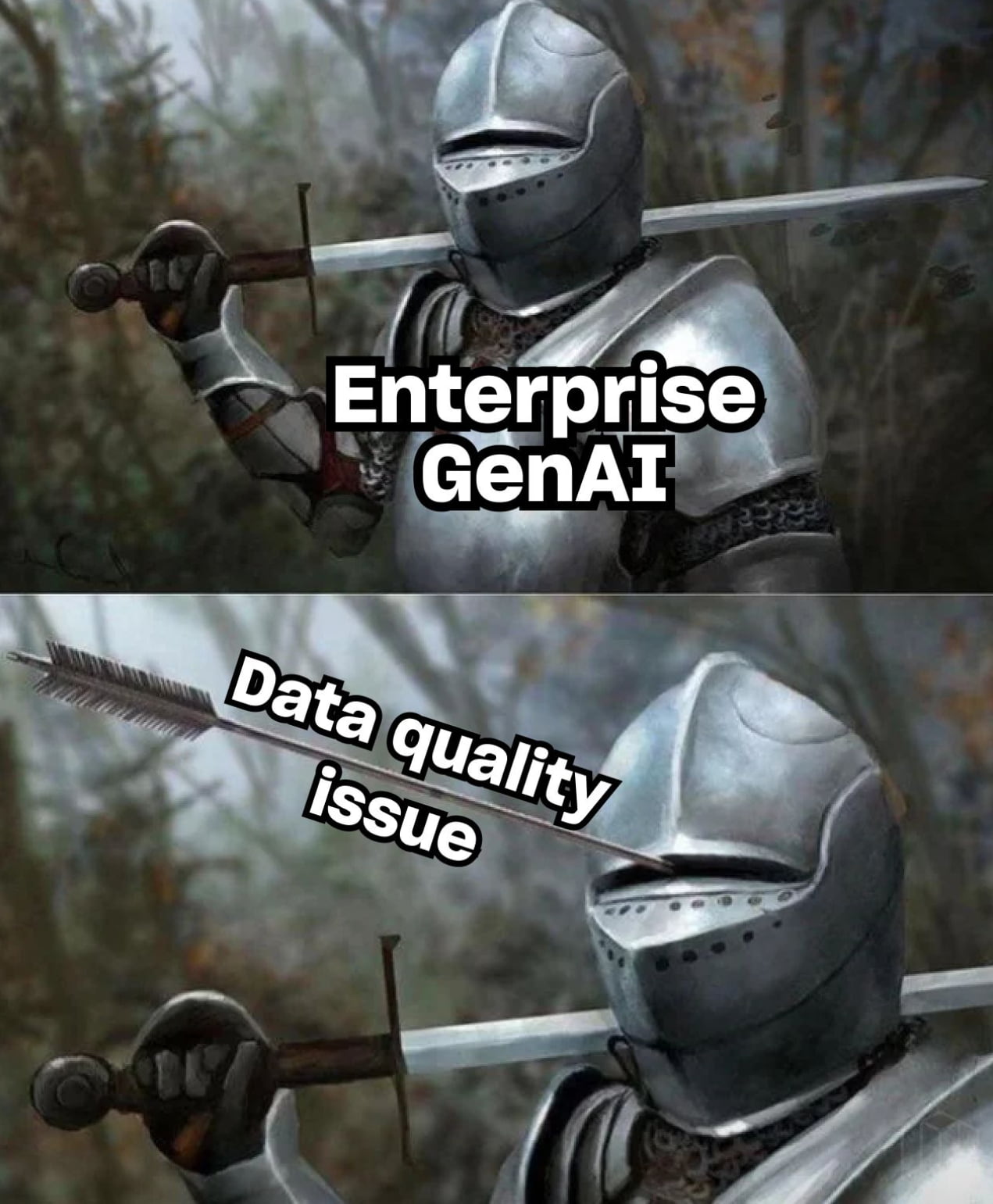

Data is in the spotlight as companies everywhere realize its true potential. With big initiatives like GenAI and increasingly sophisticated data ecosystems, ensuring data quality is not optional but a required investment for businesses and analysts worldwide. Some people are learning the hard way that these initiatives only deliver the promised results on top of a stable data foundation.

While there are many great tools out there, the spotlight on open source tools has never been brighter. Open source software offers transparency, adaptability, and community-driven enhancements that are crucial in the rapidly evolving data landscape. This article covers 5 open source data quality tools and is current as of April 2024, so if that is something that interests you, stick around.

What is data quality?

First things first, what is data quality? There are many definitions, but data is generally considered high quality if it is fit for its intended uses in operations, decision-making, and planning. In other words, data quality refers to the data's accuracy, completeness, reliability, relevance, and how up-to-date it is. In the context of data-driven decision-making, high-quality data is crucial because it directly impacts the accuracy of insights and the effectiveness of decisions. It is our data foundation.

High-quality data should be:

Accurate: Data that is free from errors and discrepancies.

Complete: Data that covers the necessary breadth and depth needed by the business.

Reliable: Data that has no missing elements and is consistently represented and sourced.

Relevant: Data that is applicable to the context and purposes for the business.

Current: Data that is up-to-date and timely for its purpose.

Understanding what is needed for data quality is the first step toward recognizing the importance of these tools and practices that maintain or enhance this quality.

What should you track for good data quality?

Now we know what constitutes high-quality data, but what do we need to monitor to ensure that our data is high quality? The good news is that these metrics tend to be universal. To maintain high data quality, several metrics should be monitored regularly:

Accuracy: Ensure that your data correctly represents reality or the source from which it came.

Completeness: Check for missing values or data segments that could lead to incorrect analysis or conclusions.

Consistency: Data across different systems or platforms should match and be consistent.

Timeliness: Data should be updated and available in a timeframe that aligns with its intended use.

Validity: Data should adhere to the relevant rules, such as data formats and value ranges.

Uniqueness: No duplicates should be present unless necessary, ensuring each entry is unique.

Integrity: Relationships between datasets and records should be maintained (for example, every reference to another record should point to a record that exists) so that data stays accurate and consistent.
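Several of the metrics above can be expressed as small, mechanical checks. The following is a minimal sketch in plain Python, assuming the data is already loaded as a list of dictionaries. The sample rows, column names, and helper functions are all hypothetical and exist only for illustration; a real project would normally rely on one of the tools covered later in this article.

```python
from datetime import datetime, timedelta

# Hypothetical sample rows; the column names are illustrative only.
rows = [
    {"id": 1, "email": "a@example.com", "age": 34, "updated_at": datetime(2024, 4, 1)},
    {"id": 2, "email": None, "age": 29, "updated_at": datetime(2024, 4, 2)},
    {"id": 2, "email": "c@example.com", "age": -5, "updated_at": datetime(2024, 1, 15)},
]


def completeness(rows, column):
    """Completeness: share of rows where the column is present and not None."""
    if not rows:
        return 1.0
    filled = sum(1 for r in rows if r.get(column) is not None)
    return filled / len(rows)


def duplicate_keys(rows, key):
    """Uniqueness: key values that appear more than once."""
    seen, dupes = set(), set()
    for r in rows:
        value = r[key]
        if value in seen:
            dupes.add(value)
        seen.add(value)
    return dupes


def invalid_rows(rows, column, is_valid):
    """Validity: rows whose non-null value fails the given rule."""
    return [r for r in rows if r.get(column) is not None and not is_valid(r[column])]


def stale_rows(rows, column, now, max_age):
    """Timeliness: rows last updated longer ago than max_age."""
    return [r for r in rows if now - r[column] > max_age]


now = datetime(2024, 4, 3)  # fixed "current" time so the example is reproducible
print("email completeness:", completeness(rows, "email"))
print("duplicate ids:", duplicate_keys(rows, "id"))
print("invalid ages:", len(invalid_rows(rows, "age", lambda a: 0 <= a <= 120)))
print("stale rows:", len(stale_rows(rows, "updated_at", now, timedelta(days=30))))
```

Each function returns either a ratio or the offending values rather than a bare pass/fail, which makes it easier to decide on a threshold later (for instance, tolerating a small share of missing emails but no duplicate ids).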

By tracking these metrics, organizations can set up the essential data foundation and significantly improve the trustworthiness and utility of their data. This will lead to better outcomes and insights that can support great data initiatives of the future.

Since it is essential to track these metrics, companies are searching for the best tools to help them improve their data quality. The list further down covers open source tools that can be leveraged for exactly that.

Why open source?

Before we jump into the tool list, you may have noticed that a quick Google search for this topic will give you many different lists. How is our list different? Well, we are focusing on open source tools. There are many great tools out there, both paid and “free”. We put quotes around free because there is no such thing as free; there are always hidden costs (hours worked) for setup and maintenance. However, regardless of the hidden costs, we made this open source tool list because we believe in the following benefits of open source tools:

Transparency: Open source tools offer complete transparency in their operations and algorithms. Users can inspect, modify, and improve the code, which enhances trust and reliability.

Community: Open source projects benefit from the collective intelligence of a global community. This not only accelerates innovation and bug resolution but also provides a large pool of knowledge and support.

Flexibility: With open source, organizations are not locked into proprietary systems, allowing them to tailor tools to their specific needs and integrate them seamlessly into their existing environments.

Cost-effectiveness: While open source doesn't always mean free, it significantly reduces costs associated with licensing fees and vendor lock-in, making cutting-edge tools accessible to everyone.

Quality and Security: Continuous contributions and scrutiny by the community mean that open source tools often meet high standards of quality and security, with issues being identified and addressed rapidly.

Our selection of open source data quality tools is grounded in rigorous open source criteria. We believe that the strength of an open source project lies not just in its ability to solve complex problems but also in its community, transparency, and commitment to ongoing improvement. When compiling this list, we considered factors such as active community engagement, frequency of updates, the quality of the documentation, and ease of contribution. This ensures that the tools recommended not only meet high standards of performance and reliability but also embody the principles that make open source software a valuable asset to the data quality landscape. So without further ado, let's jump into our list.

Open source data quality tool list

dbt Core

Self managed or in Datacoves

Primary Language: SQL / YAML

Purpose & Features: dbt Core is an open source tool that allows data analysts and engineers to transform data in their data warehouses by writing dynamic SQL queries, which dbt then converts into tables and views. It also supports version control, testing, and documentation, which helps maintain data integrity and reliability.

For data quality, dbt Core has some out-of-the-box data tests, which can be extended through custom-made tests or by using libraries such as dbt-expectations and elementary. Testing is done by configuring tests in YAML files or by writing custom SQL tests. However, integrating dbt Core into your data stack can be a big task, especially when it comes to scheduling. A managed dbt Core platform such as Datacoves could be a great option for saving time and money. While dbt handles only the "T" in ELT, Datacoves’ managed dbt Core platform ensures that the entire ELT process is smooth and interconnected, allowing your team to concentrate on deriving insights from the data. There are also dbt alternatives on the market that handle the "T" in the ELT process and can be explored.

Who it's for: Best for teams using SQL who want to transform data directly in the warehouse and follow software development best practices, including unit testing, in their data pipelines.
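In dbt itself, tests are declared in YAML or written as SQL, and a test passes when its query returns zero failing rows. To show the idea without a warehouse, here is a simplified plain-Python sketch of the logic behind two of dbt Core's built-in generic tests, `accepted_values` and `relationships`. The Python functions, table contents, and column names are hypothetical stand-ins written for this illustration; they are not dbt's API.

```python
def accepted_values(rows, column, allowed):
    """Return rows whose non-null column value is outside the allowed set."""
    return [r for r in rows if r[column] is not None and r[column] not in allowed]


def relationships(child_rows, child_column, parent_rows, parent_column):
    """Return child rows whose non-null foreign key has no matching parent row."""
    parent_keys = {p[parent_column] for p in parent_rows}
    return [
        c for c in child_rows
        if c[child_column] is not None and c[child_column] not in parent_keys
    ]


# Hypothetical tables for illustration.
customers = [{"id": 10}, {"id": 11}]
orders = [
    {"id": 1, "customer_id": 10, "status": "shipped"},
    {"id": 2, "customer_id": 11, "status": "lost"},    # unexpected status
    {"id": 3, "customer_id": 99, "status": "placed"},  # no such customer
]

bad_status = accepted_values(orders, "status", {"placed", "shipped", "returned"})
orphans = relationships(orders, "customer_id", customers, "id")

# As in dbt, an empty result means the test passes.
print("accepted_values failures:", [o["id"] for o in bad_status])
print("relationships failures:", [o["id"] for o in orphans])
```

Returning the failing rows rather than a boolean mirrors how a failed test is usually investigated: the first question is which records broke the rule.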

Soda Core

Primary Language: YAML

Purpose & Features: Soda Core is the open source component that allows users to define data quality checks in code and integrate them into workflows.

Who it's for: Teams that need data quality checks integrated into their existing Python workflows or data pipelines.

Great Expectations

Primary Language: Python

Purpose & Features: Great Expectations is a data quality platform that lets you create data tests, documentation, and profiles automatically. It integrates into existing data processing pipelines to validate data against expectations (comparable to unit tests). You can collaborate with nontechnical stakeholders by sharing the Data Docs. Data Docs are Expectations, Validation Results, and other metadata translated into a human-readable format, as seen in the image below.

Who it's for: Data teams looking for a Pythonic way to enforce data quality rules and create automated data documentation.

Deequ

Primary Language: Scala (for Apache Spark)

Purpose & Features: Deequ is an open source tool by Amazon with which you can define "unit tests" (columnar or row level) for large-scale data within the Spark ecosystem. It allows for automated checks of data quality metrics such as completeness, uniqueness, and conformity. This enables data teams to find errors early, before the data is consumed downstream. You can use Deequ to define your assumptions about the data in unit tests and catch any data that does not meet them. This tool works on tabular data such as CSV files, database tables, logs, and flattened JSON files.

Who it's for: Data engineers and scientists working with big data in Spark (billions of rows), particularly those focused on maintaining data quality at scale.
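The "unit tests for data" idea can be sketched as a list of named assumptions that are evaluated against a dataset and reported as passed or failed. The example below is a minimal plain-Python illustration of that pattern only. It is not Deequ's API (Deequ is a Scala library that runs on Spark), and the `Check` class, `run_checks` function, and sample rows are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Check:
    """A named assumption about a dataset (hypothetical helper, not Deequ)."""
    name: str
    predicate: Callable[[list], bool]


def run_checks(rows, checks):
    """Evaluate every check against the rows and return {check name: passed}."""
    return {check.name: check.predicate(rows) for check in checks}


# Hypothetical sample data.
rows = [
    {"id": 1, "price": 9.5},
    {"id": 2, "price": -1.0},  # violates the non-negative assumption
    {"id": 3, "price": 4.0},
]

checks = [
    Check("has rows", lambda rs: len(rs) > 0),
    Check("id is complete", lambda rs: all(r["id"] is not None for r in rs)),
    Check("id is unique", lambda rs: len({r["id"] for r in rs}) == len(rs)),
    Check("price is non-negative", lambda rs: all(r["price"] >= 0 for r in rs)),
]

results = run_checks(rows, checks)
failed = [name for name, passed in results.items() if not passed]
print("failed checks:", failed)
```

Keeping the assumptions in one declarative list means a pipeline can stop, or quarantine a batch, as soon as any check fails, before the data reaches downstream consumers.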

Data Diff

Primary Language: You don’t manually write data quality tests, but as you make changes to your SQL, Data Diff will work its magic.

Purpose & Features: This tool is a little different from the rest because you're not exactly writing tests to catch data quality issues. Instead, this open source Python package by Datafold lets you do development testing by spotting the differences between tables whenever you tweak your code. It's a great way to compare what's happening in your production data against your development changes, helping you see directly how those code changes are playing out in the data.

Who it's for: Data engineers and teams who need to ensure that changes in data processing and ETL logic do not negatively affect data quality.
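Conceptually, diffing two versions of a table means matching rows by primary key and reporting what was added, removed, or changed. The sketch below shows that idea in plain Python on small in-memory tables. It is an illustration of the concept only, not the Data Diff package's API or algorithm, and the `diff_tables` function and sample rows are hypothetical.

```python
def diff_tables(prod_rows, dev_rows, key):
    """Compare two tables by primary key and list added, removed, and changed keys."""
    prod = {r[key]: r for r in prod_rows}
    dev = {r[key]: r for r in dev_rows}
    return {
        "added": sorted(dev.keys() - prod.keys()),      # only in development
        "removed": sorted(prod.keys() - dev.keys()),    # only in production
        "changed": sorted(
            k for k in prod.keys() & dev.keys() if prod[k] != dev[k]
        ),                                              # same key, different values
    }


# Hypothetical production table and the same table rebuilt from a development branch.
prod_rows = [
    {"id": 1, "amount": 100},
    {"id": 2, "amount": 250},
    {"id": 3, "amount": 75},
]
dev_rows = [
    {"id": 2, "amount": 260},  # value changed by the code change
    {"id": 3, "amount": 75},
    {"id": 4, "amount": 40},   # new row
]

print(diff_tables(prod_rows, dev_rows, "id"))
```

An in-memory comparison like this only works for small tables; for large warehouse tables a dedicated tool is the practical choice.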

Choose the right open source data quality tool for your use case

The concept of "the best" for data quality tools is inherently tied to specific use cases. What might be an ideal solution for one organization could be less effective for another, depending on the unique challenges and requirements each faces.

Before you dive into a tool, it's crucial to understand your organization's specific data quality challenges. Are you dealing with high volumes of data, requiring scalability? Or are your main issues related to data consistency and accuracy in a smaller, more controlled dataset? Identifying your primary use case will help you navigate the tools listed above and select the one that best fits your situation.

Here are 6 tips to help you make an informed choice for your specific needs:

1. Assess Your Data Quality Needs:

Identify the primary issues you're facing with your data. Are you struggling with incomplete data, inconsistencies, outdated information, or data that's not in the right format? Understanding your main challenges will guide you toward a tool that specializes in addressing those specific problems. Once you understand your data quality challenges and objectives, match these with the strengths of the tools listed above.

2. Consider Your Technical Environment:

Evaluate the technical stack you are currently using. Some data quality tools are better suited for certain environments or integrate more seamlessly with specific databases, data lakes, or processing frameworks. Choose a tool that aligns with your existing infrastructure to reduce integration headaches.

3. Evaluate Community and Support:

The strength of an open-source tool lies in its community. Look for a tool with an active community, which is evident through regular updates, vibrant forums, and extensive documentation. A strong community can provide invaluable support, from troubleshooting to best practices.

4. Check for Flexibility and Scalability:

Your data needs will evolve, so it’s important to choose a tool that is flexible and can scale with your business. Assess the tool’s ability to handle different data volumes, types, and sources. A good open-source tool should not only solve your current data quality issues but also adapt to future challenges.

5. Review Security and Compliance Features:

Data security and compliance are imperative. Be sure the tool complies with data protection regulations and offers security features to protect your data. This is especially important if you're dealing with sensitive or personal information.

6. Test Drive the Tool:

Finally, don’t hesitate to get your hands dirty. Most open-source tools are free to use, so take advantage of this by testing the tool with your data. This will give you a clear idea of the tool’s usability, effectiveness, and fit with your use case. Be sure to go into this with an open mind to get the most out of the tool.

Conclusion

In the era of generative AI and other lofty initiatives, high-quality data is not just an option but a necessity, and embracing these open source data quality tools can significantly enhance the reliability and accuracy of your data. Remember, the "best" tool is the one that aligns closely with your specific use case, offering the features and flexibility your team needs to effectively tackle your data quality challenges; it could very well be a combination of these tools. Whether you are in the world of SQL, Python, or any other programming language, there is a tool tailored to your needs. Consider factors such as ease of integration into your current data ecosystem, the learning curve for your team, and the level of community support available.