The Databricks AI Summit 2025 revealed a major shift toward simpler, AI-ready, and governed data platforms. From no-code analytics to serverless OLTP and agentic workflows, the announcements show Databricks is building for a unified future.

In this post, we break down the six most impactful features announced at the summit and what they mean for the future of data teams.

1. Databricks One and Genie: Making Analytics Truly Accessible

Databricks One (currently in private preview) introduces a no-code analytics platform aimed at democratizing access to insights across the organization. Powered by Genie, users can now interact with business data through natural language Q&A, no SQL or dashboards required. By lowering the barrier to entry, tools like Genie can drive better, faster decision-making across all functions.

Datacoves Take: As with any AI we have used to date, having a solid foundation is key. AI cannot resolve ambiguous metrics or make up for missing knowledge. As we have mentioned, there are some dangers in trusting AI, and these caveats still exist.

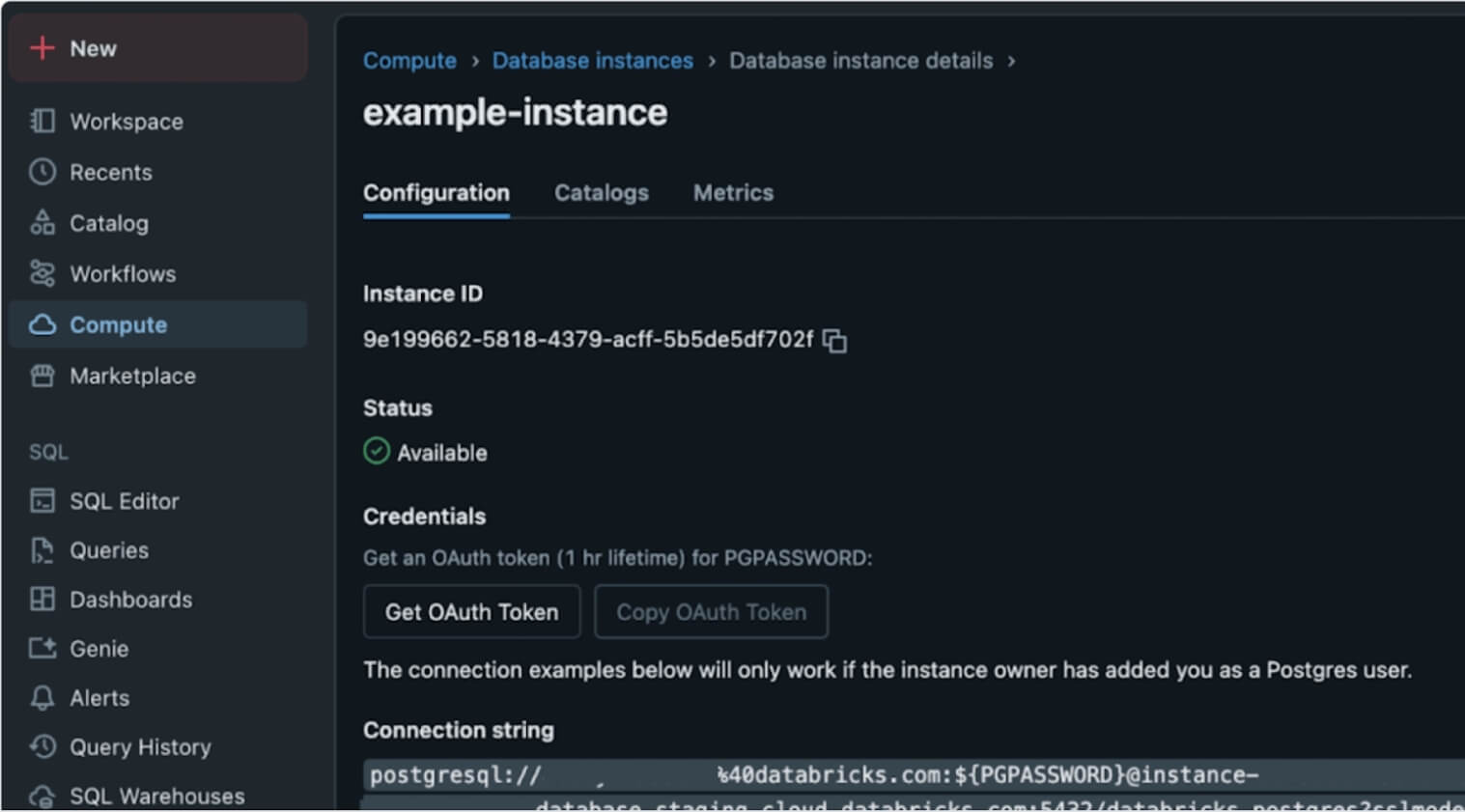

2. Lakebase: A Serverless Postgres for the Lakehouse

In a bold move, Databricks launched Lakebase, a Postgres-compatible, serverless OLTP database natively integrated into the lakehouse. Built atop the foundations laid by the Neon acquisition, Lakebase reimagines transactional workloads within the unified lakehouse architecture. This is more than just a database release; it’s a structural shift that brings transactional (OLTP) and analytical (OLAP) workloads together, unlocking powerful agentic and AI use cases without architectural sprawl.

Datacoves Take: We see both Databricks and Snowflake integrating Postgres into their offerings. DuckLake is also demonstrating a simpler future for Iceberg catalogs. Postgres has a strong future ahead, and the unification of OLAP and OLTP seems certain.

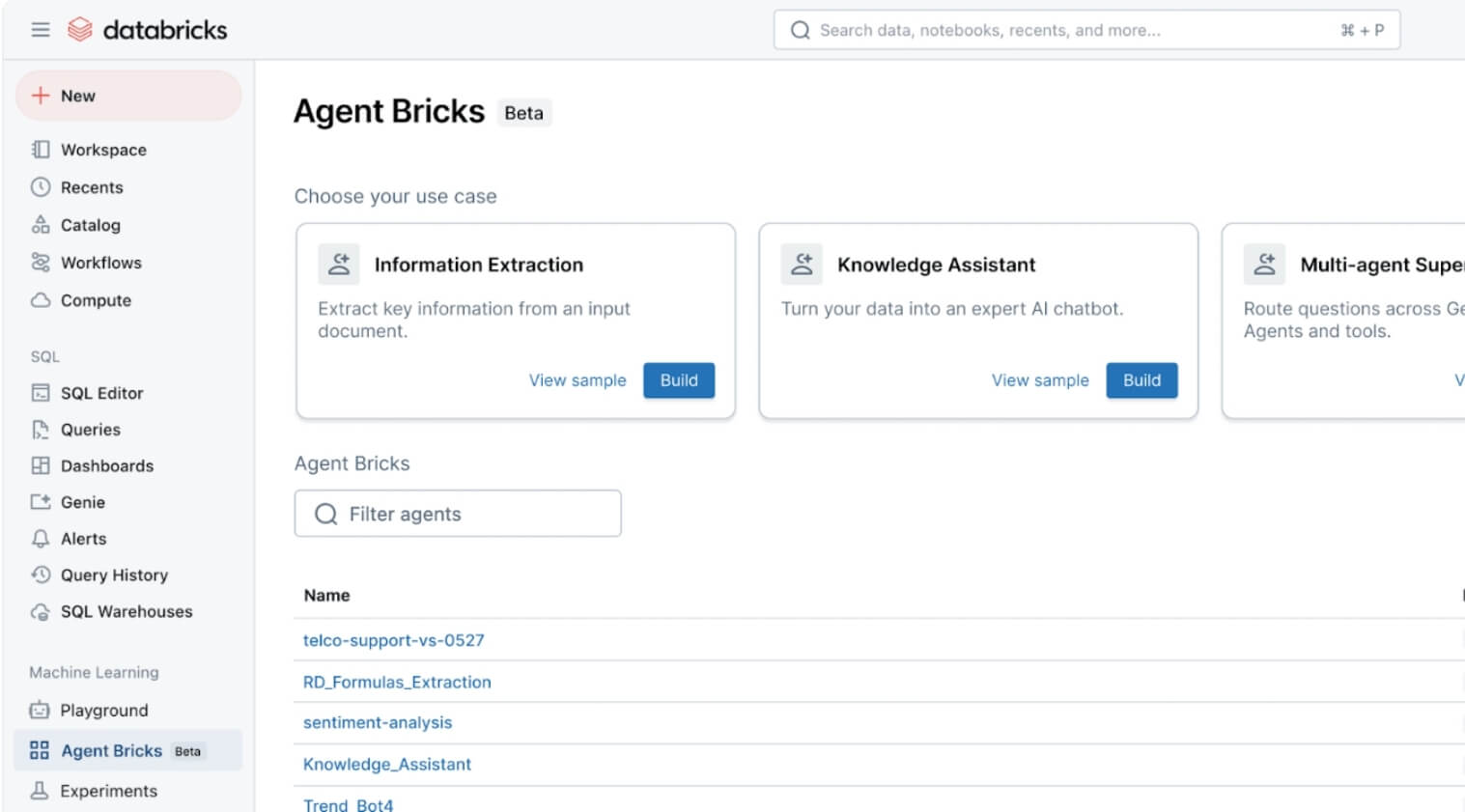

3. Agent Bricks: From Prototype to Enterprise-Ready AI Agents

With the introduction of Agent Bricks, Databricks is making it easier to build, evaluate, and operationalize agents for AI-driven workflows. What sets this apart is the use of built-in “judges”: LLMs that automatically assess agent quality and performance. This moves agents from hackathon demos into the enterprise spotlight, giving teams a foundation to develop production-grade AI assistants grounded in company data and governance frameworks.

Datacoves Take: This looks interesting, and the key here still lies in having a strong data foundation with good processes. Reproducibility is also key. Testing and proving that the right actions are performed will be important for any organization implementing this feature.
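
To make the testing point concrete, here is a minimal sketch of a repeatable agent evaluation. It does not use the Agent Bricks API; every name in it (`Case`, `rule_based_judge`, `toy_agent`, `evaluate`) is hypothetical, and the judge is a simple rule standing in for an LLM judge so that the result is the same on every run.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Case:
    question: str
    expected_action: str  # the action a correct agent should take


def rule_based_judge(expected: str, actual: str) -> bool:
    """Stand-in for an LLM judge: passes only when the actions match."""
    return expected.strip().lower() == actual.strip().lower()


def evaluate(
    agent: Callable[[str], str],
    cases: List[Case],
    judge: Callable[[str, str], bool] = rule_based_judge,
) -> float:
    """Return the fraction of cases where the judge accepts the agent's action."""
    if not cases:
        raise ValueError("at least one evaluation case is required")
    passed = sum(judge(c.expected_action, agent(c.question)) for c in cases)
    return passed / len(cases)


def toy_agent(question: str) -> str:
    """Hypothetical agent: handles refund requests, escalates everything else."""
    if "refund" in question.lower():
        return "open_refund_ticket"
    return "escalate_to_human"


# A small, version-controlled set of cases acts as a regression suite.
CASES = [
    Case("I want a refund for my order", "open_refund_ticket"),
    Case("My invoice looks wrong", "escalate_to_human"),
    Case("Cancel my subscription", "cancel_subscription"),
]

score = evaluate(toy_agent, CASES)
print(f"pass rate: {score:.2f}")
```

Keeping the cases fixed and the judge swappable is the design choice that matters here: the same suite can be rerun after every prompt, model, or data change, and a drop in the pass rate flags a regression before it reaches users.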

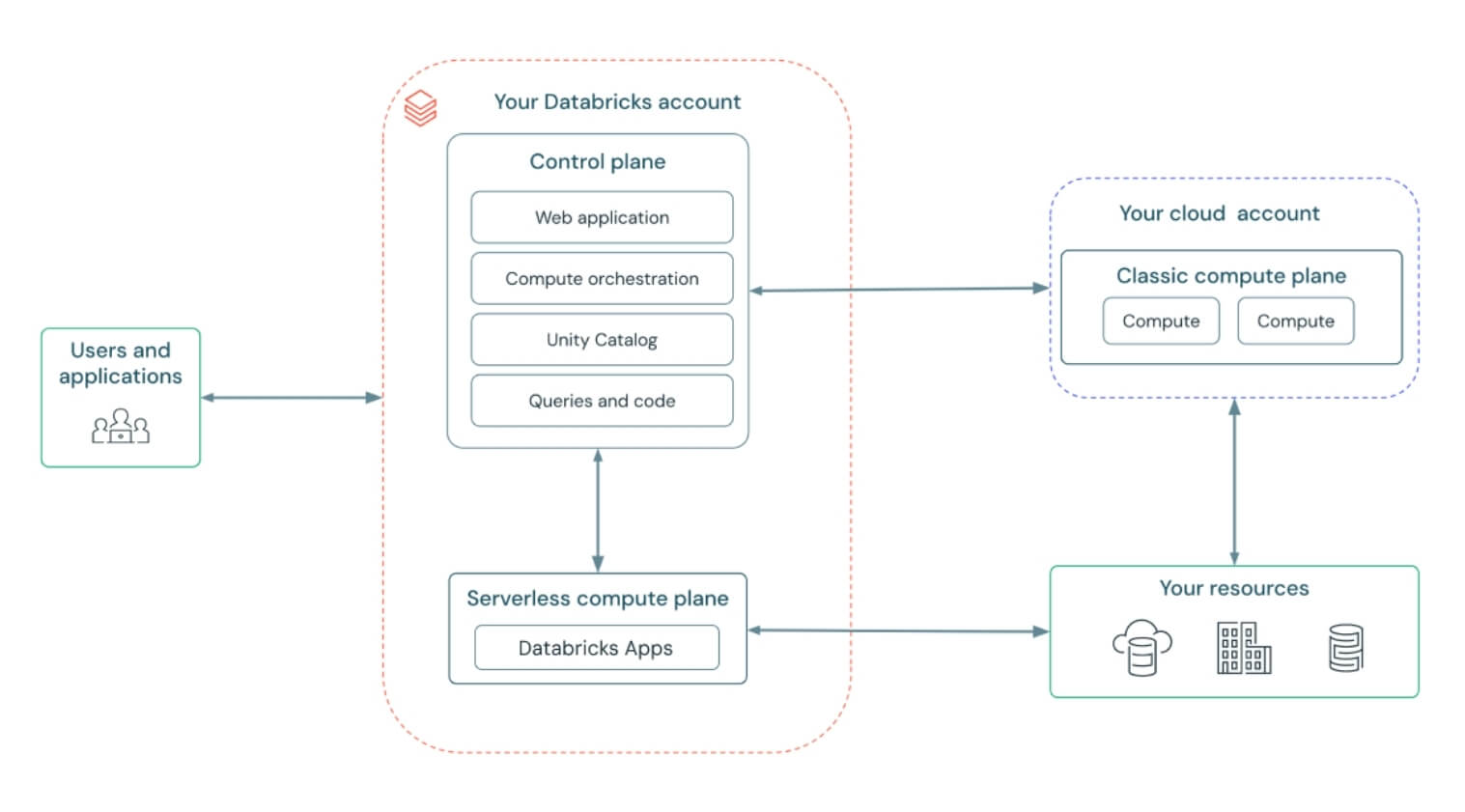

4. Databricks Apps: Interfaces That Inherit Governance by Design

Databricks introduced Databricks Apps, allowing developers to build custom user interfaces that automatically respect Unity Catalog permissions and metadata. A standout demo showed glossary terms appearing inline inside Chrome, giving business users governed definitions directly in the tools they use every day. This bridges the gap between data consumers and governed metadata, making governance feel less like overhead and more like embedded intelligence.

Datacoves Take: Metadata and catalogs are important for AI, so we see both Databricks and Snowflake investing in this area. As with any of these announcements, technology is not the only thing that needs to change in the organization. Change management is also important. Without proper stewardship, ownership, and review processes, apps can’t provide the experience promised.

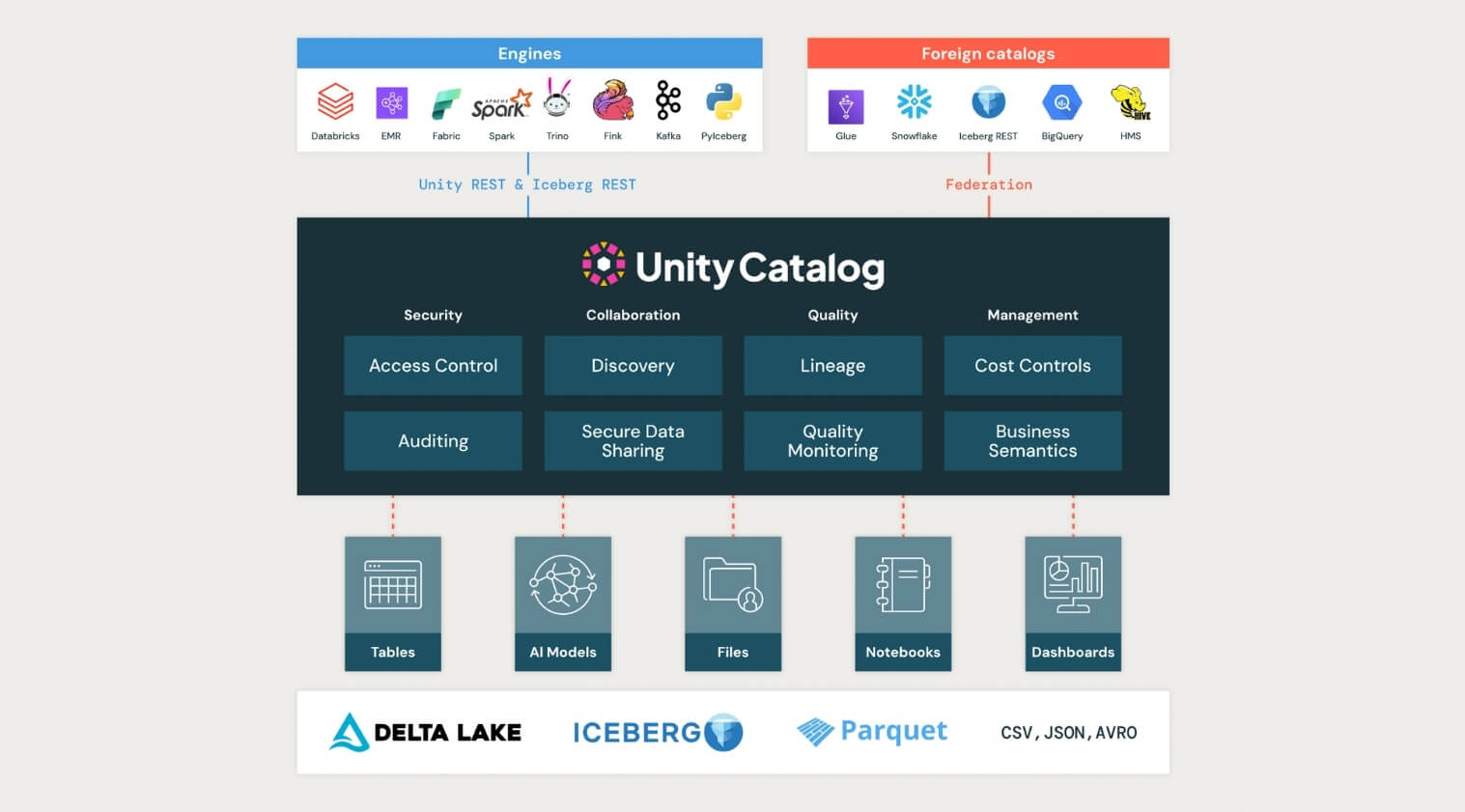

5. Unity Catalog Enhancements: Open Governance at Scale

Unity Catalog took a major step forward at the Databricks AI Summit 2025, now supporting managed Apache Iceberg tables, cross-engine interoperability, and introducing Unity Catalog Metrics to define and track business logic across the organization.

This kind of standardization is critical for teams navigating increasingly complex data landscapes. By supporting both Iceberg and Delta formats, enabling two-way sync, and contributing to the open-source ecosystem, Unity Catalog is positioning itself as the true backbone for open, interoperable governance.

Datacoves Take: The Iceberg data format has momentum behind it; now it is up to the platforms to enable true interoperability. Organizations are expecting a future where a table can be written to and read from any platform. DuckLake is also getting in the game, simplifying how metadata is managed and enabling multi-table transactions. It will be interesting to see if Unity and Polaris take some of the DuckLake learnings and integrate them in the next few years.

6. Forever-Free Tier and $100M AI Training Fund

In a community-building move, Databricks introduced a forever-free edition of the platform and committed $100 million toward AI and data training. This massive investment creates a pipeline of talent ready to use and govern AI responsibly. For organizations thinking long-term, this is a wake-up call: governance, security, and education need to scale with AI adoption, not follow behind.

Datacoves Take: This feels like a good way to get more people to try Databricks without a big commitment. Hopefully, competitors take note and do the same. This will benefit the entire data community.

Read the full post from Databricks here:

https://www.databricks.com/blog/summary-dais-2025-announcements-through-lens-games

What Data Leaders Must Do Next After Databricks AI Summit 2025

Democratizing Data Access Is Critical

With tools like Databricks One and Genie enabling no-code, natural language analytics, data leaders must prioritize making insights accessible beyond technical teams to drive faster, data-informed decisions at every level.

Simplify and Unify Data Architecture

Lakebase’s integration of transactional and analytical workloads signals a move toward simpler, more efficient data stacks. Leaders should rethink their architectures to reduce complexity and support real-time, AI-driven applications.

Operationalize AI Agents for Business Impact

Agent Bricks and built-in AI judges highlight the shift from experimental AI agents to production-ready, measurable workflows. Data leaders need to invest in frameworks and governance to safely scale AI agents across use cases.

Governance Must Span Formats and Engines

Unity Catalog’s expanded support for Iceberg, Delta, and cross-engine interoperability emphasizes the need for unified governance frameworks that handle diverse data formats while maintaining business logic and compliance.

Invest in Talent and Training to Keep Pace

The launch of a free tier and $100M training fund underscores the growing demand for skilled data and AI practitioners. Data leaders should plan for talent development and operational readiness to fully leverage evolving platforms.

The Road Ahead: Operationalizing AI the Datacoves Way

The Databricks AI Summit 2025 signals a fundamental shift: from scattered tools and isolated workflows to unified, governed, and AI-native platforms. It’s not just about building smarter systems; it’s about making those systems accessible, efficient, and scalable for the entire organization.

While these innovations are promising, putting them into practice takes more than vision; it requires infrastructure that balances speed, control, and usability.

That’s where Datacoves comes in.

Our platform accelerates the adoption of modern tools like dbt, Airflow, and emerging AI workflows, without the overhead of managing complex environments. We help teams operationalize best practices from day one, reducing total cost of ownership while enabling faster delivery, tighter governance, and AI readiness at scale. Datacoves supports Databricks, Snowflake, BigQuery, and any data platform with a dbt adapter. We believe in an open and interoperable future where tools are integrated without increasing vendor lock-in. Talk to us to find out more.

Want to learn more? Book a demo with Datacoves.
