"It looked so easy in the demo…"

— Every data team, six months after adopting a drag-and-drop ETL tool

If you lead a data team, you’ve probably seen the pitch: Slick visuals. Drag-and-drop pipelines. "No code required." Everything sounds great — and you can’t wait to start adding value with data!

At first, it does seem like the perfect solution: non-technical folks can build pipelines, onboarding is fast, and your team ships results quickly.

But our time in the data community has revealed the same pattern over and over: What feels easy and intuitive early on becomes rigid, brittle, and painfully complex later.

Let’s explore why no-code ETL tools can lead to serious headaches for your data preparation efforts.

Before jumping into the why and the how, let’s start with the what.

When data is created in its source systems, it is almost never ready for analysis as is. It needs to be cleaned and transformed before downstream teams can gather insights from it. That is where ETL comes in. ETL stands for Extract, Transform, Load: the process of moving data from multiple sources, reshaping (transforming) it, and loading it into a system where it can be used for analysis.
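The three steps can be sketched in a few lines of standard-library Python. This is a toy illustration, not a production pattern. The CSV contents, column names, and cleaning rules are hypothetical, and an in-memory SQLite database stands in for a real warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical raw export from a source system: inconsistent casing,
# stray whitespace, and amounts stored as text.
RAW_ORDERS_CSV = """order_id,customer,amount
1, Alice ,10.50
2,bob,7.25
3, ALICE,5.00
"""


def extract(raw_csv: str) -> list[dict]:
    """Extract: read rows from the source (here, an in-memory CSV string)."""
    return list(csv.DictReader(io.StringIO(raw_csv)))


def transform(rows: list[dict]) -> list[tuple]:
    """Transform: standardize customer names and cast fields to proper types."""
    return [
        (int(r["order_id"]), r["customer"].strip().title(), float(r["amount"]))
        for r in rows
    ]


def load(rows: list[tuple], conn: sqlite3.Connection) -> None:
    """Load: write the cleaned rows into an analysis-ready table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders (order_id INTEGER, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_ORDERS_CSV)), conn)

# " Alice " and " ALICE" now roll up to the same customer.
print(conn.execute(
    "SELECT customer, SUM(amount) FROM orders GROUP BY customer ORDER BY customer"
).fetchall())
```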

At its core, ETL is about data preparation.

Without ETL, you’re stuck with messy, fragmented, and unreliable data. Good ETL enables better decisions, faster insights, and more trustworthy reporting. Think of ETL as the foundation that makes dashboards, analytics, data science, machine learning, GenAI, and data-driven decision-making possible.

Now the real question is: how do we get from raw data to insights? That is where tooling comes into the picture. At a very high level, we group tools into two categories: code-based and no-code/low-code. Let’s look at each in a little more detail.

Code-based ETL tools require analysts to write scripts or code to build and manage data pipelines. This is typically done in languages like SQL or Python, often with specialized frameworks such as dbt that are tailored for data workflows.

Instead of clicking through a UI, users define the extraction, transformation, and loading steps directly in code — giving them full control over how data moves, changes, and scales.

Common examples of code-based ETL tooling include dbt (data build tool), SQLMesh, Apache Airflow, and custom-built Python scripts designed to orchestrate complex workflows.

While code-based tools often come with a learning curve, they offer serious advantages.

Most importantly, code-based systems allow teams to treat pipelines like software, applying engineering best practices that make systems more reliable, auditable, and adaptable over time.
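Here is a small illustration of treating pipelines like software. The transformation is written as plain functions, and unit tests cover it that could run automatically in CI (for example, with pytest). The function names and cleaning rules are hypothetical.

```python
def normalize_customer_name(name: str) -> str:
    """Trim whitespace and standardize capitalization."""
    return name.strip().title()


def deduplicate_orders(rows: list[dict]) -> list[dict]:
    """Keep the first occurrence of each order_id, preserving input order."""
    seen = set()
    unique = []
    for row in rows:
        if row["order_id"] not in seen:
            seen.add(row["order_id"])
            unique.append(row)
    return unique


# Test functions like these are discovered and run by pytest on every change,
# so a broken transformation fails the build before it reaches a dashboard.
def test_normalize_customer_name():
    assert normalize_customer_name("  ALICE ") == "Alice"
    assert normalize_customer_name("bob") == "Bob"


def test_deduplicate_orders():
    rows = [{"order_id": 1}, {"order_id": 2}, {"order_id": 1}]
    assert deduplicate_orders(rows) == [{"order_id": 1}, {"order_id": 2}]


if __name__ == "__main__":
    # Allows running the checks without pytest installed.
    test_normalize_customer_name()
    test_deduplicate_orders()
    print("all tests passed")
```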

Building and maintaining robust ETL pipelines with code requires up-front work to set up CI/CD and developers who understand SQL or Python. Because of this investment in expertise, some teams are tempted to explore whether the grass is greener on the other side with no-code or low-code ETL tools that promise faster results with less engineering complexity. No hard-to-understand code, just drag and drop via nice-looking UIs. This is certainly less intimidating than seeing a SQL query.

As you might have already guessed, no-code ETL tools let users build data pipelines without writing code. Instead, they offer visual interfaces—typically drag-and-drop—that “simplify” the process of designing data workflows.

These tools aim to make data preparation accessible to a broader audience by removing the need to write code. They create the impression that you don't need skilled engineers to build and maintain complex pipelines. Users define transformations through menus, flowcharts, and configuration panels, with no technical background required.

However, this perceived simplicity is misleading. No-code platforms often lack essential software engineering practices such as version control, modularization, and comprehensive testing frameworks. This can lead to a buildup of technical debt, making systems harder to maintain and scale over time. As workflows become more complex, the initial ease of use can give way to a tangled web of dependencies and configurations. That web is hard to unravel without skilled engineers. Additional staff are then needed to maintain data quality, manage the growing complexity, and keep the platform from devolving into disorder. Over time, team velocity slows as layers of configuration menus pile up.

Popular no-code ETL tools include Matillion, Talend, Azure Data Factory (ADF), Informatica, and Alteryx. They promise minimal coding while supporting complex ETL operations. However, it's important to recognize that while these tools can accelerate initial development, they may introduce challenges in long-term maintenance and scalability.

To explain why best-in-class organizations typically avoid no-code tools, we've come up with 10 reasons that highlight their limitations.

Most no-code tools claim Git support, but it's often limited to unreadable exports like JSON or XML. This makes collaboration clunky, audits painful, and coordinated development nearly impossible.

Bottom Line: Scaling a data team requires clean, auditable change management — not hidden files and guesswork.

Without true modular design, teams end up recreating the same logic across pipelines. Small changes become massive, tedious updates, introducing risk and wasting your data team’s time. $$$
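For contrast, here is what modular reuse can look like in code. A single hypothetical helper holds a shared naming rule, and every pipeline calls it. Changing the rule then means editing one function instead of every pipeline. The pipeline names and columns are made up for illustration.

```python
def standardize_columns(row: dict) -> dict:
    """Shared rule: trim, lowercase, and snake_case every column name."""
    return {key.strip().lower().replace(" ", "_"): value for key, value in row.items()}


def orders_pipeline(rows: list[dict]) -> list[dict]:
    # Hypothetical pipeline #1 reuses the shared rule.
    return [standardize_columns(row) for row in rows]


def customers_pipeline(rows: list[dict]) -> list[dict]:
    # Hypothetical pipeline #2 reuses the same rule, then drops rows without an ID.
    cleaned = [standardize_columns(row) for row in rows]
    return [row for row in cleaned if row.get("customer_id") is not None]


print(orders_pipeline([{"Order ID": 1, " Total Amount ": 9.5}]))
print(customers_pipeline([{"Customer ID": 7}, {"Customer ID": None}]))
```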

Bottom Line: When your team duplicates effort, innovation slows down.

When something breaks, tracing the root cause is often confusing and slow. Error messages are vague, logs are buried, and troubleshooting feels like a scavenger hunt. Again, wasting your data team’s time.

Bottom Line: Operational complexity gets hidden behind a "simple" interface — until it’s too late and it starts costing you money.

Most no-code tools make it difficult (or impossible) to automate testing. Without safeguards, small changes can ripple through your pipelines undetected. Users will notice it in their dashboards before your data teams have their morning coffee.

Bottom Line: If you can’t trust your pipelines, you can’t trust your dashboards or reports.

As requirements grow, "no-code" often becomes "some-code." But now you’re writing scripts inside a platform never designed for real software development. This leads to painful uphill battles to scale.

Bottom Line: You get the worst of both worlds: the pain of code, without the power of code.

Drag-and-drop tools aren’t built for teamwork at scale. Versioning, branching, peer review, and deployment pipelines are the basics of team productivity, yet they are often afterthoughts. This makes it difficult for your teams to onboard, develop, and collaborate. The result is less innovation, fewer insights, and more money spent to deliver them!

Bottom Line: Without true team collaboration, scaling people becomes as hard as scaling data.

Your data might be portable, but the business logic that transforms it often isn't. Migrating away from a no-code tool can mean rebuilding your entire data stack from scratch. Want to switch tooling for best-in-class tools as the data space changes? Good luck.

Bottom Line: Short-term convenience can turn into long-term captivity.

When your data volume grows, you often discover that what worked for a few million rows collapses under real scale. Because the platform abstracts away how the work is done, optimization is hard, and problems are costly to fix later. Your data team will have a much harder time lowering that bill than they would with code-based tools they can fine-tune.

Bottom Line: You can’t improve what you can’t control.

Great analysts prefer tools that allow precision, performance tuning, and innovation. If your environment frustrates them, you risk losing your most valuable technical talent. Onboarding new people is expensive; you want to keep and cultivate the talent you do have.

Bottom Line: If your platform doesn’t attract builders, you’ll struggle to scale anything.

No-code tools feel fast at the beginning. Setup is quick, results come fast, and early wins are easy to showcase. But as complexity inevitably grows, you’ll face rigid workflows, limited customization, and painful workarounds. These tools are built for simplicity, not flexibility, and that becomes a real problem when your needs evolve. Simple tasks like moving a few fields or renaming columns stay easy. Complex business logic, large transformations, or multi-step workflows are a different matter. What once sped up delivery now slows it down, as teams waste time fighting platform limitations instead of building what the business needs.

Bottom Line: Early speed means little if you can’t sustain it. Scaling demands flexibility, not shortcuts.

No-code ETL tools often promise quick wins: rapid deployment, intuitive interfaces, and minimal coding. While these features can be appealing, especially for immediate needs, they can introduce challenges at scale.

As data complexity grows, the limitations of no-code solutions—such as difficulties in version control, limited reusability, and challenges in debugging—can lead to increased operational costs and hindered team efficiency. These factors not only strain resources but can also impact the quality and reliability of your data insights.

It's important to assess whether a no-code ETL tool aligns with your long-term data strategy. Always consider the trade-offs between immediate convenience and future scalability. Engaging with your data team to understand their needs and the potential implications of tool choices can provide valuable insights.

What has been your experience with no-code ETL tools? Have they met your expectations, or have you encountered unforeseen challenges?

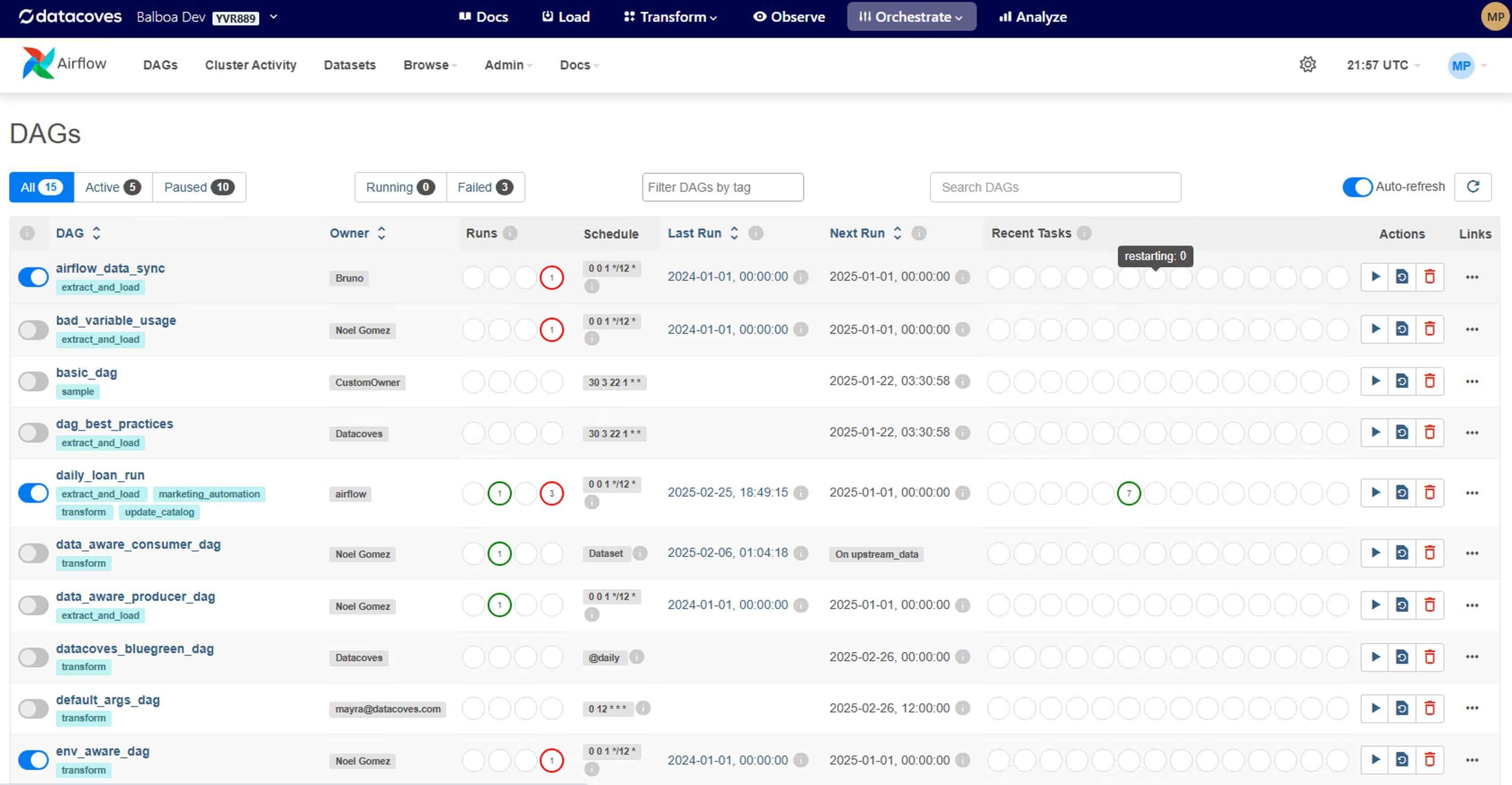

In Apache Airflow, scheduling workflows has traditionally been managed with the schedule_interval parameter. It accepts cron expressions, presets, or timedelta objects to define time-based intervals for DAG (Directed Acyclic Graph) runs. Airflow was already a powerful scheduler, but it became even more efficient when Airflow 2.4 introduced Datasets into scheduling. This enables data-driven DAG execution: workflows can be triggered by specific data updates rather than by predetermined time intervals.

In this article, we'll dive into the concept of Airflow datasets, explore their transformative impact on workflow orchestration, and provide a step-by-step guide to schedule your DAGs using Datasets!

DAG scheduling in Airflow was primarily time-based, relying on parameters like schedule_interval and start_date to define execution times. With this setup, there were three ways to schedule your DAGs: cron expressions, presets, or timedelta objects. Let's examine each one.

Cron expressions: a standard cron string. For example, schedule_interval='5 4 * * *' runs the DAG at 04:05 every day.

Presets: Airflow's shorthand strings for common intervals:

- @hourly: Runs the DAG at the beginning of every hour.
- @daily: Runs the DAG at midnight every day.
- @weekly: Runs the DAG at midnight on Sunday, the first day of the week in cron.
- @monthly: Runs the DAG at midnight on the first day of the month.
- @yearly: Runs the DAG at midnight on January 1st.

Timedelta objects: schedule_interval=timedelta(hours=6) schedules the DAG every six hours.

While effective for many jobs, time-based scheduling had some limitations:

Fixed Timing: DAGs ran at predetermined times, regardless of data readiness (this is the key to Datasets). If data wasn't available at the scheduled time, tasks could fail or process incomplete data.

Sensors and Polling: To handle data dependencies, sensors were employed to wait for data availability. However, sensors often relied on continuous polling, which could be resource-intensive and lead to inefficiencies.
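For reference, each preset is shorthand for a cron expression, and a timedelta schedule slices time into fixed-length intervals starting at start_date. The plain-Python sketch below only illustrates that arithmetic. The helper data_intervals is hypothetical and only approximates Airflow's own timetable logic, under which each run covers one interval and is triggered after that interval ends. Either way, runs land at fixed times whether or not the data is ready.

```python
from datetime import datetime, timedelta

# Airflow's scheduling presets and the cron expressions they stand for.
PRESET_TO_CRON = {
    "@hourly": "0 * * * *",
    "@daily": "0 0 * * *",
    "@weekly": "0 0 * * 0",  # Sunday at midnight
    "@monthly": "0 0 1 * *",
    "@yearly": "0 0 1 1 *",
}


def data_intervals(start: datetime, every: timedelta, count: int) -> list[tuple[datetime, datetime]]:
    """Hypothetical helper: the first `count` fixed-length intervals after `start`."""
    return [(start + i * every, start + (i + 1) * every) for i in range(count)]


# A schedule like timedelta(hours=6) starting 2025-03-31 produces back-to-back windows.
for begin, end in data_intervals(datetime(2025, 3, 31), timedelta(hours=6), 3):
    print(f"{begin:%Y-%m-%d %H:%M} -> {end:%H:%M}")
```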

Airflow Datasets were created to overcome these scheduling limitations.

A Dataset is a way to represent a specific set of data. Think of it as a label or reference to a particular data resource. This can be anything: a CSV file, an S3 bucket, or a SQL table. You define a Dataset by passing a string path (URI) to the Dataset class. This path acts as an identifier. It doesn't have to be a real file or URL, but it should be consistent, unique, and ideally in ASCII format (plain English letters, numbers, slashes, underscores, etc.).

```python
from airflow.datasets import Dataset

my_dataset = Dataset("s3://my-bucket/my-data.csv")

# or

my_dataset = Dataset("my_folder/my_file.txt")
```

When using Airflow Datasets, remember that Airflow does not monitor the actual contents of your data. It doesn’t check if a file or table has been updated.

Instead, it tracks task completion. When a task that lists a Dataset in its outlets finishes successfully, Airflow marks that Dataset as “updated.” This means the task doesn’t need to actually modify any data — even a task that only runs a print() statement will still trigger any Consumer DAGs scheduled on that Dataset. It’s up to your task logic to ensure the underlying data is actually being modified when necessary. Even though Airflow isn’t checking the data directly, this mechanism still enables event-driven orchestration because your workflows can run when upstream data should be ready.

For example, if one DAG has a task that generates a report and writes it to a file, you can define a Dataset for that file. Another DAG that depends on the report can be triggered automatically as soon as the first DAG’s task completes. This removes the need for rigid time-based scheduling and reduces the risk of running on incomplete or missing data.
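Here is a deliberately simplified toy model in plain Python that makes the "task completion, not data contents" rule concrete. It is not Airflow's implementation or API. The class, DAG id, and URI are hypothetical. It also ignores real-world details such as consumers scheduled on several Datasets, which wait until all of them are updated.

```python
from collections import defaultdict


class ToyDatasetScheduler:
    """Toy model of dataset-triggered scheduling; NOT how Airflow is implemented."""

    def __init__(self):
        # Dataset URI -> IDs of consumer DAGs scheduled on that single Dataset
        self.consumers = defaultdict(list)
        # Consumer DAG runs that have been queued, in order
        self.queued_runs = []

    def register_consumer(self, dag_id, dataset_uri):
        self.consumers[dataset_uri].append(dag_id)

    def task_finished(self, outlets, succeeded):
        # A failed producer task updates nothing.
        if not succeeded:
            return
        # A successful task marks every outlet "updated" without inspecting any data.
        for uri in outlets:
            self.queued_runs.extend(self.consumers[uri])


scheduler = ToyDatasetScheduler()
scheduler.register_consumer("report_consumer_dag", "file:///reports/daily_report.csv")

# The producer task fails: no consumer run is queued.
scheduler.task_finished(["file:///reports/daily_report.csv"], succeeded=False)

# The producer task succeeds (even if it only printed something): the consumer is queued.
scheduler.task_finished(["file:///reports/daily_report.csv"], succeeded=True)
print(scheduler.queued_runs)
```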

Datasets give you a new way to schedule your DAGs: based on upstream task completion, not just on a time interval. Instead of relying on schedule_interval, Airflow introduced the schedule parameter to support both time-based and dataset-driven workflows. When a producer task finishes and "updates" a dataset, any DAGs that depend on that dataset can be triggered automatically. And if you want even more control, you can update your Dataset externally using the Airflow API.
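The snippet below sketches the external-update option. It builds, but does not send, a request that creates a dataset event. It assumes Airflow 2.9 or later, whose stable REST API exposes POST /api/v1/datasets/events. It also assumes a webserver configured for basic authentication. The host, credentials, and payload values are placeholders, so check your version's REST API reference before relying on this.

```python
import base64
import json
import urllib.request


def build_dataset_event_request(airflow_url, dataset_uri, username, password, extra=None):
    """Build (but do not send) a POST that asks Airflow to record a dataset event.

    Assumes Airflow 2.9+ and the basic-auth API backend; all values are placeholders.
    """
    body = json.dumps({"dataset_uri": dataset_uri, "extra": extra or {}}).encode("utf-8")
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url=f"{airflow_url.rstrip('/')}/api/v1/datasets/events",
        data=body,
        method="POST",
        headers={"Content-Type": "application/json", "Authorization": f"Basic {token}"},
    )


req = build_dataset_event_request(
    "http://localhost:8080", "/path/to/your_dataset.csv", "admin", "admin"
)
# Sending requires a reachable Airflow webserver:
# with urllib.request.urlopen(req, timeout=10) as resp:
#     print(resp.status)
```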

When using Datasets in Airflow, you'll typically work with two types of DAGs: Producer and Consumer DAGs.

A Producer DAG is responsible for defining and "updating" a specific Dataset. We say "updating" because Airflow considers a Dataset "updated" simply when a task that lists it in its outlets completes successfully, regardless of whether the data was truly modified.

A Producer DAG:

✅ Must have the Dataset variable defined or imported

✅ Must include a task with the outlets parameter set to that Dataset

A Consumer DAG is scheduled to run when the Dataset it depends on is updated, that is, when the producer task that lists that Dataset in its outlets completes successfully.

A Consumer DAG:

✅ Must reference the same Dataset using the schedule parameter

It’s this producer-consumer relationship that enables event-driven scheduling in Airflow — allowing workflows to run as soon as the data they're dependent on is ready, without relying on fixed time intervals.

1. Define your Dataset.

In a new DAG file, define a variable using the Dataset object and pass in the path to your data as a string. In this example, it’s the path to a CSV file.

```python
# producer.py

from airflow.datasets import Dataset

# Define the dataset representing the CSV file
csv_dataset = Dataset("/path/to/your_dataset.csv")
```

2. Create a DAG with a task that updates the CSV dataset.

We’ll use the @dag and @task decorators for a cleaner structure. The key part is passing the outlets parameter to the task. This tells Airflow that the task updates a specific dataset. Once the task completes successfully, Airflow will consider the dataset "updated" and trigger any dependent DAGs.

We’re also using csv_dataset.uri to get the path to the dataset—this is the same path you defined earlier (e.g., "/path/to/your_dataset.csv").

```python
# producer.py

from airflow.decorators import dag, task
from airflow.datasets import Dataset
from datetime import datetime
import pandas as pd
import os

# Define the dataset representing the CSV file
csv_dataset = Dataset("/path/to/your_dataset.csv")


@dag(
    dag_id='producer_dag',
    start_date=datetime(2025, 3, 31),
    schedule='@daily',
    catchup=False,
)
def producer_dag():
    # outlets tells Airflow this task "updates" csv_dataset when it succeeds
    @task(outlets=[csv_dataset])
    def update_csv():
        data = {'column1': [1, 2, 3], 'column2': ['A', 'B', 'C']}
        df = pd.DataFrame(data)
        file_path = csv_dataset.uri

        # Append to the file if it already exists; otherwise create it with a header
        if os.path.exists(file_path):
            df.to_csv(file_path, mode='a', header=False, index=False)
        else:
            df.to_csv(file_path, index=False)

    update_csv()


producer_dag()
```

Now that we have a producer DAG that updates a Dataset, we can create the consumer DAG that depends on it. This is where the magic happens: this DAG is no longer time-dependent but Dataset-dependent.

1. Instantiate the same Dataset used in the Producer DAG

In a new DAG file (the consumer), start by defining the same Dataset that was used in the Producer DAG. This ensures both DAGs are referencing the exact same dataset path.

```python
# consumer.py

from airflow.datasets import Dataset

# Define the dataset representing the CSV file
csv_dataset = Dataset("/path/to/your_dataset.csv")
```

2. Set the schedule to the Dataset

Create your DAG and set the schedule parameter to the Dataset you instantiated earlier (the one being updated by the producer DAG). This tells Airflow to trigger this DAG only when that dataset is updated—no need for time-based scheduling.

```python
# consumer.py

import datetime

from airflow.decorators import dag, task
from airflow.datasets import Dataset

csv_dataset = Dataset("/path/to/your_dataset.csv")


@dag(
    default_args={
        "start_date": datetime.datetime(2024, 1, 1, 0, 0),
        "owner": "Mayra Pena",
        "email": "mayra@example.com",
        "retries": 3,
    },
    description="Sample Consumer DAG",
    schedule=[csv_dataset],  # run when csv_dataset is updated, not on a clock
    tags=["transform"],
    catchup=False,
)
def data_aware_consumer_dag():
    @task
    def run_consumer():
        print("Processing updated CSV file")

    run_consumer()


dag = data_aware_consumer_dag()
```

That's it! 🎉 This DAG will now run whenever the producer task completes successfully and "updates" the file.

When using Datasets, you may reference the same dataset across multiple DAGs and therefore have to define it many times. There is a simple DRY (Don't Repeat Yourself) way to avoid this.

1. Create a central datasets.py file

To follow DRY (Don't Repeat Yourself) principles, centralize your dataset definitions in a utility module.

Simply create a utils folder and add a datasets.py file.

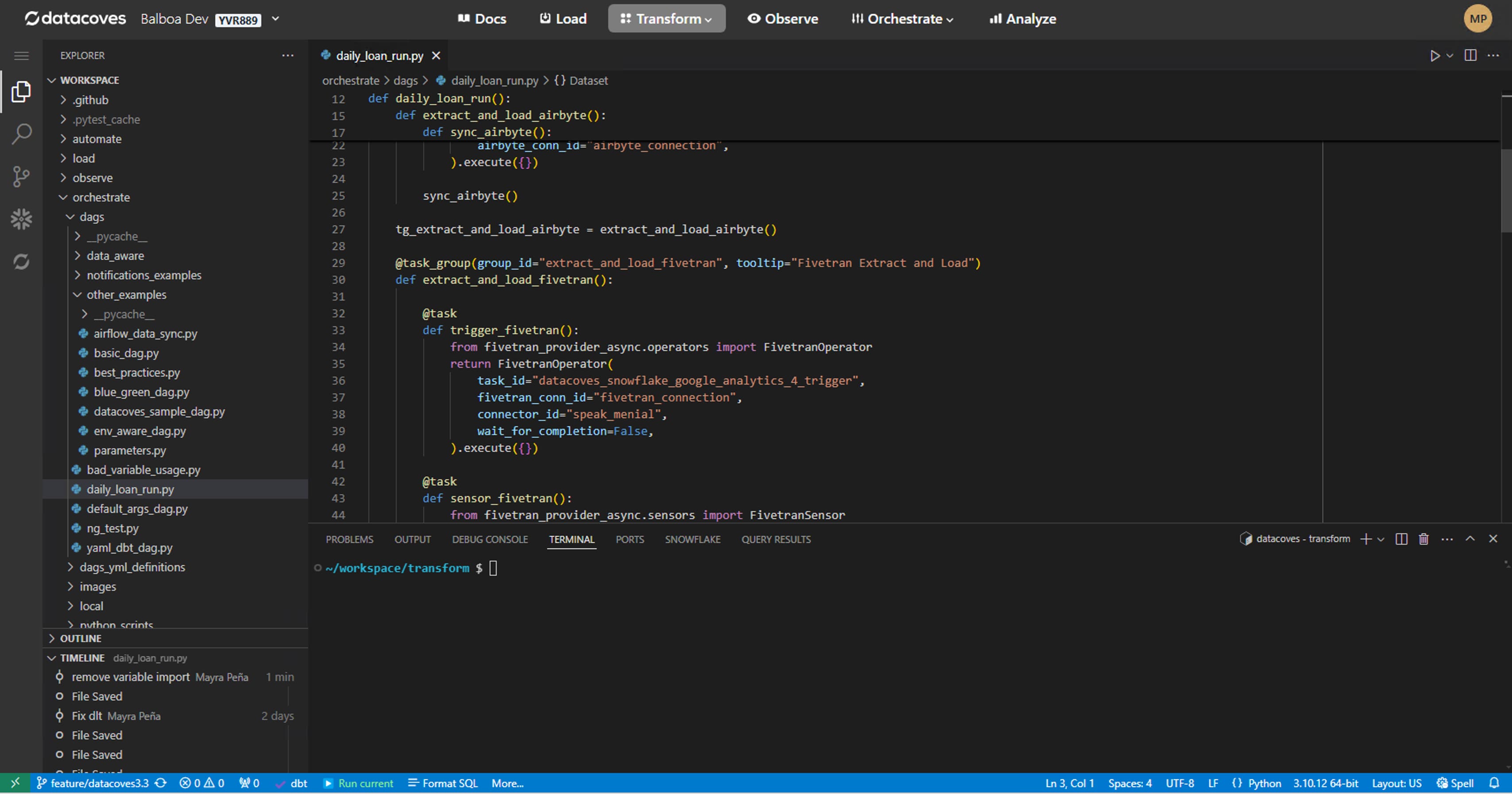

If you're using Datacoves, your Airflow-related files typically live in a folder named orchestrate, so your path might look like: orchestrate/utils/datasets.py

2. Import the Dataset object

Inside your datasets.py file, import the Dataset class from Airflow:

```python
from airflow.datasets import Dataset
```

3. Define your Dataset in this file

Now that you’ve imported the Dataset object, define your dataset as a variable. For example, if your DAG writes to a CSV file:

```python
from airflow.datasets import Dataset

# Define the dataset representing the CSV file
CSV_DATASET = Dataset("/path/to/your_dataset.csv")
```

Notice we’ve written the variable name in all caps (CSV_DATASET). This follows the Python convention for constants, signaling that the value shouldn’t change, and makes your code easier to read and maintain.

4. Import the Dataset in your DAG

In your DAG file, simply import the dataset you defined in your utils/datasets.py file and use it as needed.

```python
from airflow.decorators import dag, task
from orchestrate.utils.datasets import CSV_DATASET
from datetime import datetime
import pandas as pd
import os


@dag(
    dag_id='producer_dag',
    start_date=datetime(2025, 3, 31),
    schedule='@daily',
    catchup=False,
)
def producer_dag():
    # The shared CSV_DATASET constant is the task's outlet
    @task(outlets=[CSV_DATASET])
    def update_csv():
        data = {'column1': [1, 2, 3], 'column2': ['A', 'B', 'C']}
        df = pd.DataFrame(data)
        file_path = CSV_DATASET.uri

        # Append to the file if it already exists; otherwise create it with a header
        if os.path.exists(file_path):
            df.to_csv(file_path, mode='a', header=False, index=False)
        else:
            df.to_csv(file_path, index=False)

    update_csv()


producer_dag()
```

Now you can reference CSV_DATASET in any DAG's schedule or as a task outlet, keeping your code clean and consistent across your project. 🎉

You can visualize your Datasets, as well as the events they trigger, in the Airflow UI. Three tabs are especially helpful for implementing and debugging your event-triggered pipelines:

Dataset Events

The Dataset Events sub-tab shows a chronological list of recent events associated with datasets in your Airflow environment. Each entry details the dataset involved, the producer task that updated it, the timestamp of the update, and any triggered consumer DAGs. This view is important for monitoring the flow of data, ensuring that dataset updates occur as expected, and helps with prompt identification and resolution of issues within data pipelines.

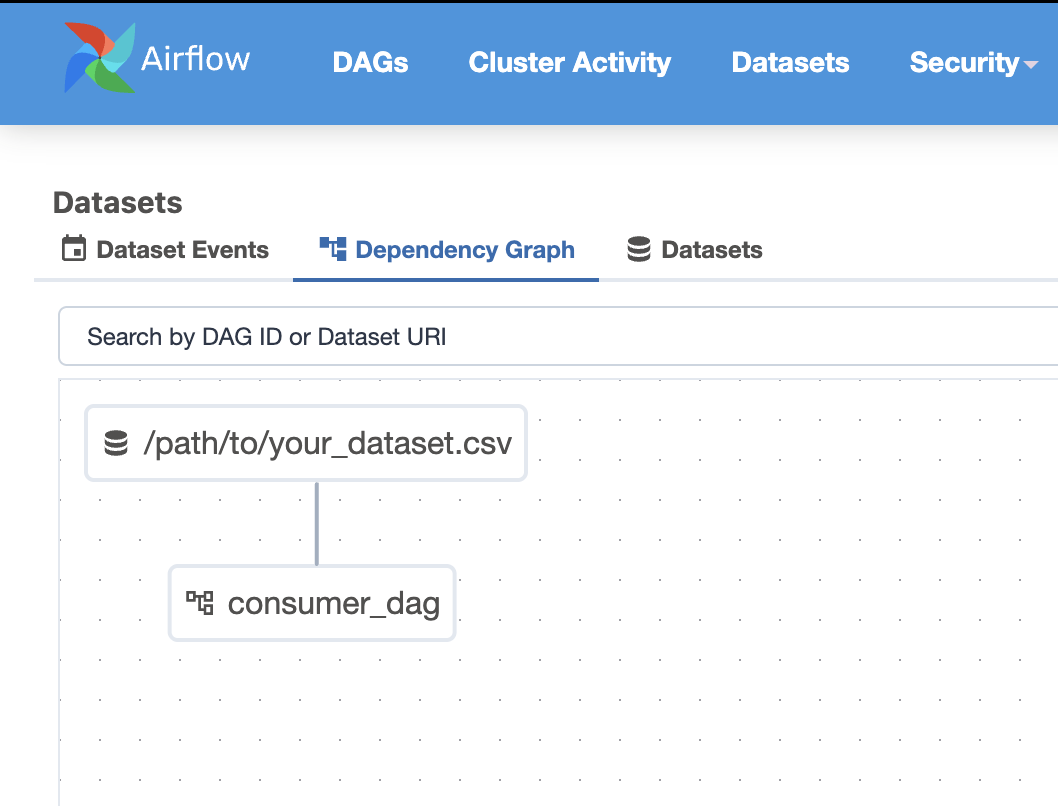

Dependency Graph

The Dependency Graph is a visual representation of the relationships between datasets and DAGs. It illustrates how producer tasks, datasets, and consumer DAGs interconnect, providing a clear overview of data dependencies within your workflows. This graphical depiction helps visualize the structure of your data pipelines to identify potential bottlenecks and optimize your pipeline.

Datasets

The Datasets sub-tab provides a list of all datasets defined in your Airflow instance. For each dataset, it shows important information such as the dataset's URI, associated producer tasks, and consumer DAGs. This centralized view provides efficient management of datasets, allowing users to track dataset usage across various workflows and maintain organized data dependencies.

When working with Datasets, there are a couple of things to take into consideration to maintain readability.

Naming datasets meaningfully: Ensure your names are verbose and descriptive. This will help the next person who is looking at your code and even future you.

Avoid overly granular datasets: Datasets are a great tool, but too many of them become hard to manage, so try to strike a balance.

Monitor for dataset DAG execution delays: It is important to keep an eye out for delays since this could point to an issue in your scheduler configuration or system performance.

Task Completion Signals Dataset Update: It’s important to understand that Airflow doesn’t actually check the contents of a dataset (like a file or table). A dataset is considered “updated” only when a task that lists it in its outlets completes successfully. So even if the file wasn’t truly changed, Airflow will still assume it was. With Datacoves, you can also trigger a DAG externally through the Airflow API. One example is an AWS Lambda function that runs when data lands in an S3 bucket.
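Here is a minimal sketch of that Lambda pattern. It assumes an S3 event notification invokes the function and that Airflow's stable REST API endpoint for creating DAG runs (POST /api/v1/dags/{dag_id}/dagRuns) is reachable from Lambda. The environment variable names, the DAG id s3_ingest_dag, and the s3_uri conf key are hypothetical. Authentication is left out because it depends on your deployment.

```python
import json
import os
import urllib.parse
import urllib.request

# Placeholders: set these as Lambda environment variables in a real deployment.
AIRFLOW_URL = os.environ.get("AIRFLOW_URL", "http://localhost:8080")
DAG_ID = os.environ.get("DAG_ID", "s3_ingest_dag")  # hypothetical DAG id


def s3_objects(event):
    """Yield (bucket, key) pairs from an S3 event notification payload."""
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 event notifications.
        key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
        yield bucket, key


def build_trigger_request(bucket, key):
    """Build a POST that asks Airflow to create a new run of DAG_ID."""
    # The hypothetical s3_uri conf value lets the DAG know which object arrived.
    body = json.dumps({"conf": {"s3_uri": f"s3://{bucket}/{key}"}}).encode("utf-8")
    # Authentication headers are omitted; add whatever your Airflow deployment requires.
    return urllib.request.Request(
        url=f"{AIRFLOW_URL.rstrip('/')}/api/v1/dags/{DAG_ID}/dagRuns",
        data=body,
        method="POST",
        headers={"Content-Type": "application/json"},
    )


def lambda_handler(event, context):
    """Entry point: trigger one DAG run per object in the S3 event."""
    triggered = 0
    for bucket, key in s3_objects(event):
        with urllib.request.urlopen(build_trigger_request(bucket, key), timeout=10):
            triggered += 1
    return {"triggered": triggered}
```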

Datacoves provides a scalable Managed Airflow solution and handles Airflow upgrades for you. This removes the stress of managing Airflow infrastructure so your data team can focus on its pipelines. Check out how Datadrive saved 200 hours yearly by choosing Datacoves.

The introduction of data-aware scheduling with Datasets in Apache Airflow is a big advancement in workflow orchestration. By enabling DAGs to trigger based on data updates rather than fixed time intervals, Airflow has become more adaptable and efficient in managing complex data pipelines.

By adopting Datasets, you can enhance the maintainability and scalability of your workflows, ensuring that tasks are executed exactly when the upstream data is ready. This not only optimizes resource utilization but also simplifies dependency management across DAGs.

Give it a try! 😎

There's a lot of buzz around Microsoft Fabric these days. Some people are all-in, singing its praises from the rooftops, while others are more skeptical, waving the "buyer beware" flag. After talking with the community and observing Fabric in action, we're leaning toward caution. Why? Well, like many things in the Microsoft ecosystem, it's a jack of all trades but a master of none. Many of the promises seem to be more marketing hype than substance, leaving you with "marketecture" instead of solid architecture. While the product has admirable, lofty goals, Microsoft has many wrinkles to iron out.

In this article, we'll dive into 10 reasons why Microsoft Fabric might not be the best fit for your organization in 2025. By examining both the promises and the current realities of Microsoft Fabric, we hope to equip you with the information needed to make an informed decision about its adoption.

Microsoft Fabric is marketed as a unified, cloud-based data platform developed to streamline data management and analytics within organizations. Its goal is to integrate various Microsoft services into a single environment and to centralize and simplify data operations.

This means that Microsoft Fabric is positioning itself as an all-in-one analytics platform designed to handle a wide range of data-related tasks. It aims to be a place to handle data engineering, data integration, data warehousing, data science, real-time analytics, and business intelligence: a one-stop shop, if you will. By consolidating these functions, Fabric hopes to provide a seamless experience for organizations to manage, analyze, and gather insights from their data.

Fabric presents itself as an all-in-one solution, but is it really? Let’s break down where the marketing meets reality.

While Microsoft positions Fabric as an innovative step forward, much of it is clever marketing and repackaging of existing tools. Here are the claims, and the reality behind them:

Claim: Fabric combines multiple services into a seamless platform, aiming to unify and simplify workflows, reduce tool sprawl, and make collaboration easier with a one-stop shop.

Reality:

Claim: Fabric offers a scalable and flexible platform.

Reality: In practice, managing scalability in Fabric can be difficult. Scaling isn’t a one‑click, all‑services solution—instead, it requires dedicated administrative intervention. For example, you often have to manually pause and un-pause capacity to save money, a process that is far from ideal if you’re aiming for automation. Although there are ways to automate these operations, setting up such automation is not straightforward. Additionally, scaling isn’t uniform across the board; each service or component must be configured individually, meaning that you must treat them on a case‑by‑case basis. This reality makes the promise of scalability and flexibility a challenge to realize without significant administrative overhead.

Claim: Fabric offers predictable, cost-effective pricing.

Reality: While Fabric's pricing structure appears straightforward, several hidden costs and adoption challenges can impact overall expenses and efficiency:

All this to say that the pricing model works well only if you can predict with great accuracy exactly how much you will spend every single day, and who can do that? Check out this article on the hidden costs of Fabric, which goes into detail with cost comparisons.

Claim: Fabric supports a wide range of data tools and integrations.

Reality: Fabric is built around tight integration with other Fabric services and Microsoft tools such as Office 365 and Power BI. Some organizations prefer a “best-of-breed” approach or rely on tools like Tableau, Looker, open-source solutions like Lightdash, or other non-Microsoft solutions. For them, this tight coupling can severely limit flexibility and complicate future migrations.

While third-party connections are possible, they don’t integrate as smoothly as those in the MS ecosystem like Power BI, potentially forcing organizations to switch tools just to make Fabric work.

Claim: Fabric simplifies automation and deployment for data teams by supporting modern DataOps workflows.

Reality: Despite some scripting support, many components remain heavily UI-driven. This hinders full automation and integration with established best practices for CI/CD pipelines (e.g., using Terraform, dbt, or Airflow). Organizations that want to mature their data operations with agile DataOps practices find themselves forced into manual workarounds and struggle to integrate Fabric tools into their CI/CD processes. Unlike tools such as dbt, Fabric has no built-in data quality or unit testing, so additional tools would need to be added to achieve this functionality.

Claim: Microsoft Fabric provides enterprise-grade security, compliance, and governance features.

Reality: While Microsoft Fabric offers robust security measures like data encryption, role-based access control, and compliance with various regulatory standards, there are some concerns organizations should consider.

One major complaint is that access permissions do not always persist consistently across Fabric services, leading to unintended data exposure.

For example, users can still retrieve restricted data from reports due to how Fabric handles permissions at the semantic model level. Even when specific data is excluded from a report, built-in features may allow users to access the data, creating compliance risks and potential unauthorized access. Read more: Zenity - Inherent Data Leakage in Microsoft Fabric.

While some of these security risks can be mitigated, they require additional configurations and ongoing monitoring, making management more complex than it should be. Ideally, these protections should be unified and work out of the box rather than requiring extra effort to lock down sensitive data.

Claim: Fabric is presented as a mature, production-ready analytics platform.

Reality: The good news for Fabric is that it is still evolving. The bad news is, it's still evolving. That evolution impacts users in several ways:

Claim: Fabric automates many complex data processes to simplify workflows.

Reality: Fabric is heavy on abstractions, and this can be a double-edged sword. While they may appear to simplify things at first, these abstractions lead to a lack of visibility and control. When things go wrong, it is hard to debug, and it may be difficult to fine-tune performance or optimize costs.

For organizations that need deep visibility into query performance, workload scheduling, or resource allocation, Fabric lacks the granular control offered by competitors like Databricks or Snowflake.

Claim: Fabric offers comprehensive resource governance and robust alerting mechanisms, enabling administrators to effectively manage and troubleshoot performance issues.

Reality: Fabric currently lacks fine-grained resource governance features. This makes it challenging for administrators to control resource consumption and mitigate issues like the "noisy neighbor" problem, where one service consumes disproportionate resources, affecting others.

The platform's alerting mechanisms are also underdeveloped. While some basic alerting features exist, they often fail to provide detailed information about which processes or users are causing issues. This can make debugging an absolute nightmare. For example, users have reported challenges in identifying specific reports causing slowdowns due to limited visibility in the capacity metrics app. This lack of detailed alerting makes it difficult for administrators to effectively monitor and troubleshoot performance issues, often requiring third-party tools for more granular governance and alerting. In other words, not so all-in-one in this case.

Claim: Fabric aims to be an all-in-one platform that covers every aspect of data management.

Reality: Despite its broad ambitions, key features are missing such as:

While these are just a couple of examples, it's important to note that missing features will compel users to seek third-party tools to fill the gaps, introducing additional complexity. Integrating external solutions is not always straightforward with Microsoft products and often introduces a lot of overhead. Otherwise, users have to go without those features and create workarounds, which we know will lead to issues down the road.

Microsoft Fabric promises a lot, but its current execution falls short. Instead of an innovative new platform, Fabric repackages existing services, often making things more complex rather than simpler.

That’s not to say Fabric won’t improve—Microsoft has the resources to refine the platform. But as of 2025, the downsides outweigh the benefits for many organizations.

If your company values flexibility, cost control, and seamless third-party integrations, Fabric may not be the best choice. There are more mature, well-integrated, and cost-effective alternatives that offer the same features without the Microsoft lock-in.

Time will tell if Fabric evolves into the powerhouse it aspires to be. For now, the smart move is to approach it with a healthy dose of skepticism.

👉 Before making a decision, thoroughly evaluate how Fabric fits into your data strategy. Need help assessing your options? Check out this data platform evaluation worksheet.


Enterprises are increasingly relying on dbt (Data Build Tool) for their data analytics; however, dbt wasn’t designed to be an enterprise-ready platform on its own. This leads to struggles with scalability, orchestration, governance, and operational efficiency when implementing dbt at scale. But if dbt is so amazing, why is this the case? As our title suggests, you need more than just dbt to have a successful dbt analytics implementation. Keep reading to learn exactly what you need to supercharge your data analytics with dbt.

dbt is popular because it solves problems facing the data analytics world. Enterprises today are dealing with growing volumes of data, making efficient data transformation a critical part of their analytics strategy. Traditionally, data transformation was handled using complex ETL (Extract, Transform, Load) processes, where data engineers wrote custom scripts to clean, structure, and prepare data before loading it into a warehouse. However, this approach has several challenges:

dbt (Data Build Tool) transforms this paradigm by enabling SQL-based, modular, and version-controlled transformations directly inside the data warehouse. By following the ELT (Extract, Load, Transform) approach, dbt allows raw data to be loaded into the warehouse first, then transformed within the warehouse itself—leveraging the scalability and processing power of modern cloud data platforms.

Unlike traditional ETL tools, dbt applies software engineering best practices to SQL-based transformations, making it easier to develop, test, document, and scale data pipelines. This shift has made dbt a preferred solution for enterprises looking to empower analysts, improve collaboration, and create maintainable data workflows.

With these benefits, it is clear why over 40,000 companies are leveraging dbt today!

Despite dbt’s strengths, enterprises face several challenges when implementing it at scale:

Running dbt in production requires robust orchestration beyond simple scheduled jobs. dbt only manages transformations, but a complete end-to-end pipeline also includes extracting, loading, and visualizing data. To manage the full end-to-end data pipeline (ELT + Viz), organizations need a full-fledged orchestrator like Airflow. While there are other orchestration options on the market, pairing Airflow with dbt is a common pattern.

CI/CD pipelines are essential for dbt at the enterprise level, yet one of dbt Core’s major limitations is the lack of a built-in CI/CD pipeline for managing deployments. This makes workflows more complex and increases the likelihood of errors reaching production. To address this, teams can use external tools like Jenkins, GitHub Actions, or GitLab CI/CD, which provide a flexible and customizable CI/CD process to automate deployments and enforce best practices.
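As a minimal sketch of the kind of step such a pipeline might run, the Python helper below assembles the common "Slim CI" invocation of dbt Core: dbt build with --select state:modified+ (only modified nodes and their downstream children), --defer, and --state pointing at production artifacts. It assumes the CI job has already downloaded the production manifest.json into a directory; the directory name "prod-artifacts" and both helper functions are hypothetical, and how artifacts are fetched depends on your CI tool.

```python
import shlex
import subprocess


def slim_ci_command(state_dir: str, selector: str = "state:modified+") -> list[str]:
    """Build a dbt build command that runs only modified nodes and their children.

    state_dir is assumed to hold manifest.json from the latest production run.
    --defer lets refs to unmodified models resolve to the production objects
    described in that manifest instead of being rebuilt in CI.
    """
    return ["dbt", "build", "--select", selector, "--defer", "--state", state_dir]


def run_slim_ci(state_dir: str) -> None:
    """Run the Slim CI build; intended to be called from a CI job step."""
    cmd = slim_ci_command(state_dir)
    print("Running:", shlex.join(cmd))
    # check=True raises CalledProcessError on a non-zero exit, failing the CI job.
    subprocess.run(cmd, check=True)


# Hypothetical artifact directory; this only prints the command, it does not run dbt.
print(shlex.join(slim_ci_command("prod-artifacts")))
```

Keeping command construction separate from execution makes the selection logic easy to test and reuse across CI tools.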

While dbt Cloud does offer an out-of-the-box CI/CD solution, it lacks customization options. Some organizations find that their use cases demand greater flexibility, requiring them to build their own CI/CD processes instead.

Enterprises seek alternative solutions that provide greater control, scalability, and security over their data platform. However, this comes with the responsibility of managing their own infrastructure, which introduces significant operational overhead ($$$). Solutions like dbt Cloud do not offer Virtual Private Cloud (VPC) deployment, full CI/CD flexibility, or a fully fledged orchestrator, leaving organizations to handle additional platform components themselves.

We saw a need for a middle ground that combined the best of both worlds: something as flexible as dbt Core and Airflow, but fully managed like dbt Cloud. This led us to build Datacoves, which provides a seamless experience with no platform maintenance overhead or onboarding hassles. Teams can focus on generating insights from data instead of worrying about the platform.

Vendor lock-in is a major concern for organizations that want to maintain flexibility and avoid being tied to a single provider. The ability to switch out tools easily without excessive cost or effort is a key advantage of the modern data stack. Enterprises benefit from mixing and matching best-in-class solutions that meet their specific needs.

Datacoves is a fully managed enterprise platform for dbt, solving the challenges outlined above. Below is how Datacoves' features align with enterprise needs:

Datacoves offers flexible deployment and pricing options to accommodate various enterprise needs:

Datacoves is committed to delivering enterprise-grade support and resources through our white-glove service:

Enterprises need more than just dbt to achieve scalable and efficient analytics. While dbt is a powerful tool for data transformation, it lacks the infrastructure, governance, and orchestration capabilities required for enterprise-level deployments. Datacoves fills these gaps by providing a fully managed environment that integrates dbt Core, VS Code, Airflow, and Kubernetes-based deployments, making it the ultimate solution for organizations looking to scale dbt successfully.

The latest release, dbt 1.9, introduces some exciting features and updates meant to enhance functionality and tackle some of dbt's pain points. With improvements like the microbatch incremental strategy, snapshot enhancements, Iceberg table format support, and streamlined CI workflows, dbt 1.9 continues to help data teams work smarter, faster, and with greater precision. All the more reason to start using dbt today!

We looked through the release notes so you don’t have to. This article highlights the key updates in dbt 1.9, giving you the insights needed to upgrade confidently and unlock new possibilities for your data workflows. If you need a flexible dbt and Airflow experience, Datacoves might be right for your organization. Lower your total cost of ownership by 50% and shorten your time to market today!

If you are upgrading from dbt 1.7 or earlier, you will need to install both dbt-core and the appropriate adapter. This requirement stems from the decoupling introduced in dbt 1.8, a change that enhances modularity and flexibility in dbt’s architecture. These updates demonstrate dbt’s commitment to providing a streamlined and adaptable experience for its users while ensuring compatibility with modern tools and workflows.

For example, with the Snowflake adapter:

pip install dbt-core dbt-snowflake

In dbt 1.9, the microbatch incremental strategy is a new way to process massive datasets. In earlier versions of dbt, incremental materialization was available to process datasets that were too large to drop and recreate on every build. However, it struggled to efficiently handle datasets too large to fit into a single query, which led to timeouts and complex query management.

The microbatch incremental strategy comes to the rescue by breaking large datasets into smaller chunks for processing using the batch_size, event_time, and lookback configurations to automatically generate the necessary filters for you. However, at the time of this publication this feature is only available on the following adapters: Postgres, Redshift, Snowflake, BigQuery, Spark, and Databricks, with more on the way.

By using the event_time, lookback, and batch_size configurations, dbt will generate the necessary filters for each batch. One less thing to worry about!

dbt splits the data into batches based on the batch_size you set. Each batch is processed separately and in parallel, unless you disable this behavior using the +concurrent_batches config. This independence in batch processing improves performance, minimizes the risk of query failures, allows you to retry failed batches using the dbt retry command, and provides the granularity to load specific batches. Gotta love the control without the extra legwork!
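To make the batching concrete, here is a rough Python model of how a microbatch run could split time into windows. It is a simplified sketch, not dbt's implementation: it only models the hour and day grains (dbt also supports month and year), and it assumes that an incremental run processes the batch containing the current time plus lookback earlier batches, while a first run starts from the model's begin date (dbt's begin config). The function names and the filter text are illustrative, not dbt's generated SQL.

```python
from datetime import datetime, timedelta
from typing import Optional

# Fixed-length grains only; dbt also offers "month" and "year".
STEP = {"hour": timedelta(hours=1), "day": timedelta(days=1)}


def truncate(ts: datetime, batch_size: str) -> datetime:
    """Round a timestamp down to the start of the batch that contains it."""
    if batch_size == "hour":
        return ts.replace(minute=0, second=0, microsecond=0)
    if batch_size == "day":
        return ts.replace(hour=0, minute=0, second=0, microsecond=0)
    raise ValueError(f"unsupported batch_size: {batch_size!r}")


def microbatch_windows(now: datetime, batch_size: str = "day", lookback: int = 1,
                       begin: Optional[datetime] = None) -> list[tuple[datetime, datetime]]:
    """Return half-open [start, end) windows, one per batch.

    Incremental run: the current batch plus `lookback` earlier batches.
    First run (begin given): every batch from `begin` up to the current one.
    """
    step = STEP[batch_size]
    current = truncate(now, batch_size)
    start = truncate(begin, batch_size) if begin is not None else current - lookback * step
    windows = []
    while start <= current:
        windows.append((start, start + step))
        start += step
    return windows


def batch_filter(event_time: str, window: tuple[datetime, datetime]) -> str:
    """Illustrative WHERE clause for one batch (not dbt's exact SQL)."""
    lo, hi = window
    return (f"{event_time} >= '{lo:%Y-%m-%d %H:%M:%S}' "
            f"and {event_time} < '{hi:%Y-%m-%d %H:%M:%S}'")


for window in microbatch_windows(datetime(2024, 10, 3, 15, 30), "day", lookback=1):
    print(batch_filter("event_ts", window))
```

Because each window maps to its own independent query, a failed window can be rerun on its own without touching the others, which is the property that makes per-batch retries possible.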

To take advantage of the microbatch incremental strategy, first upgrade to dbt 1.9 and ensure your project is configured correctly. By default, dbt will handle the microbatch logic for you, as explained above. However, if you’re using custom logic, such as a custom microbatch macro, don’t forget to set the require_batched_execution_for_custom_microbatch_strategy behavior flag to True in your dbt_project.yml file. This prevents deprecation warnings and ensures dbt knows how to handle your custom configuration.

If you have a custom microbatch implementation and wish to migrate, it's important to note that earlier versions required setting the environment variable DBT_EXPERIMENTAL_MICROBATCH to enable microbatching; this is no longer needed. Starting with dbt Core 1.9, the microbatch strategy works out of the box, so you can remove it.

With dbt 1.9, snapshots have become easier to use than ever! This is great news for dbt users since snapshots in dbt allow you to capture the state of your data at specific points in time, helping you track historical changes and maintain a clear picture of how your data evolves. Below are a couple of improvements to implement or be aware of.

With the new snapshot_meta_column_names config, you now have the option to rename metadata fields to match your project's naming conventions. This added flexibility helps ensure consistency across your data models and simplifies collaboration within teams.

By default, the dbt_valid_to column of current records is set to NULL, but you can now configure it to a date with the dbt_valid_to_current config. It is important to note that dbt will not automatically adjust the current value in the existing dbt_valid_to column. This means that any existing current records will still have dbt_valid_to set to NULL, while new records will have this value set to your configured date. You will have to manually update existing data to match. Fewer NULL values to handle downstream!

The --empty flag is now supported for the dbt snapshot command, allowing you to execute snapshot operations without processing data. This enhancement is particularly useful in Continuous Integration (CI) environments, enabling the execution of unit tests for models downstream of snapshots without requiring actual data processing, which streamlines the testing process. The --empty flag, introduced in dbt 1.8, also has some powerful applications in Slim CI for optimizing your CI/CD that are worth checking out.

The new hard_deletes configuration enhances the management of deleted records in snapshots. It offers three methods: the default ignore, which takes no action on deleted records; invalidate, which replaces the invalidate_hard_deletes=true config and marks deleted records as invalid by setting their dbt_valid_to timestamp to the current time; and lastly new_record, which tracks deletions by inserting a new record with the dbt_is_deleted column set to True.
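The three hard_deletes modes and the dbt_valid_to_current option are easiest to compare side by side. The following Python function is a toy, in-memory model of a single snapshot run, written for illustration only; it is not how dbt generates its snapshot SQL. It assumes one value column per key and a "value differs" change rule, keeps a dbt_is_deleted field on every row for simplicity (dbt only adds that column in new_record mode), and assumes that in new_record mode the previous version is closed out when the deletion row is inserted.

```python
from datetime import datetime
from typing import Optional

MODES = {"ignore", "invalidate", "new_record"}


def snapshot(history: list[dict], source: dict[int, str], now: datetime,
             hard_deletes: str = "ignore",
             valid_to_current: Optional[datetime] = None) -> list[dict]:
    """Apply one toy snapshot run and return the updated history.

    Rows have keys: id, value, dbt_valid_from, dbt_valid_to, dbt_is_deleted.
    valid_to_current plays the role of dbt_valid_to_current (None means NULL).
    """
    if hard_deletes not in MODES:
        raise ValueError(f"unknown hard_deletes mode: {hard_deletes!r}")
    rows = [dict(r) for r in history]  # copy so the caller's history is untouched
    current = {r["id"]: r for r in rows if r["dbt_valid_to"] == valid_to_current}

    def open_version(key: int, value: str, deleted: bool = False) -> None:
        rows.append({"id": key, "value": value, "dbt_valid_from": now,
                     "dbt_valid_to": valid_to_current, "dbt_is_deleted": deleted})

    # New keys get a first version; changed (or re-appearing) keys get a new one.
    for key, value in source.items():
        cur = current.get(key)
        if cur is None:
            open_version(key, value)
        elif cur["value"] != value or cur["dbt_is_deleted"]:
            cur["dbt_valid_to"] = now
            open_version(key, value)

    # Keys missing from the source are hard deletes.
    for key, cur in current.items():
        if key in source or cur["dbt_is_deleted"] or hard_deletes == "ignore":
            continue
        cur["dbt_valid_to"] = now  # invalidate and new_record both close the version
        if hard_deletes == "new_record":
            open_version(key, cur["value"], deleted=True)
    return rows


t0, t1 = datetime(2024, 1, 1), datetime(2024, 2, 1)
history = snapshot([], {1: "a", 2: "b"}, t0)
for mode in sorted(MODES):
    # Key 2 was hard-deleted from the source between t0 and t1.
    print(mode, snapshot(history, {1: "a"}, t1, hard_deletes=mode))
```

With ignore, key 2 stays "current" forever; with invalidate, its version is simply closed; with new_record, the deletion itself becomes a queryable row.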

Switching to hard_deletes requires some migration effort. While the invalidate_hard_deletes configuration is still supported for existing snapshots, it cannot be used alongside hard_deletes. For new snapshots, it's recommended to use hard_deletes instead of the legacy invalidate_hard_deletes. If you switch an existing snapshot to use hard_deletes without migrating your data, you may encounter inconsistent or incorrect results, such as a mix of old and new data formats. Keep this in mind when implementing these new configs.

Testing is a vital part of maintaining high data quality and ensuring your data models work as intended. Unit testing was introduced in dbt 1.8 and has seen continued improvement in dbt 1.9.

dbt 1.9 adds the unit_test: selector, which lets you target specific unit tests. This feature enables more granular control over test execution, allowing you to focus on particular tests without running the entire suite, thereby saving time and resources. For example:

dbt test --select unit_test:my_project.my_unit_test
dbt build --select unit_test:my_project.my_unit_test

In addition, dbt list --resource-type test now correctly includes only data tests, excluding unit tests. This distinction enhances clarity and precision when managing different test types within your project. The new selector also works with dbt ls:

dbt ls --select unit_test:my_project.my_unit_test

In dbt 1.9, the state:modified selector has been enhanced to improve the accuracy of Slim CI workflows. Previously, dynamic configurations, such as setting the database based on the environment, could lead dbt to perceive changes in models even when the actual model remained unchanged. This misinterpretation caused Slim CI to rebuild models unnecessarily, resulting in false positives.

By comparing unrendered configuration values, dbt now accurately detects genuine modifications, eliminating false positives during state comparisons. This improvement ensures that only truly modified models are selected for rebuilding, streamlining your CI processes.
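The false-positive problem is easy to reproduce in miniature. The sketch below uses a toy renderer that only understands env_var(...) expressions (a stand-in for dbt's Jinja rendering) and a hypothetical DBT_DATABASE variable. It compares a model's config as recorded for production with the same config in CI, once after rendering and once unrendered. It illustrates the idea only and is not dbt's actual state comparison logic.

```python
import re

# Toy stand-in for Jinja: only handles {{ env_var('NAME') }} expressions.
ENV_VAR = re.compile(r"\{\{\s*env_var\('(\w+)'\)\s*\}\}")


def render(value: str, env: dict[str, str]) -> str:
    """Substitute env_var(...) calls with values from the given environment."""
    return ENV_VAR.sub(lambda m: env[m.group(1)], value)


def is_modified(prod_cfg: dict[str, str], ci_cfg: dict[str, str],
                prod_env: dict[str, str], ci_env: dict[str, str],
                compare_unrendered: bool) -> bool:
    """Decide whether a model's config changed between production and CI."""
    if compare_unrendered:
        # Compare what is written in the project, not what it renders to.
        return prod_cfg != ci_cfg
    rendered_prod = {k: render(v, prod_env) for k, v in prod_cfg.items()}
    rendered_ci = {k: render(v, ci_env) for k, v in ci_cfg.items()}
    return rendered_prod != rendered_ci


# Same project code, different target databases per environment.
cfg = {"database": "{{ env_var('DBT_DATABASE') }}", "materialized": "table"}
prod_env, ci_env = {"DBT_DATABASE": "PROD"}, {"DBT_DATABASE": "CI"}
print(is_modified(cfg, cfg, prod_env, ci_env, compare_unrendered=False))  # rendered: flagged
print(is_modified(cfg, cfg, prod_env, ci_env, compare_unrendered=True))   # unrendered: not flagged
```

A genuine edit, such as changing materialized from table to view, is still detected by the unrendered comparison.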

To enable this improved state comparison, set the state_modified_compare_more_unrendered_values flag to True in your dbt_project.yml file:

flags:
  state_modified_compare_more_unrendered_values: True

In dbt 1.9, the dbt docs serve command offers more customization thanks to a new --host flag, which lets you specify the host address for serving documentation. Previously, dbt docs serve always bound the server to 127.0.0.1 (localhost), with no option to override this setting.

Users can now specify a custom host address using the --host flag when running dbt docs serve. This enhancement provides the flexibility to bind the documentation server to any desired address, accommodating various deployment needs. The --host flag defaults to 127.0.0.1, ensuring backward compatibility and a secure default.

dbt 1.9 includes several updates aimed at improving performance, usability, and compatibility across projects. These changes ensure a smoother experience for users while keeping dbt aligned with modern standards.

The dbt clone command now executes clone operations concurrently, improving efficiency and reducing execution time.

The dbt show and dbt compile commands now support parseable JSON and text outputs when run in quiet mode, making it easier to integrate them with other tools and scripts by providing machine-readable output.

A new behavior change flag, skip_nodes_if_on_run_start_fails, has been introduced to gracefully handle failures in on-run-start hooks. When enabled, if an on-run-start hook fails, subsequent hooks and nodes are skipped, preventing partial or inconsistent runs.

dbt 1.9 introduces a range of powerful features and enhancements, reaffirming its role as a cornerstone tool for modern data transformations. The enhancements in this release reflect the community's commitment to innovation and excellence, as well as its strength and vitality. There's no better time to join this dynamic ecosystem and elevate your data workflows!

If you're looking to implement dbt efficiently, consider partnering with Datacoves. We can help you reduce your total cost of ownership by 50% and accelerate your time to market. Book a call with us today to discover how we can help your organization in building a modern data stack with minimal technical debt.

Check out the full release notes.

dbt and Airflow are cornerstone tools in the modern data stack, each excelling in different areas of data workflows. Together, dbt and Airflow provide the flexibility and scalability needed to handle complex, end-to-end workflows.

This article delves into what dbt and Airflow are, why they work so well together, and the challenges teams face when managing them independently. It also explores how Datacoves offers a fully managed solution that simplifies operations, allowing organizations to focus on delivering actionable insights rather than managing infrastructure.

dbt (Data Build Tool) is an open-source analytics engineering framework that transforms raw data into analysis-ready datasets using SQL. It enables teams to write modular, version-controlled workflows that are easy to test and document, bridging the gap between analysts and engineers.

Apache Airflow is an open-source platform designed to orchestrate workflows and automate tasks. Initially created for ETL processes, it has evolved into a versatile solution for managing any sequence of tasks in data engineering, machine learning, or beyond.

While dbt excels at SQL-based data transformations, dbt Core has no built-in scheduler, and dbt Cloud’s scheduling capabilities are limited to triggering jobs in isolation or responding to a trigger from an external source. This approach risks running transformations on stale or incomplete data if upstream processes fail. Airflow eliminates this risk by orchestrating tasks across the entire pipeline, ensuring transformations occur at the right time as part of a cohesive, integrated workflow.

Tools like Airbyte and Fivetran also provide built-in schedulers, but these are designed to load data at a given time and optionally trigger a dbt pipeline. As complexity grows and organizations need to trigger dbt pipelines after data loads from different tools, such as dlt and Fivetran, this simple approach does not scale. It is also common to trigger operations after a dbt pipeline, and scheduling with the data loading tool cannot handle that complexity. With dbt and Airflow, a team can connect the entire process and ensure that downstream processes don’t run if upstream tasks fail or are delayed.
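The guarantee described above, that downstream work does not run when something upstream fails, can be illustrated without Airflow itself. The sketch below uses Python's standard graphlib module to run tasks in dependency order and mark everything downstream of a failure as upstream_failed (a state name borrowed from Airflow). The task names are hypothetical, and a real Airflow DAG would express the same dependencies with operators and the >> syntax rather than this helper.

```python
from graphlib import TopologicalSorter
from typing import Callable


def run_pipeline(deps: dict[str, set[str]],
                 tasks: dict[str, Callable[[], None]]) -> dict[str, str]:
    """Run each task after its upstreams; skip tasks whose upstreams did not succeed."""
    status: dict[str, str] = {}
    for name in TopologicalSorter(deps).static_order():
        # Topological order guarantees every upstream already has a status.
        if any(status[up] != "success" for up in deps.get(name, set())):
            status[name] = "upstream_failed"  # never run on stale or partial data
            continue
        try:
            tasks[name]()
            status[name] = "success"
        except Exception:
            status[name] = "failed"
    return status


def ok() -> None:
    pass


def failing_load() -> None:
    raise RuntimeError("Fivetran sync did not finish")


# Two loaders feed dbt; dashboards refresh only after a successful dbt build.
deps = {
    "load_fivetran": set(),
    "load_dlt": set(),
    "dbt_build": {"load_fivetran", "load_dlt"},
    "refresh_dashboards": {"dbt_build"},
}
tasks = {"load_fivetran": failing_load, "load_dlt": ok, "dbt_build": ok, "refresh_dashboards": ok}
print(run_pipeline(deps, tasks))
```

Here the failed Fivetran load stops both the dbt build and the dashboard refresh, while the independent dlt load still succeeds.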

Airflow centralizes orchestration, automating the timing and dependencies of tasks—extracting and loading data, running dbt transformations, and delivering outputs. This connected approach reduces inefficiencies and ensures workflows run smoothly with minimal manual intervention.

Modern data workflows extend beyond SQL transformations. Airflow complements dbt by supporting complex, multi-stage processes such as integrating APIs, executing Python scripts, and training machine learning models. This flexibility allows pipelines to adapt as organizational needs evolve.

Airflow also provides a centralized view of pipeline health, offering data teams complete visibility. With its ability to trace issues and manage dependencies, Airflow helps prevent cascading failures and keeps workflows reliable.

By combining dbt’s transformation strengths with Airflow’s orchestration capabilities, teams can move past fragmented processes. Together, these tools enable scalable, efficient analytics workflows, helping organizations focus on delivering actionable insights without being bogged down by operational hurdles.

In our previous article, we discussed building vs. buying your Airflow and dbt infrastructure. There are many cons associated with self-hosting these two tools, but Datacoves takes the complexity out of managing dbt and Airflow by offering a fully integrated, managed solution. Datacoves has given many organizations the flexibility of open-source tools with the freedom of managed tools. See how we helped Johnson and Johnson MedTech migrate to our managed dbt and Airflow platform.

Datacoves offers the most flexible and robust managed dbt Core environment on the market, enabling teams to fully harness the power of dbt without the complexities of infrastructure management, environment setup, or upgrades. Here’s why our customers choose Datacoves to implement dbt:

Datacoves offers a fully managed Airflow environment, designed for scalability, reliability, and simplicity. Whether you're orchestrating complex ETL workflows, triggering dbt transformations, or integrating with third-party APIs, Datacoves takes care of the heavy lifting by managing the Kubernetes infrastructure, monitoring, and scaling. Here’s what sets Datacoves apart as a managed Airflow solution:

dbt and Airflow are a natural pair in the Modern Data Stack. dbt’s powerful SQL-based transformations enable teams to build clean, reliable datasets, while Airflow orchestrates these transformations within a larger, cohesive pipeline. Their combination allows teams to focus on delivering actionable insights rather than managing disjointed processes or stale data.

However, managing these tools independently can introduce challenges, from infrastructure setup to scaling and ongoing maintenance. That’s where platforms like Datacoves make a difference. For organizations seeking to unlock the full potential of dbt and Airflow without the operational overhead, solutions like Datacoves provide the scalability and efficiency needed to modernize data workflows and accelerate insights.

Book a call today to see how Datacoves can help your organization realize the power of Airflow and dbt.


The world of data moves at a lightning-fast pace, and you may be looking to keep up by migrating your data to a modern infrastructure. As you plan your data migration, you’ll quickly see the many moving parts involved, from data compatibility and security to performance optimization. Choosing the right partner is critical; making the wrong choice can lead to data loss or corruption, compliance failures, project delays, hidden costs, and more. At worst, you could end up with a costly new process that fails to gain user adoption! This article provides 10 key factors to consider in a partner to ensure these pitfalls don’t happen to you, guiding you toward a smooth and successful migration. Let's dive in!

Data migration is the process of moving data pipelines from one platform to another. This process can include upgrading or replacing legacy platforms, performing critical maintenance, or transitioning to new infrastructure such as a cloud platform. Whether it's moving data to a modern data center or migrating workloads to the cloud, data migration is a pivotal undertaking that demands meticulous planning and execution.

Organizations may embark on this complex journey for many reasons. A common driver is the need to modernize and adopt cutting-edge solutions like cloud platforms such as Snowflake, which offer unparalleled scalability, performance, and the flexibility of ephemeral resources. Data migration may also be necessitated by mergers and acquisitions, where consolidating and standardizing data across multiple systems becomes essential for unified operations. Additionally, organizations might pursue migration to improve security, streamline workflows, or boost analytics capabilities.

Done right, data migration can be transformative, enhancing data usage and enabling organizations to unlock new opportunities for efficiency, deeper insights, and strategic growth.

Migrating data is a complex undertaking with many moving parts that vary based on your current system and the target system. Careful assessment of your current state and your desired future state is a critical step that should never be overlooked in this planning process. Key considerations include data security, optimizing configurations in the new environment, and transitioning existing pipelines seamlessly. Joe Reis and Matt Housley often emphasize that much of data engineering revolves around "plumbing"—the foundational connections and data flows—which must be meticulously managed for any successful migration.

A lift-and-shift approach, where pipelines are simply moved without modifications, should be avoided as much as possible. This method often undermines the purpose of migrating in the first place: to capitalize on modern features and enhancements offered by newer tools, such as dbt, to improve data quality, documentation, and impact analysis. Moving to dbt without re-thinking how data is cleansed and transformed can lead to outcomes that are worse than your current state such as increased compute costs and difficulty in debugging issues.

Given these complexities, detailed planning, skilled execution, prioritization, decommissioning unused assets, and effective risk management are crucial for a successful migration. Achieving this demands experienced professionals who can execute flawlessly while remaining adaptable to unexpected challenges.

As we have seen above, there are many complexities when it comes to data migration, making the selection of the right partner paramount. Choosing the wrong partner can potentially lead to longer implementation times, hidden costs, project failure, compliance failures, data loss and corruption, and lost opportunity costs. Let’s discuss each of these in a little more detail.

Inexperienced partners can cause significant delays due to suboptimal choices in planning, technology selection, and execution. These inefficiencies can lead to frequent setbacks, resource mismanagement, and potentially catastrophic roadblocks. Prolonged implementation timelines may also result in missed opportunities to capture market value and reduce time-to-insight, while eroding trust in a system that has yet to be fully implemented.

Hiring the wrong partner often results in unforeseen costs due to extended project timelines as mentioned above, poor resource allocation, and the need for rework when initial efforts fall short. These hidden costs may include increased labor expenses, additional technology investments to rectify poor initial solutions, and higher costs associated with resolving data security or compliance issues. Budget overruns and unexpected expenses from lack of foresight, poor risk management, and inefficiency can quickly erode ROI.

A poorly executed data migration can lead to a new process that underperforms, costs more, or fails to gain user adoption. When users reject a poorly implemented system, organizations may be forced to maintain legacy systems, further compounding costs and delaying innovation. Worse still, critical data may be unusable or inconsistent, undermining trust in data-driven initiatives.

Hiring the right partner is essential for ensuring compliance with data regulations, industry standards, and security best practices. Without expertise in these areas, there is a heightened risk of data breaches, non-compliance fines, and reputational damage due to mishandling sensitive information. Such failures can lead to costly legal ramifications, operational downtime, and diminished customer trust.

Inadequate planning, testing, or execution can result in the loss or corruption of critical data during migration. Poor data management practices, such as insufficient backups, improper mapping of data fields, or inadequate validation procedures, can compromise data integrity and create gaps in your data sets. Data loss and corruption can disrupt business operations, degrade analytics capabilities, and require extensive rework to correct.

Choosing the wrong partner can lead to missed opportunities for optimizing data processes, modernizing workflows, and unlocking valuable business insights. Every moment spent fixing issues or addressing inefficiencies due to poor implementation represents lost time that could have been invested in enhancing data quality, streamlining operations, and driving strategic initiatives. This opportunity cost is often overlooked but can be the difference between gaining a competitive edge and falling behind.

Datacoves does not do data migrations, but we see companies hire migration partners to do this work as they implement our platform. Through our experience, we have compiled a list of 10 key factors to consider when selecting a data migration partner. Carefully evaluating these factors can significantly increase the likelihood of success for your data migration plan and ensure a smoother overall process.

When selecting a data migration partner, it’s crucial to thoroughly review their case studies, references, and client testimonials. Focus on case studies that feature companies with similar starting points and objectives to your own. Approach client testimonials with a discerning eye and validate their claims by contacting references. This is an excellent opportunity to determine whether the partner is merely focused on checking tasks off a to-do list or genuinely dedicated to setting things up correctly the first time, with a passion for leaving your organization in a strong position. While this may seem like a considerable effort, such diligence is essential for investing in your data’s success and ensuring the partner can deliver on their promises.

Building on the importance of a proven track record, this factor emphasizes the need for technical depth. Verify that your potential partner is proficient in overarching data terminology and best practices, with deep familiarity with areas such as data architecture, data modeling, data governance, data integration, and security protocols. A qualified data partner must have the expertise necessary to successfully guide you through every phase of your data migration. Skipping this crucial step can lead to poorly structured data, compromised system performance, and numerous missed opportunities for optimization.

Project management and communication are often overlooked when selecting a data migration partner, yet they play a critical role in ensuring a successful project. When evaluating potential partners, consider asking the following questions to assess their project management and communication capabilities:

This is by no means an exhaustive list of questions but rather a great starting point. The right partner should feel like a leader rather than a liability, demonstrating their expertise in a proactive manner. This ensures you don’t have to constantly direct their work but can trust them to drive the project forward effectively.

A common theme for a successful partnership is deep expertise, and this is especially true for industry-specific knowledge. Every industry has its unique challenges and pitfalls when it comes to data. It is important to seek out partners who are experts in your industry and have a proven track record of successfully guiding similar organizations to their goals. For example, if your organization operates within the Health and Life Sciences sector, a partner with experience exclusively in Retail may lack the nuanced understanding required for your specific data needs, such as handling PII data, adhering to stringent regulatory compliance, or managing complex clinical trial data. While industry familiarity shouldn’t necessarily be a dealbreaker for every organization, it can be critical for sectors like Health and Life Sciences due to their high regulatory demands. Other industries may find it less restrictive, which is why it remains a key factor to consider when finding the right fit. See how Datacoves helped J&J achieve a 66% reduction in data processing with their Modern Data Platform, best practices, and accelerators.

A partner's ability to minimize downtime, prevent data loss, and mitigate security risks throughout the migration process is essential to avoiding catastrophic consequences such as prolonged system outages, data breaches, or compliance failures. A comprehensive risk mitigation strategy ensures that every aspect of your data migration is thoughtfully planned and executed with contingencies in place. Ask potential partners how they approach risk assessment, what protocols they follow to maintain data integrity, and how they handle unexpected issues. The right partner will proactively identify potential risks and implement measures to address them, providing you with peace of mind during what can be an otherwise complex and challenging process.

A successful data migration partner should offer tailored solutions rather than relying on one-size-fits-all approaches. Every organization’s data needs are unique, and flexibility in meeting those needs is extremely important. Consider how a partner adapts their strategy and tools to align with your specific requirements, workflows, and constraints. Do they take the time to understand your goals and develop a plan accordingly, or do they push prepackaged solutions? The ability to customize their approach can be the difference between a migration that delivers optimal business value and one that merely "gets the job done."

Data migration doesn’t end with the initial project. A strong partner should offer ongoing support, optimization, and strategic guidance post-migration to ensure continued value from your data infrastructure. Ask about their approach to post-migration support: Will they provide continued monitoring, performance optimization, and assistance—and for how long? The best partners view your success as an ongoing journey, bringing the expertise needed to continuously refine and enhance your data systems. Their commitment to getting things right the first time minimizes future issues and demonstrates a vested interest in your long-term success. By prioritizing a forward-thinking approach, they ensure your data systems are built to last, rather than quickly implemented and forgotten. This is why Datacoves goes beyond just providing tools; we offer accelerators and best practices designed to help you implement dbt successfully, ensuring a strong foundation for your data transformation journey. We work with strategic migration partners that will help you set things up the right way and are around for the long haul.

For many organizations, the geographic location of a data migration partner can impact communication and project efficiency. Consider whether the partner’s working hours overlap with yours. How will they handle urgent requests or collaboration across different time zones? Effective time zone alignment can enhance communication, reduce delays, and ensure faster resolution of issues. The last thing you want is to find an issue and not be able to get an answer until the next day.

Successful data migration extends beyond the technical execution and tooling—it also requires effective change management. A capable partner will help your organization navigate the changes associated with data migration, including new processes, systems, and ways of working. How do they support employee training, communication, and adoption of new tools? Do they provide resources and strategies to ensure a smooth transition? Partners with a strong change management focus will work with you to minimize disruptions and maximize user adoption.

When evaluating potential partners, keep in mind that while their team lead may be highly technical, the team members you’ll work with day-to-day might not always match that level of expertise. Ensure that the team members working on your project possess relevant certifications for the key technologies you use. Certifications, such as dbt Certification, Snowflake Certification, or other relevant credentials, demonstrate expertise and a commitment to staying current with industry standards and best practices. Ask potential partners to provide proof of certification and inquire about how their team keeps pace with evolving technologies. While certifications alone don’t guarantee proficiency, they offer a solid starting point for assessing skill and commitment. This assurance of expertise can significantly impact the success of your project.

Cost should not be the determining factor when hiring a migration partner. It is an essential consideration because it directly affects your project budget, but you must also consider the total cost of ownership of your new platform. The quality of the initial migration shapes your ongoing costs in the long term. A low-cost partner is likely to lack several of the qualities listed above, and your migration team may be staffed with inexperienced members. The migration will get done, but how much technical debt will you accumulate along the way?

Avoid simply searching for the lowest-cost vendor. Although this may lower upfront expenses, it often leads to higher costs over time due to errors, inefficiencies, and rework. Projects that are rushed or handled without proper expertise tend to exceed their budgets and take longer to complete. They are also harder to maintain in the long run because they weren’t done correctly or optimized from the start. Experienced partners bring significant value by doing the work right, and to a high standard, from the beginning. A partner that meets most, if not all, of the key factors above will likely require a larger upfront investment. Treat it as an investment in expertise that helps mitigate long-term costs and risks.

Choosing the right data migration partner is key to minimizing risks and ensuring optimal outcomes for your organization. The complexities and challenges of data migration demand a partner with proven expertise, industry-specific knowledge, effective communication, flexibility, and a commitment to long-term support. Each of the factors outlined above plays a vital role in determining the success of your migration project—potentially saving your organization from costly delays, hidden expenses, compliance pitfalls, and lost business opportunities.

Carefully evaluate potential partners using these key considerations to ensure you select a partner who will not only meet your immediate data migration needs but also support your organization’s continued success and growth. 📈

Datacoves includes built-in best practices and accelerators drawn from our deep expertise in dbt, Airflow, and Snowflake. Our platform is designed to simplify your data transformation journey while providing excellent value by reducing your reliance on costly consultants. With our baked-in best practices, our customers have achieved faster implementations, enhanced efficiency, and long-term scalability.

Any experienced data engineer will tell you that efficiency and resource optimization are always top priorities. One powerful feature that can significantly optimize your dbt CI/CD workflow is dbt Slim CI. Despite its benefits, however, Slim CI has had a persistent limitation: governance checks that depend on built models can force the same models to be rebuilt repeatedly. The --empty flag, added in dbt 1.8, addresses this issue. In this article, we share a GitHub Actions workflow and demonstrate how the new --empty flag can save you time and resources.

dbt Slim CI is designed to make your continuous integration (CI) process more efficient. It runs only the models that have changed and their downstream dependents, rather than running all models during every CI build. In large projects, this can lead to significant savings in both compute resources and time.

dbt Slim CI relies on these flags:

--select state:modified+: The state:modified selector picks the models whose "state" has changed compared with the production manifest. Adding the + suffix (state:modified+) also selects their downstream dependents. As a result, dbt runs only the modified models and everything that depends on them.

--state <path to production manifest>: The --state flag specifies the directory where the artifacts from a previous dbt run are stored, i.e., the production dbt manifest. By comparing the current branch's manifest with the production manifest, dbt can identify which models have been modified.

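--defer: The --defer flag covers upstream models that are not selected in the current run because they have not changed. It tells dbt to resolve references to those models to their existing relations in a different environment (database), such as production. Why rebuild something that already exists elsewhere? For this to work, dbt needs access to the production manifest supplied through --state.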
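To make the selection logic concrete, here is a simplified Python sketch of what state:modified+ does. It assumes each manifest has already been loaded into a dict. The structure is reduced to a flat checksum string and a flat list of parent IDs per node, and the node IDs and function names are hypothetical. dbt's real manifest.json nests these fields, and its state:modified check compares more than file checksums (configs, macros, and other properties). The sketch only illustrates the two ideas: compare against production to find changed nodes, then add everything downstream.

```python
from collections import deque


def modified_nodes(current: dict, production: dict) -> set[str]:
    """Return IDs of nodes that are new or whose checksum differs from production.

    Simplification: real dbt state comparison also looks at configs and more.
    """
    changed = set()
    for node_id, node in current["nodes"].items():
        prod_node = production["nodes"].get(node_id)
        if prod_node is None or prod_node["checksum"] != node["checksum"]:
            changed.add(node_id)
    return changed


def with_descendants(manifest: dict, selected: set[str]) -> set[str]:
    """Expand a selection with all downstream dependents (the '+' suffix)."""
    # Build a parent -> children index from each node's list of parents.
    children: dict[str, list[str]] = {}
    for node_id, node in manifest["nodes"].items():
        for parent in node["depends_on"]:
            children.setdefault(parent, []).append(node_id)

    # Breadth-first walk down the DAG from every selected node.
    result = set(selected)
    queue = deque(selected)
    while queue:
        for child in children.get(queue.popleft(), []):
            if child not in result:
                result.add(child)
                queue.append(child)
    return result


# Toy manifests (hypothetical node IDs): only stg_orders was edited on the branch.
production = {"nodes": {
    "model.stg_orders": {"checksum": "a1", "depends_on": []},
    "model.orders": {"checksum": "b1", "depends_on": ["model.stg_orders"]},
    "model.customers": {"checksum": "c1", "depends_on": []},
}}
current = {"nodes": {
    "model.stg_orders": {"checksum": "a2", "depends_on": []},
    "model.orders": {"checksum": "b1", "depends_on": ["model.stg_orders"]},
    "model.customers": {"checksum": "c1", "depends_on": []},
}}

selected = with_descendants(current, modified_nodes(current, production))
print(sorted(selected))  # ['model.orders', 'model.stg_orders']
```

In this toy example, customers is left out of the run entirely. With --defer, any reference to an unselected model like it would resolve to the production relation.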

A Slim CI command can also include optimization flags beyond these three:

--fail-fast: The --fail-fast flag is an optimization that is not essential to a barebones Slim CI but can provide meaningful cost savings. It stops the run as soon as an error is encountered instead of letting dbt continue building the remaining models, which reduces wasted builds. To learn more about these arguments, have a look at our dbt cheatsheet.

Our sample GitHub Actions workflow runs when a pull request is opened, i.e., when you have a feature branch that you want to merge into main. It performs the following steps:

Checkout Branch: The workflow begins by checking out the branch associated with the pull request to ensure that the latest code is being used.

Set Secure Directory: This step ensures the repository directory is marked as safe, preventing potential issues with Git operations.

List of Files Changed: This command lists the files changed between the PR branch and the base branch. The list provides context for the changes and helps with debugging.

Install dbt Packages: This step installs all required dbt packages, ensuring the environment is set up correctly for the dbt commands that follow.

Create PR Database: This step creates a dedicated database for the PR, isolating the changes and tests from the production environment.

Get Production Manifest: Retrieves the production manifest file, which will be used for deferred runs and governance checks in the following steps.

Run dbt Build in Slim Mode or Run dbt Build Full Run: If a production manifest is present, dbt runs in slim mode with deferral, building only the modified models and their downstream dependents. If no production manifest is present, dbt builds the entire project instead.

Grant Access to PR Database: Grants the necessary access to the new PR database for end user review.

Generate Docs Combining Production and Branch Catalog: If the only change is a dbt test added to a YAML file, the model itself is not modified. It is therefore not built and will not be present in the PR database. However, some governance checks (dbt-checkpoint) rely on the model's entry in catalog.json, which dbt generates by inspecting the database, and those checks fail if the model is missing. To solve this, the generate docs step merges the catalog.json from the current branch with the production catalog.json.

Run Governance Checks: Executes governance checks such as SQLFluff and dbt-checkpoint.
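The catalog merge in the Generate Docs step can be sketched in Python as follows. This is a minimal illustration, not the workflow's actual script. It assumes both catalog.json files have been parsed into dicts whose nodes and sources sections are keyed by unique ID, and the function names, paths, and sample entries are hypothetical (real catalog entries carry more fields). Entries from the branch, which were built in the PR database, take precedence. Anything that was deferred, and therefore never built in the PR database, falls back to its production entry.

```python
import json
from pathlib import Path


def merge_catalogs(production: dict, branch: dict) -> dict:
    """Overlay the branch catalog on top of the production catalog."""
    merged = dict(branch)  # keep the branch's metadata and other top-level keys
    for section in ("nodes", "sources"):
        # Later keys win, so branch entries replace production ones with the same ID.
        merged[section] = {**production.get(section, {}), **branch.get(section, {})}
    return merged


def merge_catalog_files(prod_path: Path, branch_path: Path, out_path: Path) -> None:
    """Read two catalog.json files and write the merged catalog (hypothetical paths)."""
    merged = merge_catalogs(
        json.loads(prod_path.read_text()), json.loads(branch_path.read_text())
    )
    out_path.write_text(json.dumps(merged, indent=2))


# Toy example: the PR only added a YAML test on "customers", so that model was
# not built in the PR database and is missing from the branch catalog.
production = {"nodes": {
    "model.orders": {"columns": {"ORDER_ID": {"type": "NUMBER"}}},
    "model.customers": {"columns": {"CUSTOMER_ID": {"type": "NUMBER"}}},
}, "sources": {}}
branch = {"nodes": {
    "model.orders": {"columns": {"ORDER_ID": {"type": "NUMBER"},
                                 "AMOUNT": {"type": "NUMBER"}}},
}, "sources": {}}

merged = merge_catalogs(production, branch)
print(sorted(merged["nodes"]))  # ['model.customers', 'model.orders']
```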

As mentioned at the beginning of the article, this setup has a limitation. In the existing workflow, governance checks must run after the dbt build step because dbt-checkpoint relies on manifest.json and catalog.json. If these governance checks fail, the dbt build step has to run again once the governance issues are fixed. As shown in the diagram below, after running our dbt build, we proceed with governance checks. If these checks fail, we need to resolve the issue and re-trigger the pipeline, which leads to another dbt build. This cycle can cause unnecessary model builds even when leveraging dbt Slim CI.

The solution to this problem is the --empty flag introduced in dbt 1.8. This flag limits ref() and source() inputs to zero rows. dbt still executes each model's SQL and creates the relation with its full schema, but it avoids reading and processing large datasets. It's like building the wooden frame of a house: it sets up the structure, including the metadata needed for governance checks, without filling it with data. The framework is there but the data is left out, so you can perform governance checks without completing an actual build.
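Conceptually, --empty works by replacing each upstream input with a subquery that can never return rows. The Python sketch below imitates that substitution on a toy template. The {name} placeholder syntax, the relation names, and the function names are hypothetical, and the exact SQL dbt renders varies by adapter and version. The point is that the model's SQL still runs, so its columns and types are created, while no input data is read.

```python
def render_empty_input(relation: str) -> str:
    """Wrap an upstream relation in a subquery that returns zero rows."""
    return f"(select * from {relation} where false limit 0)"


def compile_model(template: str, inputs: dict[str, str], empty: bool) -> str:
    """Fill hypothetical {name} placeholders with upstream relations.

    With empty=True, each input is swapped for a zero-row subquery, which is
    the idea behind dbt's --empty flag (simplified illustration).
    """
    rendered = {
        name: render_empty_input(relation) if empty else relation
        for name, relation in inputs.items()
    }
    return template.format(**rendered)


template = (
    "select o.order_id, c.customer_name "
    "from {orders} as o join {customers} as c on o.customer_id = c.customer_id"
)
inputs = {"orders": "prod.sales.orders", "customers": "prod.sales.customers"}

print(compile_model(template, inputs, empty=False))  # normal build: reads real data
print(compile_model(template, inputs, empty=True))   # empty build: same shape, no rows
```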

Let’s see how we can rework our GitHub Actions workflow:

Checkout Branch: The workflow begins by checking out the branch associated with the pull request to ensure that the latest code is being used.

Set Secure Directory: This step ensures the repository directory is marked as safe, preventing potential issues with Git operations.

List of Files Changed: This step lists the files changed between the PR branch and the base branch. The list provides context for the changes and helps with debugging.

Install dbt Packages: This step installs all required dbt packages, ensuring the environment is set up correctly for the dbt commands that follow.

Create PR Database: This command creates a dedicated database for the PR, isolating the changes and tests from the production environment.

Get Production Manifest: Retrieves the production manifest file, which will be used for deferred runs and governance checks in the following steps.

*NEW* Governance Run of dbt (Slim or Full) with EMPTY Models: This step runs dbt with the --empty flag, in slim mode if there is a manifest in production and as a full run otherwise. The models are built in the PR database with no data inside. The resulting metadata lets us generate the catalog.json needed for our governance checks. The models are empty yet still give us everything we need to run our checks, so we save on both compute costs and run time.

Generate Docs Combining Production and Branch Catalog: If the only change is a dbt test added to a YAML file, the model itself is not modified. It is therefore not built and will not be present in the PR database. However, some governance checks (dbt-checkpoint) rely on the model's entry in catalog.json, which dbt generates by inspecting the database, and those checks fail if the model is missing. To solve this, the generate docs step merges the catalog.json from the current branch with the production catalog.json.

Run Governance Checks: Executes governance checks such as SQLFluff and dbt-checkpoint.

Run dbt Build: After the governance checks pass, runs dbt build in slim mode or as a full run.

Grant Access to PR Database: Grants the necessary access to the new PR database for end user review.
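The order of the dbt commands in the reworked workflow can be sketched in Python as a list of argument vectors. The sketch rests on several assumptions: the production artifacts are downloaded to a local directory, and the governance pass uses dbt run --empty (dbt build also accepts --empty). The helper names are hypothetical. Non-dbt steps such as checkout, database creation, catalog merging, governance checks, and grants are omitted.

```python
import tempfile
from pathlib import Path


def dbt_args(command: str, prod_state_dir: Path, empty: bool = False) -> list[str]:
    """Build a dbt command line: slim if a production manifest exists, else full."""
    args = ["dbt", command]
    if (prod_state_dir / "manifest.json").exists():
        # Slim mode: modified models plus downstream; unchanged parents deferred.
        args += ["--select", "state:modified+", "--defer", "--state", str(prod_state_dir)]
    # Without a production manifest no --select is added, so dbt runs everything.
    if empty:
        args.append("--empty")  # build relations with schemas but zero rows
    args.append("--fail-fast")
    return args


def reworked_pipeline(prod_state_dir: Path) -> list[list[str]]:
    """Ordered dbt commands for the reworked PR workflow (hypothetical helper)."""
    return [
        ["dbt", "deps"],
        dbt_args("run", prod_state_dir, empty=True),  # cheap governance run
        ["dbt", "docs", "generate"],                  # produces catalog.json
        # ... merge catalogs, then run SQLFluff and dbt-checkpoint here ...
        dbt_args("build", prod_state_dir),            # real build, after checks pass
    ]


with tempfile.TemporaryDirectory() as tmp:
    state_dir = Path(tmp)
    full_steps = reworked_pipeline(state_dir)  # no production manifest yet
    (state_dir / "manifest.json").write_text("{}")
    slim_steps = reworked_pipeline(state_dir)  # production manifest present

for step in slim_steps:
    print(" ".join(step))
```

Keeping the slim-or-full decision in one helper means the empty governance run and the real build always select the same models.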

By leveraging the dbt --empty flag, we can materialize models in the PR database without the computational overhead of processing data, because the actual data is left out. We then use the metadata generated during the empty build for our governance checks. If any checks fail, we can repeat the process without worrying about wasting compute on an actual build. The cycle still exists, but we have moved the real build out of it and replaced it with an empty build. Once all governance checks have passed, we proceed with the real dbt build of the models, as seen in the diagram below.
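A quick way to see the savings is to count builds. The sketch below assumes that each governance failure leads to one fix and one re-triggered pipeline, and that the final real build succeeds. The function name is hypothetical.

```python
def count_builds(governance_failures: int, empty_governance_run: bool) -> dict[str, int]:
    """Count real (data) builds vs empty builds until governance checks pass.

    Each failure means a fix plus a re-triggered pipeline, so the pipeline
    runs governance_failures + 1 times in total.
    """
    pipeline_runs = governance_failures + 1
    if empty_governance_run:
        # Reworked workflow: every run does a cheap --empty build, and the real
        # build happens only once, after the checks pass.
        return {"real_builds": 1, "empty_builds": pipeline_runs}
    # Original workflow: every pipeline run starts with a real build.
    return {"real_builds": pipeline_runs, "empty_builds": 0}


print(count_builds(2, empty_governance_run=False))  # {'real_builds': 3, 'empty_builds': 0}
print(count_builds(2, empty_governance_run=True))   # {'real_builds': 1, 'empty_builds': 3}
```

With two governance failures, the original workflow performs three real builds. The reworked workflow performs three empty builds and a single real build.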