Enterprises increasingly rely on dbt (data build tool) for their data analytics; however, dbt wasn’t designed to be an enterprise-ready platform on its own. This leads to struggles with scalability, orchestration, governance, and operational efficiency when implementing dbt at scale. But if dbt is so amazing, why is this the case? As our title suggests, you need more than just dbt to have a successful dbt analytics implementation. Keep reading to learn exactly what you need to supercharge your data analytics with dbt.

Why Enterprises Adopt dbt for Data Transformation

dbt is popular because it solves problems facing the data analytics world. Enterprises today are dealing with growing volumes of data, making efficient data transformation a critical part of their analytics strategy. Traditionally, data transformation was handled using complex ETL (Extract, Transform, Load) processes, where data engineers wrote custom scripts to clean, structure, and prepare data before loading it into a warehouse. However, this approach has several challenges:

- Slow Development Cycles – ETL processes often required significant engineering effort, creating bottlenecks and slowing down analytics workflows.

- High Dependency on Engineers – Analysts and business users had to rely on data engineers to implement transformations, limiting agility.

- Difficult Collaboration & Maintenance – Custom scripts and siloed processes made it hard to track changes, ensure consistency, and maintain documentation.

dbt changes this paradigm by enabling SQL-based, modular, and version-controlled transformations directly inside the data warehouse. By following the ELT (Extract, Load, Transform) approach, dbt allows raw data to be loaded into the warehouse first and then transformed within the warehouse itself, leveraging the scalability and processing power of modern cloud data platforms.

Unlike traditional ETL tools, dbt applies software engineering best practices to SQL-based transformations, making it easier to develop, test, document, and scale data pipelines. This shift has made dbt a preferred solution for enterprises looking to empower analysts, improve collaboration, and create maintainable data workflows.

Key Benefits of dbt

- SQL-Based Transformations – dbt enables data teams to perform transformations within the data warehouse using standard SQL. Because dbt generates the DDL and DML statements that wrap each SELECT query, anyone with SQL skills can contribute to data modeling, which makes it more accessible to analysts and reduces reliance on specialized engineering resources.

- Automated Testing & Documentation – With more people contributing to data modeling, things can become a mess, but dbt shines by incorporating automated testing and documentation to ensure data reliability. With dbt, teams can follow a decentralized development pattern while maintaining centralized governance.

- Version Control & Collaboration – Borrowing from software engineering best practices, dbt enables teams to track changes using Git. Any change made to a data model can be clearly tracked and reverted, simplifying collaboration.

- Modular and Reusable Code – dbt's combination of SQL and Jinja enables modular and reusable code, significantly enhancing maintainability. Using Jinja, dbt allows users to define macros, which are reusable code snippets that encapsulate complex logic. This means fewer redundancies and consistent application of business rules across models.

- Scalability & Performance Optimization – dbt leverages the data warehouse’s native processing power, enabling incremental models that minimize recomputation and improve efficiency.

- Extensibility & Ecosystem – dbt integrates with orchestration tools (e.g., Airflow) and metadata platforms (e.g., DataHub), supporting a growing ecosystem of plugins and APIs.

With these benefits, it is clear why over 40,000 companies are leveraging dbt today!
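To make the incremental-model idea from the Scalability & Performance Optimization point more concrete, here is a minimal sketch in plain Python using the standard-library `sqlite3` module. It is not dbt code: the table names (`raw_orders`, `orders_clean`), the columns, and the helper functions are all hypothetical and chosen only for illustration. In dbt itself, the same "only process rows newer than what is already in the target" filter is typically written in SQL and guarded by the `is_incremental()` macro.

```python
import sqlite3


def incremental_load(conn: sqlite3.Connection) -> int:
    """Transform only rows newer than the newest row already in the target."""
    # High-water mark: latest timestamp already transformed (None on first run).
    (high_water,) = conn.execute(
        "SELECT MAX(loaded_at) FROM orders_clean"
    ).fetchone()
    (before,) = conn.execute("SELECT COUNT(*) FROM orders_clean").fetchone()

    # On the first run the mark is NULL, so every raw row qualifies.
    conn.execute(
        """
        INSERT INTO orders_clean (id, amount_usd, loaded_at)
        SELECT id, amount_cents / 100.0, loaded_at
        FROM raw_orders
        WHERE ? IS NULL OR loaded_at > ?
        """,
        (high_water, high_water),
    )
    conn.commit()

    (after,) = conn.execute("SELECT COUNT(*) FROM orders_clean").fetchone()
    return after - before  # number of rows added by this run


def demo() -> tuple:
    """Run two loads against a hypothetical in-memory warehouse."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE raw_orders (id INTEGER, amount_cents INTEGER, loaded_at TEXT);
        CREATE TABLE orders_clean (id INTEGER, amount_usd REAL, loaded_at TEXT);
        INSERT INTO raw_orders VALUES (1, 1250, '2024-01-01'), (2, 990, '2024-01-02');
        """
    )
    first = incremental_load(conn)  # full load: nothing in the target yet
    conn.execute("INSERT INTO raw_orders VALUES (3, 500, '2024-01-03')")
    second = incremental_load(conn)  # only the newly arrived row
    return first, second
```

Calling `demo()` runs a full load followed by an incremental one; the second run touches only the row that arrived after the first, which is where the savings in recomputation come from.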

The Challenges of Scaling dbt in the Enterprise

Despite dbt’s strengths, enterprises face several challenges when implementing it at scale:

Complexity of Scaling dbt

Running dbt in production requires robust orchestration beyond simple scheduled jobs. dbt only manages transformations, but a complete end-to-end pipeline also includes extracting, loading, and visualizing data. To manage the full end-to-end data pipeline (ELT + Viz), organizations need a full-fledged orchestrator like Airflow. While there are other orchestration options on the market, pairing Airflow with dbt is a common pattern.
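As a rough illustration of what an orchestrator adds beyond a scheduled dbt job, the sketch below models the ELT + Viz pipeline as a small dependency graph using Python's standard-library `graphlib`. This is not Airflow code; the task names and the `run_pipeline` helper are hypothetical, and a real orchestrator layers scheduling, retries, and alerting on top of this basic ordering logic.

```python
from graphlib import TopologicalSorter

# Hypothetical task names; each task maps to the tasks that must finish first.
PIPELINE = {
    "extract_and_load": set(),
    "dbt_build": {"extract_and_load"},
    "refresh_dashboards": {"dbt_build"},
}


def run_order(pipeline):
    """Return the tasks in an order that respects every dependency."""
    return list(TopologicalSorter(pipeline).static_order())


def run_pipeline(pipeline, actions):
    """Run each task's callable in dependency order.

    Simplification: the whole run stops at the first failure, so downstream
    steps never execute against stale or partially loaded data.
    """
    completed = []
    for task in TopologicalSorter(pipeline).static_order():
        if not actions[task]():
            break
        completed.append(task)
    return completed
```

With this shape, a failed transformation step prevents the dashboard refresh from running, which is the kind of cross-tool coordination a time-based schedule alone cannot guarantee.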

Lack of Integrated CI/CD & Development Controls

CI/CD pipelines are essential for dbt at the enterprise level, yet one of dbt Core’s major limitations is the lack of a built-in CI/CD pipeline for managing deployments. This makes workflows more complex and increases the likelihood of errors reaching production. To address this, teams can implement external tools like Jenkins, GitHub Actions, or GitLab CI/CD that provide a flexible and customizable CI/CD process to automate deployments and enforce best practices.

While dbt Cloud does offer an out-of-the-box CI/CD solution, it lacks customization options. Some organizations find that their use cases demand greater flexibility, requiring them to build their own CI/CD processes instead.
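As a sketch of the kind of check a custom CI job might run, the script below executes a short list of dbt commands in order and stops at the first non-zero exit code. `dbt deps` and `dbt build` are real dbt commands, but the step list, the function names, and the injectable `run` parameter are assumptions made for illustration; this is not a Datacoves accelerator script or an official dbt workflow.

```python
import subprocess
from typing import Callable, List, Sequence

# Hypothetical CI steps: install packages, then build and test every model.
CI_STEPS: List[List[str]] = [
    ["dbt", "deps"],
    ["dbt", "build"],
]


def shell_run(cmd: Sequence[str]) -> int:
    """Run one command and return its exit code."""
    return subprocess.run(list(cmd)).returncode


def run_ci(
    steps: Sequence[Sequence[str]] = CI_STEPS,
    run: Callable[[Sequence[str]], int] = shell_run,
) -> int:
    """Run each step in order; return the first non-zero exit code, else 0."""
    for cmd in steps:
        print("running:", " ".join(cmd))
        code = run(cmd)
        if code != 0:
            return code  # fail fast so a broken change never reaches deployment
    return 0
```

A CI runner such as Jenkins, GitHub Actions, or GitLab could call `run_ci()` and use its return value as the job's exit status. Passing a different `run` callable makes the step logic easy to exercise without dbt installed.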

Infrastructure & Deployment Constraints

Enterprises seek alternative solutions that provide greater control, scalability, and security over their data platform. However, this comes with the responsibility of managing their own infrastructure, which introduces significant operational overhead and cost. Solutions like dbt Cloud do not offer Virtual Private Cloud (VPC) deployment, full CI/CD flexibility, or a full-fledged orchestrator, leaving organizations to handle additional platform components.

We saw a need for a middle ground that combined the best of both worlds: something as flexible as dbt Core and Airflow, but fully managed like dbt Cloud. This led to Datacoves, which provides a seamless experience with no platform maintenance overhead or onboarding hassles. Teams can focus on generating insights from data and not worry about the platform.

Avoiding Vendor Lock-In

Vendor lock-in is a major concern for organizations that want to maintain flexibility and avoid being tied to a single provider. The ability to switch out tools easily without excessive cost or effort is a key advantage of the modern data stack. Enterprises benefit from mixing and matching best-in-class solutions that meet their specific needs.

How Datacoves Solves Enterprise dbt Challenges

Datacoves is a fully managed enterprise platform for dbt, solving the challenges outlined above. Below is how Datacoves' features align with enterprise needs:

Platform Capabilities

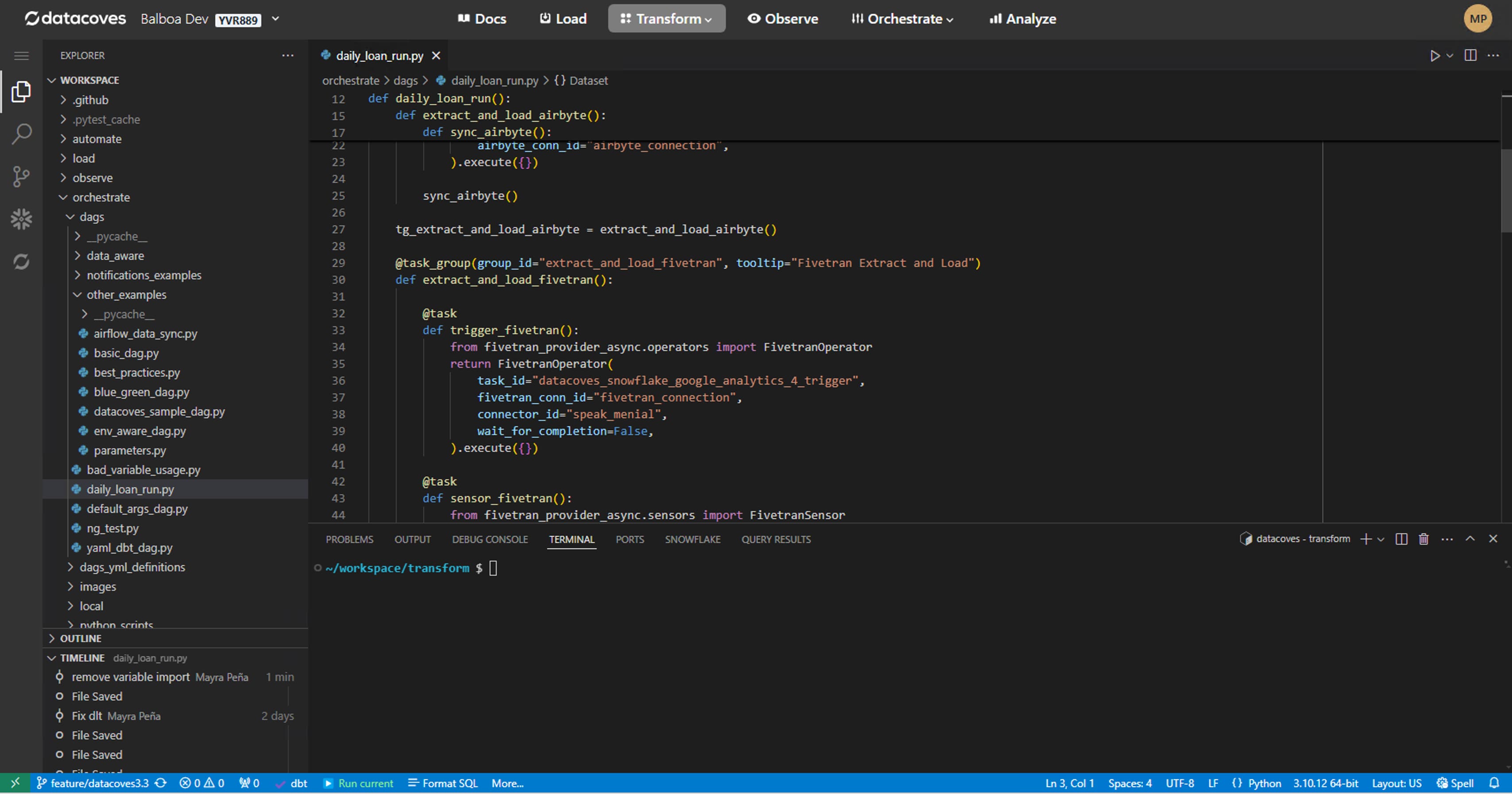

- Integrated Development Environment (IDE): Users can develop SQL and Python in a browser-based VS Code environment. This includes full access to the terminal, Python libraries, and VS Code extensions for the most customizable development experience.

- Managed Development Environment: Pre-configured VS Code, dbt, and Airflow setup for enterprise teams. Everything is managed, so project leads don’t have to worry about dependencies, Docker images, upgrades, or onboarding. Datacoves users can be onboarded to a new project in minutes, not days.

- Scalability & Flexibility: Kubernetes-powered infrastructure for elastic scaling. Users don’t have the operational overhead of managing their dbt and Airflow environments; they simply log in and everything just works.

- Version Control & Collaboration: Datacoves integrates seamlessly with Git services like GitHub, GitLab, Bitbucket, and Azure DevOps. When deployed in the customer’s VPC, Datacoves can even access private Git servers and Docker registries.

- Security & User Management: Datacoves can integrate Single Sign-On (SSO) for authentication and AD groups for role management.

- Use of Open-Source Tools: Built on standard dbt Core, Airflow, and VS Code to ensure maximum flexibility. At the end of the day, it is your code and you can take it with you.

Data Extraction and Loading

- With Datacoves, companies can leverage a managed Airbyte instance out of the box. However, if users are not using Airbyte or need additional EL tools, Datacoves integrates with enterprise EL solutions such as AWS Glue, Azure Data Factory, Databricks, StreamSets, etc. Additionally, since Datacoves supports Python development, organizations can leverage their custom Python frameworks or develop using tools like dlt (data load tool) with ease.

Data Transformation

- Support for SQL & Python: In addition to SQL or Python modeling via dbt, users can develop non-dbt Python scripts right within VS Code.

- Data Warehouse & Data Lake Support: As a platform, Datacoves is warehouse agnostic. It works with Snowflake, BigQuery, Redshift, Databricks, MS Fabric, and any other dbt-compatible warehouse.

Pipeline Orchestration

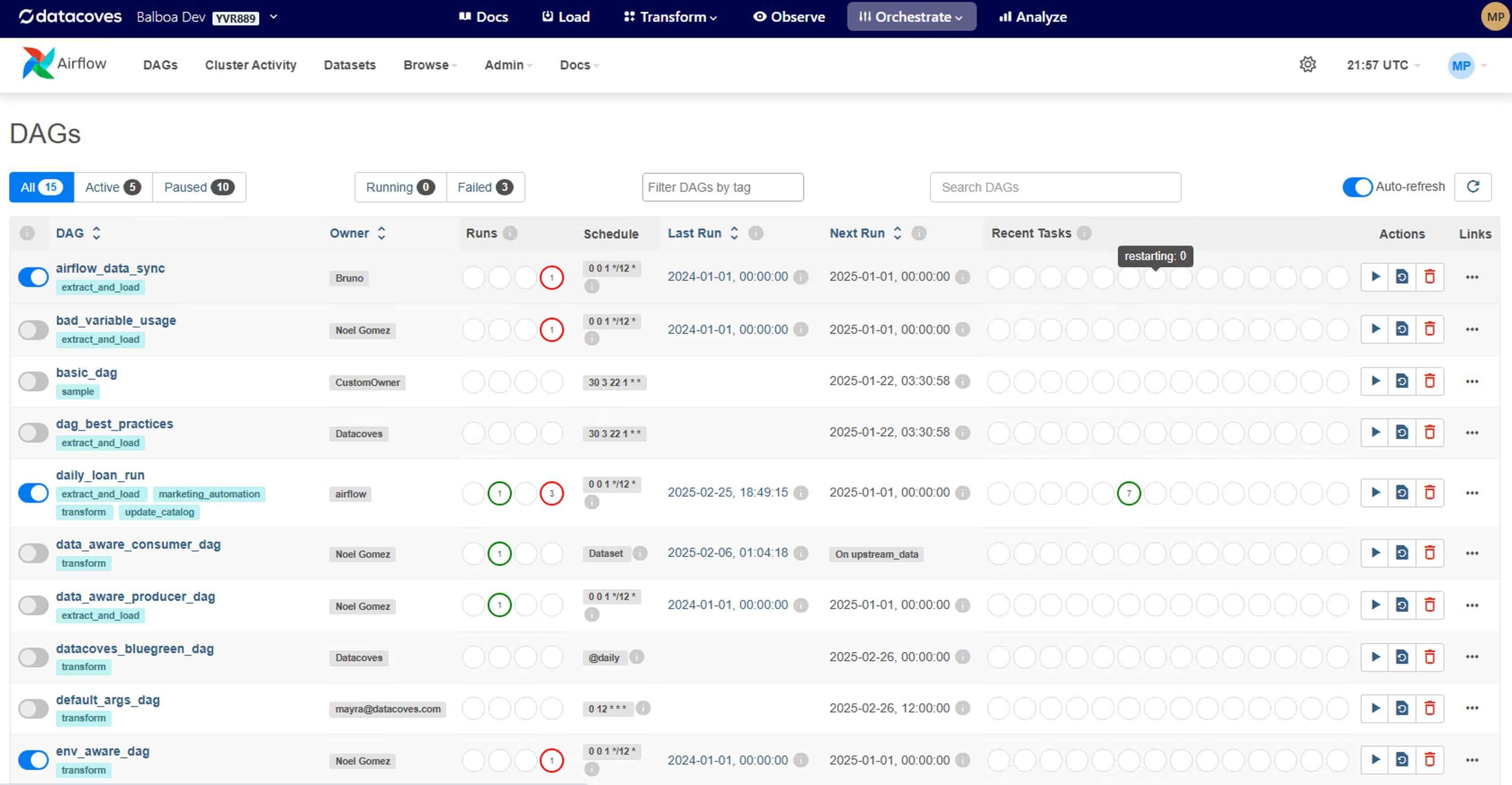

- Enterprise-Grade Managed Apache Airflow: By adopting a full-fledged orchestrator, developers can orchestrate the full ELT + Viz pipeline, minimizing cost and pipeline failures. One of the biggest benefits of Datacoves is its fully managed Airflow scheduler for data pipeline orchestration. Developers don’t have to worry about the infrastructure overhead or scaling headaches of managing their own Airflow.

- Developer Instance of Airflow ("My Airflow"): With a few clicks, developers can stand up a solo sandbox Airflow instance for testing DAGs before deployment. My Airflow can speed up DAG development by 20%+!

- Orchestrator Flexibility & Extensibility: Datacoves provides templated accelerators for creating Airflow DAGs and managing dbt runs. These best practices can be invaluable to an organization getting started or looking to optimize.

- Alerting & Monitoring: Out-of-the-box SMTP integration as well as support for custom SMTP, Slack, and Microsoft Teams notifications for proactive monitoring.

Data Quality and Governance

- Cross-project lineage via Datacoves Mesh (aka dbt Mesh): Have a large dbt project that would benefit from being split into multiple projects? Datacoves enables large-scale cross-team collaboration with cross-project dbt support.

- Enterprise-Grade Data Catalog (DataHub): Datacoves provides an optional hosted DataHub instance with column-level lineage for tracking data transformations, including cross-project column-level lineage support.

- CI/CD Accelerators: Need a robust CI/CD pipeline? Datacoves provides accelerator scripts for Jenkins, GitHub Actions, and GitLab CI/CD so teams don’t start at square one. These scripts are fully customizable to meet any team’s needs.

- Enterprise-Ready RBAC: Datacoves provides tools and processes that simplify Snowflake permissions while maintaining the controls necessary for securing PII data and complying with GDPR and CCPA regulations.

Licensing and Pricing Plans

Datacoves offers flexible deployment and pricing options to accommodate various enterprise needs:

- Deployment Options: Choose between Datacoves' multi-tenant SaaS platform or a customer-hosted Virtual Private Cloud (VPC) deployment, ensuring compliance with security and regulatory requirements.

- Scalable Pricing: Pricing structures are designed to scale to enterprise levels, optimizing costs as your data operations grow.

- Total Cost of Ownership (TCO): By providing a fully managed environment for dbt and Airflow, Datacoves reduces the need for in-house infrastructure management, lowering TCO by up to 50%.

Vendor Information and Support

Datacoves is committed to delivering enterprise-grade support and resources through our white-glove service:

- Dedicated Support: Comprehensive support packages, providing direct access to Datacoves' development team for timely assistance via Teams, Slack, and/or email.

- Documentation and Training: Extensive documentation and optional training packages to help teams effectively utilize the platform.

- Change Management Expertise: We know that true adoption does not lie with the tools but rather with change management. As a thought leader on the subject, Datacoves has guided many organizations through the implementation and scaling of dbt, ensuring a smooth transition and adoption of best practices.

Conclusion

Enterprises need more than just dbt to achieve scalable and efficient analytics. While dbt is a powerful tool for data transformation, it lacks the infrastructure, governance, and orchestration capabilities required for enterprise-level deployments. Datacoves fills these gaps by providing a fully managed environment that integrates dbt Core, VS Code, Airflow, and Kubernetes-based deployments, making it the ultimate solution for organizations looking to scale dbt successfully.
