Not long ago, the data analytics world relied on monolithic infrastructures—tightly coupled systems that were difficult to scale, maintain, and adapt to changing needs. These legacy setups often resulted in operational bottlenecks, delayed insights, and high maintenance costs. To overcome these challenges, the industry shifted toward what was deemed the Modern Data Stack (MDS)—a suite of focused tools optimized for specific stages of the data engineering lifecycle.

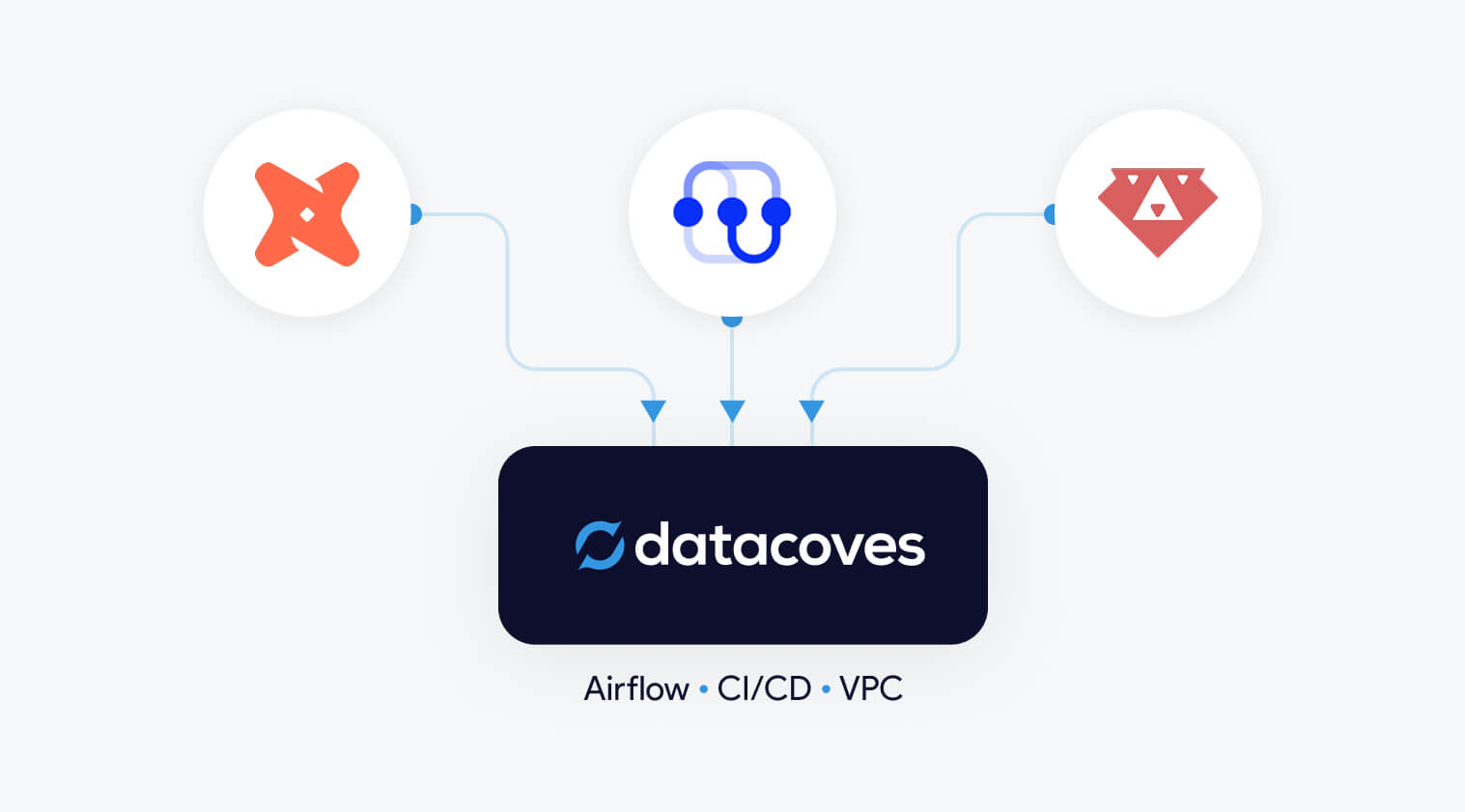

This modular approach was revolutionary, allowing organizations to select best-in-class tools like Airflow for Orchestration or a managed version of Airflow from Astronomer or Amazon without the need to build custom solutions. While the MDS improved scalability, reduced complexity, and enhanced flexibility, it also reshaped the build vs. buy decision for analytics platforms. Today, instead of deciding whether to create a component from scratch, data teams face a new question: Should they build the infrastructure to host open-source tools like Apache Airflow and dbt Core, or purchase their managed counterparts? This article focuses on these two components because pipeline orchestration and data transformation lie at the heart of any organization’s data platform.

When we say build in terms of open-source solutions, we mean building infrastructure to self-host and manage mature open-source tools like Airflow and dbt. These two tools are popular because they have been vetted by thousands of companies! In addition to hosting and managing, engineers must also ensure interoperability of these tools within their stack, handle security, scalability, and reliability. Needless to say, building is a huge undertaking that should not be taken lightly.

dbt and Airflow both started out as open-source tools, which were freely available to use due to their permissive licensing terms. Over time, cloud-based managed offerings of these tools were launched to simplify the setup and development process. These managed solutions build upon the open-source foundation, incorporating proprietary features like enhanced user interfaces, automation, security integration, and scalability. The goal is to make the tools more convenient and reduce the burden of maintaining infrastructure while lowering overall development costs. In other words, paid versions arose out of the pain points of self-managing the open-source tools.

This raises an important question: Should you self-manage or pay for your open-source analytics tools?

As with most things, both options come with trade-offs, and the “right” decision depends on your organization’s needs, resources, and priorities. By understanding the pros and cons of each approach, you can choose the option that aligns with your goals, budget, and long-term vision.

A team building Airflow in-house may spend weeks configuring a Kubernetes-backed deployment, managing Python dependencies, and setting up DAG file synchronization via S3 or Git. While the outcome can be tailored to their needs, the time and expertise required represent a significant investment.

Before moving on to the buy trade-offs, it is important to set the record straight. You may have noticed that we did not include “the tool is free to use” as one of our pros for building with open-source. As you might have guessed from the title of this section, many people incorrectly believe that building their MDS using open-source tools like dbt is free. In reality, many factors contribute to the true dbt pricing, and the same is true for Airflow.

How can that be? Well, setting up everything you need and managing infrastructure for Airflow and dbt isn’t necessarily plug and play. There is day-to-day work from managing Python virtual environments, keeping dependencies in check, and tackling scaling challenges which require ongoing expertise and attention. Hiring a team to handle this will be critical particularly as you scale. High salaries and benefits are needed to avoid costly mistakes; this can easily cost anywhere from $5,000 to $26,000+/month depending on the size of your team.
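
To make the arithmetic concrete, here is a minimal sketch of how a team might total the monthly cost of self-hosting. The staffing bounds reuse the range quoted above; the infrastructure and downtime figures, and the `MonthlyCost` class itself, are hypothetical placeholders rather than measured values.

```python
from dataclasses import dataclass


@dataclass
class MonthlyCost:
    """Rough monthly cost of self-hosting, in US dollars."""

    staffing: float        # salaries and benefits for the people running the platform
    infrastructure: float  # hypothetical cloud bill for compute, storage, networking
    downtime: float        # hypothetical cost of lost productivity during outages

    def total(self) -> float:
        return self.staffing + self.infrastructure + self.downtime


# Staffing bounds come from the range quoted in the text; the other figures
# are placeholders chosen only to make the arithmetic visible.
low = MonthlyCost(staffing=5_000, infrastructure=500, downtime=0)
high = MonthlyCost(staffing=26_000, infrastructure=4_000, downtime=2_000)

for label, cost in (("low", low), ("high", high)):
    print(f"{label}: ${cost.total():,.0f}/month, ${cost.total() * 12:,.0f}/year")
```

Swapping in your own numbers for each field is the point of the exercise: the "free" tool is only one line item among several.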

In addition to the cost of salaries, let’s look at other possible hidden costs that come with using open-source tools.

The time it takes to configure, customize, and maintain a complex open-source solution is often underestimated. It’s not until your team is deep in the weeds (resolving issues, figuring out integrations, and troubleshooting configurations) that the actual costs start to surface. With each passing day your ROI is threatened. You want to start gathering insights from your data as soon as possible. Datacoves helped Johnson & Johnson set up their data stack in weeks, and when issues arise, you will need expertise to accelerate the time to resolution.

And then there’s the learning curve. Not all engineers on your team will be seniors, and turnover is inevitable. New hires will need time to get up to speed before they can contribute effectively. This is the human side of technology: while the tools themselves might move fast, people don’t. That ramp-up period, filled with training and trial-and-error, represents a hidden cost.

Security and compliance add another layer of complexity. With open-source tools, your team is responsible for implementing best practices—like securely managing sensitive credentials with a solution like AWS Secrets Manager. Unlike managed solutions, these features don’t come prepackaged and need to be integrated with the system.
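
As an illustration of the integration work involved, the sketch below reads a JSON secret from AWS Secrets Manager using the boto3 `get_secret_value` call. The function name, the secret identifier, and the assumption that the secret is stored as a JSON string are all hypothetical; a real setup would also need IAM permissions, rotation, and error handling.

```python
import json


def load_warehouse_credentials(secret_id, client=None):
    """Fetch a JSON secret from AWS Secrets Manager and return it as a dict.

    `secret_id` and the JSON layout of the secret are assumptions for this
    sketch. Pass `client` to reuse a session or to substitute a stub in tests.
    """
    if client is None:
        import boto3  # imported lazily so the module loads without boto3 installed

        client = boto3.client("secretsmanager")
    response = client.get_secret_value(SecretId=secret_id)
    return json.loads(response["SecretString"])
```

A call such as `load_warehouse_credentials("prod/warehouse/dbt")`, with a hypothetical secret name, would then hand credentials to dbt or Airflow at runtime instead of storing them in the repository.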

Compliance is no different. Ensuring your solution meets enterprise governance requirements takes time, research, and careful implementation. It’s a process of iteration and refinement, and every hour spent here is another hidden cost. Done incorrectly, it also puts security at risk.

Scaling open-source tools is where things often get complicated. Beyond everything already mentioned, your team will need to ensure the solution can handle growth. For many organizations, this means deploying on Kubernetes. But with Kubernetes comes a steep learning curve and operational challenges. Making sure you always have a knowledgeable engineer available to handle unexpected issues and downtime can become a challenge. Extended downtime is another hidden cost, since business users who have come to rely on your insights are directly impacted.

A managed solution for Airflow and dbt can solve many of the problems that come with building your own solution from open-source tools, offering benefits such as hassle-free maintenance, an improved UI/UX, and integrated functionality. Let’s take a look at the pros.

Using a solution like MWAA, teams can leverage managed Airflow and avoid most infrastructure worries. However, additional configuration and development will still be needed to use it with dbt and to troubleshoot infrastructure issues such as containers running out of memory.

For data teams, the allure of a custom-built solution often lies in its promise of complete control and customization. However, building this requires significant time, expertise, and ongoing maintenance. Datacoves bridges the gap between custom-built flexibility and the simplicity of managed services, offering the best of both worlds.

With Datacoves, teams can leverage managed Airflow and pre-configured dbt environments to eliminate the operational burden of infrastructure setup and maintenance. This allows data teams to focus on what truly matters—delivering insights and driving business decisions—without being bogged down by tool management.

Unlike other managed solutions for dbt or Airflow, which often compromise on flexibility for the sake of simplicity, Datacoves retains the adaptability that custom builds are known for. By combining this flexibility with the ease and efficiency of managed services, Datacoves empowers teams to accelerate their analytics workflows while ensuring scalability and control.

Datacoves doesn’t just run the open-source solutions. Through real-world implementations, the platform has been molded to handle enterprise complexity while simplifying project onboarding. With Datacoves, teams don’t have to compromise on features like Datacoves-Mesh (aka dbt-mesh), column-level lineage, GenAI, Semantic Layer, etc. Best of all, the company’s goal is to make you successful and remove hosting complexity without introducing vendor lock-in. What Datacoves does, you can do yourself if given enough time, experience, and money. Finally, for security-conscious organizations, Datacoves is the only solution on the market that can be deployed in your private cloud with white-glove enterprise support.

Datacoves isn’t just a platform—it’s a partnership designed to help your data team unlock their potential. With infrastructure taken care of, your team can focus on what they do best: generating actionable insights and maximizing your ROI.

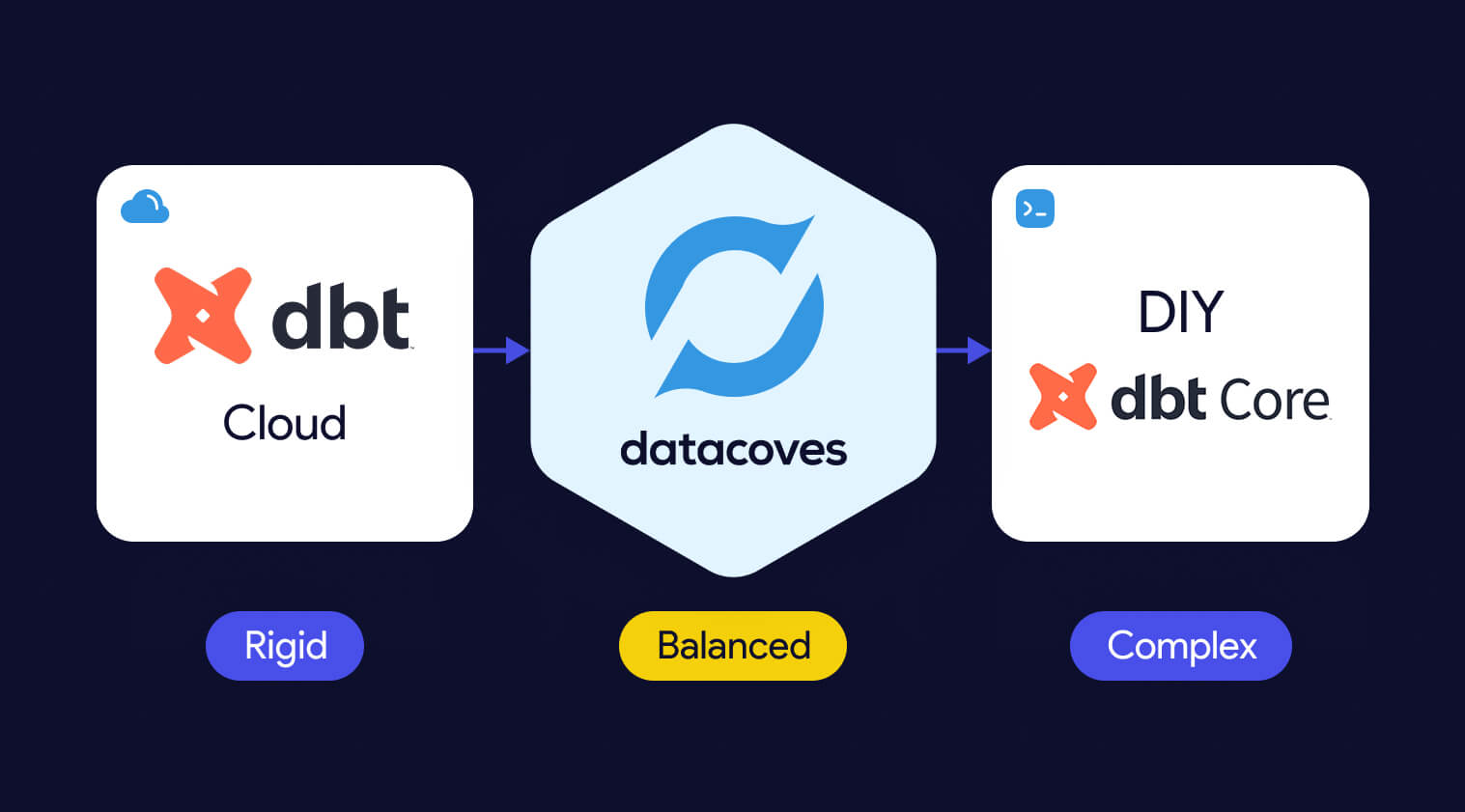

The build vs. buy debate has long been a challenge for data teams, with building offering flexibility at the cost of complexity, and buying sacrificing flexibility for simplicity. As discussed earlier in the article, solutions like dbt and Airflow are powerful, but managing them in-house requires significant time, resources, and expertise. On the other hand, managed offerings like dbt Cloud and MWAA simplify operations but often limit customization and control.

Datacoves bridges this gap, providing a managed platform that delivers the flexibility and control of a custom build without the operational headaches. By eliminating the need to manage infrastructure, scaling, and security, Datacoves enables data teams to focus on what matters most: delivering actionable insights and driving business outcomes.

As highlighted in Fundamentals of Data Engineering, data teams should prioritize extracting value from data rather than managing the tools that support them. Datacoves embodies this principle, making the argument to build obsolete. Why spend weeks—or even months—building when you can have the customization and adaptability of a build with the ease of a buy? Datacoves is not just a solution; it’s a rethinking of how modern data teams operate, helping you achieve your goals faster, with fewer trade-offs.

The top dbt alternatives include Datacoves, SQLMesh, Bruin Data, Dataform, and visual ETL tools such as Alteryx, Matillion, and Informatica. Code-first engines offer stronger rigor, testing, and CI/CD, while GUI platforms emphasize ease of use and rapid prototyping. Teams choose these alternatives when they need more security, governance, or flexibility than dbt Core or dbt Cloud provide.

Teams explore dbt alternatives when they need stronger governance, private deployments, or support for Python and code-first workflows that go beyond SQL. Many also prefer GUI-based ETL tools for faster onboarding. Recent market consolidation, including Fivetran acquiring SQLMesh and merging with dbt Labs, has increased concerns about vendor lock-in, which makes tool neutrality and platform flexibility more important than ever.

Teams look for dbt alternatives when they need stronger orchestration, consistent development environments, Python support, or private cloud deployment options that dbt Cloud does not provide.

Organizations evaluating dbt alternatives typically compare tools across three categories. Each category reflects a different approach to data transformation, development preferences, and organizational maturity.

Organizations consider alternatives to dbt Cloud when they need more flexibility, stronger security, or support for development workflows that extend beyond dbt. Teams comparing platform options often begin by evaluating the differences between dbt Cloud vs dbt Core.

Running enterprise-scale ELT pipelines often requires a full orchestration layer, consistent development environments, and private deployment options that dbt Cloud does not provide. Costs can also increase at scale (see our breakdown of dbt pricing considerations), and some organizations prefer to avoid features that are not open source to reduce long-term vendor lock-in.

This category includes platforms that deliver the benefits of dbt Cloud while providing more control, extensibility, and alignment with enterprise data platform requirements.

Datacoves provides a secure, flexible platform that supports dbt, SQLMesh, and Bruin in a unified environment with private cloud or VPC deployment.

Datacoves is an enterprise data platform that serves as a secure, flexible alternative to dbt Cloud. It supports dbt Core, SQLMesh, and Bruin inside a unified development and orchestration environment, and it can be deployed in your private cloud or VPC for full control over data access and governance.

Benefits

Flexibility and Customization:

Datacoves provides a customizable in-browser VS Code IDE, Git workflows, and support for Python libraries and VS Code extensions. Teams can choose the transformation engine that fits their needs without being locked into a single vendor.

Handling Enterprise Complexity:

Datacoves includes managed Airflow for end-to-end orchestration, making it easy to run dbt and Airflow together without maintaining your own infrastructure. It standardizes development environments, manages secrets, and supports multi-team and multi-project workflows without platform drift.

Cost Efficiency:

Datacoves reduces operational overhead by eliminating the need to maintain separate systems for orchestration, environments, CI, logging, and deployment. Its pricing model is predictable and designed for enterprise scalability.

Data Security and Compliance:

Datacoves can be deployed fully inside your VPC or private cloud. This gives organizations complete control over identity, access, logging, network boundaries, and compliance with industry and internal standards.

Reduced Vendor Lock-In:

Datacoves supports dbt, SQLMesh, and Bruin Data, giving teams long-term optionality. This avoids being locked into a single transformation engine or vendor ecosystem.

Running dbt Core yourself is a flexible option that gives teams full control over how dbt executes. It is also the most resource-intensive approach. Teams choosing DIY dbt Core must manage orchestration, scheduling, CI, secrets, environment consistency, and long-term platform maintenance on their own.

Benefits

Full Control:

Teams can configure dbt Core exactly as they want and integrate it with internal tools or custom workflows.

Cost Flexibility:

There are no dbt Cloud platform fees, but total cost of ownership often increases as the system grows.

Considerations

High Maintenance Overhead:

Teams must maintain Airflow or another orchestrator, build CI pipelines, manage secrets, and keep development environments consistent across users.

Requires Platform Engineering Skills:

DIY dbt Core works best for teams with strong Kubernetes, CI, Python, and DevOps expertise. Without this expertise, the environment becomes fragile over time.

Slow to Scale:

As more engineers join the team, keeping dbt environments aligned becomes challenging. Onboarding, upgrades, and platform drift create operational friction.

Security and Compliance Responsibility:

Identity, permissions, logging, and network controls must be designed and maintained internally, which can be significant for regulated organizations.
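
One small piece of the environment-consistency work described above can be sketched as a drift check that compares installed package versions against a shared set of pins. The helper names and the pinned versions below are hypothetical; a real team would more likely read pins from a lock file and run the check in CI.

```python
from importlib import metadata


def installed_versions(packages):
    """Return {package: installed version or None} for the current environment."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


def find_drift(pinned, installed):
    """Return {package: (expected, actual)} for every package that differs."""
    return {
        name: (expected, installed.get(name))
        for name, expected in pinned.items()
        if installed.get(name) != expected
    }


# Hypothetical pins; a real team would read these from a shared lock file.
PINNED = {"dbt-core": "1.8.0", "dbt-snowflake": "1.8.0"}

if __name__ == "__main__":
    drift = find_drift(PINNED, installed_versions(PINNED))
    for name, (expected, actual) in drift.items():
        print(f"{name}: expected {expected}, found {actual or 'not installed'}")
```

Even this simple check has to be distributed, scheduled, and acted on for every developer machine and worker image, which is where the maintenance overhead accumulates.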

Teams that prefer code-first tools often look for dbt alternatives that provide strong SQL modeling, Python support, and seamless integration with CI/CD workflows and automated testing. These are part of a broader set of data transformation tools. Code-based ETL tools give developers greater control over transformations, environments, and orchestration patterns than GUI platforms. Below are four code-first contenders that organizations should evaluate.

Code-first dbt alternatives like SQLMesh, Bruin Data, and Dataform provide stronger CI/CD integration, automated testing, and more control over complex transformation workflows.

SQLMesh is an open-source framework for SQL and Python-based data transformations. It provides strong visibility into how changes impact downstream models and uses virtual data environments to preview changes before they reach production.

Benefits

Efficient Development Environments:

Virtual environments reduce unnecessary recomputation and speed up iteration.

Considerations

Part of the Fivetran Ecosystem:

SQLMesh was acquired by Fivetran, which may influence its future roadmap and level of independence.

Dataform is a SQL-based transformation framework built specifically for BigQuery. It enables teams to create table definitions, manage dependencies, document models, and configure data quality tests inside the Google Cloud ecosystem. It also provides version control and integrates with GitHub and GitLab.

Benefits

Centralized BigQuery Development:

Dataform keeps all modeling and testing within BigQuery, reducing context switching and making it easier for teams to collaborate using familiar SQL workflows.

Considerations

Focused Only on the GCP Ecosystem:

Because Dataform is geared toward BigQuery, it may not be suitable for organizations that use multiple cloud data warehouses.

AWS Glue is a serverless data integration service that supports Python-based ETL and transformation workflows. It works well for organizations operating primarily in AWS and provides native integration with services like S3, Lambda, and Athena.

Benefits

Python-First ETL in AWS:

Glue supports Python scripts and PySpark jobs, making it a good fit for engineering teams already invested in the AWS ecosystem.

Considerations

Requires Engineering Expertise:

Glue can be complex to configure and maintain, and its Python-centric approach may not be ideal for SQL-first analytics teams.

Bruin is a modern SQL-based data modeling framework designed to simplify development, testing, and environment-aware deployments. It offers a familiar SQL developer experience while adding guardrails and automation to help teams manage complex transformation logic.

Benefits

Modern SQL Modeling Experience:

Bruin provides a clean SQL-first workflow with strong dependency management and testing.

Considerations

Growing Ecosystem:

Bruin is newer than dbt and has a smaller community and fewer third-party integrations.

While code-based transformation tools provide the most flexibility and long-term maintainability, some organizations prefer graphical user interface (GUI) tools. These platforms use visual, drag-and-drop components to build data integration and transformation workflows. Many of these platforms fall into the broader category of no-code ETL tools. GUI tools can accelerate onboarding for teams less comfortable with code editors and may simplify development in the short term. Below are several GUI-based options that organizations often consider as dbt alternatives.

GUI-based dbt alternatives such as Matillion, Informatica, and Alteryx use drag-and-drop interfaces that simplify development and accelerate onboarding for mixed-skill teams.

Matillion is a cloud-based data integration platform that enables teams to design ETL and transformation workflows through a visual, drag-and-drop interface. It is built for ease of use and supports major cloud data warehouses such as Amazon Redshift, Google BigQuery, and Snowflake.

Benefits

User-Friendly Visual Development:

Matillion simplifies pipeline building with a graphical interface, making it accessible for users who prefer low-code or no-code tooling.

Considerations

Limited Flexibility for Complex SQL Modeling:

Matillion’s visual approach can become restrictive for advanced transformation logic or engineering workflows that require version control and modular SQL development.

Informatica is an enterprise data integration platform with extensive ETL capabilities, hundreds of connectors, data quality tooling, metadata-driven workflows, and advanced security features. It is built for large and diverse data environments.

Benefits

Enterprise-Scale Data Management:

Informatica supports complex data integration, governance, and quality requirements, making it suitable for organizations with large data volumes and strict compliance needs.

Considerations

High Complexity and Cost:

Informatica’s power comes with a steep learning curve, and its licensing and operational costs can be significant compared to lighter-weight transformation tools.

Alteryx is a visual analytics and data preparation platform that combines data blending, predictive modeling, and spatial analysis in a single GUI-based environment. It is designed for analysts who want to build workflows without writing code and can be deployed on-premises or in the cloud.

Benefits

Powerful GUI Analytics Capabilities:

Alteryx allows users to prepare data, perform advanced analytics, and generate insights in one tool, enabling teams without strong coding skills to automate complex workflows.

Considerations

High Cost and Limited SQL Modeling Flexibility:

Alteryx is one of the more expensive platforms in this category and is less suited for SQL-first transformation teams who need modular modeling and version control.

Azure Data Factory (ADF) is a fully managed, serverless data integration service that provides a visual interface for building ETL and ELT pipelines. It integrates natively with Azure storage, compute, and analytics services, allowing teams to orchestrate and monitor pipelines without writing code.

Benefits

Strong Integration for Microsoft-Centric Teams:

ADF connects seamlessly with other Azure services and supports a pay-as-you-go model, making it ideal for organizations already invested in the Microsoft ecosystem.

Considerations

Limited Transformation Flexibility:

ADF excels at data movement and orchestration but offers limited capabilities for complex SQL modeling, making it less suitable as a primary transformation engine.

Talend provides an end-to-end data management platform with support for batch and real-time data integration, data quality, governance, and metadata management. Talend Data Fabric combines these capabilities into a single low-code environment that can run in cloud, hybrid, or on-premises deployments.

Benefits

Comprehensive Data Quality and Governance:

Talend includes built-in tools for data cleansing, validation, and stewardship, helping organizations improve the reliability of their data assets.

Considerations

Broad Platform, Higher Operational Complexity:

Talend’s wide feature set can introduce complexity, and teams may need dedicated expertise to manage the platform effectively.

SQL Server Integration Services is part of the Microsoft SQL Server ecosystem and provides data integration and transformation workflows. It supports extracting, transforming, and loading data from a wide range of sources, and offers graphical tools and wizards for designing ETL pipelines.

Benefits

Strong Fit for SQL Server-Centric Teams:

SSIS integrates deeply with SQL Server and other Microsoft products, making it a natural choice for organizations with a Microsoft-first architecture.

Considerations

Not Designed for Modern Cloud Data Warehouses:

SSIS is optimized for on-premises SQL Server environments and is less suitable for cloud-native architectures or modern ELT workflows.

Recent consolidation, including Fivetran acquiring SQLMesh and merging with dbt Labs, has increased concerns about vendor lock-in and pushed organizations to evaluate more flexible transformation platforms.

Organizations explore dbt alternatives when dbt no longer meets their architectural, security, or workflow needs. As teams scale, they often require stronger orchestration, consistent development environments, mixed SQL and Python workflows, and private deployment options that dbt Cloud does not provide.

Some teams prefer code-first engines for deeper CI/CD integration, automated testing, and strong guardrails across developers. Others choose GUI-based tools for faster onboarding or broader integration capabilities. Recent market consolidation, including Fivetran acquiring SQLMesh and merging with dbt Labs, has also increased concerns about vendor lock-in.

These factors lead many organizations to evaluate tools that better align with their governance requirements, engineering preferences, and long-term strategy.

DIY dbt Core offers full control but requires significant engineering work to manage orchestration, CI/CD, security, and long-term platform maintenance.

Running dbt Core yourself can seem attractive because it offers full control and avoids platform subscription costs. However, building a stable, secure, and scalable dbt environment requires significantly more than executing dbt build on a server. It involves managing orchestration, CI/CD, and ensuring development environment consistency along with long-term platform maintenance, all of which require mature DataOps practices.
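
For a sense of scale, the sketch below is roughly what "executing dbt build on a server" amounts to: a thin wrapper that assembles the command and retries on failure. The function names, the `prod` target, and the retry policy are hypothetical; the `--target`, `--select`, and `--project-dir` flags are standard dbt CLI options.

```python
import subprocess


def dbt_build_command(target, select=None, project_dir="."):
    """Assemble a `dbt build` invocation as an argument list."""
    command = ["dbt", "build", "--target", target, "--project-dir", project_dir]
    if select:
        command += ["--select", select]
    return command


def run_dbt_build(target, select=None, project_dir=".", retries=1):
    """Run `dbt build`, retrying on a non-zero exit code.

    Returns the final exit code. Everything a platform adds on top
    (scheduling, secrets, alerting, CI) is deliberately absent here.
    """
    command = dbt_build_command(target, select, project_dir)
    for attempt in range(1, retries + 2):
        result = subprocess.run(command)
        if result.returncode == 0:
            break
        print(f"attempt {attempt} failed with exit code {result.returncode}")
    return result.returncode
```

Calling `run_dbt_build("prod", select="tag:daily")` would cover the happy path. Scheduling, credentials, environment parity, notifications, and auditability all remain to be built around it.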

The true question for most organizations is not whether they can run dbt Core themselves, but whether it is the best use of engineering time. This is essentially a question of whether to build vs buy your data platform. DIY dbt platforms often start simple and gradually accumulate technical debt as teams grow, pipelines expand, and governance requirements increase.

For many organizations, DIY works in the early stages but becomes difficult to sustain as the platform matures.

The right dbt alternative depends on your team’s skills, governance requirements, pipeline complexity, and long-term data platform strategy.

Selecting the right dbt alternative depends on your team’s skills, security requirements, and long-term data platform strategy. Each category of tools solves different problems, so it is important to evaluate your priorities before committing to a solution.

If these are priorities, a platform with secure deployment options or multi-engine support may be a better fit than dbt Cloud.

Recent consolidation in the ecosystem has raised concerns about vendor dependency. Organizations that want long-term flexibility often look for:

Consider platform fees, engineering maintenance, onboarding time, and the cost of additional supporting tools such as orchestrators, IDEs, and environment management.

dbt remains a strong choice for SQL-based transformations, but it is not the only option. As organizations scale, they often need stronger orchestration, consistent development environments, Python support, and private deployment capabilities that dbt Cloud or DIY dbt Core may not provide. Evaluating alternatives helps ensure that your transformation layer aligns with your long-term platform and governance strategy.

Code-first tools like SQLMesh, Bruin Data, and Dataform offer strong engineering workflows, while GUI-based tools such as Matillion, Informatica, and Alteryx support faster onboarding for mixed-skill teams. The right choice depends on the complexity of your pipelines, your team’s technical profile, and the level of security and control your organization requires.

Datacoves provides a flexible, secure alternative that supports dbt, SQLMesh, and Bruin in a unified environment. With private cloud or VPC deployment, managed Airflow, and a standardized development experience, Datacoves helps teams avoid vendor lock-in while gaining an enterprise-ready platform for analytics engineering.

Selecting the right dbt alternative is ultimately about aligning your transformation approach with your data architecture, governance needs, and long-term strategy. Taking the time to assess these factors will help ensure your platform remains scalable, secure, and flexible for your future needs.

There is no doubt about the transformative potential of big data and analytics. This is particularly true for the Life Science sector, where implementing new technologies and ideologies can revolutionize drug development, tailor medicines to individual needs, dramatically improve patient care, and more. The data supports this: the longest-running report on Fortune 1000 CIOs, by Wavestone, shows that 87.9% of companies believe “investments in Data & Analytics are a Top Organizational Priority.”

Such great promise and high buy-in should mean easy cultural adoption, right? Well, not exactly. The 2024 report shows a notable improvement: the percentage of top executives facing significant challenges in culture, people, and process/organization decreased from a staggering 90% in 2020 to 77.6% in 2024. While this reduction signifies a positive trend, the fact that more than three-quarters of leaders still encounter these problems underscores how widespread the challenges are and highlights the need for continued, focused efforts to overcome them.

In an effort to help further lower the 77.6%, this article aims to cover the benefits of data in the Life Science sector, highlight common culture issues, and provide some solutions to the problem. If your organization is among those facing these struggles, know that you are not alone.

The life science sector stands on the brink of a digital transformation, powered by the strategic use of data. It's clear that its impact is far-reaching, transforming every facet from research and development to patient care and beyond. Below are some examples of what this data-driven culture can improve.

As we identified earlier in this article, culture, people, and process/organization challenges are the biggest obstacles for companies to achieve digital transformation and become data-driven. Identifying specific challenges is key to developing a solution.

Pharma companies tend to be risk averse due to the nature of the data they are responsible for. This leads to limitations and constraints on innovative solutions, which are often accepted without anyone understanding or challenging the rationale behind them. New technology offers ways to manage data that were not available in the past. A company that insists on remaining the most risk averse and the most conservative will stifle innovation. The key takeaway is that it is not only about technology but about the new process potential that this technology provides.

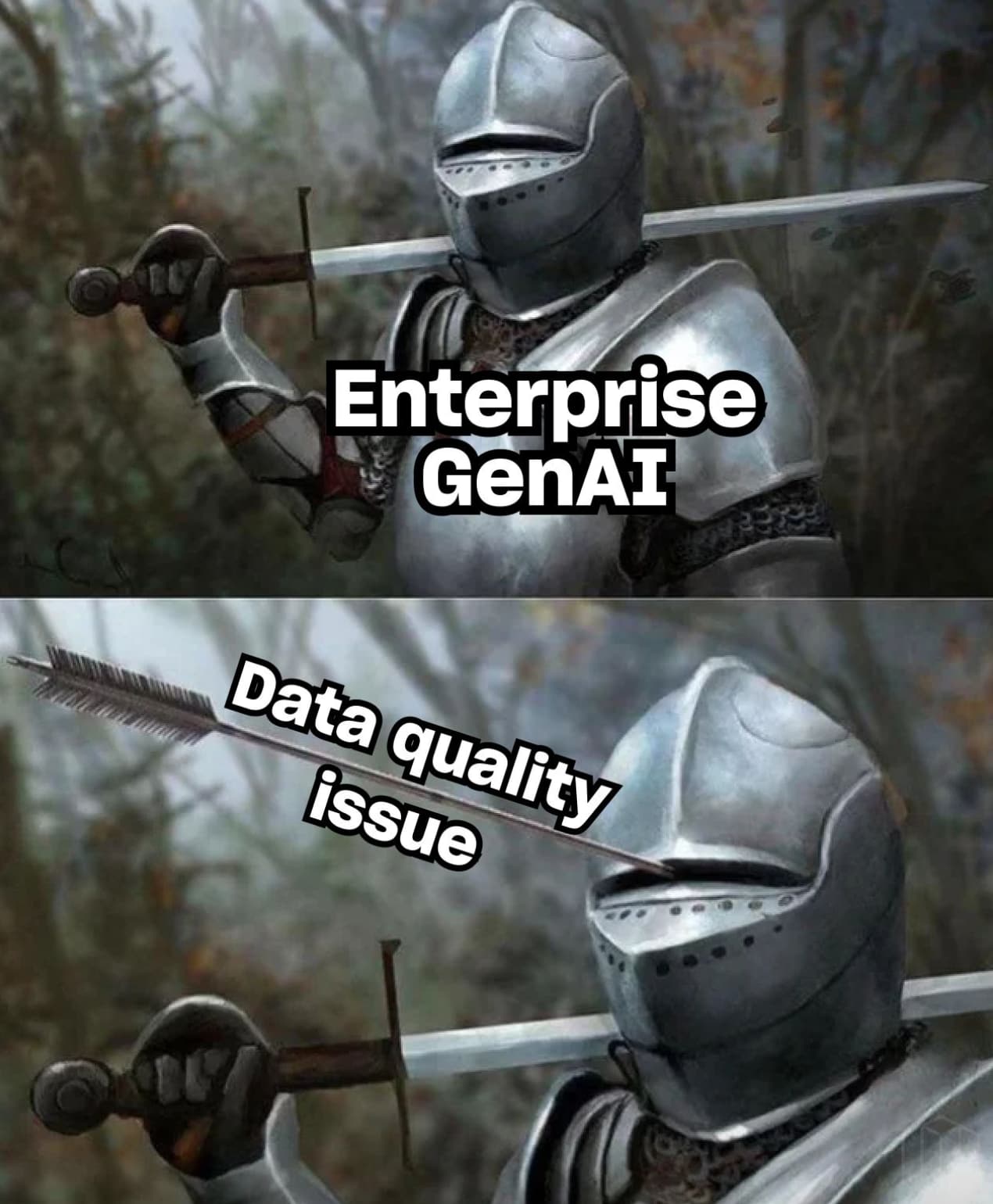

This goes back to the foundation. Technology changes, initiatives change, but data truths do not. These truths involve thinking about end-to-end processes, data quality, documentation, good guidelines and conventions, data governance, reducing points of failure, etc. It is easy to get swept up by the latest trend and want to jump in, but you cannot build a house on quicksand; new trends like Gen AI still require these things underneath.

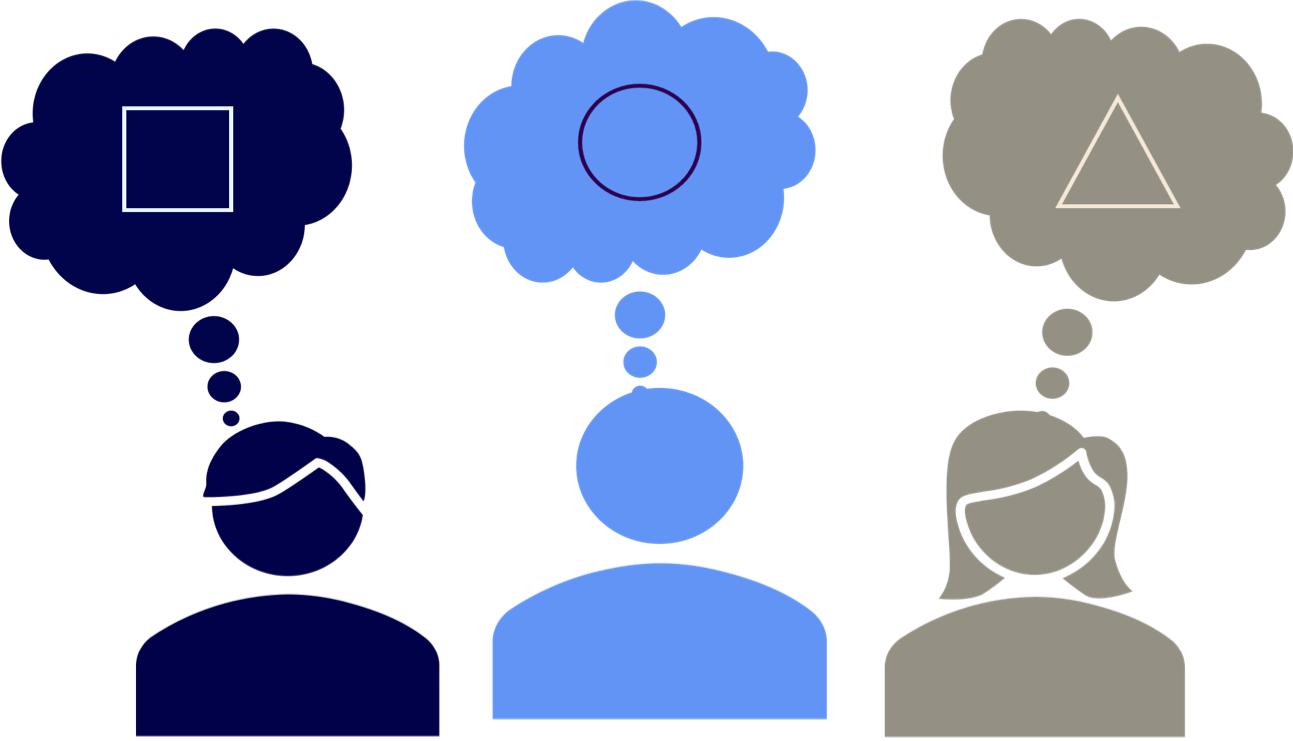

This starts with aligning the team. Alignment is one of the 3 core pillars to a data-driven culture. People will start out thinking they are on the same page because they are using the same terminology. But we all have different ideas influenced by our experiences. So, it is important to gather all stakeholders and go through a true alignment process which includes figuring out the current state, pain points, and aligning on the solution.

Fundamental alignment includes adopting a top-down approach. The efforts one team is making are sure to affect the efforts of another. Leadership must align and connect the dots between all moving parts and understand that things are not living in isolation. This will solve headaches downstream and ensure a smoother process.

Alignment alone won’t be enough to combat culture challenges. True alignment can lead to overly ambitious projects that may prove difficult to execute. This is the classic trap of trying to do too much at once. It is important to start with manageable, small-scale projects that can provide immediate benefits. These quick wins are vital for positively influencing the organizational culture. Additionally, they help maintain momentum: if a larger initiative is slow to yield results, these smaller successes ensure continuous progress. By allowing for ongoing adjustments and reprioritizations, these projects help prevent initiatives from being abandoned.

While the potential of big data and analytics in transforming the life sciences sector is undeniable, and investment in data is on the rise, the journey towards a data-driven culture remains one of the biggest challenges for data integration. The path forward requires a balanced approach that combines technology with fundamental changes in culture and processes. By embracing these changes, the life sciences sector can overcome existing barriers and fully harness the power of data to advance medical science and improve patient outcomes. At Datacoves we are passionate about helping companies achieve a data-driven culture. See how Datacoves helped Johnson & Johnson innovate their tech stack.

This article was inspired by RAN BioLinks’ podcast episode “Why Life Science Organizations Fail to Implement Effective Data Strategies”. The detailed conversation with Noel Gomez, a seasoned expert in data management within the life science industry, explores the critical challenges and innovative solutions for effective data strategies in healthcare.

Companies are investing heavily to become data-driven and to democratize data access. However, many are not achieving the transformative outcomes they expected.

The core issue? A lack of trust.

This mistrust stems from a lack of focus on the core aspects that ensure a robust data-driven culture, and from critical mistakes made in these areas.

Fortunately, these mistakes are self-inflicted which means they can be fixed, and this article aims to help highlight and address these pitfalls. By understanding and adhering to the core pillars of a data-driven culture and avoiding the common mistakes, organizations can develop and maintain a data-driven culture that people can trust.

It is no secret that there is power and opportunity in data, and data-driven culture is the approach which aims to take advantage of that.

A data-driven culture is not about hastily adopting the latest tools or technologies in the hope of resolving data challenges. This common mistake often leads to a focus on immediate results or 'shiny objects', such as acquiring cutting-edge technology or hiring new talent. Unfortunately, this approach tends to overlook essential priorities and gradually erodes the foundation of a data-driven culture: Trust in the data.

Many companies struggle with effectively using analytics because they overemphasize these immediate goals – the 'destination' – rather than appreciating the foundational journey necessary for impactful analytics. This journey involves more than just technology; it requires a shift in mindset and approach.

Data-driven culture represents an organizational approach where data is the cornerstone of decision-making processes. In such a culture, decisions are primarily informed by data analysis, rather than relying exclusively on intuition or past experiences. This approach involves strategically employing data at every level of the organization. It fosters an environment where data is not just an asset but the main driver of strategy, innovation, and operational choices. By harnessing the power and opportunities offered by data, a data-driven culture ensures that decisions across the organization are grounded in solid evidence and analytical insight, enhancing the overall decision-making quality and efficacy.

Empowered Decision Making: Decisions are based on data analysis, leading to objective and impactful outcomes.

Accessibility of Data: Data is accessible across the organization, breaking down silos and empowering all employees.

Investment in Technology: Adequate tools and technologies are provided for effective data collection and analysis.

Data Literacy: Continuous training is provided to enhance the workforce's understanding and use of data.

Quality and Governance: High standards of data accuracy and security are maintained.

Agility: The organization adapts quickly to insights derived from data.

Collaborative Integration: Data insights are shared and integrated across various functions.

Outcome-Focused: Emphasis on measurable results driven by data insights.

All of that sounds great, but how do we achieve a data-driven culture?

As mentioned earlier in the article, true success in analytics comes not from merely chasing new tools or methodologies but from establishing three core pillars as part of a data-driven culture: fundamental alignment, user-focused solutions, and efficient data management.

By refocusing on these foundational elements, businesses can drive more meaningful and sustainable results from their analytic endeavors, leading to overall project success and satisfaction.

Let's dive deeper into the core pillars and examine the common pitfalls within each pillar that I have observed lead to challenges.

Fundamental alignment is about synchronizing analytics strategies with the organization's core business objectives. This ensures everyone involved, from executives to frontline employees, shares a common vision and understanding of what analytics aims to achieve. This alignment is crucial for creating a unified direction in data-driven initiatives and ensuring that every analytics effort contributes meaningfully to the overall business strategy.

This sounds great, right? So much so that every project I've participated in began with high hopes and enthusiasm. Initially, there was a sense of unity: funding secured, partnerships with vendors established, and the latest technology acquired. This honeymoon phase of the data-driven transformation, filled with optimism, had everyone working diligently, with management receiving regular updates and a general belief that we were on the right track.

The real test emerged when critical decisions were required. This was the point where the honeymoon phase often faded, revealing a lack of true alignment. Meetings became prolonged discussions where the team struggled to reach consensus. This challenge stemmed from either not spending enough time initially to ensure everyone was on the same page or not conducting a discovery phase at the start of the project.

Although we agreed on high-level objectives like digital transformation and self-service analytics, there was a misalignment in our deeper understanding and perspectives. We were each influenced by our varied backgrounds and expertise in different aspects of the project.

This led me to a crucial fact: the importance of alignment before action. In projects where we dedicated time upfront for structured alignment and thorough product discovery, we not only achieved better estimations but also greater overall satisfaction. It became evident that successful analytics projects require a deep understanding of business objectives, the current state, potential risks, and a clear prioritization of features. This was because we developed a clear understanding and set up expectations that people could rely on throughout the course of implementation.

Crucially, alignment also involves clarity on what the project will not address, alongside the criteria for prioritization such as quality, completeness of features, and usability. Embracing agility does not mean forgoing thorough planning.

Ultimately, building trust in any project begins with listening, creating a shared vision, and setting the right expectations from the start. A well-defined and achievable plan, understood and agreed upon by all, is the foundation of success.

The end goal of analytics should be to serve the user's needs and involve designing practical solutions that add real value and enhance decision-making processes. This means creating analytics tools and processes that are intuitively aligned with how users work and make decisions, ensuring that these tools are not just technically proficient but also practically useful.

There are two pitfalls to avoid.

1. Trying to please everyone often leads to pleasing no one.

This is a common scenario in many companies where technical teams strive to meet all demands. Despite their efforts to deliver on projects, dissatisfaction with the end results is frequent.

2. Not addressing the actual user pain points.

This happens when the user does not actually get a good working solution out of the process.

During discovery it is important to discuss what is in scope, out of scope, essential, and nice to have. By categorizing this way you can better understand the needs of the group and use it to guide the process. With this process done, you can move forward with confidence that you are addressing the most important pain points.

Now that we have defined the pain points, the next step is to fully understand them. The key is to understand not only the users' needs but also the reasons behind them. What are the goals they're trying to achieve? What are the shortcomings of their current methods? Is the new process or tool genuinely an improvement over what they currently have? For example, if users need to navigate a tool quickly, finding ways to reduce unnecessary clicks and simplify access becomes important. Sometimes, it's more practical to keep an existing process unchanged until other parts are enhanced. By bringing the most critical information to the forefront, the solution becomes more user-centric.

It is important to have these needs in mind at the beginning of the project and strive to truly understand. If not, you risk investing time, money, and resources in a tool that users don't need, and this can have a detrimental effect on the overall culture.

More importantly, this approach allows you to justify your decisions and explain why certain aspects are prioritized over others. When users see that their needs and challenges are understood and addressed, they are more likely to trust and accept the solutions provided. This trust is built through consistently demonstrating that their best interests are at heart.

Efficient data management involves implementing robust processes to ensure data accuracy, accessibility, and understandability. This pillar is key to informed decision-making as it underpins the reliability of data-driven insights. Effective data management includes organizing, storing, and safeguarding data to make it readily available and useful for users across the organization.

Let's face it, your data processes get no love. This is usually because they are "too technical." Users often do not concern themselves with databases, schemas, tables, or columns, let alone the process that turns raw facts into business-ready insights. It is easy for management to get excited about a fancy dashboard and the potential of Machine Learning and Gen AI, but when it comes to the actual data, interest tends to wane.

It makes sense; most people don't understand how the power grid works. We take it for granted that we flip a switch and expect the lights to turn on. We move on without a second thought. No one really cares about electricity until something goes wrong. Similarly, in many organizations, data issues often go unnoticed until a failure occurs. Sometimes these issues are immediately apparent, but other times they are silent. When a failure does happen, there is a scramble to fix it. Meetings are held, issues are identified, and patches are implemented to "prevent" future failures. However, the best time to think about potential problems isn't after they happen, but before — building systems that anticipate and are designed for resilience.

The real issue is that the process from raw data to insights isn't often viewed as a single system. It is all interconnected and should be treated as such. In the world of analytics, it sometimes feels like companies are trying to build a mansion on a foundation of quicksand. Initially, everything seems fine, and everyone is busy with their tasks, but when the foundation starts to give way, the focus shifts to propping up the weak points. You can't effectively build on quicksand; you need solid, repeatable processes from the start.

The focus should be on building systems that anticipate challenges and are designed for resilience. This involves integrating data management practices into the company's culture from the start, ensuring users trust the data and the processes that generate insights. If you want effective collaboration and impact analysis, these are difficult to retrofit later — they need to be part of the initial plan. Documented analytics isn't a magical solution; it needs to be ingrained in the culture and process from the beginning. The good news is that there are many examples and best practices from those who have navigated these challenges successfully.

For users to truly trust in analytics, they need to have faith in the data and the processes that generate it. They need to see and believe in a robust system built on a solid foundation.

To achieve a data-driven culture, companies must refocus on three core pillars (fundamental alignment, user-focused solutions, and efficient data management) and avoid the common mistakes in these areas. Success in analytics isn't about chasing new tools or methodologies but about building a robust system from the ground up, aligning everyone's vision, and creating practical, value-added solutions. Prioritizing foundational elements over immediate shiny objects will lead to more meaningful, sustainable results and will build trust in the analytics process.

As the world of data management continues to grow, terms and new concepts are constantly popping up. It's important for data professionals to stay up to date with terms such as Data Mesh and data observability. For those coming into the field from other areas, it’s also good to understand terminology to communicate more effectively with others.

In this blog post, we've put together an extensive table that breaks down and explains the essential terms in modern data engineering, analytics, and architecture. This resource is designed to help both experienced data professionals and newcomers alike to navigate and understand the ever-evolving language of data.

We've covered basic concepts like data warehouses and ETL pipelines and advanced ideas like Data Mesh. Each of these terms is crucial in shaping today's data ecosystems. Think about how these terms apply to your business and can enhance your understanding. Have we missed any terms that you were hoping to see defined, or do you think we could improve the definitions of some of the terms already defined? Please share your thoughts with us by providing feedback through our contact page.

Interested in modern data solutions? Accelerate your journey to a modern data stack with Datacoves' managed solution, designed to streamline your data processes and implement best practices efficiently. Discover how Datacoves can help you quickly add value and transform your data strategy, ensuring you make the most informed decisions for your specific needs, by scheduling a demo.

dbt is wildly popular and has become a fundamental part of many data stacks. While it’s easy to spin up a project and get things running on a local machine, taking the next step and deploying dbt to production isn’t quite as simple.

In this article we will discuss options for deploying dbt to production, comparing some high, medium, and low effort options so that you can find which works best for your business and team. You might be deploying dbt using one of these patterns already; if you are, hopefully this guide will help highlight some improvements you can make to your existing deployment process.

We're going to assume you know how to run dbt on your own computer (aka your local dbt setup). We’re also going to assume that you either want to or need to run dbt in a “production” environment – a place where other tools and systems make use of the data models that dbt creates in your warehouse.

The deployment process for dbt jobs extends beyond basic scheduling and involves a multifaceted approach. This includes establishing various dbt environments with distinct roles and requirements, ensuring the reliability and scalability of these environments, integrating dbt with other tools in the (EL)T stack, and implementing effective scheduling strategies for dbt tasks. By focusing on these aspects, a comprehensive and robust dbt deployment strategy can be developed. This strategy will not only address current data processing needs but also adapt to future challenges and changes in your data landscape, ensuring long-term success and reliability.

In deploying dbt you have the creation and management of certain dbt environments. The development environment is the initial testing ground for creating and refining dbt models. It allows for experimentation without impacting production data. Following this, the testing environment, including stages like UAT and regression testing, rigorously evaluates the models for accuracy and performance. Finally, the production environment is where these models are executed on actual data, demanding high stability and performance.
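One lightweight way to keep these environments distinct is to map each one to a dbt target. The sketch below is only an illustration: the environment names and the target names (`dev`, `uat`, `prod`) are assumptions and would need to match the targets defined in your own `profiles.yml`. It relies only on the `dbt build` command and its `--target` flag.

```python
# Hypothetical mapping from deployment environment to a dbt target name.
# The target names are assumptions; they must exist in your profiles.yml.
ENVIRONMENT_TARGETS = {
    "development": "dev",
    "testing": "uat",
    "production": "prod",
}


def dbt_build_command(environment):
    """Build the dbt CLI invocation for one environment."""
    try:
        target = ENVIRONMENT_TARGETS[environment]
    except KeyError:
        # Fail loudly rather than silently running against the wrong target.
        raise ValueError(f"unknown environment: {environment!r}") from None
    return ["dbt", "build", "--target", target]


print(dbt_build_command("production"))
```

Rejecting unknown environment names up front is a deliberate choice here: a typo should stop the deployment rather than fall back to a default target.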

Reliability and scalability of data models are also important. Ensuring that the data models produce accurate and consistent results is essential for maintaining trust in your data. As your data grows, the dbt deployment should be capable of scaling, handling increased volumes, and maintaining performance.

Integration with other data tools and systems is another key aspect. A seamless integration of dbt with EL tools, data visualization platforms, and data warehouses ensures efficient data flow and processing, making dbt a harmonious component of your broader data stack.

Effective dbt scheduling goes beyond mere time-based scheduling. It involves context-aware execution, such as triggering jobs based on data availability or other external events. Managing dependencies within your data models is critical to ensure that transformations occur in the correct sequence. Additionally, adapting to varying data loads is necessary to scale resources effectively and maintain the efficiency of dbt job executions.
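To make the dependency point concrete, here is a small sketch of how an execution order can be derived from model dependencies. dbt works this order out for you from the `ref()` calls in your models, so this is not something you would write yourself; the model names below are made up, and the code simply uses Python's standard `graphlib` module to show the idea.

```python
from graphlib import TopologicalSorter

# Hypothetical models; each key maps to the set of models it depends on.
MODEL_DEPENDENCIES = {
    "stg_orders": set(),
    "stg_customers": set(),
    "int_customer_orders": {"stg_orders", "stg_customers"},
    "fct_revenue": {"int_customer_orders"},
}


def execution_order(dependencies):
    """Return model names so that every model follows its upstream models."""
    return list(TopologicalSorter(dependencies).static_order())


order = execution_order(MODEL_DEPENDENCIES)
print(order)
```

The relative order of independent models (the two staging models here) is not fixed, which is also why an orchestrator is free to run them in parallel.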

Each deployment option has its place, and the trade-offs between setup costs and long-term maintainability are important to consider when you’re choosing one versus another.

Cron jobs are scripts that run at a set schedule. They can be defined in any language. For instance, we can use a simple bash script to run dbt. It’s just like running the CLI commands, but instead of you running them by hand, a computer process would do it for you.

Here’s a simple cron script:

In order to run on schedule, you’ll need to add this file to your system’s crontab.

As you can tell, this is a very basic dbt run script; we are doing the bare minimum to run the project. There is no consideration for tagged models, tests, alerting, or more advanced checks.

Even though cron jobs are the most basic way to deploy dbt, there is still a learning curve. It requires some technical skills to set up this deployment. Additionally, because of its simplicity, it is pretty limited. If you are thinking of using crons for multi-step deployments, you might want to look elsewhere.

While it's relatively easy to set up a cron job to run on your laptop, this defeats the purpose of using a cron altogether. Crons will only run when the daemon is running, so unless you plan on never turning off your laptop, you’ll want to set up the cron on an EC2 instance (or another server). Now you have infrastructure to support and added complexity to keep in mind when making changes. Running a cron on an EC2 instance is certainly doable, but likely not the best use of resources. Just because it can be done does not mean it should be done. At this point, you’re better off using a different deployment method.

The biggest downside, however, is that your cron script must handle any edge cases or errors gracefully. If it doesn’t, you might wind up with silent failures – a data engineer’s worst enemy.
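As a rough sketch of what "handling errors gracefully" can look like, the wrapper below runs each step in order, logs what happened, and returns a non-zero exit code as soon as a step fails, so the failure is visible to cron or to whatever is monitoring it. It is written in Python rather than bash, and it assumes `dbt` is on the `PATH` and that the script runs from the dbt project directory. The step list and logger name are illustrative choices, not a recommended standard.

```python
import logging
import subprocess
import sys

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
log = logging.getLogger("dbt_cron")

# Assumed steps: build the models, then test them.
DBT_STEPS = [["dbt", "run"], ["dbt", "test"]]


def run_steps(steps):
    """Run each command in order; stop at the first failure and return its exit code."""
    for command in steps:
        log.info("running: %s", " ".join(command))
        try:
            result = subprocess.run(command, capture_output=True, text=True)
        except FileNotFoundError:
            log.error("command not found: %s", command[0])
            return 127
        if result.returncode != 0:
            # Log whichever stream has content so the cause is not lost.
            details = (result.stderr or result.stdout).strip()
            log.error("step failed (exit %s): %s", result.returncode, details)
            return result.returncode
    return 0


def main():
    """Entry point for the scheduled job; returns the process exit code."""
    return run_steps(DBT_STEPS)
```

A scheduled script would end with `sys.exit(main())` so that the exit code reaches cron. Even then, someone still has to look at the logs or wire up alerting, which is exactly the overhead described above.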

Cron jobs might serve you well if you have some running servers you can use, have a strong handle on the types of problems your dbt runs and cron executions might run into, and you can get away with a simple deployment with limited dbt steps. It is also a solid choice if you are running a small side-project where missed deployments are probably not a big deal.

Use crons for anything more complex, and you might be setting yourself up for future headaches.

Ease of Use / Implementation – You need to know what you’re doing

Required Technical Ability – Medium/ High

Configurability – High, but with the added complexity of managing more complex code

Customization – High, but with a lot of overhead. Best to keep things very simple

Best for End-to-End Deployment - Low.

Cloud Service Runners like dbt Cloud are probably the most obvious way to deploy your dbt project without writing code for those deployments, but they are not perfect.

dbt Cloud is a product from dbt Labs, the creators of dbt. The platform has some out-of-the-box integrations, such as GitHub Actions and webhooks, but anything more will have to be managed by your team. While there is an IDE (Integrated Development Environment) that allows the user to write new dbt models, you are adding a layer of complexity by orchestrating your deployments in another tool. If you are only orchestrating dbt runs, dbt Cloud is a reasonable choice; it's designed for just that.

However, when you want to orchestrate more than just your dbt runs (for instance, kick off multiple Extract / Load (EL) processes or trigger other jobs after dbt completes), you will need to look elsewhere.

dbt Cloud will host your project documentation and provide access to its APIs. But that is the lion’s share of the offering. Unless you spring for the Enterprise Tier, you will not be able to do custom deployments or trigger dbt runs based on incoming data with ease.

Deploying your dbt project with dbt Cloud is straightforward, though. And that is its best feature. All deployment commands use native dbt command line syntax, and you can create various "Jobs" through their UI to run specific models at different cadences.

If you are a data team with data pipelines that are not too complex and you are looking to handle dbt deployments without the need for standing up infrastructure or stringing together advanced deployment logic, then dbt Cloud will work for you. If you are interested in more complex triggers to kick off your dbt runs, such as triggering a run immediately after your data is loaded, there are other options which natively support patterns like that. The most important factor is the complexity of the pieces you need to coordinate, not necessarily the size of your team or organization.

Overall, it is a great choice if you’re okay working within its limitations and support a simple workflow. As soon as you reach any scale, however, the cost may be too high.

Ease of Use / Implementation – Very easy

Required Technical Ability – Low

Configurability – Low / Medium

Customization – Low

Best for End-to-End Deployment - Low

The Modern Data Stack is a composite of tools. Unfortunately, many of those tools are disconnected because they specialize in handling one of the steps in the ELT process. Only after working with them do you realize that there are implicit dependencies between these tools. Tools like Datacoves bridge the gaps between the tools in the Modern Data Stack and enable some more flexible dbt deployment patterns. Additionally, they cover the End-to-End solution, from Extraction to Visualization, meaning they can handle steps before and after Transformation.

If you are loading your data into Snowflake with Fivetran or Airbyte, your dbt runs need to be coordinated with those EL processes. Often, this is done by manually setting the ETL schedule and then defining your dbt run schedule to coincide with your ETL completion. It is not a hard dependency, though. If you’re processing a spike in data or running a historical re-sync, your ETL pipeline might take significantly longer than usual. Your normal dbt run won’t play nicely with this extended ETL process, and you’ll wind up using Snowflake credits for nothing.
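The difference between a schedule that merely coincides with the load and a hard dependency can be sketched in a few lines. In the example below, `is_load_complete` and `run_dbt` are hypothetical callables: the first would wrap whatever status check your EL tool exposes, and the second would invoke dbt. Neither is a real Fivetran, Airbyte, or dbt API; they are placeholders for illustration.

```python
import time


def wait_for_load(is_load_complete, timeout_seconds, poll_seconds=30,
                  sleep=time.sleep, clock=time.monotonic):
    """Poll until the load reports completion; return False if the timeout passes first."""
    deadline = clock() + timeout_seconds
    while True:
        if is_load_complete():
            return True
        if clock() >= deadline:
            return False
        sleep(poll_seconds)


def run_dbt_after_load(is_load_complete, run_dbt, timeout_seconds=3600, **wait_options):
    """Run dbt only once the upstream load has finished; otherwise skip the run."""
    if wait_for_load(is_load_complete, timeout_seconds, **wait_options):
        run_dbt()
        return "ran"
    return "skipped"
```

With this shape, a slow historical re-sync delays or skips the dbt run instead of letting it execute against half-loaded data and burn warehouse credits. Orchestrators and integrated platforms provide this kind of gating for you, typically through sensors or event triggers, so you do not have to maintain a polling loop yourself.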

This is a common issue for companies moving from early stage / MVP data warehouses into more advanced patterns. There are ways to connect your EL processes and dbt deployments with code, but Datacoves makes it much easier. Datacoves will trigger the right dbt job immediately after the load is complete. No need to engineer a solution yourself. The value of the Modern Data Stack is being able to mix and match tools that are fit for purpose.

Meeting established data freshness and quality SLAs is challenging enough, but with Datacoves, you’re able to skip building custom solutions for these problems. Every piece of your data stack is integrated and working together. If you are orchestrating with Airflow, then you’re likely running a Docker container which may or may not have added dependencies. That’s one common challenge teams managing their own instances of Airflow will meet, but with Datacoves, container / image management and synchronization between EL and dbt executions are all handled on the platform. The setup and maintenance of the scalable Kubernetes infrastructure necessary to run Airflow is handled entirely by the Datacoves platform, which gives you flexibility but with a lower learning curve. And, it goes without saying that this works across multiple environments like development, UAT, and production.

With the End-to-End Pipeline in mind, one of the convenient features is that Datacoves provides a singular place to access all the tools within your normal analytics workflow - extraction + load, transformation, orchestration, and security controls are in a single place. The implicit dependencies are now codified; it is clear how a change to your dbt model will flow through to the various pieces downstream.

Datacoves is for teams who want to introduce a mature analytics workflow without the weight of adopting and integrating a new suite of tools on their own. This might mean you are a small team at a young company, or an established analytics team at an enterprise looking to simplify and reduce platform complexity and costs.

There are some prerequisites, though. To make use of Datacoves, you do need to write some code, but you’ll likely already be used to writing configuration files and dbt models that Datacoves expects. You won't be starting from scratch because best practices, accelerators, and expertise are already provided.

Ease of Use / Implementation – You can utilize YAML to generate DAGS for a simpler approach, but you also have the option to use Python DAGS for added flexibility and complexity in your pipelines.

Required Technical Ability – Medium

Configurability – High

Customization – High. Datacoves is modular, allowing you to embed the tools you already use

Best for End-to-End Deployment - High. Datacoves takes into account all of the factors of dbt Deployment

What do you use to deploy your dbt project when you have a large, complex set of models and dependencies? An orchestrator like Airflow is a popular choice, with many companies opting to use managed deployments through services such as Astronomer.

For many companies – especially in the enterprise – this is familiar territory. Adoption of these orchestrators is widespread. The tools are stable, but they are not without some downsides.

These orchestrators require a lot of setup and maintenance. If you’re not using a managed service, you’ll need to deploy the orchestrator yourself, and handle the upkeep of the infrastructure running your orchestrator, not to mention manage the code your orchestrator is executing. It’s no small feat, and a large part of the reason that many large engineering groups have dedicated data engineering and infrastructure teams.

Running your dbt deployment through Airflow or any other orchestrator is the most flexible option you can find, though. The increase in flexibility means more overhead in terms of setting up the systems you need to run and maintain this architecture. You might need to get DevOps involved, you’ll need to move your dbt project into a Docker image and manage the resulting containers, you’ll want an airtight CI/CD process, and you will ultimately need well-defined SLAs. There can be a steep learning curve, especially if you’re unfamiliar with what’s needed to take an Airflow instance to a stable production release.

There are 3 ways to run Airflow, specifically – deploying on your own, using a managed service, or using an integrated platform like Datacoves. When using a managed service or an integrated platform like Datacoves, you need to consider a few factors:

Airflow is a multi-purpose tool. It’s not just for dbt deployments. Many organizations run complex data engineering pipelines with Airflow, and by design, it is flexible. If your use of Airflow extends well beyond dbt deployments or ELT jobs oriented around your data warehouse, you may be better suited for a dedicated managed service.

Similarly, if your organization has numerous teams dedicated to designing, building and maintaining your data infrastructure, you may want to use a dedicated Airflow solution. However, not every organization is able to stand up platform engineering teams or DevOps squads dedicated to the data infrastructure. Regardless of the size of your team, you will need to make sure that your data infrastructure needs do not outmatch your team’s ability to support and maintain that infrastructure.

Every part of the Modern Data Stack relies on other tools performing their jobs; data pipelines, transformations, data models, BI tools - they are all connected. Using Airflow for your dbt deployment adds another link in the dependency chain. Coordinating dbt deployments via Airflow can always be done through writing additional code, but this is an additional overhead you will need to design, implement, and maintain. With this approach, you begin to require strong software engineering and design principles. Your data models are only as useful as your data is fresh; meeting your required SLAs will require significant cross-tool integration and customization.

If you are a small team looking to deploy dbt, there are likely better options. If you are a growing team, there are certainly simpler options with less infrastructure overhead. For Data teams with complex data workflows that combine multiple tools and transformation technologies such as Python, Scala, and dbt, however, Airflow and other orchestrators can be a good choice.

Ease of Use / Implementation – Can be quite challenging starting from scratch

Required Technical Ability – High

Configurability – High

Customization – High, but build time and maintenance costs can be prohibitive

Best for End-to-End Deployment - High, but requires a lot of resources to set up and maintain

The way you should deploy your dbt project depends on a handful of factors – how much time you’re willing to invest up front, your level of technical expertise, as well as how much configuration and customization you need.

Small teams might have high technical acumen but not enough capacity to manage a deployment on their own. Enterprise teams might have enough resources but maintain disparate, interdependent projects for analytics. Thankfully, there are several options to move your project beyond your local machine and into a production environment with ease. And while specific tools like Airflow have their own pros and cons, it’s becoming increasingly important to evaluate your data stack vendor solution holistically. Ultimately, there are many ways to deploy dbt to production, and the decision comes down to spending time building a robust deployment pipeline or spending more time focusing on analytics.

Data transformation tools turn raw data into reliable, analytics-ready datasets, but choosing the right one requires understanding how transformation fits into your full data pipeline. Modern teams do not just transform data. They also orchestrate jobs, enforce quality, manage deployments, and ensure reliability at scale.

This guide explains what data transformation tools actually do, how popular options compare, and how to evaluate them as part of an end-to-end data stack rather than in isolation.

Evaluating data transformation tools requires looking beyond features and understanding how each tool fits into a production data pipeline.

Data transformation is the process of converting data from one format or structure to another. It improves the performance of data processing systems and compliance with data governance regulations.

Data transformation is just one of the steps on the road to deriving value from data.

The end-to-end process includes the following steps:

It’s worth taking each of these steps into consideration when determining the best data transformation tool for your organization.

There is a common misconception that the tool alone will solve all the problems.

However, using the right tools without addressing the underlying processes can lead to a data mess that makes the original problem worse and costs more time and money. This mess can be avoided in the first place by pairing the right tools with modern best practices.

ETL (extract, transform, load) and ELT (extract, load, transform) both help businesses extract, load, and transform data, but they sequence those steps differently, and each has its own pros and cons.

ELT is generally more effective than ETL because raw data is loaded before it is transformed. This removes the risk of not having the necessary data for future use cases and offers more flexibility in the long term. Since storage is typically affordable, it makes more sense to simplify the ingestion process.
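As a rough illustration of the ELT sequence, the sketch below lands raw rows first and then transforms them with SQL inside the database. It uses Python’s built-in `sqlite3` as a stand-in for a cloud data warehouse. The table names, columns, and sample rows are made up for the example.

```python
import sqlite3

# Hypothetical source rows: (id, order_date, status, amount).
RAW_ORDERS = [
    (1, "2024-01-05", "completed", 120.0),
    (2, "2024-01-05", "cancelled", 40.0),
    (3, "2024-01-06", "completed", 75.5),
]


def load_raw(conn, rows):
    """Load step: land the source rows untouched, even ones no report needs yet."""
    conn.execute(
        "CREATE TABLE raw_orders (id INTEGER, order_date TEXT, status TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO raw_orders VALUES (?, ?, ?, ?)", rows)


def transform(conn):
    """Transform step: runs inside the database, after the data is loaded."""
    conn.execute(
        """
        CREATE TABLE daily_revenue AS
        SELECT order_date, SUM(amount) AS revenue
        FROM raw_orders
        WHERE status = 'completed'
        GROUP BY order_date
        """
    )


conn = sqlite3.connect(":memory:")
load_raw(conn, RAW_ORDERS)
transform(conn)

daily_revenue = conn.execute(
    "SELECT order_date, revenue FROM daily_revenue ORDER BY order_date"
).fetchall()
raw_count = conn.execute("SELECT COUNT(*) FROM raw_orders").fetchone()[0]
```

Because the cancelled order is still sitting in `raw_orders`, a later model such as a cancellation-rate report can be built without re-ingesting anything. In an ETL flow that filtered before loading, that row would be gone.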

Here’s a list of the top data transformation tools to manage the ETL process:

Each of these tools falls into one of two categories: code-based or visual/drag-and-drop interface. Both have their own set of pros and cons, which we’ll go through below.

Code-based tools allow you to transform data by using SQL or Python to explicitly define the transformation steps. This approach requires knowledge and experience, but visual tools don’t remove the need to know SQL either. Code gives users a high degree of flexibility and control, and it makes work easier to maintain and to validate before releasing it to production.

Moreover, it is simpler to trace each data transformation step without having a disconnected document explaining what the transformation “should” do.
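Here is a small sketch of what that looks like in practice: the transformation is plain SQL text that can be reviewed and versioned, and simple checks validate the result before release. The checks are loosely modeled on the idea of `not_null` and `unique` data tests. The helper names, tables, and data are hypothetical, and `sqlite3` stands in for a real warehouse.

```python
import sqlite3

# The transformation is plain text, so it can live in version control
# and be read line by line to see exactly what it does.
CUSTOMERS_SQL = """
    SELECT id AS customer_id, LOWER(TRIM(email)) AS email
    FROM raw_customers
    WHERE email IS NOT NULL
"""


def build_model(conn, name, select_sql):
    """Materialize a SELECT statement as a table (trusted, hard-coded names only)."""
    conn.execute(f"CREATE TABLE {name} AS {select_sql}")


def failing_rows_not_null(conn, table, column):
    """Count rows where the column is NULL; 0 means the check passes."""
    query = f"SELECT COUNT(*) FROM {table} WHERE {column} IS NULL"
    return conn.execute(query).fetchone()[0]


def failing_rows_unique(conn, table, column):
    """Count values that appear more than once; 0 means the check passes."""
    query = (
        f"SELECT COUNT(*) FROM ("
        f"SELECT {column} FROM {table} GROUP BY {column} HAVING COUNT(*) > 1)"
    )
    return conn.execute(query).fetchone()[0]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE raw_customers (id INTEGER, email TEXT)")
conn.executemany(
    "INSERT INTO raw_customers VALUES (?, ?)",
    [(1, " Ana@Example.com "), (2, None), (3, "bo@example.com")],
)

build_model(conn, "customers", CUSTOMERS_SQL)
rows = conn.execute(
    "SELECT customer_id, email FROM customers ORDER BY customer_id"
).fetchall()
```

A release step could refuse to promote the model unless both checks return zero failing rows.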

In our conversations with data teams at enterprise companies, the challenge of developing and orchestrating dbt pipelines has come up repeatedly.

There are a lot of tools to figure out when it comes to implementing best practices for digital transformations and custom applications. It’s not uncommon for companies to end up with more SaaS platforms and tools than they had initially planned. We built Datacoves to eliminate this problem by providing the following:

Datacoves focuses on helping companies accelerate growth by providing a complete ELT solution, including orchestration and visualization. Therefore, the learning curve for data transformation is minimized because of our best-practice accelerators and the available tool integrations to form an end-to-end platform.

Here is the extended version of the ELT process with Datacoves:

Develop modular code and track version changes that you and your team can view. You’re also able to validate the quality of data transformations with our built-in testing frameworks and generate documents to leave a record of how you’re transforming data.

You develop in a VS Code environment that can be configured with a vast array of VS Code extensions and Python libraries. All the modern data tools you need are provided in a structured workspace:

Datacoves is suitable for medium and large companies that lack the expertise, or simply don’t want, to create and manage complex data processes, but still need the flexibility that enterprise processes require.

Data teams can use all the components provided within the dbt ecosystem in a structured, methodical way with Datacoves. This means you’ll have a simplified dbt experience, yet you’ll still see the same results of dbt when used to its full potential.

Smaller companies also gain competitive advantages with Datacoves because they’ll be able to implement DataOps, follow best practices, and get a fully managed VS Code environment accelerating time to value.

If you would like to know more about how Datacoves can help, you can schedule a demo here.

dbt Cloud allows businesses to build and maintain data pipelines. It’s a cloud-based platform with a web-based IDE that allows you to transform data within a cloud data warehouse. It can help you reduce the time spent setting up an end-to-end solution.

dbt Cloud works well for organizations looking to reduce the time and effort required to build and maintain data transformation pipelines.

Since dbt Cloud’s IDE is web-based, it may feel limited for data teams that would rather use a VS Code environment. Moreover, dbt Cloud is not deployable in a company’s private cloud. It also typically requires other SaaS tools for complicated data pipelines, making it more difficult to manage unless you have the necessary integration experience with each of those SaaS tools.

Most importantly, dbt Cloud is focused solely on the data transformation step of the ELT process. You are also unable to load VS Code extensions or additional Python libraries. An enterprise with any level of complexity will also need a full-featured orchestrator.

Apache Airflow is an open-source platform for workflow management. You can orchestrate and schedule data pipelines. It’s a scalable and flexible platform that’s based on Python. You can also define your own operators with Airflow.

Apache Airflow works well for teams that need a scalable, open-source platform for orchestrating data transformations. It’s a particularly good choice for businesses that mainly use Python to manage their data.

However, Airflow is primarily an orchestrator. That means you may end up building complex code in your data pipelines. Therefore, developing and maintaining this complexity requires experience and technical expertise. Managing the infrastructure for Airflow is not trivial and also requires an understanding of tools like Docker and Kubernetes.

SAS is a solution that allows you to transform and prepare data for analysis. It offers a wide range of features for data transformation, including data cleaning, data integration, and data mining.

SAS is ideal for companies with complex datasets, such as those in financial services, healthcare, and retail industries. Additionally, it’s ideal for professionals with advanced skills and knowledge in data transformation.

With that in mind, there are better solutions than SAS for those less experienced in programming and data management. SAS licensing can also be quite expensive.

SQLMesh is a complete DataOps solution for data testing and transformation. Teams can use SQLMesh to collaborate on data pipelines when transforming data.

SQLMesh is well-suited for businesses with SQL and Python expertise that need to collaborate on complex data transformations and pipelines. Although other open-source tools are available, teams can use SQLMesh to maintain data quality and perform unit testing of their transformations.

SQLMesh may not be ideal when you only need to perform simple data transformations. In this case, there are other more straightforward tools available. Moreover, SQLMesh may not be for you when your primary focus is on real-time data processing.

Visual tools make the ELT process more straightforward by removing the need to manually write code. They work by letting you drag and drop pre-built components onto a canvas. This makes them ideal for data teams who aren’t as experienced in programming.

The biggest advantage of graphical tools for ETL is that people who are less comfortable with code can use them. Conversely, drag-and-drop tools typically don’t offer the same level of flexibility and control as code-based tools, which can complicate the process of debugging data pipelines and long-term maintenance.

Informatica helps you turn your data into an asset. It’s a cloud-based or on-prem solution for data management with numerous data transformation libraries and APIs available.

Informatica can be a good choice for large enterprises and data professionals looking to quickly transform large volumes of complex data using an on-premise solution. It can also be a good choice for companies that need to comply with industry-specific data standards.

However, it may be too complicated to use for some organizations. Informatica requires a team of experienced data engineers with the necessary skills and experience. DataOps can also be a challenge. Since you’ll be dealing with multiple things simultaneously, it’s easy to get lost in the process when you don’t have the full technical expertise.

Moreover, it’s an expensive solution. There are other more affordable alternatives.

Talend is a cloud-native platform deployable on public cloud solutions such as AWS, Azure, and GCP. They also offer an on-prem solution and provide a variety of components and custom connectors for data transformation.

Talend works for most businesses and data professionals. It’s particularly well-suited for those who need to:

Still, you may want to consider other options when prioritizing DataOps and performing highly specialized data transformations such as machine learning or NLP. Talend enterprise licenses may also be costly.

Azure Data Factory helps you simplify the data transformation process at scale. You’re provided with a code-free and code-centric experience for orchestrating data transformation pipelines.

Azure Data Factory could be the right option for data professionals working within the Azure ecosystem. Azure may be worth considering when you’re looking into data warehousing using Azure Synapse and Azure DataOps and not just ELT.

However, Azure Data Factory might not be the best option when you’re on a budget. As with any visual ELT tooling, DataOps and pipeline maintainability may be more complex, leading to an increased total cost of ownership.

Matillion is a cloud-based data transformation tool that provides integrations with on-premises databases, cloud applications, and SaaS platforms.

Matillion’s pre-built connectors and visual interface make it an ideal solution for less experienced data professionals. The disadvantage is that it can be costly for businesses on a budget. Moreover, you must ensure that Matillion supports your specific requirements and the way you intend to perform data transformations. Care must be given to the long-term maintainability of pipelines that are both visual and code-based.

Getting started with Matillion is simple because it uses a drag-and-drop interface for building data pipelines. But as with any other visual tool, there is still a learning curve, and it’s typical to have a mix of code and visual components in a production data pipeline.

Alteryx simplifies the data transformation process. You can automate advanced analytics and prepare data through self-service. It’s an effective solution that makes it easier for teams to collaborate. Unlike the other visual tools above which are typically used by Data Engineers in IT, Alteryx is more widely adopted in less technical departments of an organization. It’s also typically paired with visualization tools like Tableau.

Alteryx is a good option to help ensure teams are on the same page throughout the data workflow. Data transformation projects can be shared and feedback provided seamlessly, making collaboration easier.

The downside is that Alteryx is costly compared to the other tools on this list. Moreover, there is still a bit of a learning curve, even if you’re experienced in data analytics. You should also check that Alteryx fits the way your teams actually collaborate.

Data transformation is an error-prone process. While many of the tools listed can help you reduce friction, they must be carefully evaluated. With Datacoves, you’ll be able to implement data best practices and DataOps so that you have a smooth process with a minimized learning curve.

If you’d like to learn more about how Datacoves helps you accelerate time to value, you can schedule a free demo here.

Jinja is the game-changing feature of dbt Core that allows us to create dynamic SQL code. In addition to the standard Jinja library, dbt Core includes additional functions and variables to make working with dbt even more powerful out of the box.
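If “dynamic SQL” sounds abstract, the plain Python sketch below shows the idea: repetitive SQL is generated from a list instead of being typed out by hand. This is not Jinja and not how dbt is implemented. In a dbt model you would write a Jinja `{% for %}` loop inside the SQL file instead. The `payments` table, its columns, and the payment methods are hypothetical.

```python
def pivot_payments_sql(payment_methods):
    """Generate one SUM(CASE ...) column per payment method.

    Inputs are assumed to be trusted, hard-coded identifiers, as they
    would be in a project file; never interpolate user input like this.
    """
    columns = ",\n".join(
        f"    SUM(CASE WHEN payment_method = '{method}' THEN amount ELSE 0 END)"
        f" AS {method}_amount"
        for method in payment_methods
    )
    return (
        "SELECT\n"
        "    order_id,\n"
        f"{columns}\n"
        "FROM payments\n"
        "GROUP BY order_id"
    )


sql = pivot_payments_sql(["credit_card", "bank_transfer", "gift_card"])
print(sql)
```

Adding a fourth payment method means adding one item to the list rather than copying and editing another block of SQL. That is the kind of repetition Jinja templating removes in dbt.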

See our original post, The Ultimate dbt Jinja Cheat Sheet, to get started with Jinja fundamentals like syntax, variable assignment, looping, and more. Then dive into the information below, which covers the Jinja additions provided by dbt Core.

This cheatsheet references the extra functions, macros, filters, variables and context methods that are specific to dbt Core.

Enjoy!

These pre-defined dbt Jinja functions are essential to the dbt workflow: they let you reference models and sources, write documentation, print, write to the log, and more.

These macros are provided in dbt Core to improve the dbt workflow.

These dbt Jinja filters are used to modify data types.

These dbt Core "variables" such as config, target, source, and others contain values that provide contextual information about your dbt project and configuration settings. They are typically used for accessing and customizing the behavior of your dbt models based on project-specific and environment-specific information.

These special variables provide information about the current context in which your dbt code is running, such as the model, schema, or project name.

These methods allow you to retrieve information about models, columns, or other elements of your project.

Please contact us with any errors or suggestions.