Implementing dbt (data build tool) can revolutionize your organization's data maturity. However, if your organization is not ready to take advantage of dbt's benefits, it might not be the right time to start. Why? Because the success of data initiatives often hinges on aspects beyond the tooling itself.

Many companies rush into implementing dbt without assessing their organization’s maturity, and this leads to poor implementation. A poorly implemented dbt initiative can leave the organization frustrated and overwhelmed with technical debt, with resources wasted along the way. To avoid these pitfalls and ensure your organization is truly ready for dbt, you should complete an assessment of your organization's readiness by answering the questions presented later in this article.

Before diving into the maturity assessment questions, it’s important to understand what data maturity means. Data maturity is the extent to which an organization can effectively leverage its data to drive business value. It encompasses multiple areas, including:

Data-Driven Culture: Fostering an environment where data is integral to decision-making processes.

Data Quality: Ensuring data is accurate, consistent, and reliable.

Data Governance: Implementing policies and procedures to manage data assets.

Data Integration: Seamlessly combining data from various sources for a unified view.

A mature data organization not only ensures data accuracy and consistency but also embeds data-driven decision-making into its core operations.

By leveraging dbt's features, organizations can significantly enhance their data maturity, leading to better decision-making, improved data quality, robust governance, and seamless integration. For example:

Data-Driven Culture: By using dbt, you can improve many aspects that contribute to creating a data-driven culture within an organization. One way is by encouraging business users to provide or review the model and column descriptions that are embedded in dbt. You can also involve them in defining what data to test with dbt. Better data quality improves trust in the data, and more trust generally leads to more frequent use of and reliance on it.

Data Quality and Observability: dbt enables automated testing and validation of data transformations. This ensures data quality by catching issues like schema changes or data anomalies early in the pipeline. As your data quality and data observability needs grow you can assess where you are on the data maturity curve. For example, in a sales data model, we can write tests to ensure there are no negative order quantities and that each order has a valid customer ID. With dbt you can also understand data lineage and this can improve impact and root cause analysis when insights don’t seem quite right.
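To make the sales example concrete, here is a minimal Python sketch of the two rules mentioned above: no negative order quantities, and every order pointing at a known customer. In a dbt project these checks would normally be declared as tests on the model rather than written in Python; the `Order` class, the `failing_orders` function, and the sample data are hypothetical names used only for illustration. Like a dbt test, the check passes when it returns no failing rows.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Order:
    """Hypothetical row from a sales orders model."""
    order_id: int
    customer_id: Optional[int]
    quantity: int


def failing_orders(orders, known_customer_ids):
    """Return (order_id, reason) pairs for every row that breaks a rule."""
    failures = []
    for order in orders:
        # Rule 1: order quantities must not be negative.
        if order.quantity < 0:
            failures.append((order.order_id, "negative quantity"))
        # Rule 2: every order must reference a known customer.
        if order.customer_id not in known_customer_ids:
            failures.append((order.order_id, "unknown customer"))
    return failures


# Illustrative data only: order 2 has a negative quantity, order 3 has no customer.
orders = [
    Order(order_id=1, customer_id=100, quantity=2),
    Order(order_id=2, customer_id=101, quantity=-1),
    Order(order_id=3, customer_id=None, quantity=5),
]
customers = {100, 101}

failures = failing_orders(orders, customers)
print(failures)
```

An empty result means the data passed. Anything else is a list of rows someone needs to look at before the data reaches a dashboard.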

Data Governance: dbt facilitates version control and documentation for all transformations, enhancing transparency and accountability. Organizations can track changes to data models ensuring compliance with data governance policies.

Data Integration: dbt supports the integration of data from multiple sources by providing a framework for consistent and reusable transformations. This allows for the creation of unified data models that provide a holistic view of business operations.

Now that we understand what data maturity is and how dbt can help improve it, you might be ready to jump on the dbt bandwagon. But first, we encourage you to assess your organization’s readiness for dbt. The journey to data maturity involves not only choosing the right tools but also ensuring that your organization is philosophically and operationally prepared to take full advantage of these tools. It is important to recognize that dbt’s approach requires a shift in mindset towards modern data practices, emphasizing transparency, collaboration, and automation.

To determine if your organization is mature enough for dbt or if dbt is the right fit, consider the following assessment questions:

dbt requires a philosophical alignment with its principles, such as ELT (Extract, Load, Transform) instead of the traditional ETL (Extract, Transform, Load) approach. dbt is also based on idempotency, meaning that given the same input, you will always get the same output. This is different from traditional ETL, which may use incompatible constructs like auto-incrementing primary keys, whose values depend on load order and history rather than on the input data. If your organization prefers processes that are incompatible with dbt’s methodology, you will face challenges fighting the dbt framework to make it do something it was not intended to do.
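The idempotency point can be illustrated with a small Python sketch. It compares a key derived by hashing business columns with a key handed out by a running counter, which stands in for a database sequence. The function names and the sample rows are hypothetical, and the hash-based key is one common approach rather than the only one.

```python
import hashlib
from itertools import count


def surrogate_key(*parts):
    """Derive a key from the business columns themselves (deterministic)."""
    joined = "|".join(str(part) for part in parts)
    return hashlib.md5(joined.encode("utf-8")).hexdigest()


def load_with_hash_keys(rows):
    """Rebuild the table using keys computed from the input data."""
    return {surrogate_key(r["source"], r["customer_number"]): r for r in rows}


# Simulates a database sequence that keeps counting across rebuilds.
_sequence = count(1)


def load_with_auto_increment(rows):
    """Rebuild the table using keys handed out by a running counter."""
    return {next(_sequence): r for r in rows}


# Illustrative input only.
rows = [
    {"source": "crm", "customer_number": "C-1"},
    {"source": "crm", "customer_number": "C-2"},
]

# Same input, two rebuilds: the hashed keys match.
first = load_with_hash_keys(rows)
second = load_with_hash_keys(rows)
hash_is_idempotent = set(first) == set(second)

# Same input, two rebuilds: the counter hands out 1, 2 and then 3, 4.
auto_first = load_with_auto_increment(rows)
auto_second = load_with_auto_increment(rows)
auto_is_idempotent = set(auto_first) == set(auto_second)

print(hash_is_idempotent, auto_is_idempotent)
```

With hashed keys, dropping and rebuilding a table leaves every downstream join intact. With counter-based keys, a rebuild silently changes the identifiers that other tables rely on.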

Simply migrating existing processes and code to dbt without rethinking them won’t leverage dbt’s full potential. Assess whether you’re ready to redesign your workflows to take advantage of dbt’s capabilities such as incremental tables, snapshots, seeds, etc.
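As a rough illustration of what rethinking a workflow can mean, the Python sketch below shows the idea behind an incremental load: after the first run, only rows newer than what the target already holds are processed. This is a conceptual sketch, not dbt's implementation. The `incremental_merge` function, the column names, and the integer timestamps are simplifying assumptions.

```python
def incremental_merge(target, source_rows, key="id", updated_at="updated_at"):
    """Upsert only the source rows newer than the target's high-water mark.

    `target` is a dict keyed by primary key and is modified in place.
    Returns the number of rows processed in this run.
    """
    # The newest timestamp already loaded; None means this is the first run.
    high_water_mark = max(
        (row[updated_at] for row in target.values()), default=None
    )
    new_rows = [
        row
        for row in source_rows
        if high_water_mark is None or row[updated_at] > high_water_mark
    ]
    for row in new_rows:
        target[row[key]] = row  # insert a new key or overwrite an existing one
    return len(new_rows)


# Illustrative data only; timestamps are plain integers for simplicity.
target = {}
source_day1 = [
    {"id": 1, "status": "new", "updated_at": 1},
    {"id": 2, "status": "new", "updated_at": 2},
]
source_day2 = source_day1 + [
    {"id": 1, "status": "shipped", "updated_at": 3},
    {"id": 3, "status": "new", "updated_at": 4},
]

first_run = incremental_merge(target, source_day1)   # full load
second_run = incremental_merge(target, source_day2)  # only the two newer rows
third_run = incremental_merge(target, source_day2)   # nothing new to process
print(first_run, second_run, third_run)
```

A lift-and-shift migration that reloads every table in full on every run would never exercise this pattern, which is part of why migrating code unchanged tends to leave dbt's benefits unused.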

dbt offers excellent features for data quality and documentation. Evaluate if your team is prepared to prioritize the utilization of these features to enhance transparency and trust in your data. Tests and model descriptions will not write themselves. When it comes to good descriptions, they shouldn't come from a data engineering team that does not know how the data is used or the best data quality rules to implement. Good descriptions must involve business user review at a minimum.

The goal of dbt is to empower various teams including IT and business users by using the same tooling. Consider if your organization is ready to foster this cross-functional collaboration. When you implement dbt correctly, you will empower anyone who knows SQL to contribute. You can have multiple teams contribute to the insight delivery process and still ensure proper governance and testing before updating production.

Automation is key to achieving efficiency with dbt. Implementing automated deployment, testing, and CI/CD pipelines can significantly improve your workflows. If you aren’t ready to automate, the benefits of dbt may not be fully realized. If you simply put in dbt without thinking about the end-to-end process and the failure points, you will miss opportunities to catch errors. The spaghetti code you have today didn't happen just because you were not using dbt.
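One way to think about the end-to-end process and its failure points is as an ordered list of gates, where a failure at any gate stops the release. The Python sketch below is a generic illustration of that idea and is not tied to any particular CI system. The step names are hypothetical, and each step is a stand-in function that reports success or failure.

```python
def run_pipeline(steps):
    """Run (name, callable) steps in order and stop at the first failure."""
    completed = []
    for name, step in steps:
        if not step():
            # A failed gate blocks everything after it, including deployment.
            return {"deployed": False, "failed_step": name, "completed": completed}
        completed.append(name)
    return {"deployed": True, "failed_step": None, "completed": completed}


# Hypothetical steps; each lambda stands in for a real check.
steps = [
    ("lint_sql", lambda: True),
    ("build_models_in_ci_schema", lambda: True),
    ("run_data_tests", lambda: False),  # simulate a failing data test
    ("deploy_to_production", lambda: True),
]

result = run_pipeline(steps)
print(result)
```

Because the data tests fail here, the deployment step never runs. Listing the gates explicitly like this also makes it easier to see which failure points have no automated check at all.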

dbt is a framework, not a silver bullet. Merely changing tools without altering your underlying processes will not solve existing issues. This is a huge issue with organizations that have not done the work to create a data-driven culture. Assess if your team is ready to adopt better naming conventions and more structured processes to make data more understandable.

Data immaturity might manifest as a reliance on manual processes, lack of data quality controls, or poor documentation practices. These factors can derail the effective implementation of dbt since dbt thrives in environments where data practices are robust and standardized. In other words, dbt alone will not solve these problems.

Ensuring your organization is ready for the changes that come with implementing dbt is not just best practice, it is essential for success. By thoroughly assessing your readiness, you can avoid technical debt, optimize your workflows, and fully harness the power of dbt. Remember, dbt is a powerful tool, but its effectiveness depends on the readiness of your organization to improve data practices and its alignment with dbt’s philosophy.

Digital transformation is often seen through the lens of technological advancement and process optimization. Most blog posts and guides out there revolve around implementing new software, automating tasks, and digitizing operations. Yet, there's a pivotal element that's frequently overlooked in these discussions, especially when it comes to an enterprise: the mindset and culture within an organization. This article aims to shed light on why this is crucial in achieving true digital transformation. But first, let's investigate what digital transformation is and why it is important.

Digital transformation is the integration of digital technology into all areas of a business, fundamentally changing how it operates and delivers value to customers. It is more than just a technological upgrade; it is a cultural shift that requires organizations to continually challenge the status quo, experiment, and get comfortable with failure. This often means walking away from long-standing business processes that companies were built upon to embrace new ways of working. Most organizations find this part the most challenging.

To achieve digital transformation in an enterprise, nine times out of ten there must be a change in company culture. However, changing a company's culture is a formidable task. It is rare to hear statements like, “We need to fundamentally change our problem-solving approach.” This realization became clear to me through my past experiences as I noticed that managers often lacked the influence to drive change at the highest organizational levels. Additionally, the pressure to deliver quick results within budget cycles frequently hindered genuine cultural transformation.

During my tenure at various companies, under numerous managers, the consistent message was the need for improvement. However, I have come to understand that organizations, much like fireflies, develop their own rhythms. It is this unique rhythm that sets apart innovative and transformative companies from those that merely follow without achieving similar success. What do I mean by this? Let’s turn to nature for an explanation.

Nature is fascinating, especially when observing how hundreds or thousands of fireflies can synchronize their flashes.

In organizations, a similar phenomenon occurs. People will sync up and follow the status quo, even if it is not what is best for the organization. This dramatically hinders digital transformation because the loudest are not always right and yet they cause others to sync up with them. This will cause innovation to be stopped in its tracks.

In addition to this firefly phenomenon, often action differs from ambition. I recall a staff meeting with a former CIO discussing a future less dependent on Microsoft and more open to non-Windows devices. It was clear that iPhones were going to change the corporate landscape. Despite this, every new tool implemented was still optimized for Internet Explorer. This discrepancy between ambition and action often drives analytical people like me to frustration. To effect change, persistence is key. I have had ideas initially dismissed as “not my job,” only to see one later turn into a patented invention.

This manifests itself in other ways as well; have you ever seen a company advocate for fewer meetings while simultaneously criticizing those who do not include “everyone” in decision-making? I have been in such situations and can attest that decision-making by committee is not inherently superior. In fact, the more people involved in an initiative, the less effective it tends to be. This, I believe, is due to the Dunning-Kruger effect.

The more people you involve in a transformation initiative, the more likely the discussions will deteriorate into bikeshedding. When there is a disconnect between what is said and what is done, people take notice, and it breeds discontent.

Even in my most successful transformation initiatives, the radius of transformation has been limited to my sphere of influence. Sure, some of my tools and processes got global and cross-functional acceptance, but the underlying principles never took hold because they were too radical for the organization at the time. I was not part of the IT organization so the things I did were typically seen as shadow IT. Instead of focusing on what I should not be doing, it would have been more progressive for them to see how I was practicing Agile principles. They could have inquired about how my project was doing DevOps before that was in style, or how it was that this non-sanctioned product was extremely well received and people sought me out to help them improve their processes.

This means if you want the organization to be more innovative, you need to find the obstacles that hold people back from being innovative. Often politics and bureaucracy impact an initiative more than the solution itself. If you force everyone to comply with existing tools and processes, then you are imposing a constraint on the team that will limit innovation.

A typical way this manifests itself is leadership pushing the idea that one platform or process can solve every need. This can come in the form of imposing that a particular group do data transformation, or a visualization tool be the way that everyone can do analytics. I have never seen one tool that is good at everything, and you end up balancing the single solution with an unmanageable array of tools and processes. A healthy organization is a learning organization that is always open to improvement. When management encourages pushing boundaries and not taking anything as fact then the company can innovate.

A great example of driving innovation is seen in the approach of Steve Jobs, co-founder of Apple Inc. Jobs was known for his ability to challenge conventional wisdom and existing standards in the technology industry. He emphasized the importance of understanding the fundamental principles underlying a problem to innovate and create groundbreaking solutions. One notable instance was the development of the iPhone, which revolutionized the smartphone industry. Jobs and his team did not just improve on existing phones; they rethought what a phone could be, focusing on user experience and simplicity. This approach led to a product that dramatically altered how people interact with technology.

As a leader, you need to look for the fireflies who are using first principles like Steve Jobs to deliver innovative solutions and nurture, or create, a corporate culture that truly challenges what has been done without artificial constraints.

Reasoning by first principles removes the impurity of assumptions and conventions. What remains is the essentials. It’s one of the best mental models you can use to improve your thinking because the essentials allow you to see where reasoning by analogy might lead you astray.

The transformative and innovative thinkers will either comply or leave, both of which are undesirable. In my case I tended to leave. In every organization where I have worked, I have managed to make a significant impact, often through sheer determination. During my time at one such company, our goal was to introduce a data catalog. By analyzing the problem, I was able to discern what was essential for our organization versus an elaborate and idealistic vision of a catalog capable of doing everything. While the IT organization felt it would be better to create a home-grown catalog, I understood that our biggest obstacle was getting people to use a catalog in the first place, so time to market was critical. I found that Alation met the needs we had, while IT kept to their vision of building an all-encompassing catalog. In 3 months I had deployed Alation and, 1.5 years later, the home-grown solution was a tenth as good. This approach of breaking down the problem to its basic elements and building up from there was critical. It is often underestimated how challenging it is to develop and maintain custom software. This experience highlights the effectiveness of first principles thinking in deploying practical and efficient solutions.

The reality is that not everyone possesses the tenacity to advocate for change, especially in the face of substantial resistance. Not only that, but I have also witnessed people being ostracized for thinking differently, while others were promoted for fitting in. It is crucial to seek out divergent thinkers and consider the validity of their perspectives, instead of forcing them to conform. This is why true digital transformation necessitates a shift in culture.

When an individual, much like a firefly that does not flash in unison with the rest, finds themselves out of sync with the collective rhythm, they face a decision: conform and synchronize with the group or venture out to find a new collective that resonates with their unique spark.

True transformational change must come from the top. Achieving enterprise digital transformation requires a deep and bold questioning of the status quo. We must critically assess our processes: Is a particular task truly necessary for a certain group? Can we identify and eliminate inefficiencies? Will adding another layer of approval or inspection genuinely enhance outcomes? It is essential to remember that human behavior often has a more profound impact than any technology or process we implement. When decision-making is centralized within one group, solutions are inevitably skewed to reflect their viewpoint. Too often, I have witnessed decisions justified by cost considerations that, upon closer inspection, proved detrimental in the broader context. An effective strategy involves analyzing the entire system, recognizing that optimizing the whole may require accepting lower efficiency in some areas.

The key is to align with the needs of users and the organization and engage leadership in this journey. With a united front, tackling the 'corporate dragons' becomes a more manageable endeavor. One practical approach is employing methodologies like the 'Job to be Done' framework.

Company culture and change management are frequently overlooked in the pursuit of process improvement. Employees operate within their limitations, while management ponders the lack of innovation and agility compared to other companies. The simpler path might seem to be increasing staff or updating technology, but the heart of transformation lies in the mindset of the organization. Leaders aiming for a lasting impact must embrace first principles thinking, ready to scrutinize and challenge established norms. Transformational change rarely stems from incremental improvements; truly innovative companies are those that dare to think and act differently. The organization thus faces a pivotal choice: will it adapt to a new rhythm, or compel its 'new fireflies' to fall in line with the existing order?

The top dbt alternatives include Datacoves, SQLMesh, Bruin Data, Dataform, and visual ETL tools such as Alteryx, Matillion, and Informatica. Code-first engines offer stronger rigor, testing, and CI/CD, while GUI platforms emphasize ease of use and rapid prototyping. Teams choose these alternatives when they need more security, governance, or flexibility than dbt Core or dbt Cloud provide.

Teams explore dbt alternatives when they need stronger governance, private deployments, or support for Python and code-first workflows that go beyond SQL. Many also prefer GUI-based ETL tools for faster onboarding. Recent market consolidation, including Fivetran acquiring SQLMesh and merging with dbt Labs, has increased concerns about vendor lock-in, which makes tool neutrality and platform flexibility more important than ever.

Teams look for dbt alternatives when they need stronger orchestration, consistent development environments, Python support, or private cloud deployment options that dbt Cloud does not provide.

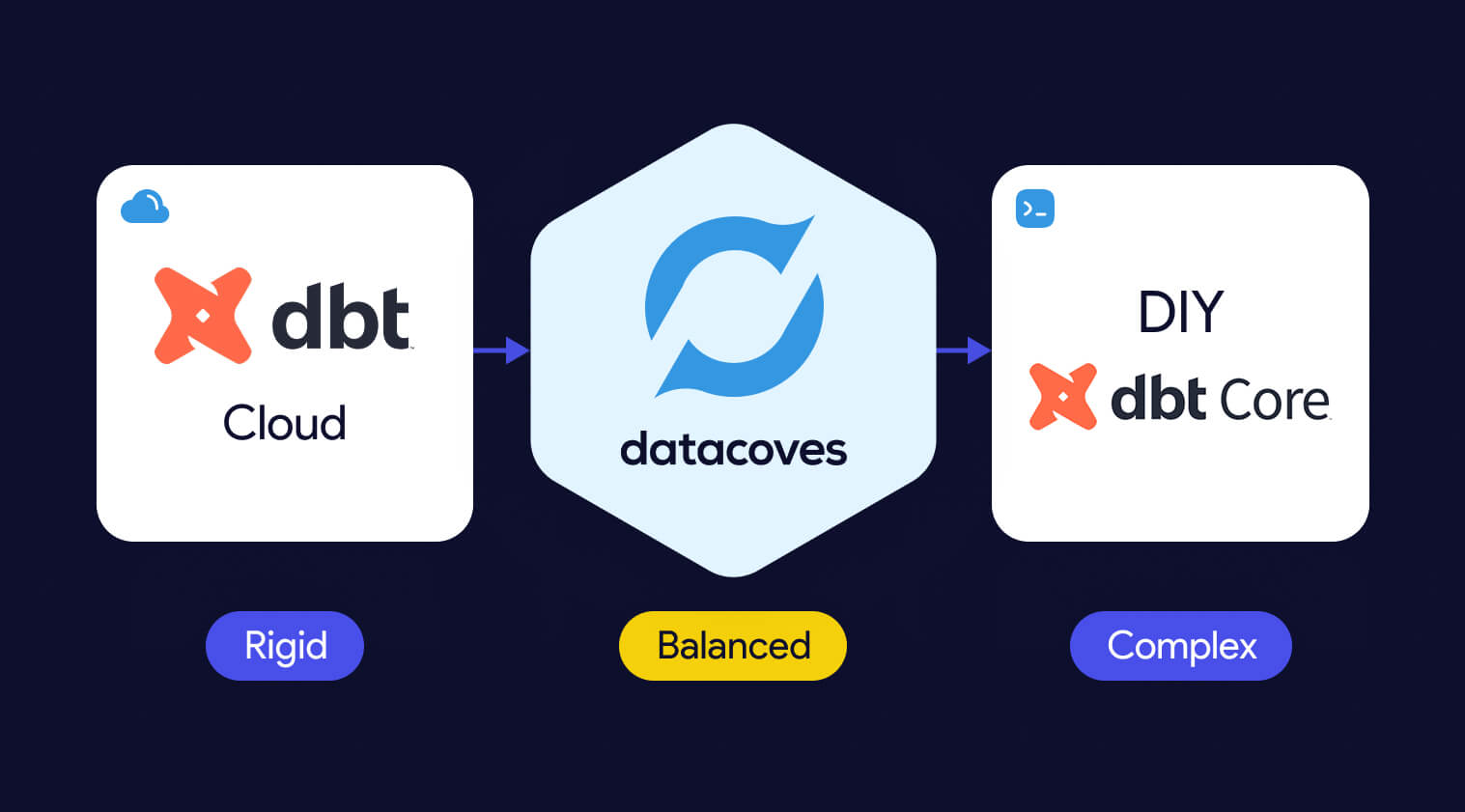

Organizations evaluating dbt alternatives typically compare tools across three categories. Each category reflects a different approach to data transformation, development preferences, and organizational maturity.

Organizations consider alternatives to dbt Cloud when they need more flexibility, stronger security, or support for development workflows that extend beyond dbt. Teams comparing platform options often begin by evaluating the differences between dbt Cloud vs dbt Core.

Running enterprise-scale ELT pipelines often requires a full orchestration layer, consistent development environments, and private deployment options that dbt Cloud does not provide. Costs can also increase at scale (see our breakdown of dbt pricing considerations), and some organizations prefer to avoid features that are not open source to reduce long-term vendor lock-in.

This category includes platforms that deliver the benefits of dbt Cloud while providing more control, extensibility, and alignment with enterprise data platform requirements.

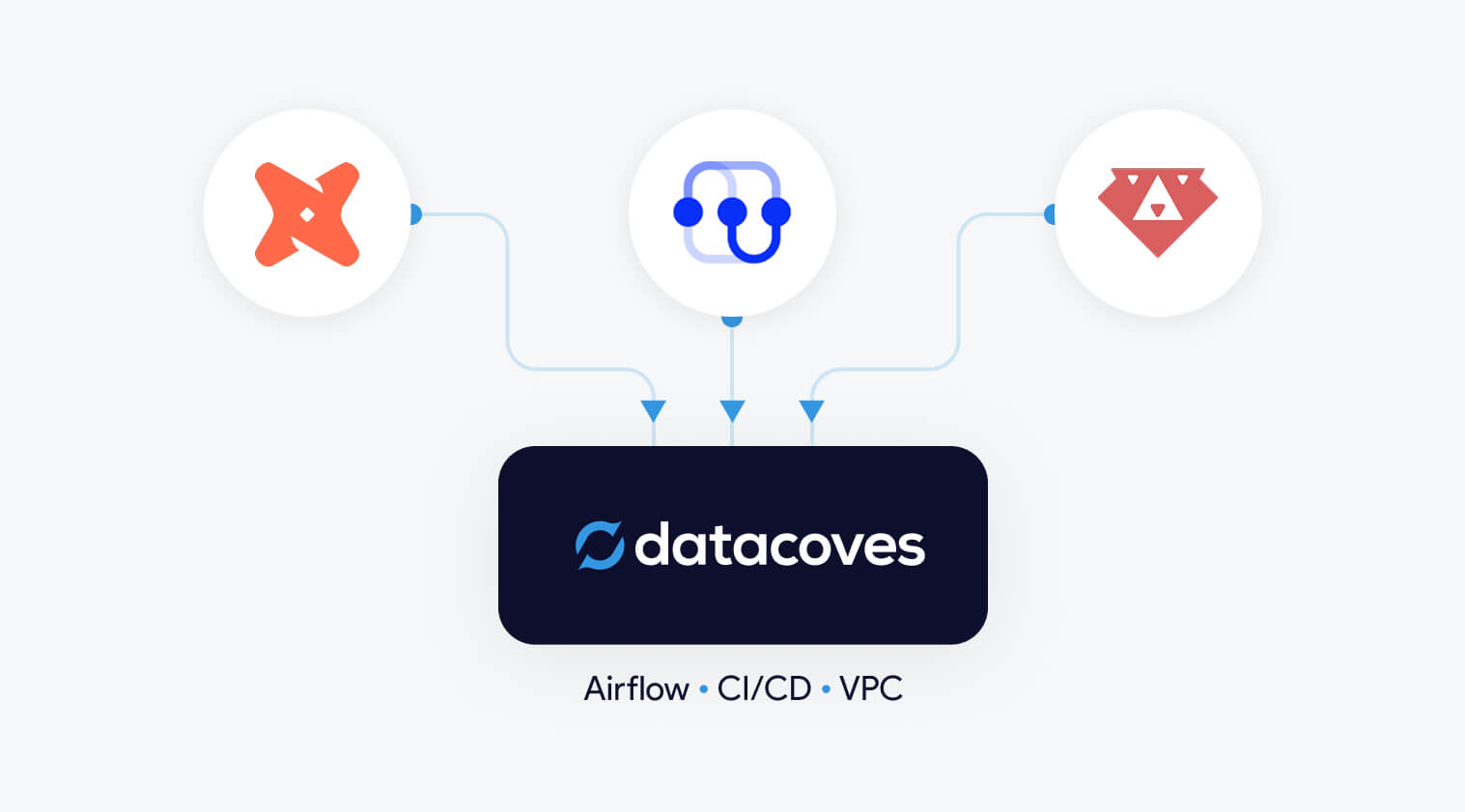

Datacoves provides a secure, flexible platform that supports dbt, SQLMesh, and Bruin in a unified environment with private cloud or VPC deployment.

Datacoves is an enterprise data platform that serves as a secure, flexible alternative to dbt Cloud. It supports dbt Core, SQLMesh, and Bruin inside a unified development and orchestration environment, and it can be deployed in your private cloud or VPC for full control over data access and governance.

Benefits

Flexibility and Customization:

Datacoves provides a customizable in-browser VS Code IDE, Git workflows, and support for Python libraries and VS Code extensions. Teams can choose the transformation engine that fits their needs without being locked into a single vendor.

Handling Enterprise Complexity:

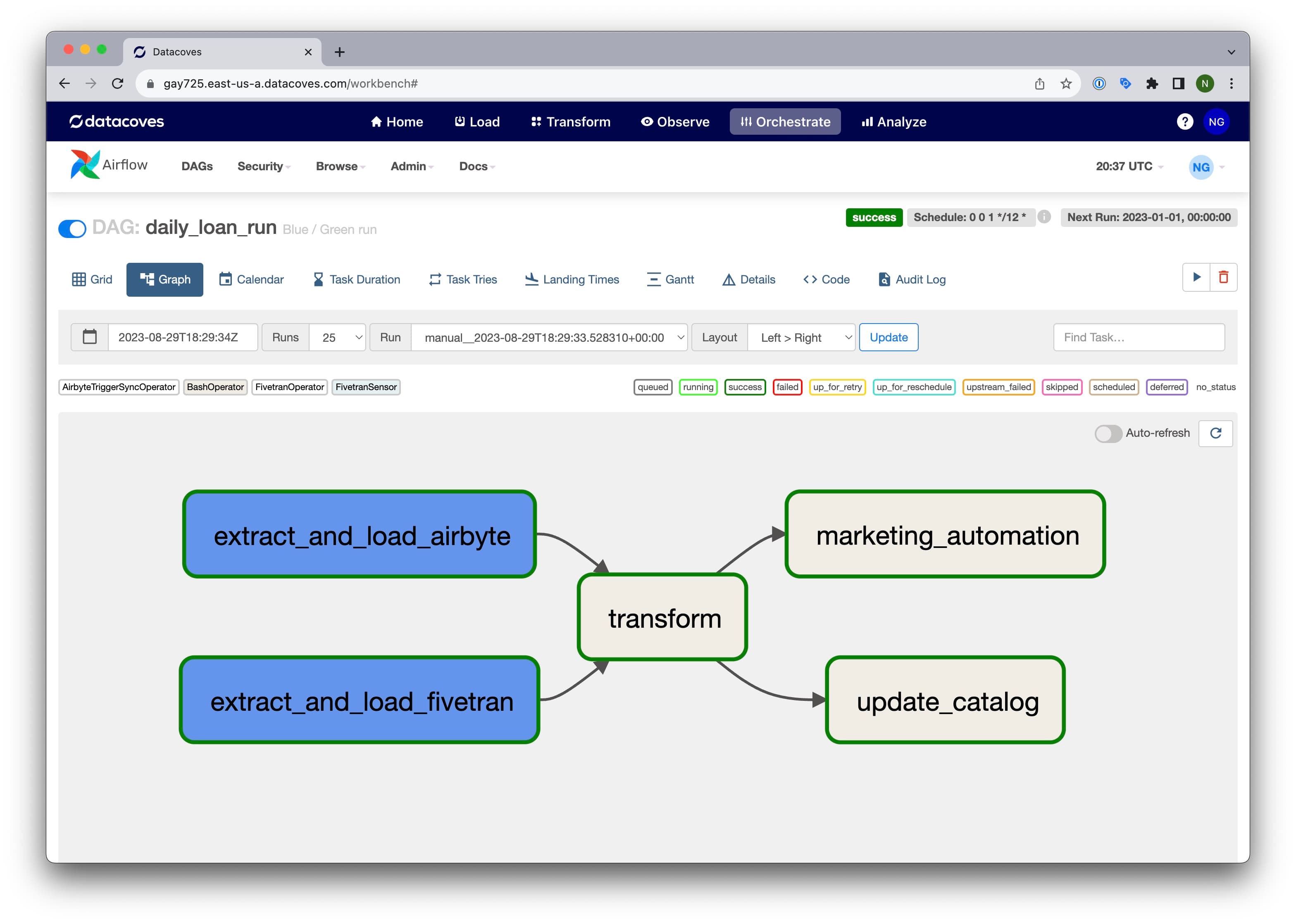

Datacoves includes managed Airflow for end-to-end orchestration, making it easy to run dbt and Airflow together without maintaining your own infrastructure. It standardizes development environments, manages secrets, and supports multi-team and multi-project workflows without platform drift.

Cost Efficiency:

Datacoves reduces operational overhead by eliminating the need to maintain separate systems for orchestration, environments, CI, logging, and deployment. Its pricing model is predictable and designed for enterprise scalability.

Data Security and Compliance:

Datacoves can be deployed fully inside your VPC or private cloud. This gives organizations complete control over identity, access, logging, network boundaries, and compliance with industry and internal standards.

Reduced Vendor Lock-In:

Datacoves supports dbt, SQLMesh, and Bruin Data, giving teams long-term optionality. This avoids being locked into a single transformation engine or vendor ecosystem.

Running dbt Core yourself is a flexible option that gives teams full control over how dbt executes. It is also the most resource-intensive approach. Teams choosing DIY dbt Core must manage orchestration, scheduling, CI, secrets, environment consistency, and long-term platform maintenance on their own.

Benefits

Full Control:

Teams can configure dbt Core exactly as they want and integrate it with internal tools or custom workflows.

Cost Flexibility:

There are no dbt Cloud platform fees, but total cost of ownership often increases as the system grows.

Considerations

High Maintenance Overhead:

Teams must maintain Airflow or another orchestrator, build CI pipelines, manage secrets, and keep development environments consistent across users.

Requires Platform Engineering Skills:

DIY dbt Core works best for teams with strong Kubernetes, CI, Python, and DevOps expertise. Without this expertise, the environment becomes fragile over time.

Slow to Scale:

As more engineers join the team, keeping dbt environments aligned becomes challenging. Onboarding, upgrades, and platform drift create operational friction.

Security and Compliance Responsibility:

Identity, permissions, logging, and network controls must be designed and maintained internally, which can be significant for regulated organizations.

Teams that prefer code-first tools often look for dbt alternatives that provide strong SQL modeling, Python support, and seamless integration with CI/CD workflows and automated testing. These are part of a broader set of data transformation tools. Code-based ETL tools give developers greater control over transformations, environments, and orchestration patterns than GUI platforms. Below are four code-first contenders that organizations should evaluate.

Code-first dbt alternatives like SQLMesh, Bruin Data, and Dataform provide stronger CI/CD integration, automated testing, and more control over complex transformation workflows.

SQLMesh is an open-source framework for SQL and Python-based data transformations. It provides strong visibility into how changes impact downstream models and uses virtual data environments to preview changes before they reach production.

Benefits

Efficient Development Environments:

Virtual environments reduce unnecessary recomputation and speed up iteration.

Considerations

Part of the Fivetran Ecosystem:

SQLMesh was acquired by Fivetran, which may influence its future roadmap and level of independence.

Dataform is a SQL-based transformation framework built specifically for BigQuery. It enables teams to create table definitions, manage dependencies, document models, and configure data quality tests inside the Google Cloud ecosystem. It also provides version control and integrates with GitHub and GitLab.

Benefits

Centralized BigQuery Development:

Dataform keeps all modeling and testing within BigQuery, reducing context switching and making it easier for teams to collaborate using familiar SQL workflows.

Considerations

Focused Only on the GCP Ecosystem:

Because Dataform is geared toward BigQuery, it may not be suitable for organizations that use multiple cloud data warehouses.

AWS Glue is a serverless data integration service that supports Python-based ETL and transformation workflows. It works well for organizations operating primarily in AWS and provides native integration with services like S3, Lambda, and Athena.

Benefits

Python-First ETL in AWS:

Glue supports Python scripts and PySpark jobs, making it a good fit for engineering teams already invested in the AWS ecosystem.

Considerations

Requires Engineering Expertise:

Glue can be complex to configure and maintain, and its Python-centric approach may not be ideal for SQL-first analytics teams.

Bruin is a modern SQL-based data modeling framework designed to simplify development, testing, and environment-aware deployments. It offers a familiar SQL developer experience while adding guardrails and automation to help teams manage complex transformation logic.

Benefits

Modern SQL Modeling Experience:

Bruin provides a clean SQL-first workflow with strong dependency management and testing.

Considerations

Growing Ecosystem:

Bruin is newer than dbt and has a smaller community and fewer third-party integrations.

While code-based transformation tools provide the most flexibility and long-term maintainability, some organizations prefer graphical user interface (GUI) tools. These platforms use visual, drag-and-drop components to build data integration and transformation workflows. Many of these platforms fall into the broader category of no-code ETL tools. GUI tools can accelerate onboarding for teams less comfortable with code editors and may simplify development in the short term. Below are several GUI-based options that organizations often consider as dbt alternatives.

GUI-based dbt alternatives such as Matillion, Informatica, and Alteryx use drag-and-drop interfaces that simplify development and accelerate onboarding for mixed-skill teams.

Matillion is a cloud-based data integration platform that enables teams to design ETL and transformation workflows through a visual, drag-and-drop interface. It is built for ease of use and supports major cloud data warehouses such as Amazon Redshift, Google BigQuery, and Snowflake.

Benefits

User-Friendly Visual Development:

Matillion simplifies pipeline building with a graphical interface, making it accessible for users who prefer low-code or no-code tooling.

Considerations

Limited Flexibility for Complex SQL Modeling:

Matillion’s visual approach can become restrictive for advanced transformation logic or engineering workflows that require version control and modular SQL development.

Informatica is an enterprise data integration platform with extensive ETL capabilities, hundreds of connectors, data quality tooling, metadata-driven workflows, and advanced security features. It is built for large and diverse data environments.

Benefits

Enterprise-Scale Data Management:

Informatica supports complex data integration, governance, and quality requirements, making it suitable for organizations with large data volumes and strict compliance needs.

Considerations

High Complexity and Cost:

Informatica’s power comes with a steep learning curve, and its licensing and operational costs can be significant compared to lighter-weight transformation tools.

Alteryx is a visual analytics and data preparation platform that combines data blending, predictive modeling, and spatial analysis in a single GUI-based environment. It is designed for analysts who want to build workflows without writing code and can be deployed on-premises or in the cloud.

Benefits

Powerful GUI Analytics Capabilities:

Alteryx allows users to prepare data, perform advanced analytics, and generate insights in one tool, enabling teams without strong coding skills to automate complex workflows.

Considerations

High Cost and Limited SQL Modeling Flexibility:

Alteryx is one of the more expensive platforms in this category and is less suited for SQL-first transformation teams who need modular modeling and version control.

Azure Data Factory (ADF) is a fully managed, serverless data integration service that provides a visual interface for building ETL and ELT pipelines. It integrates natively with Azure storage, compute, and analytics services, allowing teams to orchestrate and monitor pipelines without writing code.

Benefits

Strong Integration for Microsoft-Centric Teams:

ADF connects seamlessly with other Azure services and supports a pay-as-you-go model, making it ideal for organizations already invested in the Microsoft ecosystem.

Considerations

Limited Transformation Flexibility:

ADF excels at data movement and orchestration but offers limited capabilities for complex SQL modeling, making it less suitable as a primary transformation engine.

Talend provides an end-to-end data management platform with support for batch and real-time data integration, data quality, governance, and metadata management. Talend Data Fabric combines these capabilities into a single low-code environment that can run in cloud, hybrid, or on-premises deployments.

Benefits

Comprehensive Data Quality and Governance:

Talend includes built-in tools for data cleansing, validation, and stewardship, helping organizations improve the reliability of their data assets.

Considerations

Broad Platform, Higher Operational Complexity:

Talend’s wide feature set can introduce complexity, and teams may need dedicated expertise to manage the platform effectively.

SQL Server Integration Services is part of the Microsoft SQL Server ecosystem and provides data integration and transformation workflows. It supports extracting, transforming, and loading data from a wide range of sources, and offers graphical tools and wizards for designing ETL pipelines.

Benefits

Strong Fit for SQL Server-Centric Teams:

SSIS integrates deeply with SQL Server and other Microsoft products, making it a natural choice for organizations with a Microsoft-first architecture.

Considerations

Not Designed for Modern Cloud Data Warehouses:

SSIS is optimized for on-premises SQL Server environments and is less suitable for cloud-native architectures or modern ELT workflows.

Recent consolidation, including Fivetran acquiring SQLMesh and merging with dbt Labs, has increased concerns about vendor lock-in and pushed organizations to evaluate more flexible transformation platforms.

Organizations explore dbt alternatives when dbt no longer meets their architectural, security, or workflow needs. As teams scale, they often require stronger orchestration, consistent development environments, mixed SQL and Python workflows, and private deployment options that dbt Cloud does not provide.

Some teams prefer code-first engines for deeper CI/CD integration, automated testing, and strong guardrails across developers. Others choose GUI-based tools for faster onboarding or broader integration capabilities. Recent market consolidation, including Fivetran acquiring SQLMesh and merging with dbt Labs, has also increased concerns about vendor lock-in.

These factors lead many organizations to evaluate tools that better align with their governance requirements, engineering preferences, and long-term strategy.

DIY dbt Core offers full control but requires significant engineering work to manage orchestration, CI/CD, security, and long-term platform maintenance.

Running dbt Core yourself can seem attractive because it offers full control and avoids platform subscription costs. However, building a stable, secure, and scalable dbt environment requires significantly more than executing dbt build on a server. It involves managing orchestration and CI/CD, keeping development environments consistent, and maintaining the platform over the long term, all of which require mature DataOps practices.

The true question for most organizations is not whether they can run dbt Core themselves, but whether it is the best use of engineering time. This is essentially a question of whether to build vs buy your data platform. DIY dbt platforms often start simple and gradually accumulate technical debt as teams grow, pipelines expand, and governance requirements increase.

For many organizations, DIY works in the early stages but becomes difficult to sustain as the platform matures.

The right dbt alternative depends on your team’s skills, governance requirements, pipeline complexity, and long-term data platform strategy.

Each category of tools solves different problems, so it is important to evaluate your team’s skills, security requirements, and long-term data platform strategy before committing to a solution.

If these are priorities, a platform with secure deployment options or multi-engine support may be a better fit than dbt Cloud.

Recent consolidation in the ecosystem has raised concerns about vendor dependency. Organizations that want long-term flexibility often look for:

Consider platform fees, engineering maintenance, onboarding time, and the cost of additional supporting tools such as orchestrators, IDEs, and environment management.

dbt remains a strong choice for SQL-based transformations, but it is not the only option. As organizations scale, they often need stronger orchestration, consistent development environments, Python support, and private deployment capabilities that dbt Cloud or DIY dbt Core may not provide. Evaluating alternatives helps ensure that your transformation layer aligns with your long-term platform and governance strategy.

Code-first tools like SQLMesh, Bruin Data, and Dataform offer strong engineering workflows, while GUI-based tools such as Matillion, Informatica, and Alteryx support faster onboarding for mixed-skill teams. The right choice depends on the complexity of your pipelines, your team’s technical profile, and the level of security and control your organization requires.

Datacoves provides a flexible, secure alternative that supports dbt, SQLMesh, and Bruin in a unified environment. With private cloud or VPC deployment, managed Airflow, and a standardized development experience, Datacoves helps teams avoid vendor lock-in while gaining an enterprise-ready platform for analytics engineering.

Selecting the right dbt alternative is ultimately about aligning your transformation approach with your data architecture, governance needs, and long-term strategy. Taking the time to assess these factors will help ensure your platform remains scalable, secure, and flexible for your future needs.

Companies are investing heavily to become data-driven and to democratize data access. However, many are not achieving the transformative outcomes they expected.

The core issue? A lack of trust.

This mistrust stems from a lack of focus on core aspects that ensure a robust data-driven culture and critical mistakes in these areas.

Fortunately, these mistakes are self-inflicted, which means they can be fixed. This article highlights these pitfalls and how to address them. By understanding and adhering to the core pillars of a data-driven culture and avoiding the common mistakes, organizations can develop and maintain a data-driven culture that people can trust.

It is no secret that there is power and opportunity in data, and a data-driven culture is the approach that aims to take advantage of both.

A data-driven culture is not about hastily adopting the latest tools or technologies in the hope of resolving data challenges. This common mistake often leads to a focus on immediate results or 'shiny objects', such as acquiring cutting-edge technology or hiring new talent. Unfortunately, this approach tends to overlook essential priorities and gradually erodes the foundation of a data-driven culture: Trust in the data.

Many companies struggle with effectively using analytics because they overemphasize these immediate goals – the 'destination' – rather than appreciating the foundational journey necessary for impactful analytics. This journey involves more than just technology; it requires a shift in mindset and approach.

Data-driven culture represents an organizational approach where data is the cornerstone of decision-making processes. In such a culture, decisions are primarily informed by data analysis, rather than relying exclusively on intuition or past experiences. This approach involves strategically employing data at every level of the organization. It fosters an environment where data is not just an asset but the main driver of strategy, innovation, and operational choices. By harnessing the power and opportunities offered by data, a data-driven culture ensures that decisions across the organization are grounded in solid evidence and analytical insight, enhancing the overall decision-making quality and efficacy.

Empowered Decision Making: Decisions are based on data analysis, leading to objective and impactful outcomes.

Accessibility of Data: Data is accessible across the organization, breaking down silos and empowering all employees.

Investment in Technology: Adequate tools and technologies are provided for effective data collection and analysis.

Data Literacy: Continuous training is provided to enhance the workforce's understanding and use of data.

Quality and Governance: High standards of data accuracy and security are maintained.

Agility: The organization adapts quickly to insights derived from data.

Collaborative Integration: Data insights are shared and integrated across various functions.

Outcome-Focused: Emphasis on measurable results driven by data insights.

All of that sounds great, but how do we achieve a data-driven culture?

As mentioned earlier in the article, true success in analytics comes not from merely chasing new tools or methodologies but from establishing three core pillars as part of a Data-Driven Culture: fundamental alignment, user-focused solutions, and efficient data management.

By refocusing on these foundational elements, businesses can drive more meaningful and sustainable results from their analytic endeavors, leading to overall project success and satisfaction.

Let's dive deeper into the core pillars and examine the common pitfalls within each pillar that I have observed lead to challenges.

Fundamental alignment is about synchronizing analytics strategies with the organization's core business objectives. This ensures that everyone involved, from executives to frontline employees, shares a common vision and understanding of what analytics aims to achieve. This alignment is crucial for creating a unified direction in data-driven initiatives and ensuring that every analytics effort contributes meaningfully to the overall business strategy.

This sounds great, right? So much so that every project I've participated in began with high hopes and enthusiasm. Initially, there was a sense of unity: funding secured, partnerships with vendors established, and the latest technology acquired. This honeymoon phase of the data-driven transformation, filled with optimism, had everyone working diligently, with management receiving regular updates and a general belief that we were on the right track.

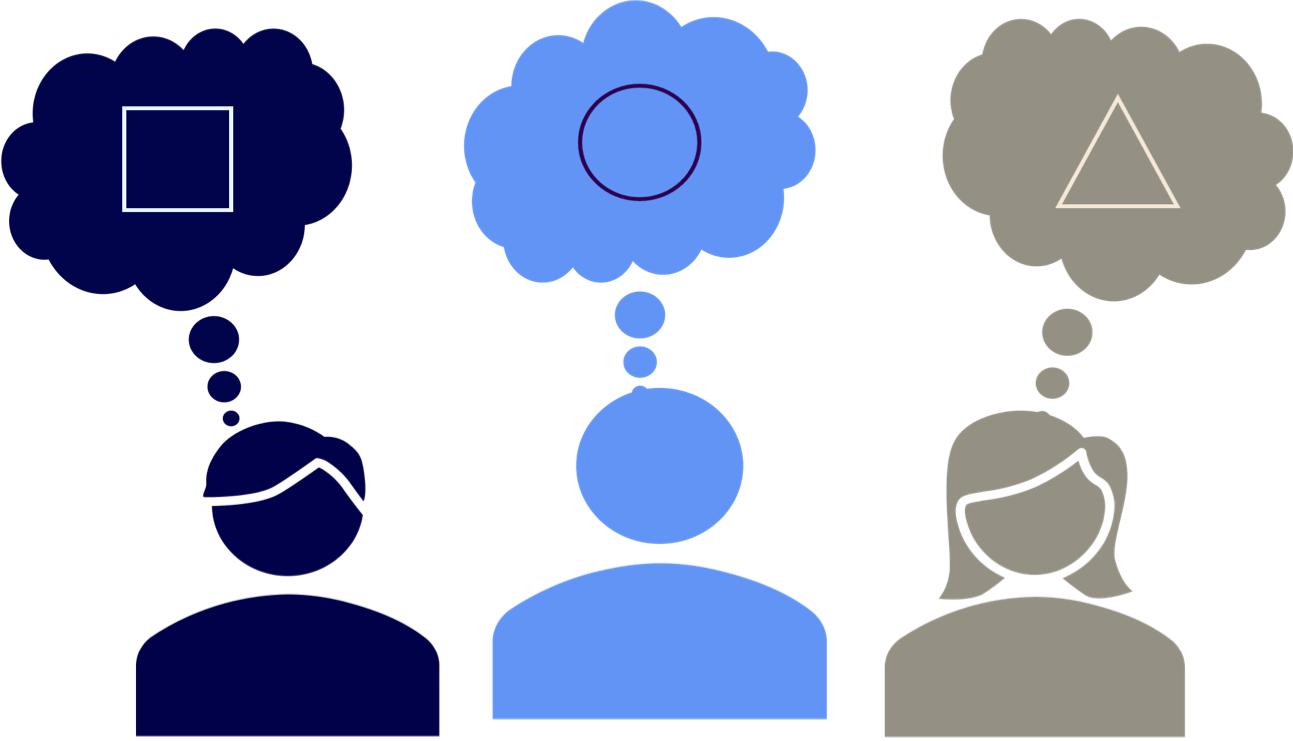

The real test emerged when critical decisions were required. This was the point where the honeymoon phase often faded, revealing a lack of true alignment. Meetings became prolonged discussions where the team struggled to reach consensus. This challenge stemmed from either not spending enough time initially to ensure everyone was on the same page or not conducting a discovery phase at the start of the project.

Although we agreed on high-level objectives like digital transformation and self-service analytics, there was a misalignment in our deeper understanding and perspectives. We were each influenced by our varied backgrounds and expertise in different aspects of the project.

This led me to a crucial fact: the importance of alignment before action. In projects where we dedicated time upfront for structured alignment and thorough product discovery, we not only achieved better estimations but also greater overall satisfaction. It became evident that successful analytics projects require a deep understanding of business objectives, the current state, potential risks, and a clear prioritization of features. This was because we developed a clear understanding and set up expectations that people could rely on throughout the course of implementation.

Crucially, alignment also involves clarity on what the project will not address, alongside the criteria for prioritization such as quality, completeness of features, and usability. Embracing agility does not mean forgoing thorough planning.

Ultimately, building trust in any project begins with listening, creating a shared vision, and setting the right expectations from the start. A well-defined and achievable plan, understood and agreed upon by all, is the foundation of success.

The end goal of analytics should be to serve the user's needs and involve designing practical solutions that add real value and enhance decision-making processes. This means creating analytics tools and processes that are intuitively aligned with how users work and make decisions, ensuring that these tools are not just technically proficient but also practically useful.

There are two pitfalls to avoid.

1. Trying to please everyone often leads to pleasing no one.

This is a common scenario in many companies where technical teams strive to meet all demands. Despite their efforts to deliver on projects, dissatisfaction with the end results is frequent.

2. Not addressing the actual user pain points.

This happens when the user does not actually get a good working solution out of the process.

During discovery, it is important to discuss what is in scope, out of scope, essential, and nice to have. By categorizing requirements this way, you can better understand the needs of the group and use that understanding to guide the process. With this done, you can move forward with confidence that you are addressing the most important pain points.

Now that we have defined the pain points, the next step is to fully understand them. The key is to understand not only users' needs but also the reasons behind them. What are the goals they're trying to achieve? What are the shortcomings of their current methods? Is the new process or tool genuinely an improvement over what they currently have? For example, if users need to navigate a tool quickly, finding ways to reduce unnecessary clicks and simplifying access becomes important. Sometimes, it's more practical to keep an existing process unchanged until other parts are enhanced. By bringing the most critical information to the forefront, the solution becomes more user-centric.

It is important to have these needs in mind at the beginning of the project and to strive to truly understand them. If not, you risk investing time, money, and resources in a tool that users don't need, and this can have a detrimental effect on the overall culture.

More importantly, this approach allows you to justify your decisions and explain why certain aspects are prioritized over others. When users see that their needs and challenges are understood and addressed, they are more likely to trust and accept the solutions provided. This trust is built through consistently demonstrating that their best interests are at heart.

Efficient data management involves implementing robust processes to ensure data accuracy, accessibility, and understandability. This pillar is key to informed decision-making as it underpins the reliability of data-driven insights. Effective data management includes organizing, storing, and safeguarding data to make it readily available and useful for users across the organization.

Let's face it, your data processes get no love. This is usually because they are "too technical." Users often do not concern themselves with databases, schemas, tables, or columns, let alone the process that turns raw facts into business-ready insights. It is easy for management to get excited about a fancy dashboard and the potential of Machine Learning and Gen AI, but when it comes to the actual data, interest tends to wane.

It makes sense; most people don't understand how the power grid works. We take it for granted that we flip a switch and expect the lights to turn on. We move on without a second thought. No one really cares about electricity until something goes wrong. Similarly, in many organizations, data issues often go unnoticed until a failure occurs. Sometimes these issues are immediately apparent, but other times they are silent. When a failure does happen, there is a scramble to fix it. Meetings are held, issues are identified, and patches are implemented to "prevent" future failures. However, the best time to think about potential problems isn't after they happen, but before — building systems that anticipate and are designed for resilience.

The real issue is that the process from raw data to insights isn't often viewed as a single system. It is all interconnected and should be treated as such. In the world of analytics, it sometimes feels like companies are trying to build a mansion on a foundation of quicksand. Initially, everything seems fine, and everyone is busy with their tasks, but when the foundation starts to give way, the focus shifts to propping up the weak points. You can't effectively build on quicksand; you need solid, repeatable processes from the start.

The focus should be on building systems that anticipate challenges and are designed for resilience. This involves integrating data management practices into the company's culture from the start, ensuring users trust the data and the processes that generate insights. If you want effective collaboration and impact analysis, these are difficult to retrofit later — they need to be part of the initial plan. Documented analytics isn't a magical solution; it needs to be ingrained in the culture and process from the beginning. The good news is that there are many examples and best practices from those who have navigated these challenges successfully.

For users to truly trust in analytics, they need to have faith in the data and the processes that generate it. They need to see and believe in a robust system built on a solid foundation.

To achieve a data-driven culture, companies must refocus on three core pillars (fundamental alignment, user-focused solutions, and efficient data management) and avoid the common mistakes in each of these areas. Success in analytics isn't about chasing new tools or methodologies but about building a robust system from the ground up, aligning everyone's vision, and creating practical, value-added solutions. Prioritizing foundational elements over immediate shiny objects will lead to more meaningful, sustainable results and will build trust in the analytics process.

As the world of data management continues to grow, terms and new concepts are constantly popping up. It's important for data professionals to stay up to date with terms such as Data Mesh and data observability. For those coming into the field from other areas, it’s also good to understand terminology to communicate more effectively with others.

In this blog post, we've put together an extensive table that breaks down and explains the essential terms in modern data engineering, analytics, and architecture. This resource is designed to help both experienced data professionals and newcomers alike to navigate and understand the ever-evolving language of data.

We've covered basic concepts like data warehouses and ETL pipelines and advanced ideas like Data Mesh. Each of these terms is crucial in shaping today's data ecosystems. Think about how these terms apply to your business and can enhance your understanding. Have we missed any terms that you were hoping to see defined, or do you think we could improve the definitions of some of the terms already defined? Please share your thoughts with us by providing feedback through our contact page.

Interested in modern data solutions? Accelerate your journey to a modern data stack with Datacoves' managed solution, designed to streamline your data processes and implement best practices efficiently. Discover how Datacoves can help you quickly add value and transform your data strategy, ensuring you make the most informed decisions for your specific needs, by scheduling a demo.

Data transformation tools turn raw data into reliable, analytics-ready datasets, but choosing the right one requires understanding how transformation fits into your full data pipeline. Modern teams do not just transform data. They also orchestrate jobs, enforce quality, manage deployments, and ensure reliability at scale.

This guide explains what data transformation tools actually do, how popular options compare, and how to evaluate them as part of an end-to-end data stack rather than in isolation.

Evaluating data transformation tools requires looking beyond features and understanding how each tool fits into a production data pipeline.

Data transformation is the process of converting data from one format or structure to another. Done well, it can improve the performance of data processing systems and support compliance with data governance regulations.

Data transformation is just one of the steps on the road to deriving value from data.

The end-to-end process includes the following steps:

It’s worth taking each of these steps into consideration when determining the best data transformation tool for your organization.

There is a common misconception that the tool alone will solve all the problems.

However, using the right tools without addressing the underlying processes can lead to a data mess that exacerbates the underlying issue, costing more time and money. This data mess can easily be avoided in the first place, not just by having the right tools but also by putting modern best practices in place.

ETL (extract, transform, load) and ELT (extract, load, transform) both help businesses move and prepare data, but they sequence the steps differently, and each approach has its own pros and cons.

ELT is generally more effective than ETL because raw data is loaded before it is transformed, which removes the uncertainty of not having the necessary data for future use cases and offers more flexibility in the long term. Since storage is typically affordable, it makes more sense to simplify the ingestion process.
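To make the ELT sequence concrete, here is a minimal sketch that uses Python's built-in sqlite3 module as a stand-in for a cloud data warehouse. The table names, columns, and sample rows are hypothetical and chosen only for illustration. The point it tries to show is that the raw data is loaded in full first, the transformation runs as SQL inside the database, and a later question can still be answered from rows the first transformation ignored.

```python
import sqlite3

# Hypothetical raw records, as they might arrive from a source system.
RAW_ORDERS = [
    (1, "2024-01-05", "shipped", 120.0),
    (2, "2024-01-05", "cancelled", 80.0),
    (3, "2024-01-06", "shipped", 50.0),
]


def load_raw(conn: sqlite3.Connection) -> None:
    """Load step: store every source row untouched in a raw table."""
    conn.execute(
        "CREATE TABLE raw_orders "
        "(id INTEGER, order_date TEXT, status TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO raw_orders VALUES (?, ?, ?, ?)", RAW_ORDERS)


def transform(conn: sqlite3.Connection) -> None:
    """Transform step: runs as SQL inside the database, after loading."""
    conn.execute(
        """
        CREATE TABLE daily_revenue AS
        SELECT order_date, SUM(amount) AS revenue
        FROM raw_orders
        WHERE status = 'shipped'
        GROUP BY order_date
        """
    )


conn = sqlite3.connect(":memory:")
load_raw(conn)
transform(conn)

daily_revenue = conn.execute(
    "SELECT order_date, revenue FROM daily_revenue ORDER BY order_date"
).fetchall()

# A later use case can still reach rows the first transformation filtered
# out, because the raw table was loaded in full.
cancelled_count = conn.execute(
    "SELECT COUNT(*) FROM raw_orders WHERE status = 'cancelled'"
).fetchone()[0]

print(daily_revenue)
print(cancelled_count)
```

In an ETL flow, the cancelled order would typically have been filtered out before loading, so answering the second question would require going back to the source system.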

Here’s a list of the top data transformation tools to manage the ETL process:

Each of these tools falls into one of two categories: code-based or visual/drag-and-drop interface. Both have their own set of pros and cons, which we’ll go through below.

Code-based tools allow you to transform data by using SQL or Python to explicitly define the transformation steps. This approach requires knowledge and experience, but visual tools don’t remove the need to know SQL either. It gives users a high degree of flexibility and control, and makes it easier to maintain and validate work before releasing it to production.

Moreover, it is simpler to trace each data transformation step without having a disconnected document explaining what the transformation “should” do.
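As a rough illustration of why explicit code is easier to trace and validate, the sketch below defines one transformation as a plain Python function together with a check that could run before release. The `Customer` fields, the `clean_customers` function, and the sample rows are all hypothetical; a real project would more likely express this in SQL with a testing framework.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Customer:
    customer_id: int
    email: str
    country: str


def clean_customers(rows: list[dict]) -> list[Customer]:
    """Normalize emails and country codes and drop rows without an id."""
    cleaned = []
    for row in rows:
        if row.get("customer_id") is None:
            continue  # rows without a key cannot be joined downstream
        cleaned.append(
            Customer(
                customer_id=int(row["customer_id"]),
                email=row["email"].strip().lower(),
                country=row["country"].strip().upper(),
            )
        )
    return cleaned


def check_unique_ids(customers: list[Customer]) -> bool:
    """Validation that can run automatically before a change is released."""
    ids = [c.customer_id for c in customers]
    return len(ids) == len(set(ids))


sample = [
    {"customer_id": "1", "email": " Ana@Example.com ", "country": "us"},
    {"customer_id": None, "email": "x@example.com", "country": "de"},
    {"customer_id": "2", "email": "bo@example.com", "country": " fr"},
]
result = clean_customers(sample)
print(result)
print(check_unique_ids(result))
```

Every rule is visible in the function body and lives in version control, so the code itself documents what the transformation does rather than what it should do.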

In multiple conversations with data teams at enterprise companies, the challenge of developing and orchestrating dbt pipelines has come up repeatedly.

There are a lot of tools to figure out when it comes to implementing the best practices for digital transformations and custom applications. It’s not uncommon for companies to end up with more SaaS platforms and tools than they had initially planned. We built Datacoves to eliminate this need by providing the following:

Datacoves focuses on helping companies accelerate growth by providing a complete ELT solution, including orchestration and visualization. Therefore, the learning curve for data transformation is minimized because of our best-practice accelerators and the available tool integrations to form an end-to-end platform.

Here is the extended version of the ELT process with Datacoves:

Develop modular code and track version changes that you and your team can view. You’re also able to validate the quality of data transformations with our built-in testing frameworks and generate documents to leave a record of how you’re transforming data.

You develop in a VS Code environment that can be configured with a vast array of VS Code extensions and Python libraries. All the modern data tools you need are provided in a structured workspace:

Datacoves is suitable for medium and large companies that lack the expertise to create and manage complex data processes, or would rather not do so, but still need the flexibility that complex enterprise processes require.

Data teams can use all the components provided within the dbt ecosystem in a structured, methodical way with Datacoves. This means you’ll have a simplified dbt experience, yet you’ll still see the same results of dbt when used to its full potential.

Smaller companies also gain competitive advantages with Datacoves because they’ll be able to implement DataOps, follow best practices, and get a fully managed VS Code environment accelerating time to value.

If you would like to know more about how Datacoves can help, you can schedule a demo here.

dbt Cloud allows businesses to build and maintain data pipelines. It’s a cloud-based platform with a web-based IDE that allows you to transform data within a cloud data warehouse. It can help you reduce the time spent setting up an end-to-end solution.

dbt Cloud works well for organizations looking to reduce the time and effort required to transform data pipelines.

Since dbt Cloud provides a web-based IDE, it may feel limited for data teams that would rather use a VS Code environment. Moreover, dbt Cloud is not deployable in a company’s private cloud. It also typically requires other SaaS tools for complicated data pipelines, making it more difficult to manage unless you have the necessary integration experience with each of those SaaS tools.

Most importantly, dbt Cloud is focused solely on the data transformation step of the ELT process, and you are unable to load VS Code extensions or additional Python libraries. An enterprise with any level of complexity will also need a full-featured orchestrator.

Apache Airflow is an open-source platform for workflow management. You can orchestrate and schedule data pipelines. It’s a scalable and flexible platform that’s based on Python. You can also define your own operators with Airflow.

Apache Airflow works well for those needing a scalable data transformation tool with an open-source platform. It’s particularly a good choice for businesses mainly using Python to manage their data.

However, Airflow is primarily an orchestrator. That means you may end up building complex code in your data pipelines. Therefore, developing and maintaining this complexity requires experience and technical expertise. Managing the infrastructure for Airflow is not trivial and also requires an understanding of tools like Docker and Kubernetes.

SAS is a solution that allows you to transform and prepare data for analysis. It offers a wide range of features for data transformation, including data cleaning, data integration, and data mining.

SAS is ideal for companies with complex datasets, such as those in financial services, healthcare, and retail industries. Additionally, it’s ideal for professionals with advanced skills and knowledge in data transformation.

With that in mind, there are better solutions than SAS for those less experienced in programming and data management. SAS licensing can also be quite expensive.

SQLMesh is a complete DataOps solution for data testing and transformation. Teams can use SQLMesh to collaborate on data pipelines when transforming data.

SQLMesh is well-suited for businesses with SQL and Python expertise that need to collaborate on complex data transformations and pipelines. Although other open-source tools are available, teams can use SQLMesh to maintain data quality and perform unit testing of their transformations.

SQLMesh may not be ideal when you only need to perform simple data transformations. In this case, there are other more straightforward tools available. Moreover, SQLMesh may not be for you when your primary focus is on real-time data processing.

Visual tools make the ELT process more straightforward by removing the need to manually write code. They work by letting you drag and drop pre-built components onto a canvas. This makes them ideal for data teams who aren’t as experienced in programming.

The biggest advantage of graphical tools for ETL is that people who are less comfortable with code can use them. Conversely, drag-and-drop tools typically don’t offer the same level of flexibility and control as code-based tools, which can complicate the process of debugging data pipelines and long-term maintenance.

Informatica helps you turn your data into an asset. It’s a cloud-based or on-prem solution for data management with numerous data transformation libraries and APIs available.

Informatica can be a good choice for large enterprises and data professionals looking to quickly transform large volumes of complex data using an on-premise solution. It can also be a good choice for companies that need to comply with industry-specific data standards.

However, it may be too complicated to use for some organizations. Informatica requires a team of experienced data engineers with the necessary skills and experience. DataOps can also be a challenge. Since you’ll be dealing with multiple things simultaneously, it’s easy to get lost in the process when you don’t have the full technical expertise.

Moreover, it’s an expensive solution. There are other more affordable alternatives.

Talend is a cloud-native platform deployable on public cloud solutions such as AWS, Azure, and GCP. It also offers an on-prem solution and provides a variety of components and custom connectors for data transformation.

Talend works for most businesses and data professionals. It’s particularly well-suited for those who need to:

Still, you may want to consider other options when prioritizing DataOps and performing highly specialized data transformations such as machine learning or NLP. Talend enterprise licenses may also be costly.

Azure Data Factory helps you simplify the data transformation process at scale. You’re provided with a code-free and code-centric experience for orchestrating data transformation pipelines.

Azure Data Factory could be the right option for data professionals working within the Azure ecosystem. Azure may be worth considering when you’re looking into data warehousing using Azure Synapse and Azure DataOps and not just ELT.

However, Azure Data Factory might not be the best option when you’re on a budget. As with any visual ELT tooling, DataOps and pipeline maintainability may be more complex, leading to an increased total cost of ownership.

Matillion is a cloud-based data transformation tool that provides integrations with on-premises databases, cloud applications, and SaaS platforms.

Matillion’s pre-built connectors and visual interface make it an ideal solution for less experienced data professionals. The disadvantage is that it can be costly for businesses on a budget. Moreover, you must ensure that Matillion supports your specific requirements and how you intend to perform the data transformations. Care must be given to the long-term maintainability of pipelines that are both visual and code-based.

Getting started with Matillion is simple because it uses a drag-and-drop interface for building data pipelines. But as with any other visual tool, there is still a learning curve, and it’s typical to have a mix of code and visual components in a production data pipeline.

Alteryx simplifies the data transformation process. You can automate advanced analytics and prepare data through self-service. It’s an effective solution that makes it easier for teams to collaborate. Unlike the other visual tools above which are typically used by Data Engineers in IT, Alteryx is more widely adopted in less technical departments of an organization. It’s also typically paired with visualization tools like Tableau.

Alteryx is a good option to help ensure teams are on the same page throughout the data workflow. Data transformation projects can be shared and feedback provided seamlessly, making collaboration easier.

The downside is that Alteryx is costly compared to the other tools on this list. Moreover, there is still a bit of a learning curve, even if you’re experienced in data analytics. You should also check that Alteryx fits the way your teams collaborate.

Data transformation is a process that’s prone to multiple errors along the way. While many tools listed can help you reduce friction, they must be carefully evaluated. With Datacoves, you’ll be able to implement best data practices and DataOps so that you have a smooth process with a minimized learning curve.

If you’d like to learn more about how Datacoves helps you accelerate time to value, you can schedule a free demo here.

dbt and Airflow are not competing tools. They solve different problems in a modern data stack.

dbt focuses on transforming data inside the warehouse using analytics engineering best practices. Airflow focuses on orchestrating workflows across systems, schedules, and dependencies. Most mature data teams use both.

The real challenge is understanding where each tool fits, where responsibilities overlap, and how poor orchestration decisions lead to fragile pipelines and constant firefighting.

Airflow is a workflow scheduler, but it is only one part of a broader data orchestration problem that includes dependencies, retries, visibility, and ownership across the entire data lifecycle.

Imagine a scenario where you have a series of tasks: Task A, Task B, and Task C. These tasks need to be executed in sequence every day at a specific time. Airflow enables you to programmatically define the sequence of steps as well as what each step does. With Airflow you can also monitor the execution of each step and get alerts when something fails.
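The scenario above can be sketched in plain Python. This is not Airflow's API, only an illustration of the idea under simplifying assumptions: the tasks, the `run_in_sequence` helper, and the alert callback are hypothetical names, and the daily scheduling that Airflow would handle is left out.

```python
from typing import Callable


def run_in_sequence(
    tasks: list[tuple[str, Callable[[], None]]],
    alert: Callable[[str], None],
) -> list[str]:
    """Run tasks in order, stop at the first failure, and send an alert.

    Returns the names of the tasks that completed successfully.
    """
    completed = []
    for name, task in tasks:
        try:
            task()
        except Exception as exc:
            # Downstream tasks are skipped because they depend on this one.
            alert(f"{name} failed: {exc}")
            break
        completed.append(name)
    return completed


# Hypothetical tasks standing in for Task A, Task B, and Task C.
def task_a() -> None:
    pass  # e.g. extract data from a source


def task_b() -> None:
    raise RuntimeError("source unavailable")  # simulated failure


def task_c() -> None:
    pass  # e.g. refresh a report


alerts: list[str] = []
completed = run_in_sequence(
    [("task_a", task_a), ("task_b", task_b), ("task_c", task_c)],
    alert=alerts.append,
)
print(completed)
print(alerts)
```

An orchestrator such as Airflow adds what this sketch omits, including scheduling, retries, and a view of run history, so teams do not have to build and maintain that logic themselves.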

Airflow provides flexibility, which means you can script the logic of each task directly within the tool. However, this flexibility might be both a blessing and a curse. Just because you can code everything within Airflow, it doesn't mean that you should. Overly complicated workflows and incorporating too much logic within Airflow can make it difficult to manage and debug. Ensure that when you're using Airflow, it's the right tool for the specific task you're tackling. For example, it is far more efficient to transform data within a data warehouse than to move data to the Airflow server, perform the transformation, and write the data back to the warehouse.

At the heart of Apache Airflow's appeal is its flexibility when it comes to customizing each step in a workflow. Unlike other tools that may only let you schedule and order tasks, Airflow offers users the ability to define the code behind each task. This means you aren't just deciding the "what" and the "when" of your tasks, but also the "how". Whether it's extracting and loading data from sources, defining transformations, or integrating with other platforms, Airflow lets you tailor each step to your exact requirements. This makes it a powerful ally for those who want granular control over their data workflows, ensuring that each step is executed precisely as intended.

While Airflow is powerful, it's important to strike a balance. You should use Airflow primarily as an orchestrator. If mature tools exist for specific tasks, consider integrating them into your workflow and allow Airflow to handle scheduling and coordination. Let specialized tools abstract away complexity. One example is leveraging a tool like Fivetran or Airbyte to perform data extraction from SaaS applications rather than building all the logic within Airflow.

As stated above, Airflow can be used for many things, but we suggest these use cases.

dbt Core is an open-source framework that leverages templated SQL to perform data transformations. Developed by dbt Labs, it specializes in transforming, testing, and documenting data. While it's firmly grounded in SQL, it infuses software engineering principles into the realm of analytics, promoting best practices like version control and DataOps.

Imagine you have a raw data set and you need to transform it for analytical purposes. dbt allows you to create transformation scripts using SQL which is enhanced with Jinja templating for dynamic execution. Once created, these scripts, called "models" in dbt, can be run to create or replace tables and views in your data warehouse. Each transformation can be executed sequentially and when possible, in parallel, ensuring your data is processed properly.

Unlike some traditional ETL tools which might abstract SQL into drag-and-drop interfaces, dbt embraces SQL as the lingua franca of data transformation. This makes it exceptionally powerful for those well-acquainted with SQL. But dbt goes a step further: by infusing Jinja, it introduces dynamic scripting, conditional logic, and reusable macros. Moreover, dbt's commitment to idempotency ensures that your data transformations are consistent and repeatable, promoting reliability.

Lastly, dbt emphasizes the importance of testing and documentation for data transformations. dbt facilitates the capture of data descriptions, data lineage, data quality tests, and other metadata about the data, and it can generate a rich web-based documentation site. dbt's metadata can also be pushed to other tools such as a specialized data catalog or data observability tools. While dbt is a transformative tool, it's essential to understand its position in the data stack. It excels at the "T" in ELT (Extract, Load, Transform) but requires complementary tools for extraction and loading.

A common misunderstanding within the data community is that dbt = dbt Cloud. When people say dbt, they are referring to dbt Core. dbt Cloud is a commercial offering by dbt Labs, and it is built upon dbt Core. It provides additional functionality on top of the open source framework, including a scheduler for automating dbt runs, hosting, monitoring, and an integrated development environment (IDE). This means that you can use the open source dbt Core framework without paying for dbt Cloud; however, you will not get the added features dbt Cloud offers, such as the scheduler. If you are using dbt Core, you will eventually need an orchestrator such as Airflow to get the job done. For more information, check out our article where we cover the differences between dbt Cloud vs dbt Core.

As mentioned above, one of the key features of dbt Cloud is its scheduler which allows teams to automate their dbt runs at specified intervals. This functionality ensures that data transformations are executed regularly, maintaining the freshness and reliability of data models. However, it's important to note that dbt Cloud's scheduler only handles the scheduling of dbt jobs, i.e., your transformation jobs. You will still need an orchestrator to manage your Extract and Load (EL) processes and anything after Transform (T), such as visualization.

Managing the deployment and infrastructure of dbt Core and Airflow is a not-so-hidden cost of choosing open source. However, at Datacoves we solve the deployment and infrastructure problems for you so you can focus on data, not infrastructure. A managed Visual Studio Code editor gives developers the best dbt experience with bundled libraries and extensions that improve efficiency. Orchestration of the whole data pipeline is done with Datacoves’ managed Airflow, which also offers a simplified YAML-based Airflow job configuration to integrate Extract and Load with Transform. Datacoves has best practices and accelerators built in so companies can get a robust data platform up and running in minutes instead of months. To learn more, check out our product page.

When looking at the strengths of each tool, it’s clear that this isn’t an either-or decision; each has a place in your data platform. Airflow should be leveraged for the end-to-end orchestration of the data journey, and dbt should be focused on data transformation, documentation, and data quality. This holds true even if you are adopting dbt through dbt Cloud. dbt Core does not come with a scheduler, so you will eventually need an orchestrator such as Airflow to automate your transformations as well as other steps in your data pipeline. If you implement dbt with dbt Cloud, you will be able to schedule your transformations but will still need an orchestrator to handle the other steps in your pipeline. You can also check out other dbt alternatives.

The following table shows a high-level summary.

By now you can see that each tool has its place in an end-to-end data solution, but if you came to this article because you need to choose one to integrate, then here is the summary.

If you're orchestrating complex workflows, especially if they involve various tasks and processes, Apache Airflow should be your starting point as it gives you unparalleled flexibility and granular control over scheduling and monitoring.

An organization starting out with basic requirements may be fine starting with dbt Core, but when end-to-end orchestration is needed, Airflow will need to play a role.

If your primary focus is data transformation and you're looking to apply software development best practices to your analytics, dbt is the right answer. Here is the key takeaway: these tools are not rivals, but allies. While one might be the starting point based on immediate needs, having both in your arsenal unlocks the full potential of your data operations.

While Airflow and dbt are designed to assist data teams in deriving valuable insights, they each excel at unique stages of the workflow. For a holistic data pipeline approach, it's best to integrate both. Use tools such as Airbyte or Fivetran for data extraction and loading and trigger them through Airflow. Once your data is prepped, let Airflow guide dbt in its transformation and validation, readying it for downstream consumption. Post-transformation, Airflow can efficiently distribute data to a range of tools, executing tasks like data feeds to BI platforms, refreshing ML models, or initiating marketing automation processes.

However, a challenge arises when integrating dbt with Airflow: deploying and maintaining the combined infrastructure isn't trivial and can be resource-intensive if not approached correctly. But is there a way to harness the strengths of both Airflow and dbt without getting bogged down in the setup and ongoing maintenance? Yes!

Both Apache Airflow and dbt have firmly established themselves as indispensable tools in the data engineering landscape, each bringing their unique strengths and capabilities to the table. While Apache Airflow has emerged as the premier orchestrator, ensuring that tasks and workflows are scheduled and executed with precision, dbt stands out for its ability to streamline and enhance the data transformation process. The choice is not about picking one over the other, but about understanding how they can be integrated to provide a comprehensive solution.

It's vital to approach the integration and maintenance of these platforms pragmatically. Solutions like Datacoves offer a seamless experience, reducing the complexity of infrastructure management and allowing teams to focus on what truly matters: extracting value from their data. In the end, it's about harnessing the right tools, in the right way, to chart the path from raw data to actionable intelligence. See if Datacoves dbt pricing is right for your organization.

dbt, also known as data build tool, is a data transformation framework that leverages templated SQL to transform and test data. dbt is part of the modern data stack and helps practitioners apply software development best practices to data pipelines. Some of these best practices include code modularity, version control, and continuous testing via its built-in data quality framework. In this article we will focus on how data can be tested with dbt via built-in functionality and with additional dbt packages and libraries.

Adding tests to workflows does more than ensure code and data integrity; it facilitates a continuous dialogue with your data, enhancing understanding and responsiveness. By integrating testing into your regular workflows, you can:

By embedding testing into the development cycle and consuming the results diligently, teams not only safeguard the functionality of their data transformations but also enhance their overall data literacy and operational efficiency. This proactive approach to testing ensures that the insights derived from data are both accurate and actionable.

In dbt, there are two main categories of tests: data tests and unit tests.

Data tests are meant to be executed with every pipeline run to validate the integrity of the data and can be further divided into two types: Generic tests and Singular tests.

Regardless of the type of data test, the process is the same behind the scenes: dbt will compile the code to a SQL SELECT statement and execute it against your database. If any rows are returned by the query, this indicates a failure to dbt.
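
The "any returned row is a failure" rule can be illustrated with a small Python sketch that uses an in-memory SQLite database in place of a warehouse. This is not how dbt is implemented; the `orders` table, the `run_data_test` helper, and the query are assumptions made for the example. The query is written in the style of a singular test: it selects the rows that violate an expectation, here that no order has a negative amount.

```python
import sqlite3


def run_data_test(conn, select_sql):
    """Run a test query; any returned row counts as a failure."""
    failing_rows = conn.execute(select_sql).fetchall()
    status = "fail" if failing_rows else "pass"
    return status, failing_rows


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id INTEGER, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [(1, 25.0), (2, -5.0), (3, 40.0)],
)

# The test selects the "bad" rows, not the good ones.
negative_amounts_sql = "SELECT order_id, amount FROM orders WHERE amount < 0"

status, failing_rows = run_data_test(conn, negative_amounts_sql)
print(status, failing_rows)
```

Because the query returns the offending rows themselves, a failed test also tells you which records to investigate.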

Unit tests, on the other hand, are meant to validate your transformation logic. They rely on predefined data for comparison to ensure your logic is returning an expected result. Unlike data tests, which are meant to be run with every pipeline execution, unit tests are typically run during the CI (Continuous Integration) step when new code is introduced. Unit tests were incorporated in dbt Core as of version 1.8.
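
The idea behind a unit test can be sketched in Python with SQLite: feed a small, predefined input to the transformation logic and compare the result to the rows you expect. dbt's unit tests are defined in the project itself rather than in Python; `MODEL_SQL`, `run_unit_test`, and the sample rows below are hypothetical and only illustrate the principle.

```python
import sqlite3

# Hypothetical transformation logic under test:
# total spend per customer, counting completed orders only.
MODEL_SQL = """
    SELECT customer_id, SUM(amount) AS total_spent
    FROM orders
    WHERE status = 'completed'
    GROUP BY customer_id
    ORDER BY customer_id
"""


def run_unit_test(model_sql, input_rows, expected_rows):
    """Run the model against fixture rows and compare with the expectation."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE orders (customer_id INTEGER, amount INTEGER, status TEXT)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", input_rows)
    actual_rows = conn.execute(model_sql).fetchall()
    conn.close()
    return actual_rows == expected_rows, actual_rows


# Predefined input, including a cancelled order the logic must ignore.
input_rows = [
    (1, 10, "completed"),
    (1, 15, "completed"),
    (2, 7, "cancelled"),
    (2, 20, "completed"),
]
expected_rows = [(1, 25), (2, 20)]

passed, actual_rows = run_unit_test(MODEL_SQL, input_rows, expected_rows)
print(passed, actual_rows)
```

Note that the test never touches production data. It checks the logic, which is why it fits naturally in a CI step.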

These are foundational tests provided by dbt-core, focusing on basic schema validation and source freshness. These tests are ideal for ensuring that your data sources remain valid and up-to-date.

dbt-core provides four built-in generic tests that are essential for data modeling and ensuring data integrity:

unique: this test verifies that every value in a column (e.g. customer_id) is unique. This is useful for finding records that may inadvertently be duplicated in your data.

not_null: is a test to check that the values for a given column are always present. This can help you find cases where data in a column suddenly arrives without being populated.

accepted_values: this test validates that every value in a column belongs to a defined set of values. For example, a column called payment_status can contain values like pending, failed, accepted, rejected, etc. This test verifies that each row within the column contains one of these payment statuses and no other. This is useful for detecting changes in the data, such as accepted being replaced with approved.

relationships: these tests check referential integrity. This type of test is useful when you have related columns (e.g. the customer identifier) in two different tables. One table serves as the “parent” and the other is the “child” table. This is common when one table has a transaction and only lists a customer_id and the other table has the details for that customer. With this test we can verify that every row in the transaction table has a corresponding record in the dimension/details table. For example, if you have orders for customer_ids 1, 2, 3 we can validate that we have information about each of these customers in the customer details table.
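
To show what these four tests check, the sketch below expresses each one as a query that returns the offending rows, run against an in-memory SQLite database from Python. The queries approximate the logic of each test; they are not the exact SQL dbt generates, and the tables and sample rows are assumptions made for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (customer_id INTEGER);
    CREATE TABLE orders (
        order_id INTEGER, customer_id INTEGER, payment_status TEXT
    );
    INSERT INTO customers VALUES (1), (2);
    INSERT INTO orders VALUES
        (10, 1, 'accepted'),
        (11, 2, 'approved'),   -- status outside the accepted set
        (12, 1, 'pending'),
        (12, 2, 'failed'),     -- duplicate order_id
        (13, NULL, 'rejected'),-- missing customer_id
        (14, 3, 'accepted');   -- customer 3 has no customers record
""")

# Each query returns the rows that violate the expectation.
GENERIC_TESTS = {
    "unique(order_id)": """
        SELECT order_id FROM orders
        GROUP BY order_id HAVING COUNT(*) > 1
    """,
    "not_null(customer_id)": """
        SELECT order_id FROM orders WHERE customer_id IS NULL
    """,
    "accepted_values(payment_status)": """
        SELECT order_id FROM orders
        WHERE payment_status NOT IN
            ('pending', 'failed', 'accepted', 'rejected')
    """,
    "relationships(customer_id)": """
        SELECT o.order_id
        FROM orders AS o
        LEFT JOIN customers AS c ON o.customer_id = c.customer_id
        WHERE o.customer_id IS NOT NULL AND c.customer_id IS NULL
    """,
}

failures = {
    name: conn.execute(sql).fetchall() for name, sql in GENERIC_TESTS.items()
}
for name, rows in failures.items():
    print(name, "fail" if rows else "pass", rows)
```

With this sample data every test fails on a different order, which makes it easy to see which rule each row breaks.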

Using a generic test is done by adding it to the model's property (yml) file.

Generic tests can accept additional test configurations such as a where clause to apply the test on a subset of rows. This can be useful on large tables by limiting the test to recent data or excluding rows based on the value of another column. Since an error will stop a dbt build or dbt test of the project, it is also possible to assign a severity to a test and, optionally, a threshold below which failures will be treated as warnings instead of errors. Finally, since dbt will automatically generate a name for the test, it may be useful to override the auto-generated test name for simplicity. Here's the same property file from above with the additional configurations defined.