dbt (data build tool) is a SQL-based transformation framework that turns raw data into trusted, analytics-ready datasets directly inside your data warehouse. It brings software engineering discipline to analytics: version control, automated testing, CI/CD, and auto-generated documentation. dbt handles the "T" in ELT. It does not extract, load, or move data.

What dbt Does: The Transformation Layer in ELT

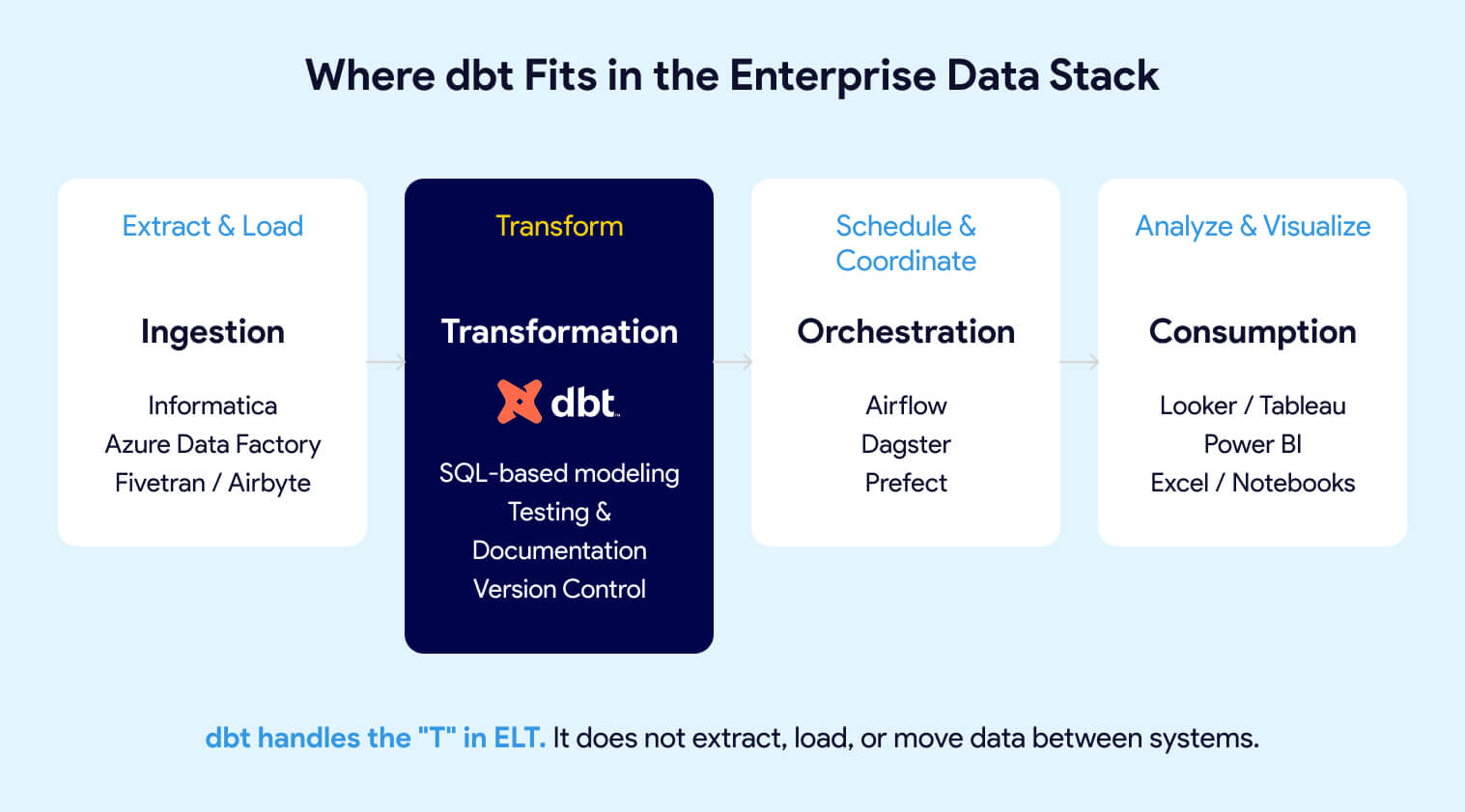

dbt focuses exclusively on the transformation layer of ELT (Extract, Load, Transform). Unlike traditional ETL tools that handle the entire pipeline, dbt assumes data already exists in your warehouse. Ingestion tools like Informatica, Azure Data Factory, or Fivetran load the raw data. dbt transforms it into trusted, analytics-ready datasets.

A dbt project consists of SQL files called models. Each model is a SELECT statement that defines a transformation. When you run dbt, it compiles these models, resolves dependencies, and executes the SQL directly in your warehouse. The results materialize as tables or views. Data never leaves your warehouse.
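To make the compile step concrete, the Python sketch below shows the core idea behind resolving `ref()`: each `{{ ref('...') }}` call in a model is swapped for the fully qualified name of the relation that model builds. It illustrates the idea and is not dbt's implementation. Real dbt renders full Jinja and derives relation names from your profile and model configs. The `RELATIONS` mapping, the `compile_model` function, and the `analytics` database name are all hypothetical.

```python
import re

# Hypothetical mapping from model name to the relation dbt would build it into.
# Real dbt derives these from the active target and each model's config.
RELATIONS = {
    "stg_orders": "analytics.staging.stg_orders",
    "orders_summary": "analytics.marts.orders_summary",
}

# Matches {{ ref('name') }} or {{ ref("name") }}, tolerating extra whitespace.
REF_PATTERN = re.compile(r"\{\{\s*ref\(\s*['\"](\w+)['\"]\s*\)\s*\}\}")


def compile_model(sql: str) -> str:
    """Return the model SQL with every ref() replaced by a relation name."""

    def resolve(match: re.Match) -> str:
        name = match.group(1)
        if name not in RELATIONS:
            # dbt likewise raises an error when a ref points to a missing model.
            raise KeyError(f"ref to unknown model: {name}")
        return RELATIONS[name]

    return REF_PATTERN.sub(resolve, sql)


if __name__ == "__main__":
    raw_sql = (
        "SELECT customer_id, COUNT(*) AS total_orders "
        "FROM {{ ref('stg_orders') }} GROUP BY customer_id"
    )
    print(compile_model(raw_sql))
```

The compiled string is plain SQL that the warehouse can execute, which is why the data itself never has to leave the warehouse.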

Example: A Simple dbt Model (models/marts/orders_summary.sql)

SELECT

customer_id,

COUNT(*) AS total_orders,

SUM(order_amount) AS lifetime_value,

MIN(order_date) AS first_order_date

FROM {{ ref('stg_orders') }}

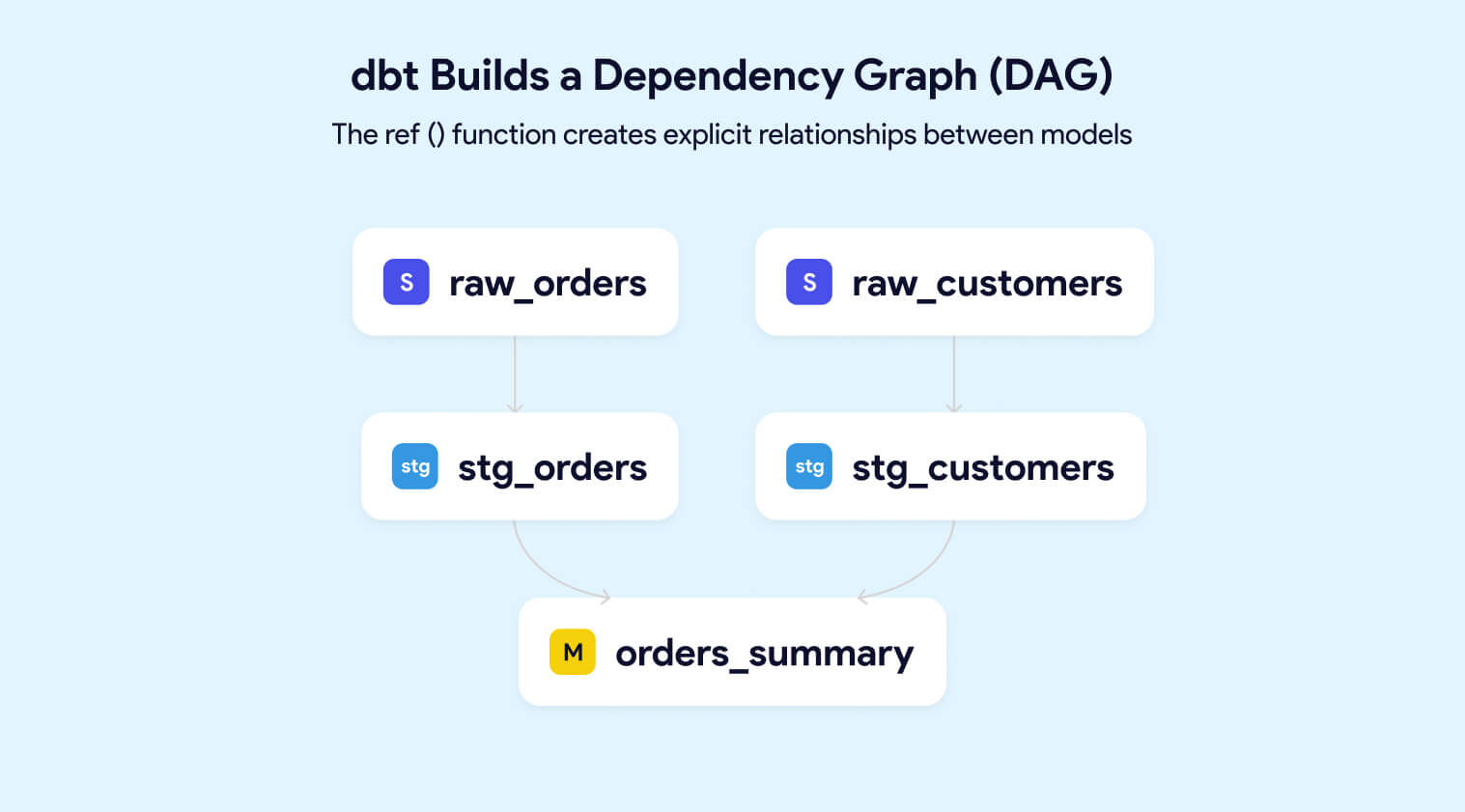

GROUP BY customer_idThe {{ref('stg_orders')}} syntax creates an explicit dependency. dbt uses these references to build a dependency graph (DAG) of your entire pipeline, ensuring models run in the correct order.
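A rough way to picture how references become a DAG: collect every `ref()` target per model, then sort topologically so each model runs after its parents. The Python sketch below does this with the standard library's `graphlib` (Python 3.9+). The four-model project, `build_dag`, and `run_order` are hypothetical, and dbt's real parser does considerably more (sources, tests, node selection), so treat this as a model of the idea rather than of dbt's internals.

```python
import re
from graphlib import TopologicalSorter

# Hypothetical project: model name -> raw SQL with Jinja not yet rendered.
MODELS = {
    "stg_orders": "SELECT * FROM raw.orders",
    "stg_customers": "SELECT * FROM raw.customers",
    "orders_summary": (
        "SELECT customer_id, COUNT(*) FROM {{ ref('stg_orders') }} GROUP BY customer_id"
    ),
    "customer_360": (
        "SELECT * FROM {{ ref('stg_customers') }} c "
        "JOIN {{ ref('orders_summary') }} o USING (customer_id)"
    ),
}

REF_PATTERN = re.compile(r"\{\{\s*ref\(\s*['\"](\w+)['\"]\s*\)\s*\}\}")


def build_dag(models: dict[str, str]) -> dict[str, set[str]]:
    """Map each model to the set of models it references via ref()."""
    return {name: set(REF_PATTERN.findall(sql)) for name, sql in models.items()}


def run_order(models: dict[str, str]) -> list[str]:
    """Return an order in which every model runs after all of its parents.

    TopologicalSorter raises graphlib.CycleError if the refs form a cycle.
    """
    return list(TopologicalSorter(build_dag(models)).static_order())


if __name__ == "__main__":
    print(run_order(MODELS))
```

Because `TopologicalSorter` raises `graphlib.CycleError` when references loop back on themselves, the sketch also shows why circular dependencies can be rejected before any SQL runs.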

For large datasets, dbt supports incremental models that process only new or changed data. This keeps pipelines fast and warehouse costs under control as data volumes grow.
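In dbt, an incremental model is typically configured with `materialized='incremental'` and a `unique_key`, and uses `is_incremental()` to filter source rows to those newer than what already exists in the target table (`{{ this }}`). The Python sketch below mimics that high-water-mark-plus-merge logic on in-memory rows so the behavior is easy to inspect. The `incremental_merge` function and the `order_id`/`updated_at` columns are hypothetical; in dbt the equivalent work runs as SQL inside your warehouse.

```python
from datetime import datetime


def incremental_merge(
    target: list[dict], source: list[dict], unique_key: str, updated_col: str
) -> list[dict]:
    """Upsert only source rows newer than the target's high-water mark.

    Roughly mirrors a common dbt incremental pattern: on incremental runs, keep
    source rows where updated_col > max(updated_col) in the existing table,
    then merge them into that table on unique_key.
    """
    if not target:
        # First run (or a full refresh): nothing exists yet, so take every row.
        new_rows = list(source)
    else:
        high_water_mark = max(row[updated_col] for row in target)
        new_rows = [row for row in source if row[updated_col] > high_water_mark]

    merged = {row[unique_key]: row for row in target}
    for row in new_rows:
        merged[row[unique_key]] = row  # inserts new keys, overwrites changed ones
    return list(merged.values())


if __name__ == "__main__":
    target = [
        {"order_id": 1, "order_amount": 50, "updated_at": datetime(2024, 1, 1)},
        {"order_id": 2, "order_amount": 75, "updated_at": datetime(2024, 1, 2)},
    ]
    source = [
        target[0],  # unchanged: filtered out by the high-water mark
        {"order_id": 2, "order_amount": 80, "updated_at": datetime(2024, 1, 3)},  # changed
        {"order_id": 3, "order_amount": 20, "updated_at": datetime(2024, 1, 3)},  # new
    ]
    for row in incremental_merge(target, source, "order_id", "updated_at"):
        print(row)
```

One design caveat the sketch makes visible: a strict high-water-mark filter skips late-arriving rows whose timestamp is older than the current maximum. Teams often handle this with a lookback window or a periodic `dbt run --full-refresh`.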

With dbt, teams can:

- Write transformations as version-controlled SQL

- Define explicit dependencies between models

- Enforce data quality with automated tests

- Generate documentation and lineage automatically

- Deploy changes safely using CI/CD workflows

- Trace issues back to specific commits

dbt handles the "T" in ELT. It does not extract, load, or move data between systems.

How dbt Fits the Enterprise Stack

What dbt Is Not

Misaligned expectations are a primary cause of failed dbt implementations. Knowing what dbt does not do matters as much as knowing what it does.

This separation of concerns is intentional. By focusing exclusively on transformation, dbt allows enterprises to evolve their ingestion, orchestration, and visualization layers independently. You can swap Informatica for Azure Data Factory or migrate from Redshift to Snowflake without rewriting your business logic; at most, warehouse-specific SQL needs adjusting.

A common mistake: treating dbt as a silver bullet.

dbt is a tool, not a strategy. Organizations with unclear data ownership, no governance framework, or misaligned incentives will not solve those problems by adopting dbt. They will simply have the same problems with versioned SQL.

For a deeper comparison, see dbt vs Airflow: Which data tool is best for your organization?

Why Enterprises Standardize on dbt

More than 30,000 companies use dbt weekly, including JetBlue, HubSpot, Roche, J&J, Block, and Nasdaq (dbt Labs, 2024 State of Analytics Engineering).

Enterprise adoption of dbt has accelerated because it solves problems that emerge specifically at scale. Small teams can manage transformation logic in spreadsheets and ad hoc scripts. At enterprise scale, that approach creates compounding risk.

Who Uses dbt in Production

dbt has moved well beyond startups into regulated, enterprise environments:

- Life Sciences: Roche, Johnson & Johnson (see how J&J modernized their data stack with dbt), and pharmaceutical companies with strict compliance requirements

- Financial Services: Block (formerly Square), Nasdaq, and major banks processing billions of transactions

- Technology: GitLab, HubSpot, and companies operating data platforms at massive scale

These are not proof-of-concept deployments. These are production systems powering executive dashboards, regulatory reporting, and customer-facing analytics.

The Problem: Scattered Business Logic

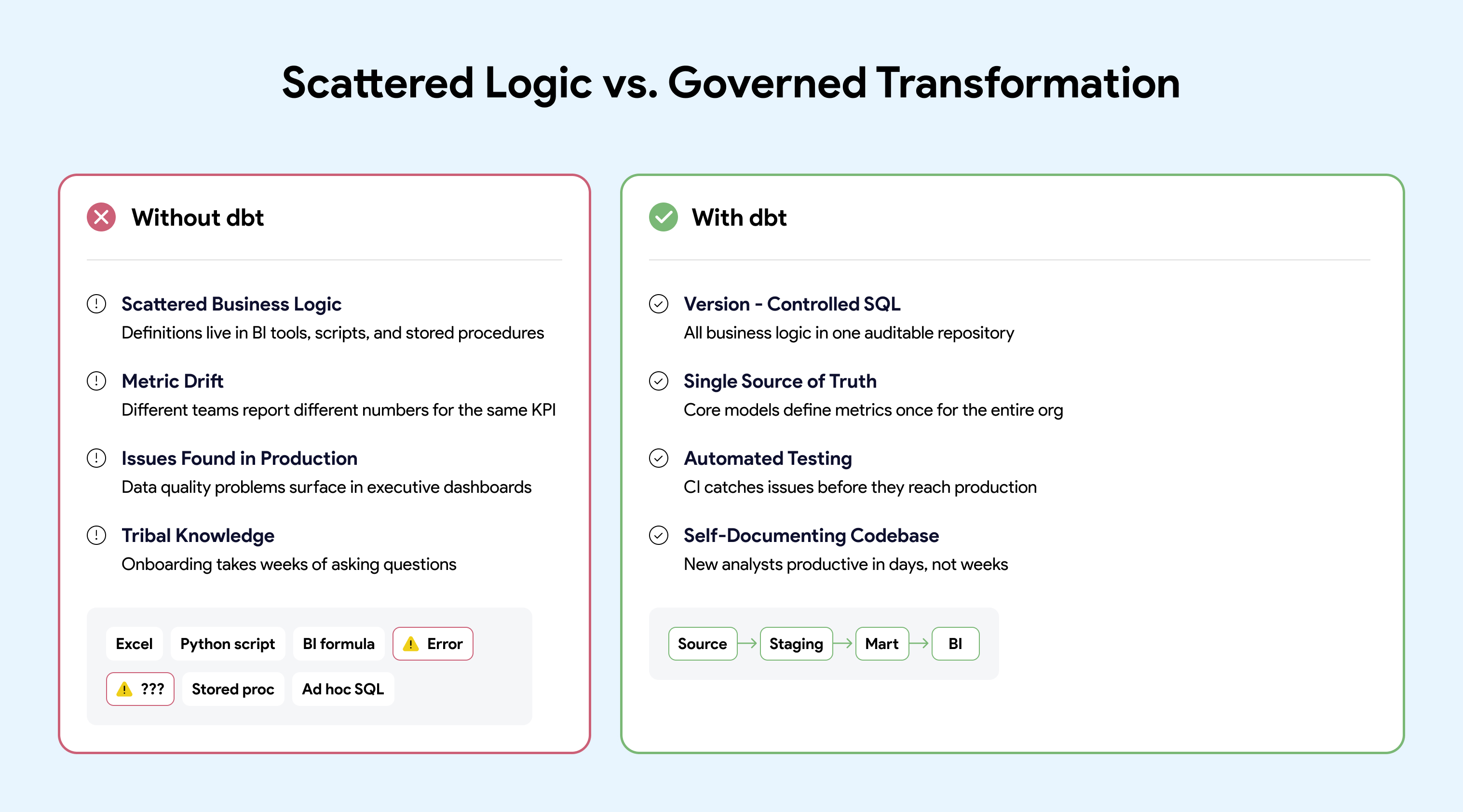

Without a standardized transformation layer, enterprise analytics fails in predictable ways:

- Business logic sprawls across BI tools, Python scripts, stored procedures, and ad hoc queries

- The same metric (revenue, active user, churn rate) gets defined differently by different teams

- Data quality issues surface in executive dashboards, not in development

- Changes to upstream data silently break downstream reports

- New analysts spend weeks understanding tribal knowledge before contributing

- Auditors cannot trace how reported numbers were calculated

Organizations report that 45% of analyst time is spent finding, understanding, and fixing data quality issues (Gartner Data Quality Market Survey, 2023).

The Solution: Transformation as Code

dbt addresses these problems by treating transformation logic as production code:

The dbt Ecosystem

One of the most underappreciated reasons enterprises adopt dbt is leverage. dbt is not just a transformation framework. It sits at the center of a broad ecosystem that reduces implementation risk and accelerates delivery.

dbt Packages

dbt packages are reusable projects available at hub.getdbt.com. They provide pre-built tests, macros, and modeling patterns that let teams leverage proven approaches instead of building from scratch.

Popular packages include:

- dbt-utils: Generic tests and utility macros used by most dbt projects

- dbt-expectations: Data quality testing inspired by Great Expectations

- dbt-audit-helper: Compare model results during refactoring

- Source-specific packages for HubSpot, Salesforce, Stripe, and dozens of other systems

Using packages signals operational maturity. It reflects a preference for shared, tested patterns over bespoke solutions that create a maintenance burden. Mature organizations also build internal packages so that teams across the company can reuse what others have already learned.

Integrations with Enterprise Tools

dbt integrates with the broader data stack through its rich metadata (lineage, tests, documentation):

- Data Catalogs: Atlan, Alation, DataHub ingest dbt metadata for discovery and governance

- Data Observability: Monte Carlo, Bigeye, and Elementary use dbt context for smarter alerting

- BI and Semantic Layer: Looker, Tableau, and semantic layers build on dbt models for consistent metrics

- Orchestration: Airflow, Dagster, and Prefect trigger and monitor dbt runs

- CI/CD: GitHub Actions, GitLab CI, Jenkins, Azure DevOps for automated testing and deployment

Because dbt produces machine-readable metadata, it acts as a foundation that other tools build on. This makes dbt a natural anchor point for enterprise data platforms.
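As an example of building on that metadata: dbt writes `target/manifest.json` when it parses, compiles, or runs a project, and recent manifest versions include `parent_map` and `child_map` keyed by node IDs such as `model.<project>.<name>`. The Python sketch below walks those maps to list everything upstream of a model (useful for root-cause analysis) or downstream of a source (useful for impact analysis). The trimmed `SAMPLE_MANIFEST`, the `shop` project name, and the helper functions are hypothetical; a real manifest also lists tests and many other fields, so check the manifest schema for your dbt version.

```python
import json
from collections import deque
from pathlib import Path


def load_manifest(path: str = "target/manifest.json") -> dict:
    """Read the manifest dbt writes to the target/ directory."""
    return json.loads(Path(path).read_text())


def traverse(edges: dict[str, list[str]], start: str) -> set[str]:
    """Breadth-first walk returning every node reachable from start."""
    seen: set[str] = set()
    queue = deque(edges.get(start, []))
    while queue:
        node = queue.popleft()
        if node not in seen:
            seen.add(node)
            queue.extend(edges.get(node, []))
    return seen


def upstream(manifest: dict, unique_id: str) -> set[str]:
    """All ancestors of a node: where to look when a number looks wrong."""
    return traverse(manifest["parent_map"], unique_id)


def downstream(manifest: dict, unique_id: str) -> set[str]:
    """All descendants of a node: what a change could break."""
    return traverse(manifest["child_map"], unique_id)


# Hypothetical, heavily trimmed manifest with only the two maps used here.
SAMPLE_MANIFEST = {
    "parent_map": {
        "source.shop.raw.orders": [],
        "model.shop.stg_orders": ["source.shop.raw.orders"],
        "model.shop.orders_summary": ["model.shop.stg_orders"],
    },
    "child_map": {
        "source.shop.raw.orders": ["model.shop.stg_orders"],
        "model.shop.stg_orders": ["model.shop.orders_summary"],
        "model.shop.orders_summary": [],
    },
}

if __name__ == "__main__":
    print(upstream(SAMPLE_MANIFEST, "model.shop.orders_summary"))
    print(downstream(SAMPLE_MANIFEST, "source.shop.raw.orders"))
```

Catalogs and observability tools consume this same artifact at much larger scale, which is what makes dbt a practical anchor for them.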

The dbt Community

The dbt Slack community has 100,000+ members sharing patterns, answering questions, and debugging issues (dbt Labs Community Stats, 2024).

For enterprises, community size matters because:

- New hires often already know dbt, reducing onboarding time and training costs

- Common problems have well-documented solutions and patterns

- Best practices are discovered and shared quickly across organizations

- Teams rely less on vendor documentation or expensive consultants

When you adopt dbt, you are not just adopting a tool. You are joining an ecosystem with momentum.

How dbt Works: The Development Workflow

A typical dbt workflow follows software engineering practices familiar to any developer:

- Write a model: Create a SQL file using SELECT statements and dbt's ref() function for dependencies.

- Test locally: Run `dbt run` to build models in a development schema, then `dbt test` to validate data quality.

- Document: Add descriptions to models and columns in YAML files. dbt generates a searchable documentation site automatically.

- Submit for review: Open a pull request. CI pipelines compile models, run tests, and check for standards compliance.

- Deploy to production: After approval, changes merge to main and deploy to production schemas via CD pipelines.

- Orchestrate: Airflow (or another orchestrator) schedules dbt runs, coordinates with upstream ingestion, and handles retries.
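What counts as "standards compliance" in CI varies by team. As a hypothetical example, the Python sketch below enforces two conventions a team might adopt: models under `models/staging/` and `models/intermediate/` must use `stg_` and `int_` prefixes, and no model may `ref()` a model from a later layer. The layer names, prefixes, input format, and `check_standards` function are assumptions for illustration; in practice teams often use existing tools such as SQLFluff or the dbt-project-evaluator package instead of hand-rolled scripts.

```python
from pathlib import PurePosixPath

# Hypothetical team conventions: layer order and required name prefixes.
LAYER_ORDER = {"staging": 0, "intermediate": 1, "marts": 2}
REQUIRED_PREFIX = {"staging": "stg_", "intermediate": "int_"}


def layer_of(path: str) -> str:
    """Infer the layer from a path such as models/marts/orders_summary.sql."""
    return PurePosixPath(path).parts[1]


def check_standards(models: dict[str, tuple[str, list[str]]]) -> list[str]:
    """Return a list of violations; an empty list means the check passes.

    `models` maps each model name to (file path, names of the models it refs).
    """
    violations = []
    for name, (path, refs) in models.items():
        layer = layer_of(path)
        prefix = REQUIRED_PREFIX.get(layer)
        if prefix and not name.startswith(prefix):
            violations.append(f"{name}: {layer} models must start with '{prefix}'")
        for parent in refs:
            parent_layer = layer_of(models[parent][0])
            # A model should only depend on its own layer or an earlier one.
            if LAYER_ORDER[parent_layer] > LAYER_ORDER[layer]:
                violations.append(
                    f"{name}: {layer} model refs {parent_layer} model {parent}"
                )
    return violations


if __name__ == "__main__":
    project = {
        "stg_orders": ("models/staging/stg_orders.sql", []),
        "orders_summary": ("models/marts/orders_summary.sql", ["stg_orders"]),
        "orders_cleaned": ("models/staging/orders_cleaned.sql", ["orders_summary"]),
    }
    for problem in check_standards(project):
        print(problem)
```

A CI job built on a check like this would fail the pull request whenever the returned list is non-empty.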

Example: Documentation and Tests for orders_summary

```yaml
models:
  - name: orders_summary
    description: "Customer-level order aggregations"
    columns:
      - name: customer_id
        description: "Primary key from source system"
        tests:
          - unique
          - not_null
      - name: lifetime_value
        description: "Sum of all order amounts in USD"
```

What dbt Delivers for Enterprise Leaders

For executives and data leaders, dbt is less about SQL syntax and more about risk reduction and operational efficiency.

Measurable Outcomes

Organizations implementing dbt with proper DataOps practices report:

- Dramatic productivity gains (Gartner predicts DataOps-guided teams will be 10x more productive by 2026)

- Faster incident resolution through lineage-based root cause analysis (from hours to minutes)

- Shorter onboarding with a self-documenting codebase (vs. 3+ months industry average)

- Elimination of metric drift where teams report different numbers for the same KPI

- Audit-ready transformation history with full traceability to code changes

Governance and Compliance

dbt supports enterprise governance requirements by making transformations explicit and auditable:

- Every transformation is version-controlled with full commit history

- Code review processes enforce the four-eyes principle on changes to data logic

- Lineage shows exactly how sensitive data flows through the pipeline

- Test results provide evidence of data quality controls for auditors

DIY vs. Managed: The Infrastructure Decision

The question for enterprise leaders is not "Should we use dbt?" The question is "How do we operate dbt as production infrastructure?"

dbt Core is open source, and many teams start by running it on a laptop. But open source looks free the way a free puppy looks free. The cost is not in the acquisition. The cost is in the care and feeding.

For a detailed comparison, see Build vs Buy Analytics Platform: Hosting Open-Source Tools.

The hard part is not installing dbt. The complexity comes from everything around it:

- Managing consistent environments across development, CI, and production

- Operating Airflow for orchestration and retry logic

- Handling secrets, credentials, and access controls

- Coordinating upgrades across dbt, Airflow, and dependencies

- Supporting dozens of developers working safely in parallel

Building your own dbt platform is like wiring your own home: possible, but very few teams should. Most enterprises find that building and maintaining this infrastructure becomes a distraction from their core mission of delivering data products.

dbt delivers value when supported by clear architecture, testing standards, CI/CD automation, and a platform that enables teams to work safely at scale.

Skip the Infrastructure. Start Delivering.

Datacoves provides managed dbt and Airflow deployed in your private cloud, with pre-built CI/CD, VS Code environments, and best-practice architecture out of the box. Your data never leaves your network. No VPC peering required.

Learn more about Managed dbt + Airflow

Decision Checklist for Leaders

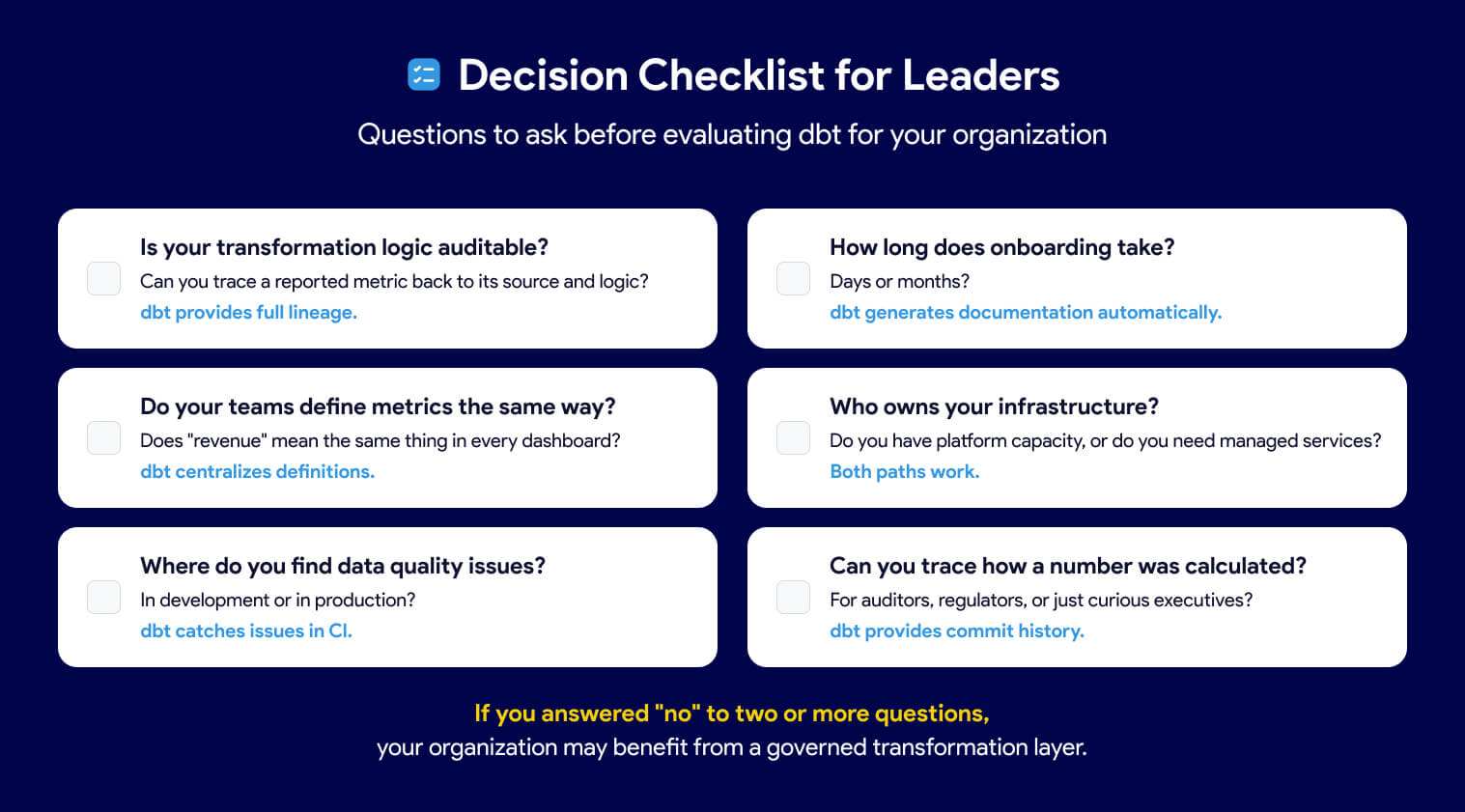

Before adopting or expanding dbt, leaders should ask:

Is your transformation logic auditable? If business rules live in dashboards, stored procedures, or tribal knowledge, the answer is no. dbt makes every transformation visible, version-controlled, and traceable.

Do your teams define metrics the same way? If "revenue" or "active user" means different things to different teams, you have metric drift. dbt centralizes definitions in code so everyone works from a single source of truth.

Where do you find data quality issues? If problems surface in executive dashboards instead of in daily data quality checks, you lack automated testing. dbt can run tests on every build, catching issues before they reach end users.

How long does onboarding take? If new analysts spend weeks decoding tribal knowledge, your codebase is not self-documenting. dbt generates documentation and lineage automatically from code.

Who owns your infrastructure? Decide whether your engineers should be building platforms or building models. Operating dbt at scale requires CI/CD, orchestration, environments, and security. That work must live somewhere.

Can you trace how a number was calculated? If auditors or regulators ask how a reported figure was derived, you need full lineage from source to dashboard. dbt provides that traceability by design.

The Bottom Line

dbt has become the standard for enterprise data transformation because it makes business logic visible, testable, and auditable. But the tool alone is not the strategy. Organizations that treat dbt as production infrastructure, with proper orchestration, CI/CD, and governance, unlock its full value. Those who skip the foundation often find themselves rebuilding later.

Ready to skip the infrastructure complexity? See how Datacoves helps enterprises operate dbt at scale

FAQ

Do you need to know Python to use dbt?

No. dbt is SQL-first. Any analyst who can write a SELECT statement can build dbt models. dbt also supports Python models for use cases that require it, but the vast majority of dbt work is SQL. This is one reason dbt has gained widespread adoption among analytics engineers who are not traditional software developers.

Does dbt replace Airflow?

No. dbt transforms data. Airflow orchestrates workflows. They solve different problems and work better together than apart. Airflow schedules dbt runs, handles retries, manages dependencies across ingestion and transformation jobs, and gives you a single control plane for your entire pipeline. Running dbt in production without an orchestrator is like flying a plane without an instrument panel. See dbt vs Airflow: Which Tool Is Best?

How does dbt handle data quality testing?

dbt has a built-in testing framework. You define tests in YAML files alongside your models. Out-of-the-box tests cover uniqueness, not-null, accepted values, and referential integrity. You can extend this with packages like dbt-expectations for more advanced coverage. Tests can run automatically each time you process new data and can help detect issues during your release cycle. For a full breakdown, see Testing Options for dbt.
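Under the hood, each generic test compiles to a query that selects failing rows, and the test passes when that query returns nothing. The Python sketch below reproduces the logic of the four built-in generic tests on in-memory rows so the semantics are easy to see, including how `unique`, `accepted_values`, and `relationships` ignore nulls (catching nulls is the job of `not_null`). It is an illustration, not dbt's code; the sample rows and the `status` column are hypothetical, and in dbt these checks run as SQL in the warehouse.

```python
from collections import Counter


def unique(rows, column):
    """Non-null values that appear more than once."""
    counts = Counter(row[column] for row in rows if row[column] is not None)
    return [value for value, n in counts.items() if n > 1]


def not_null(rows, column):
    """Rows where the column is null."""
    return [row for row in rows if row[column] is None]


def accepted_values(rows, column, values):
    """Non-null rows whose value falls outside the allowed set."""
    allowed = set(values)
    return [row for row in rows if row[column] is not None and row[column] not in allowed]


def relationships(rows, column, parent_rows, parent_column):
    """Non-null rows whose key has no match in the parent table."""
    parent_keys = {row[parent_column] for row in parent_rows}
    return [row for row in rows if row[column] is not None and row[column] not in parent_keys]


if __name__ == "__main__":
    customers = [{"id": 1}, {"id": 2}]
    orders_summary = [
        {"customer_id": 1, "status": "active"},
        {"customer_id": 1, "status": "active"},  # duplicate key
        {"customer_id": 3, "status": "paused"},  # unknown customer, unexpected status
    ]
    results = {
        "unique": unique(orders_summary, "customer_id"),
        "not_null": not_null(orders_summary, "customer_id"),
        "accepted_values": accepted_values(orders_summary, "status", ["active", "churned"]),
        "relationships": relationships(orders_summary, "customer_id", customers, "id"),
    }
    for name, failures in results.items():
        # Like a dbt test, a check passes only when it returns zero failing rows.
        print(f"{'PASS' if not failures else 'FAIL'} {name}: {len(failures)} failing")
```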

Is dbt a replacement for ETL tools like Informatica or Fivetran?

No. dbt handles only the transformation step. It does not extract or load data. Ingestion tools like Fivetran, Informatica, or Azure Data Factory bring raw data into your warehouse. dbt then transforms that data into trusted datasets. The two complement each other in an ELT architecture.

What data warehouses does dbt support?

dbt works with any warehouse that has a dbt adapter. This includes Snowflake, BigQuery, Redshift, Databricks, Microsoft Fabric, DuckDB, Postgres, and more. Your transformation logic stays largely portable. If you migrate warehouses, you switch adapters and adjust any warehouse-specific SQL rather than rewriting your models. For a list of supported adapters, see our dbt libraries list.

What does dbt actually do?

dbt takes raw data already loaded into your warehouse and transforms it into clean, analytics-ready tables and views using SQL SELECT statements. It compiles your models, resolves dependencies via a DAG, runs tests, and generates documentation automatically. Every transformation is version-controlled and auditable.

What does dbt stand for?

dbt stands for data build tool. It is an open-source transformation framework that lets data teams write SQL-based models, run automated tests, and generate documentation directly inside their data warehouse. It handles the "T" in ELT. It does not extract or load data.

What is a dbt model?

A dbt model is a SQL file containing a SELECT statement. When you run dbt, it compiles the model, resolves its dependencies using ref() functions, and materializes the result as a table or view in your warehouse. Models are organized into layers, typically staging, intermediate, and mart, to keep transformation logic clean and reusable.

What is the difference between dbt Core and dbt Cloud?

dbt Core is the free, open-source command-line tool. dbt Cloud is dbt Labs' commercial SaaS platform that wraps dbt Core with a browser-based IDE, job scheduling, and collaboration features. Both run the same transformation engine. The difference is how you operate and manage dbt in production. Teams that need private deployment, Airflow orchestration, or enterprise security often choose alternatives like Datacoves over dbt Cloud. See dbt Core vs dbt Cloud: Key Differences.

What is the real cost of running dbt Core yourself?

dbt Core is free to use. The cost is everything around it. Running dbt in production requires an orchestrator like Airflow, CI/CD pipelines, secrets management, consistent environments across dev and prod, and ongoing maintenance as versions change. For small teams, this overhead can consume more engineering time than it saves. Open source looks free the way a free puppy looks free. For teams that want the control of dbt Core without the infrastructure burden, Datacoves provides managed dbt and Airflow deployed in your private cloud.
