Not long ago, the data analytics world relied on monolithic infrastructures: tightly coupled systems that were difficult to scale, maintain, and adapt to changing needs. These legacy setups often resulted in operational bottlenecks, delayed insights, and high maintenance costs. To overcome these challenges, the industry shifted toward what became known as the Modern Data Stack (MDS), a suite of focused tools optimized for specific stages of the data engineering lifecycle.

This modular approach was revolutionary, allowing organizations to select best-in-class tools like Airflow for orchestration, or a managed version of Airflow from Astronomer or Amazon, without the need to build custom solutions. While the MDS improved scalability, reduced complexity, and enhanced flexibility, it also reshaped the build vs. buy decision for analytics platforms. Today, instead of deciding whether to create a component from scratch, data teams face a new question: Should they build the infrastructure to host open-source tools like Apache Airflow and dbt Core, or purchase their managed counterparts? This article focuses on these two components because pipeline orchestration and data transformation lie at the heart of any organization’s data platform.

When we say build in terms of open-source solutions, we mean building the infrastructure to self-host and manage mature open-source tools like Airflow and dbt. These two tools are popular because they have been vetted by thousands of companies. In addition to hosting and managing them, engineers must ensure the tools interoperate with the rest of their stack and handle security, scalability, and reliability. Needless to say, building is a huge undertaking that should not be taken lightly.

dbt and Airflow both started out as open-source tools, which were freely available to use due to their permissive licensing terms. Over time, cloud-based managed offerings of these tools were launched to simplify the setup and development process. These managed solutions build upon the open-source foundation, incorporating proprietary features like enhanced user interfaces, automation, security integration, and scalability. The goal is to make the tools more convenient and reduce the burden of maintaining infrastructure while lowering overall development costs. In other words, paid versions arose out of the pain points of self-managing the open-source tools.

This raises an important question: Should you self-manage or pay for your open-source analytics tools?

As with most things, both options come with trade-offs, and the “right” decision depends on your organization’s needs, resources, and priorities. By understanding the pros and cons of each approach, you can choose the option that aligns with your goals, budget, and long-term vision.

A team building Airflow in-house may spend weeks configuring a Kubernetes-backed deployment, managing Python dependencies, and setting up DAG file synchronization via S3 or Git. While the outcome can be tailored to their needs, the time and expertise required represent a significant investment.

Before moving on to the buy trade-offs, it is important to set the record straight. You may have noticed that we did not include “the tool is free to use” as one of our pros for building with open-source. You might have guessed from the title of this section, but many people incorrectly believe that building their MDS with open-source tools like dbt is free. In reality, many factors contribute to the true cost of dbt, and the same is true for Airflow.

How can that be? Setting up and managing the infrastructure for Airflow and dbt isn’t plug and play. Day-to-day work such as managing Python virtual environments, keeping dependencies in check, and tackling scaling challenges requires ongoing expertise and attention. Hiring a team to handle this will be critical, particularly as you scale. Avoiding costly mistakes takes experienced engineers with competitive salaries and benefits, which can easily cost anywhere from $5,000 to $26,000+/month depending on the size of your team.
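To put that monthly range in annual terms, here is a minimal arithmetic sketch. It only multiplies the two figures quoted above by twelve; the function name is ours, and the upper bound stays open-ended because the quoted range ends in a "+".

```python
# Monthly staffing range quoted in the text (USD).
# The upper bound is open-ended in the source ("$26,000+").
MONTHLY_LOW = 5_000
MONTHLY_HIGH = 26_000


def annualize(monthly_cost: int) -> int:
    """Convert a monthly cost into a 12-month figure."""
    return monthly_cost * 12


if __name__ == "__main__":
    print(f"Low end:  ${annualize(MONTHLY_LOW):,} per year")
    print(f"High end: ${annualize(MONTHLY_HIGH):,}+ per year")
```

Even at the low end, staffing alone amounts to $60,000 per year before any cloud infrastructure is paid for.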

In addition to the cost of salaries, let’s look at other possible hidden costs that come with using open-source tools.

The time it takes to configure, customize, and maintain a complex open-source solution is often underestimated. It’s not until your team is deep in the weeds (resolving issues, figuring out integrations, and troubleshooting configurations) that the actual costs start to surface. With each passing day your ROI is threatened. You want to start gathering insights from your data as soon as possible. Datacoves helped Johnson and Johnson set up their data stack in weeks, and when issues arise, you will need expertise to accelerate the time to resolution.

And then there’s the learning curve. Not all engineers on your team will be seniors, and turnover is inevitable. New hires will need time to get up to speed before they can contribute effectively. This is the human side of technology: while the tools themselves might move fast, people don’t. That ramp-up period, filled with training and trial-and-error, represents a hidden cost.

Security and compliance add another layer of complexity. With open-source tools, your team is responsible for implementing best practices—like securely managing sensitive credentials with a solution like AWS Secrets Manager. Unlike managed solutions, these features don’t come prepackaged and need to be integrated with the system.
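As an illustration of the kind of integration work this implies, the sketch below reads a JSON secret from AWS Secrets Manager with boto3. The secret name `analytics/warehouse` and the helper name `get_secret` are hypothetical, and the sketch assumes the secret is stored as a JSON string and that AWS credentials are already configured in the environment.

```python
import json
from typing import Any, Dict, Optional


def get_secret(secret_id: str, client: Optional[Any] = None) -> Dict[str, Any]:
    """Fetch a JSON-formatted secret from AWS Secrets Manager as a dict.

    `client` can be injected for testing; by default a boto3 client is created.
    """
    if client is None:
        # Imported lazily so this module can be loaded without boto3 installed.
        import boto3

        client = boto3.client("secretsmanager")
    response = client.get_secret_value(SecretId=secret_id)
    # Assumes the secret was stored as a JSON string (not binary).
    return json.loads(response["SecretString"])


if __name__ == "__main__":
    # "analytics/warehouse" is a hypothetical secret name.
    credentials = get_secret("analytics/warehouse")
    print(sorted(credentials.keys()))
```

Keeping the lookup in one helper means credentials never need to be written into DAG files or dbt profiles, but rotation, IAM policies, and auditing remain your team's responsibility.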

Compliance is no different. Ensuring your solution meets enterprise governance requirements takes time, research, and careful implementation. It’s a process of iteration and refinement, and every hour spent here is another hidden cost. If it is not done correctly, it also puts security at risk.

Scaling open-source tools is where things often get complicated. Beyond everything already mentioned, your team will need to ensure the solution can handle growth. For many organizations, this means deploying on Kubernetes. But Kubernetes comes with a steep learning curve and operational challenges. Keeping a knowledgeable engineer available to handle unexpected issues and downtime can itself become a challenge. Extended downtime is another hidden cost, because business users who have come to rely on your insights are directly affected.

A managed solution for Airflow and dbt can solve many of the problems that come with building your own solution from open-source tools, offering benefits such as hassle-free maintenance, an improved UI/UX, and integrated functionality. Let’s take a look at the pros.

With a solution like MWAA, teams can leverage managed Airflow and reduce their infrastructure worries. However, additional configuration and development will still be needed to use it with dbt and to troubleshoot infrastructure issues such as containers running out of memory.

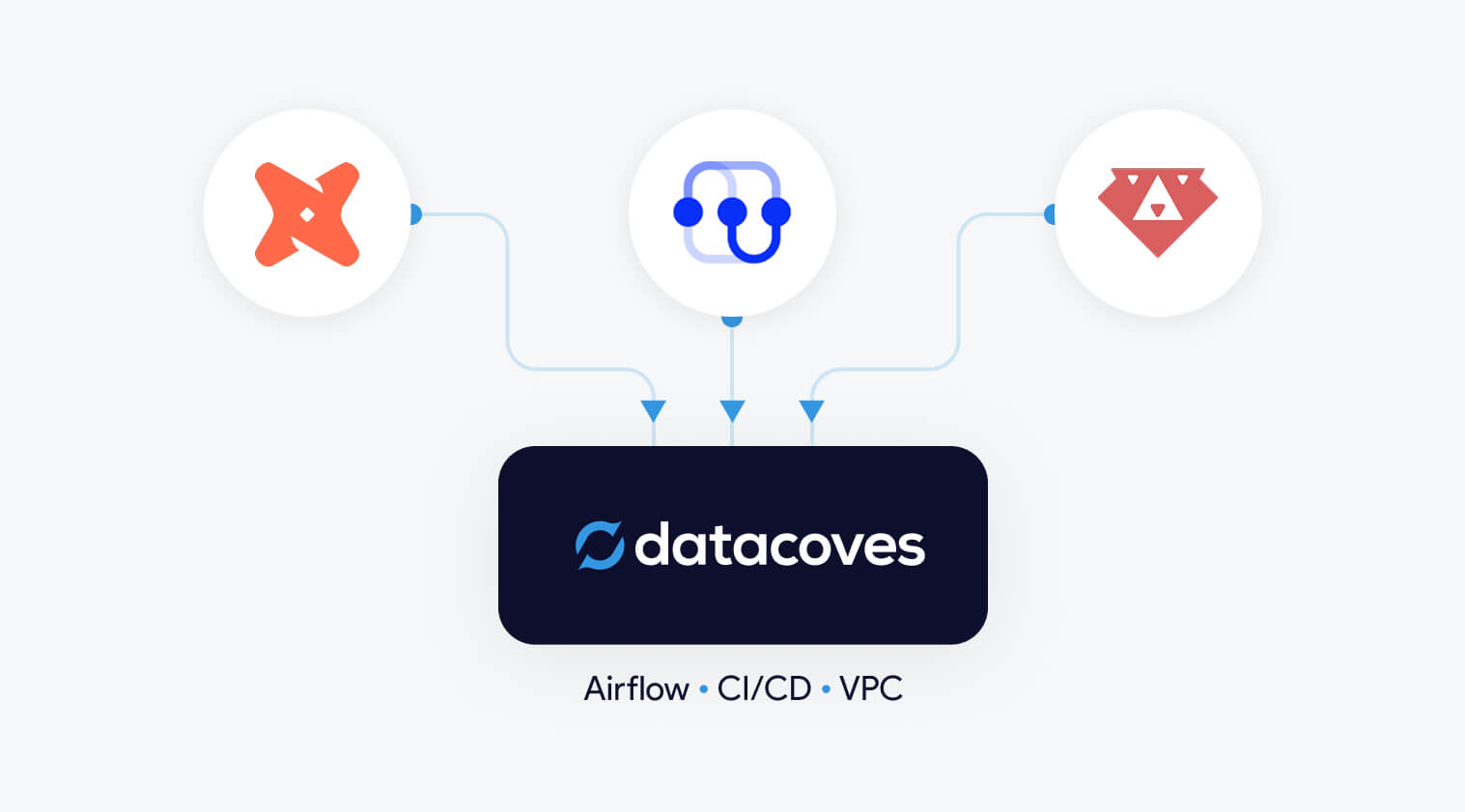

For data teams, the allure of a custom-built solution often lies in its promise of complete control and customization. However, building this requires significant time, expertise, and ongoing maintenance. Datacoves bridges the gap between custom-built flexibility and the simplicity of managed services, offering the best of both worlds.

With Datacoves, teams can leverage managed Airflow and pre-configured dbt environments to eliminate the operational burden of infrastructure setup and maintenance. This allows data teams to focus on what truly matters—delivering insights and driving business decisions—without being bogged down by tool management.

Unlike other managed solutions for dbt or Airflow, which often compromise on flexibility for the sake of simplicity, Datacoves retains the adaptability that custom builds are known for. By combining this flexibility with the ease and efficiency of managed services, Datacoves empowers teams to accelerate their analytics workflows while ensuring scalability and control.

Datacoves doesn’t just run the open-source solutions; through real-world implementations, the platform has been molded to handle enterprise complexity while simplifying project onboarding. With Datacoves, teams don’t have to compromise on features like Datacoves-Mesh (aka dbt-mesh), column-level lineage, GenAI, Semantic Layer, etc. Best of all, the company’s goal is to make you successful and remove hosting complexity without introducing vendor lock-in. What Datacoves does, you can do yourself if given enough time, experience, and money. Finally, for security-conscious organizations, Datacoves is the only solution on the market that can be deployed in your private cloud with white-glove enterprise support.

Datacoves isn’t just a platform—it’s a partnership designed to help your data team unlock their potential. With infrastructure taken care of, your team can focus on what they do best: generating actionable insights and maximizing your ROI.

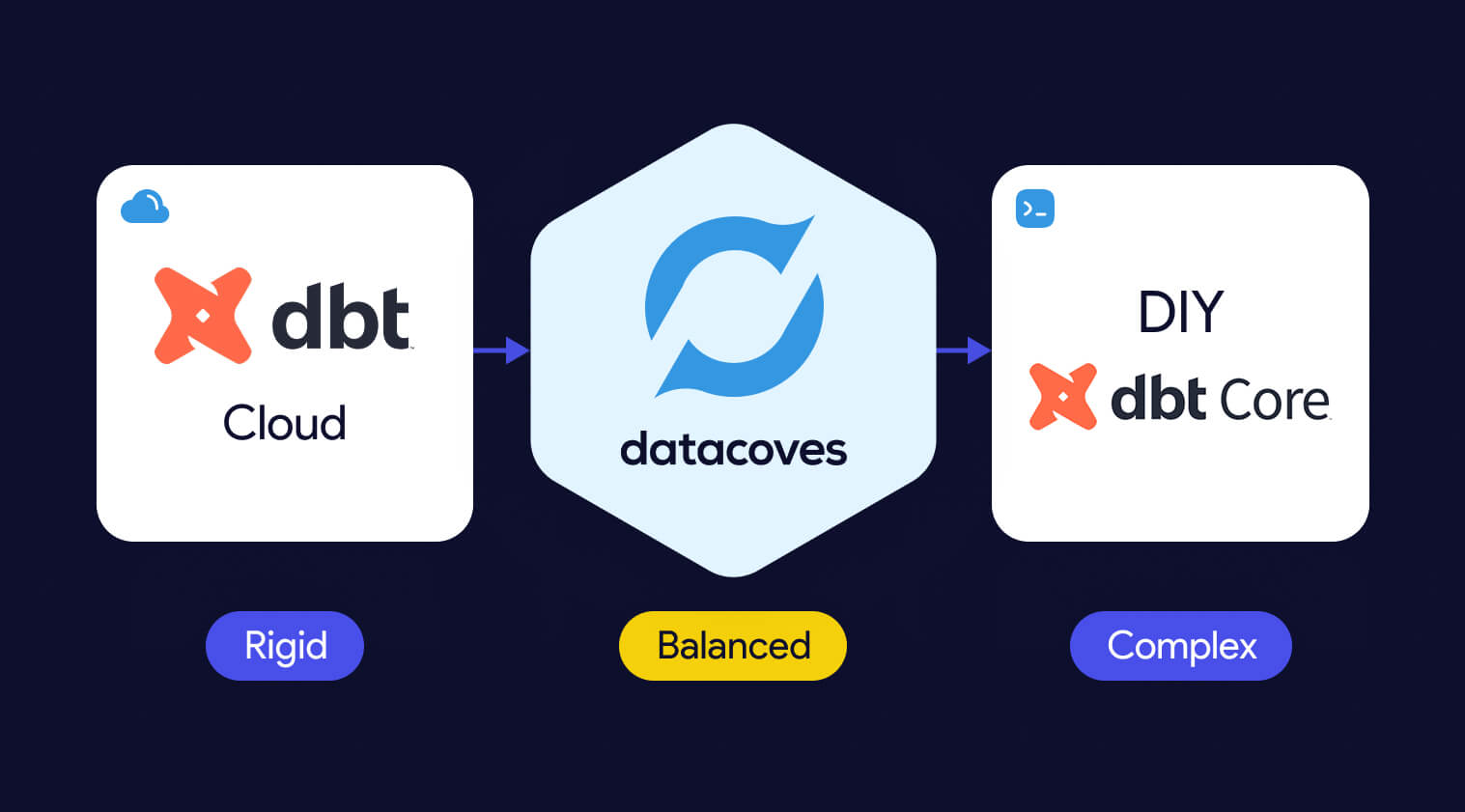

The build vs. buy debate has long been a challenge for data teams, with building offering flexibility at the cost of complexity, and buying sacrificing flexibility for simplicity. As discussed earlier in the article, solutions like dbt and Airflow are powerful, but managing them in-house requires significant time, resources, and expertise. On the other hand, managed offerings like dbt Cloud and MWAA simplify operations but often limit customization and control.

Datacoves bridges this gap, providing a managed platform that delivers the flexibility and control of a custom build without the operational headaches. By eliminating the need to manage infrastructure, scaling, and security, Datacoves enables data teams to focus on what matters most: delivering actionable insights and driving business outcomes.

As highlighted in Fundamentals of Data Engineering, data teams should prioritize extracting value from data rather than managing the tools that support them. Datacoves embodies this principle, making the argument to build obsolete. Why spend weeks—or even months—building when you can have the customization and adaptability of a build with the ease of a buy? Datacoves is not just a solution; it’s a rethinking of how modern data teams operate, helping you achieve your goals faster, with fewer trade-offs.

The top dbt alternatives include Datacoves, SQLMesh, Bruin Data, Dataform, and visual ETL tools such as Alteryx, Matillion, and Informatica. Code-first engines offer stronger rigor, testing, and CI/CD, while GUI platforms emphasize ease of use and rapid prototyping. Teams choose these alternatives when they need more security, governance, or flexibility than dbt Core or dbt Cloud provide.


Teams explore dbt alternatives when they need stronger governance, private deployments, or support for Python and code-first workflows that go beyond SQL. Many also prefer GUI-based ETL tools for faster onboarding. Recent market consolidation, including Fivetran acquiring SQLMesh and merging with dbt Labs, has increased concerns about vendor lock-in, which makes tool neutrality and platform flexibility more important than ever.

Teams look for dbt alternatives when they need stronger orchestration, consistent development environments, Python support, or private cloud deployment options that dbt Cloud does not provide.

Organizations evaluating dbt alternatives typically compare tools across three categories. Each category reflects a different approach to data transformation, development preferences, and organizational maturity.

Organizations consider alternatives to dbt Cloud when they need more flexibility, stronger security, or support for development workflows that extend beyond dbt. Teams comparing platform options often begin by evaluating the differences between dbt Cloud vs dbt Core.

Running enterprise-scale ELT pipelines often requires a full orchestration layer, consistent development environments, and private deployment options that dbt Cloud does not provide. Costs can also increase at scale (see our breakdown of dbt pricing considerations), and some organizations prefer to avoid features that are not open source to reduce long-term vendor lock-in.

This category includes platforms that deliver the benefits of dbt Cloud while providing more control, extensibility, and alignment with enterprise data platform requirements.

Datacoves provides a secure, flexible platform that supports dbt, SQLMesh, and Bruin in a unified environment with private cloud or VPC deployment.

Datacoves is an enterprise data platform that serves as a secure, flexible alternative to dbt Cloud. It supports dbt Core, SQLMesh, and Bruin inside a unified development and orchestration environment, and it can be deployed in your private cloud or VPC for full control over data access and governance.

Benefits

Flexibility and Customization:

Datacoves provides a customizable in-browser VS Code IDE, Git workflows, and support for Python libraries and VS Code extensions. Teams can choose the transformation engine that fits their needs without being locked into a single vendor.

Handling Enterprise Complexity:

Datacoves includes managed Airflow for end-to-end orchestration, making it easy to run dbt and Airflow together without maintaining your own infrastructure. It standardizes development environments, manages secrets, and supports multi-team and multi-project workflows without platform drift.

Cost Efficiency:

Datacoves reduces operational overhead by eliminating the need to maintain separate systems for orchestration, environments, CI, logging, and deployment. Its pricing model is predictable and designed for enterprise scalability.

Data Security and Compliance:

Datacoves can be deployed fully inside your VPC or private cloud. This gives organizations complete control over identity, access, logging, network boundaries, and compliance with industry and internal standards.

Reduced Vendor Lock-In:

Datacoves supports dbt, SQLMesh, and Bruin Data, giving teams long-term optionality. This avoids being locked into a single transformation engine or vendor ecosystem.

Running dbt Core yourself is a flexible option that gives teams full control over how dbt executes. It is also the most resource-intensive approach. Teams choosing DIY dbt Core must manage orchestration, scheduling, CI, secrets, environment consistency, and long-term platform maintenance on their own.

Benefits

Full Control:

Teams can configure dbt Core exactly as they want and integrate it with internal tools or custom workflows.

Cost Flexibility:

There are no dbt Cloud platform fees, but total cost of ownership often increases as the system grows.

Considerations

High Maintenance Overhead:

Teams must maintain Airflow or another orchestrator, build CI pipelines, manage secrets, and keep development environments consistent across users.

Requires Platform Engineering Skills:

DIY dbt Core works best for teams with strong Kubernetes, CI, Python, and DevOps expertise. Without this expertise, the environment becomes fragile over time.

Slow to Scale:

As more engineers join the team, keeping dbt environments aligned becomes challenging. Onboarding, upgrades, and platform drift create operational friction.

Security and Compliance Responsibility:

Identity, permissions, logging, and network controls must be designed and maintained internally, which can be significant for regulated organizations.
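One small piece of the environment-consistency problem described above can be sketched as a version check that each developer or CI job runs before working with dbt. This is a minimal illustration, not a full solution: the pinned versions are hypothetical placeholders, and `find_drift` is our own helper name.

```python
from importlib import metadata
from typing import Callable, Dict, List

# Hypothetical pins for illustration; use the versions your team standardizes on.
EXPECTED_VERSIONS: Dict[str, str] = {
    "dbt-core": "1.10.17",
    "dbt-duckdb": "1.10.0",
}


def find_drift(
    expected: Dict[str, str],
    installed_version: Callable[[str], str] = metadata.version,
) -> List[str]:
    """Return one message per package that is missing or not at the pinned version."""
    problems = []
    for package, wanted in expected.items():
        try:
            found = installed_version(package)
        except metadata.PackageNotFoundError:
            problems.append(f"{package}: not installed (expected {wanted})")
            continue
        if found != wanted:
            problems.append(f"{package}: found {found}, expected {wanted}")
    return problems


if __name__ == "__main__":
    for message in find_drift(EXPECTED_VERSIONS):
        print(message)
```

A check like this catches drift after it happens. Preventing it in the first place usually means shipping a shared, versioned development environment, which is part of the platform work a DIY team takes on.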

Teams that prefer code-first tools often look for dbt alternatives that provide strong SQL modeling, Python support, and seamless integration with CI/CD workflows and automated testing. These are part of a broader set of data transformation tools. Code-based ETL tools give developers greater control over transformations, environments, and orchestration patterns than GUI platforms. Below are four code-first contenders that organizations should evaluate.

Code-first dbt alternatives like SQLMesh, Bruin Data, and Dataform provide stronger CI/CD integration, automated testing, and more control over complex transformation workflows.

SQLMesh is an open-source framework for SQL and Python-based data transformations. It provides strong visibility into how changes impact downstream models and uses virtual data environments to preview changes before they reach production.

Benefits

Efficient Development Environments:

Virtual environments reduce unnecessary recomputation and speed up iteration.

Considerations

Part of the Fivetran Ecosystem:

SQLMesh was acquired by Fivetran, which may influence its future roadmap and level of independence.

Dataform is a SQL-based transformation framework built specifically for BigQuery. It enables teams to create table definitions, manage dependencies, document models, and configure data quality tests inside the Google Cloud ecosystem. It also provides version control and integrates with GitHub and GitLab.

Benefits

Centralized BigQuery Development:

Dataform keeps all modeling and testing within BigQuery, reducing context switching and making it easier for teams to collaborate using familiar SQL workflows.

Considerations

Focused Only on the GCP Ecosystem:

Because Dataform is geared toward BigQuery, it may not be suitable for organizations that use multiple cloud data warehouses.

AWS Glue is a serverless data integration service that supports Python-based ETL and transformation workflows. It works well for organizations operating primarily in AWS and provides native integration with services like S3, Lambda, and Athena.

Benefits

Python-First ETL in AWS:

Glue supports Python scripts and PySpark jobs, making it a good fit for engineering teams already invested in the AWS ecosystem.

Considerations

Requires Engineering Expertise:

Glue can be complex to configure and maintain, and its Python-centric approach may not be ideal for SQL-first analytics teams.

Bruin is a modern SQL-based data modeling framework designed to simplify development, testing, and environment-aware deployments. It offers a familiar SQL developer experience while adding guardrails and automation to help teams manage complex transformation logic.

Benefits

Modern SQL Modeling Experience:

Bruin provides a clean SQL-first workflow with strong dependency management and testing.

Considerations

Growing Ecosystem:

Bruin is newer than dbt and has a smaller community and fewer third-party integrations.

While code-based transformation tools provide the most flexibility and long-term maintainability, some organizations prefer graphical user interface (GUI) tools. These platforms use visual, drag-and-drop components to build data integration and transformation workflows. Many of these platforms fall into the broader category of no-code ETL tools. GUI tools can accelerate onboarding for teams less comfortable with code editors and may simplify development in the short term. Below are several GUI-based options that organizations often consider as dbt alternatives.

GUI-based dbt alternatives such as Matillion, Informatica, and Alteryx use drag-and-drop interfaces that simplify development and accelerate onboarding for mixed-skill teams.

Matillion is a cloud-based data integration platform that enables teams to design ETL and transformation workflows through a visual, drag-and-drop interface. It is built for ease of use and supports major cloud data warehouses such as Amazon Redshift, Google BigQuery, and Snowflake.

Benefits

User-Friendly Visual Development:

Matillion simplifies pipeline building with a graphical interface, making it accessible for users who prefer low-code or no-code tooling.

Considerations

Limited Flexibility for Complex SQL Modeling:

Matillion’s visual approach can become restrictive for advanced transformation logic or engineering workflows that require version control and modular SQL development.

Informatica is an enterprise data integration platform with extensive ETL capabilities, hundreds of connectors, data quality tooling, metadata-driven workflows, and advanced security features. It is built for large and diverse data environments.

Benefits

Enterprise-Scale Data Management:

Informatica supports complex data integration, governance, and quality requirements, making it suitable for organizations with large data volumes and strict compliance needs.

Considerations

High Complexity and Cost:

Informatica’s power comes with a steep learning curve, and its licensing and operational costs can be significant compared to lighter-weight transformation tools.

Alteryx is a visual analytics and data preparation platform that combines data blending, predictive modeling, and spatial analysis in a single GUI-based environment. It is designed for analysts who want to build workflows without writing code and can be deployed on-premises or in the cloud.

Benefits

Powerful GUI Analytics Capabilities:

Alteryx allows users to prepare data, perform advanced analytics, and generate insights in one tool, enabling teams without strong coding skills to automate complex workflows.

Considerations

High Cost and Limited SQL Modeling Flexibility:

Alteryx is one of the more expensive platforms in this category and is less suited for SQL-first transformation teams who need modular modeling and version control.

Azure Data Factory (ADF) is a fully managed, serverless data integration service that provides a visual interface for building ETL and ELT pipelines. It integrates natively with Azure storage, compute, and analytics services, allowing teams to orchestrate and monitor pipelines without writing code.

Benefits

Strong Integration for Microsoft-Centric Teams:

ADF connects seamlessly with other Azure services and supports a pay-as-you-go model, making it ideal for organizations already invested in the Microsoft ecosystem.

Considerations

Limited Transformation Flexibility:

ADF excels at data movement and orchestration but offers limited capabilities for complex SQL modeling, making it less suitable as a primary transformation engine.

Talend provides an end-to-end data management platform with support for batch and real-time data integration, data quality, governance, and metadata management. Talend Data Fabric combines these capabilities into a single low-code environment that can run in cloud, hybrid, or on-premises deployments.

Benefits

Comprehensive Data Quality and Governance:

Talend includes built-in tools for data cleansing, validation, and stewardship, helping organizations improve the reliability of their data assets.

Considerations

Broad Platform, Higher Operational Complexity:

Talend’s wide feature set can introduce complexity, and teams may need dedicated expertise to manage the platform effectively.

SQL Server Integration Services (SSIS) is part of the Microsoft SQL Server ecosystem and provides data integration and transformation workflows. It supports extracting, transforming, and loading data from a wide range of sources, and offers graphical tools and wizards for designing ETL pipelines.

Benefits

Strong Fit for SQL Server-Centric Teams:

SSIS integrates deeply with SQL Server and other Microsoft products, making it a natural choice for organizations with a Microsoft-first architecture.

Considerations

Not Designed for Modern Cloud Data Warehouses:

SSIS is optimized for on-premises SQL Server environments and is less suitable for cloud-native architectures or modern ELT workflows.

Recent consolidation, including Fivetran acquiring SQLMesh and merging with dbt Labs, has increased concerns about vendor lock-in and pushed organizations to evaluate more flexible transformation platforms.

Organizations explore dbt alternatives when dbt no longer meets their architectural, security, or workflow needs. As teams scale, they often require stronger orchestration, consistent development environments, mixed SQL and Python workflows, and private deployment options that dbt Cloud does not provide.

Some teams prefer code-first engines for deeper CI/CD integration, automated testing, and strong guardrails across developers. Others choose GUI-based tools for faster onboarding or broader integration capabilities. Recent market consolidation, including Fivetran acquiring SQLMesh and merging with dbt Labs, has also increased concerns about vendor lock-in.

These factors lead many organizations to evaluate tools that better align with their governance requirements, engineering preferences, and long-term strategy.

DIY dbt Core offers full control but requires significant engineering work to manage orchestration, CI/CD, security, and long-term platform maintenance.

Running dbt Core yourself can seem attractive because it offers full control and avoids platform subscription costs. However, building a stable, secure, and scalable dbt environment requires significantly more than executing dbt build on a server. It involves managing orchestration and CI/CD, keeping development environments consistent, and handling long-term platform maintenance, all of which require mature DataOps practices.
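To make that gap concrete, here is a minimal sketch of the "just run dbt build" step as a scheduler or CI job might call it. The helper names are ours and the target name `prod` is a placeholder. `--target` and `--select` are standard dbt CLI options. Everything around this call (scheduling, retries, alerting, secrets, and environment setup) is what a DIY platform still has to provide.

```python
import subprocess
from typing import Callable, List, Optional


def dbt_build_command(target: str, select: Optional[str] = None) -> List[str]:
    """Assemble the dbt CLI invocation for a given target and optional selector."""
    command = ["dbt", "build", "--target", target]
    if select:
        command += ["--select", select]
    return command


def run_dbt_build(
    target: str,
    select: Optional[str] = None,
    runner: Callable = subprocess.run,
) -> bool:
    """Run dbt build and report success based on the process exit code."""
    result = runner(dbt_build_command(target, select), check=False)
    return result.returncode == 0


if __name__ == "__main__":
    # "prod" is a placeholder target name defined in your own profiles.yml.
    succeeded = run_dbt_build("prod")
    raise SystemExit(0 if succeeded else 1)
```

The `runner` parameter exists only so the function can be exercised without dbt installed; in normal use it defaults to `subprocess.run`.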

The true question for most organizations is not whether they can run dbt Core themselves, but whether it is the best use of engineering time. This is essentially a question of whether to build vs buy your data platform. DIY dbt platforms often start simple and gradually accumulate technical debt as teams grow, pipelines expand, and governance requirements increase.

For many organizations, DIY works in the early stages but becomes difficult to sustain as the platform matures.

The right dbt alternative depends on your team’s skills, governance requirements, pipeline complexity, and long-term data platform strategy.

Selecting the right dbt alternative depends on your team’s skills, security requirements, and long-term data platform strategy. Each category of tools solves different problems, so it is important to evaluate your priorities before committing to a solution.

If these are priorities, a platform with secure deployment options or multi-engine support may be a better fit than dbt Cloud.

Recent consolidation in the ecosystem has raised concerns about vendor dependency. Organizations that want long-term flexibility often look for:

Consider platform fees, engineering maintenance, onboarding time, and the cost of additional supporting tools such as orchestrators, IDEs, and environment management.

dbt remains a strong choice for SQL-based transformations, but it is not the only option. As organizations scale, they often need stronger orchestration, consistent development environments, Python support, and private deployment capabilities that dbt Cloud or DIY dbt Core may not provide. Evaluating alternatives helps ensure that your transformation layer aligns with your long-term platform and governance strategy.

Code-first tools like SQLMesh, Bruin Data, and Dataform offer strong engineering workflows, while GUI-based tools such as Matillion, Informatica, and Alteryx support faster onboarding for mixed-skill teams. The right choice depends on the complexity of your pipelines, your team’s technical profile, and the level of security and control your organization requires.

Datacoves provides a flexible, secure alternative that supports dbt, SQLMesh, and Bruin in a unified environment. With private cloud or VPC deployment, managed Airflow, and a standardized development experience, Datacoves helps teams avoid vendor lock-in while gaining an enterprise-ready platform for analytics engineering.

Selecting the right dbt alternative is ultimately about aligning your transformation approach with your data architecture, governance needs, and long-term strategy. Taking the time to assess these factors will help ensure your platform remains scalable, secure, and flexible for your future needs.

A lean analytics stack built with dlt, DuckDB, DuckLake, and dbt delivers fast insights without the cost or complexity of a traditional cloud data warehouse. For teams prioritizing speed, simplicity, and control, this architecture provides a practical path from raw data to production-ready analytics.

In practice, teams run this stack using Datacoves to standardize environments, manage workflows, and apply production guardrails without adding operational overhead.


A lean analytics stack works when each tool has a clear responsibility. In this architecture, ingestion, storage, and transformation are intentionally separated so the system stays fast, simple, and flexible.

Together, these tools form a modern lakehouse-style stack without the operational cost of a traditional cloud data warehouse.

Running DuckDB locally is easy. Running it consistently across machines, environments, and teams is not. This is where MotherDuck matters.

MotherDuck provides a managed control plane for DuckDB and DuckLake, handling authentication, metadata coordination, and cloud-backed storage without changing how DuckDB works. You still query DuckDB. You just stop worrying about where it runs.

To get started, store your MotherDuck access token in an environment variable (MOTHERDUCK_TOKEN). This single token is used by dlt, DuckDB, and dbt to authenticate securely with MotherDuck. No additional credentials or service accounts are required.
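Before running any of the tools, it can help to confirm the token is actually present. The sketch below is a minimal check under a few assumptions: the helper names are ours, the database name `raw` is the one used later in this walkthrough, and the token is passed explicitly through the `motherduck_token` connection-string parameter rather than relying on implicit environment lookup.

```python
import os
from typing import Mapping


def motherduck_token(env: Mapping[str, str] = os.environ) -> str:
    """Return the MotherDuck token from the environment, or fail with a clear error."""
    token = env.get("MOTHERDUCK_TOKEN", "").strip()
    if not token:
        raise RuntimeError("MOTHERDUCK_TOKEN is not set")
    return token


def connect(database: str = "raw"):
    """Open a DuckDB connection to a MotherDuck database using the token."""
    token = motherduck_token()
    # Imported lazily so the token check can be used without duckdb installed.
    import duckdb

    return duckdb.connect(f"md:{database}?motherduck_token={token}")


if __name__ == "__main__":
    connection = connect()
    print(connection.execute("SELECT 42").fetchone())
```

Failing early with a readable message is cheaper than debugging an authentication error from inside an ingestion or dbt run.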

At this point, you have:

That consistency is what makes the rest of the stack reliable.

In a lean data stack, ingestion should be reliable, repeatable, and boring. That is exactly what dlt is designed to do.

dlt loads raw data into DuckDB with strong defaults for schema handling, incremental loads, and metadata tracking. It removes the need for custom ingestion frameworks while remaining flexible enough for real-world data sources.

In this example, dlt ingests a CSV file and loads it into a DuckDB database hosted in MotherDuck. The same pattern works for APIs, databases, and file-based sources.

To keep dependencies lightweight and avoid manual environment setup, we use uv to run the ingestion script with inline dependencies.

    pip install uv
    touch us_populations.py
    chmod +x us_populations.py

The script below uses dlt’s MotherDuck destination. Authentication is handled through the MOTHERDUCK_TOKEN environment variable, and data is written to a raw schema in DuckDB.

    #!/usr/bin/env -S uv run
    # /// script
    # dependencies = [
    #   "dlt[motherduck]==1.16.0",
    #   "psutil",
    #   "pandas",
    #   "duckdb==1.3.0"
    # ]
    # ///
    """Loads a CSV file to MotherDuck"""
    import dlt
    import pandas as pd
    from utils.datacoves_utils import pipelines_dir

    @dlt.resource(write_disposition="replace")
    def us_population():
        url = "https://raw.githubusercontent.com/dataprofessor/dashboard-v3/master/data/us-population-2010-2019.csv"
        df = pd.read_csv(url)
        yield df

    @dlt.source
    def us_population_source():
        return [us_population()]

    if __name__ == "__main__":
        # Configure MotherDuck destination with explicit credentials
        motherduck_destination = dlt.destinations.motherduck(
            destination_name="motherduck",
            credentials={
                "database": "raw",
                "motherduck_token": dlt.secrets.get("MOTHERDUCK_TOKEN")
            }
        )

        pipeline = dlt.pipeline(
            progress="log",
            pipeline_name="us_population_data",
            destination=motherduck_destination,
            pipelines_dir=pipelines_dir,
            # dataset_name is the target schema name in the "raw" database
            dataset_name="us_population"
        )

        load_info = pipeline.run([
            us_population_source()
        ])

        print(load_info)

Running the script loads the data into DuckDB:

    ./us_populations.py

At this point, raw data is available in DuckDB and ready for transformation. Ingestion is fully automated, reproducible, and versionable, without introducing a separate ingestion platform.
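A quick sanity check after loading is to count the rows that landed. The sketch below assumes dlt named the table after the resource (`us_population`) inside the `us_population` schema set by `dataset_name`; confirm the actual names in your own database. The helper name is ours, and it accepts any DuckDB-style connection object.

```python
def count_loaded_rows(
    conn,
    schema: str = "us_population",
    table: str = "us_population",
) -> int:
    """Return the number of rows in the loaded table.

    `conn` is any object with a DuckDB-style `execute(sql).fetchone()` interface,
    for example a connection opened against the "raw" database.
    """
    query = f'SELECT count(*) FROM "{schema}"."{table}"'
    return conn.execute(query).fetchone()[0]
```

Passing the connection in keeps the check independent of how you authenticate, and makes it easy to reuse in a CI step that fails when the count is zero.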

Once raw data is loaded into DuckDB, transformations should follow the same disciplined workflow teams already use elsewhere. This is where dbt fits naturally.

dbt provides version-controlled models, testing, documentation, and repeatable builds. The difference in this stack is not how dbt works, but where tables are materialized.

By enabling DuckLake, dbt materializes tables as Parquet files with centralized metadata instead of opaque DuckDB-only files. This turns DuckDB into a true lakehouse engine while keeping the developer experience unchanged.

To get started, install dbt and the DuckDB adapter:

    pip install dbt-core==1.10.17
    pip install dbt-duckdb==1.10.0
    dbt init

Next, configure your dbt profile to target DuckLake through MotherDuck:

    default:
      outputs:
        dev:
          type: duckdb
          # This requires the environment var MOTHERDUCK_TOKEN to be set
          path: 'md:datacoves_ducklake'
          threads: 4
          schema: dev # this will be the prefix used in the duckdb schema
          is_ducklake: true
      target: dev

This configuration does a few important things:

Authentication uses the MOTHERDUCK_TOKEN environment variable.

With this in place, dbt models behave exactly as expected. Models materialized as tables are stored in DuckLake, while views and ephemeral models remain lightweight and fast.
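Since the profile relies on MOTHERDUCK_TOKEN being present, one option is a small preflight check before invoking dbt, so that a missing token fails fast with a clear message. This is a minimal sketch; `missing_env_vars` is a hypothetical helper and not part of dbt or MotherDuck.

```python
import os

# Variables the dbt profile expects to find in the environment.
REQUIRED_ENV_VARS = ("MOTHERDUCK_TOKEN",)


def missing_env_vars(environ=None, required=REQUIRED_ENV_VARS):
    """Return the names of required variables that are unset or empty."""
    environ = os.environ if environ is None else environ
    return [name for name in required if not environ.get(name)]
```

A wrapper script or CI job could call `missing_env_vars()` and stop with a readable error when the returned list is not empty, rather than letting the dbt run fail partway through.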

From here, teams can:

This is the key advantage of the stack: modern analytics engineering practices, without the overhead of a traditional warehouse.

This lean stack is not trying to replace every enterprise data warehouse. It is designed for teams that value speed, simplicity, and cost control over heavyweight infrastructure.

This approach works especially well when:

The trade-offs are real and intentional. DuckDB and DuckLake excel at analytical workloads and developer productivity, but they are not designed for high-concurrency BI at massive scale. Teams with hundreds of dashboards and thousands of daily users may still need a traditional warehouse.

Where this stack shines is time to value. You can move from raw data to trusted analytics quickly, with minimal infrastructure, and without locking yourself into a platform that is expensive to unwind later.

In practice, many teams use this architecture as:

When paired with Datacoves, teams get the operational guardrails this stack needs to run reliably. Datacoves standardizes environments, integrates orchestration and CI/CD, and applies best practices so the simplicity of the stack does not turn into fragility over time.

Teams often run this stack with Datacoves to standardize environments, apply production guardrails, and avoid the operational drag of DIY platform management.

If you want to see this stack running end to end, watch the Datacoves + MotherDuck webinar. It walks through ingestion with dlt, transformations with dbt and DuckLake, and how teams operationalize the workflow with orchestration and governance.

The session also covers:

The merger of dbt Labs and Fivetran (which we refer to as dbt Fivetran for simplicity) represents a new era in enterprise analytics. The combined company is expected to create a streamlined, end-to-end data workflow consolidating data ingestion, transformation, and activation with the stated goal of reducing operational overhead and accelerating delivery. Yet, at the dbt Coalesce conference in October 2025 and in ongoing conversations with data leaders, many are voicing concerns about price uncertainty, reduced flexibility, and the long-term future of dbt Core.

As enterprises evaluate the implications of this merger, understanding both the opportunities and risks is critical for making informed decisions about their organization's long-term analytics strategy.

In this article, you’ll learn:

1. What benefits the dbt Fivetran merger could offer enterprise data teams

2. Key risks and lessons from past open-source acquisitions

3. How enterprises can manage risks and challenges

4. Practical steps dbt Fivetran can take to address community anxiety

For enterprise data teams, the dbt Fivetran merger may bring compelling opportunities:

1. Integrated Analytics Stack:

The combination of ingestion, transformation, and activation (reverse ETL) processes may enhance onboarding by streamlining contract management, security evaluations, and user training.

2. Resource Investment:

The merged company has the potential to speed up feature development across the data landscape. Open data standards like Iceberg could see increased adoption, fostering interoperability between platforms such as Snowflake and Databricks.

While these prospects are enticing, they are not guaranteed. The newly formed organization now faces the non-trivial task of merging various teams, including Fivetran, HVR (Oct 2021), Census (May 2025), SQLMesh/Tobiko (Sept 2025), and dbt Labs (Oct 2025). Successfully integrating their tools, development practices, and support functions will be crucial. To create a truly seamless, end-to-end platform, alignment of product roadmaps, engineering standards, and operational processes will be necessary. Enterprises should carefully assess the execution risks when considering the promised benefits of this merger, as these advantages hinge on Fivetran's ability to effectively integrate these technologies and teams.

The future openness and flexibility of dbt Core is being questioned, with significant consequences for enterprise data teams that rely on open-source tooling for agility, security, and control.

dbt’s rapid adoption, now exceeding 80,000 projects, was fueled by its permissive Apache License and a vibrant, collaborative community. This openness allowed organizations to deploy, customize, and extend dbt to fit their needs, and enabled companies like Datacoves to build complementary tools, sponsor open-source projects, and simplify enterprise data workflows.

However, recent moves by dbt Labs, accelerated by the Fivetran merger, signal a natural evolution toward monetization and enterprise alignment:

1. Licensing agreement with Snowflake

2. Rewriting dbt Core as dbt Fusion under a more restrictive ELv2 license

3. Introducing a “freemium” model for the dbt VS Code Extension, limiting free use to 15 registered users per organization

While these steps are understandable from a business perspective, they introduce uncertainty and anxiety within the data community. The risk is that the balance between open innovation and commercial control could tip, raising understandable questions about long-term flexibility that enterprises have come to expect from dbt Core.

dbt Labs and Fivetran have both stated that dbt Core's license will not change, and I believe them. The vast majority of dbt users are on dbt Core, and changing the license would risk fragmentation and loss of goodwill in the community. That said, the future vision for dbt is not dbt Core but dbt Fusion.

While I see a future for dbt Core, I don't feel the same about SQLMesh. There is little chance that the dbt Fivetran organization would continue to invest in two open-source projects. It is also unlikely that SQLMesh innovations would make their way into dbt Core, as that would directly compete with dbt Fusion.

Recent history offers important cautionary tales for enterprises. While not direct parallels, they are worth learning from:

1. Terraform: A license change led to fragmentation and the creation of OpenTofu, eroding trust in the original steward.

2. Elasticsearch: License restrictions resulted in the OpenSearch fork, dividing the community and increasing support risks.

3. Redis and MongoDB: Similar license shifts caused forks or migrations to alternative solutions, increasing risk and migration costs.

For enterprise data leaders, these precedents highlight the dangers of vendor fragmentation, increased migration costs, and uncertainty around long-term support. When foundational tools become less open, organizations may face difficult decisions about adapting, migrating, or seeking alternatives. If you're considering your options, check out our Platform Evaluation Worksheet.

On the other hand, there are successful models where open-source projects and commercial offerings coexist and thrive:

1. Airflow: Maintains a permissive license, with commercial providers offering managed services and enterprise features.

2. GitLab, Spark, and Kafka: Each has built a sustainable business around a robust open-source core, monetizing through value-added services and features.

These examples show that a healthy open-source core, supported by managed services and enterprise features, can benefit all stakeholders, provided the commitment to openness remains.

To navigate the evolving landscape, enterprises should:

1. Monitor licensing and governance changes closely.

2. Engage in community and governance discussions to advocate for transparency.

3. Plan for contingencies, including potential migration or multi-vendor strategies.

4. Diversify by avoiding over-reliance on a single vendor or platform.

Avoid Vendor Lock-In:

1. Continue to leverage multiple tools for data ingestion and orchestration (e.g., Airflow) instead of relying solely on a single vendor’s stack.

2. Why? This preserves your ability to adapt as technology and vendor priorities evolve. While tighter tool integration is a potential promise of consolidation, options exist to reduce the burden of a multi-tool architecture.

For instance, Datacoves is built to help enterprises maintain governance, reliability, and freedom of choice to deploy securely in their own network, specifically supporting multi-tool architectures and open standards to minimize vendor lock-in risk.

Demand Roadmap Transparency:

1. Engage with your vendors about their product direction and advocate for community-driven development.

2. Why? Transparency helps align vendor decisions with your business needs and reduces the risk of disruptive surprises.

Participate in Open-Source Communities:

1. Contribute to and help maintain the open-source projects that underpin your data platform.

2. Why? Active participation ensures your requirements are heard and helps sustain the projects you depend on.

Attend and Sponsor Diverse Conferences:

1. Support and participate in community-driven events (such as Airflow Summit) to foster innovation and avoid concentration of influence.

2. Why? Exposure to a variety of perspectives leads to stronger solutions and a healthier ecosystem.

Support OSS Creators Financially and Through Advocacy:

1. Sponsor projects or directly support maintainers of critical open-source tools.

2. Why? Sustainable funding and engagement are vital for the health and reliability of the open-source ecosystem.

Encourage Openness and Diversity

1. Champion Diversity in OSS Governance: Advocate for broad, meritocratic project leadership and a diverse contributor base.

2. Why? Diverse stewardship drives innovation, resilience, and reduces the risk of any one entity dominating the project’s direction.

Long-term analytics success isn’t just about technology selection. It’s about actively shaping the ecosystem through strategic diversification, transparent vendor engagement, and meaningful support of open standards and communities. Enterprises that invest in these areas will be best equipped to thrive, no matter how the vendor landscape evolves.

While both dbt Labs and Fivetran have stated that the dbt Core license would remain permissive, to preserve trust and innovation in the data community, dbt Fivetran should commit to neutral governance and open standards for dbt Core, ensuring it remains a true foundation for collaboration, not fragmentation.

The dbt community has powered a remarkable flywheel of innovation, career growth, and ecosystem expansion. Disrupting this momentum risks technical fragmentation and loss of goodwill, outcomes that benefit no one in the analytics landscape.

To maintain community trust and momentum, dbt Fivetran should:

1. Establish Neutral Governance:

Place dbt Core under independent oversight, where its roadmap is shaped by a diverse set of contributors, not just a single commercial entity. Projects like Iceberg have shown that broad-based governance sustains engagement and innovation, compared to more vendor-driven models like Delta Lake.

2. Consider Neutral Stewardship Models:

One possible long-term approach that has been seen in projects like Iceberg and OpenTelemetry is to place an open-source core under neutral foundation governance (for example, the Linux Foundation or Apache Software Foundation).

While dbt Labs and Fivetran have both reaffirmed their commitment to keeping dbt Core open, exploring such models in the future could further strengthen community trust and ensure continued neutrality as the platform evolves.

3. Encourage Meritocratic Development: Empower a core team representing the broader community to guide dbt Core’s future. This approach minimizes the risk of forks and fragmentation and ensures that innovation is driven by real-world needs.

4. Apply Lessons from MetricFlow: When dbt Labs acquired MetricFlow and changed its license to BSL, it led to further fragmentation in the semantic layer space. Now, with MetricFlow relicensed as Apache and governed by the Open Semantic Interchange (OSI) initiative (including dbt Labs, Snowflake, and Tableau), the project is positioned as a vendor-neutral standard. This kind of model should be considered for dbt Core as well.

1. Technical teams: By ensuring continued access to an open, extensible framework, and reducing the risk of disruptive migration.

2. Business leaders: By protecting investments in analytics workflows and minimizing vendor lock-in or unexpected costs.

Solidifying dbt Core as a true open standard benefits the entire ecosystem, including dbt Fivetran, which is building its future, dbt Fusion, on this foundation. Taking these steps would not only calm community anxiety but also position dbt Fivetran as a trusted leader for the next era of enterprise analytics.

The dbt Fivetran merger represents a defining moment for the modern data stack, promising streamlined workflows while simultaneously raising critical questions about vendor lock-in, open-source governance, and long-term flexibility. Successfully navigating this shift requires a proactive, diversified strategy, one that champions open standards and avoids over-reliance on any single vendor. Enterprises that invest in active community engagement and robust contingency planning will be best equipped to maintain control and unlock maximum value from their analytics platforms.

If your organization is looking for a way to mitigate these risks and secure your workflows with enterprise-grade governance and multi-tool architecture, Datacoves offers a managed platform designed for maximum flexibility and control. For a deeper look, find out what Datacoves has to offer.

Ready to take control of your data future? Contact us today to explore how Datacoves allows organizations to take control while still simplifying platform management and tool integration.

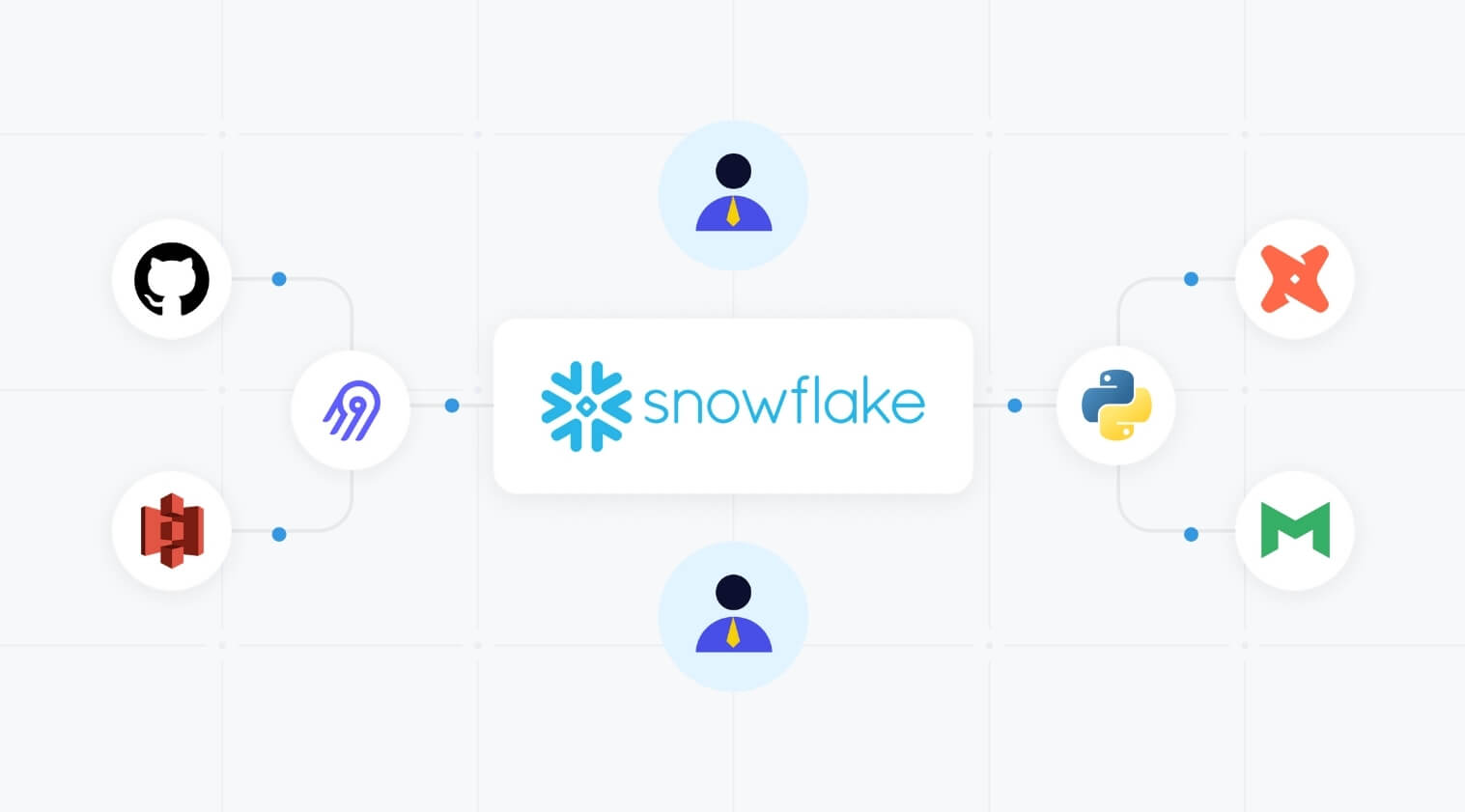

Data orchestration is the foundation that ensures every step in your data value chain runs in the correct order, with the right dependencies, and with full visibility. Without it, even the best tools such as dbt, Airflow, Snowflake, or your BI platform operate in silos. This disconnect creates delays, data fires, and unreliable insights.

For executives, data orchestration is not optional. It prevents fragmented workflows, reduces operational risk, and helps teams deliver trusted insights quickly and consistently. When orchestration is built into the data platform from the start, organizations eliminate hidden technical debt, scale more confidently, and avoid the costly rework that slows innovation.

In short, data orchestration is how modern data teams deliver reliable, end-to-end value without surprises.

In today’s fast-paced business environment, executives are under increased pressure to deliver quick wins and measurable results. However, one capability that is often overlooked is data orchestration.

This oversight can sabotage progress as the promise of data modernization efforts fails to deliver expected outcomes in terms of ROI and improved efficiencies.

In this article, we will explain what data orchestration is, the risks of not implementing proper data orchestration, and how executives benefit from end-to-end data orchestration.

Data orchestration ensures every step in your data value chain runs in the right order, with the right dependencies, and with full visibility.

Data orchestration is the practice of coordinating all the steps in your organization’s data processes so they run smoothly, in the right order, and without surprises. Think of it as the conductor ensuring each instrument plays at the right time to create beautiful music.

Generating insights is a multi-tool process. What’s the problem with this setup? Each of these tools may include its own scheduler, and each will run in a silo. Even if an upstream step fails or is delayed, the subsequent steps will still run. This disconnect leads to surprises for executives expecting trusted insights. This, in turn, leads to delays and data fires, which are disruptive and inefficient for the organization.

Imagine you are baking a chocolate cake. You would need a recipe, all the ingredients, and a functioning oven. However, you wouldn’t turn on the oven before buying the ingredients, and you wouldn’t mix the batter if your milk had spoiled. Without someone orchestrating all the steps in the right sequence, the process becomes disorganized, inefficient, and wasteful. You also know not to continue if there is a critical issue, such as spoiled milk.

Data orchestration solves the problem of having siloed tools by connecting all the steps in the data value chain. This way, if one step is delayed or fails, subsequent steps do not run. With a data orchestration tool, we can also notify someone to resolve the issue so they can act quickly, reducing fires and providing visibility to the entire process.
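The behavior described above can be sketched in a few lines of Python. This is only an illustration of the idea, not how Airflow or any specific orchestrator is implemented. The function `run_pipeline`, the step names, and the `notify` hook are all hypothetical. The standard library's `graphlib.TopologicalSorter` supplies the dependency ordering.

```python
from graphlib import TopologicalSorter


def run_pipeline(steps, dependencies, notify=print):
    """Run steps in dependency order and skip anything downstream of a failure.

    steps: dict mapping step name -> zero-argument callable
    dependencies: dict mapping step name -> set of upstream step names
    Returns a dict mapping step name -> "success", "failed", or "skipped".
    """
    unknown = {up for ups in dependencies.values() for up in ups} - set(steps)
    if unknown:
        raise ValueError(f"Unknown upstream steps: {sorted(unknown)}")

    graph = {name: set(dependencies.get(name, ())) for name in steps}
    status = {}
    # static_order() yields each step only after all of its upstream steps.
    for name in TopologicalSorter(graph).static_order():
        if any(status[up] != "success" for up in graph[name]):
            status[name] = "skipped"
            continue
        try:
            steps[name]()
            status[name] = "success"
        except Exception as exc:
            status[name] = "failed"
            notify(f"Step '{name}' failed: {exc}")
    return status


def failing_transform():
    """Hypothetical step that simulates a broken transformation."""
    raise RuntimeError("upstream file was empty")


if __name__ == "__main__":
    steps = {
        "ingest": lambda: None,
        "transform": failing_transform,
        "publish": lambda: None,
    }
    dependencies = {"transform": {"ingest"}, "publish": {"transform"}}
    print(run_pipeline(steps, dependencies))
```

In this example the ingest step succeeds, the transform step fails and triggers one notification, and the publish step is skipped instead of running on bad data. With independent schedulers, the publish step would have run regardless.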

ETL (Extract, Transform, and Load) focuses on moving and transforming data, but data orchestration is about making sure everything happens in the right sequence across all tools and systems. It’s the difference between just having the pieces of a puzzle and putting them together into a clear picture.

Without data orchestration, even the best tools operate in silos, creating delays, data fires, and unreliable insights.

Executives make many decisions but rarely have the time to dive into technical details. They delegate research and expect quick wins, which often leads to mixed messaging. Leaders want resilient, scalable, future-proof solutions, yet they also pressure teams to deliver “something now.” Vendors exploit this tension. They sell tools that solve one slice of the data value chain but rarely explain that their product won't fix the underlying fragmentation. Quick wins may ship, but the systemic problems remain.

Data orchestration removes this friction. When workflows are unified, adding steps to the data flow is straightforward, pipelines are predictable, and teams deliver high-quality data products faster and with far fewer surprises.

A major Datacoves customer summarized the difference clearly:

“Before, we had many data fires disrupting the organization. Now issues still occur, but we catch them immediately and prevent bad data from reaching stakeholders.”

Without orchestration, each new tool adds another blind spot. Teams don’t see failures until they hit downstream systems or show up in dashboards. This reactive posture creates endless rework, late-night outages, and a reputation problem with stakeholders.

With orchestration, failures surface early. Dependencies, quality checks, and execution paths are clear. Teams prevent incidents instead of reacting to them.
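As a rough illustration of a quality check that surfaces problems before data moves downstream, the sketch below validates a batch of rows and reports what is wrong. The function name `check_rows`, the field names, and the sample values are invented for this example. A real setup would more likely rely on the testing features of the tools already in the stack.

```python
def check_rows(rows, required_fields, min_rows=1):
    """Return a list of human-readable problems. An empty list means the batch passed."""
    problems = []
    if len(rows) < min_rows:
        problems.append(f"expected at least {min_rows} rows, got {len(rows)}")
    for index, row in enumerate(rows):
        for field in required_fields:
            if row.get(field) is None:
                problems.append(f"row {index}: missing value for '{field}'")
    return problems


if __name__ == "__main__":
    # Made-up sample batch: the second row is missing a value.
    batch = [
        {"state": "CA", "population": 100},
        {"state": "TX", "population": None},
    ]
    for problem in check_rows(batch, ["state", "population"]):
        print(problem)
```

An orchestrator would treat a non-empty result as a failed step, which keeps later steps from running on the incomplete batch.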

Data orchestration isn’t just about automation; it’s about governance.

It ensures:

This visibility dramatically improves trust. Stakeholders no longer get “chocolate cake” made with spoiled milk. A new tool may bake faster, but if upstream data is broken, the final product is still compromised.

Orchestration ensures the entire value chain is healthy, not just one ingredient.

Modern data teams rely heavily on tools like dbt and Airflow, but these tools do not magically align themselves. Without orchestration:

With orchestration in place, ingestion, dbt scheduling, and activation become reliable, governed, and transparent, ensuring every step runs at the right time, in the right order, with the right dependencies. Learn more in our guide on the difference between dbt Cloud vs dbt Core.

For more details on how dbt schedules and runs models, see the official dbt documentation.

To learn how Airflow manages task dependencies and scheduling, visit the official Apache Airflow documentation.

It is tempting to postpone data orchestration until the weight of data problems makes it unavoidable. Even the best tools and talented teams can struggle without a clear orchestration strategy. When data processes aren’t coordinated, organizations face inefficiencies, errors, and lost opportunities.

Implementing data orchestration early reduces hidden technical debt, prevents rework, and helps teams deliver trusted insights faster.

When data pipelines rely on multiple systems that don’t communicate well, teams spend extra time manually moving data, reconciling errors, and firefighting issues. This slows decision-making and increases operational costs.

Common symptoms of fragmented tools include:

Many organizations focus on a “quick wins” approach, only to discover that the cost of moving fast is a long-term lack of agility. This approach may deliver immediate results, but it leads to technical debt, wasted spend, and fragile data processes that are hard to scale. A great example is Data Drive’s journey. Before adding data orchestration, they had to spend time debugging each step of their disconnected process whenever an issue occurred. Now it is clear where an issue has occurred, enabling them to resolve issues faster for their stakeholders.

As organizations grow, the absence of orchestration forces teams to revisit and fix processes repeatedly. Embedding orchestration from the start avoids repeated firefighting, accelerates innovation, and makes scaling smoother. Improving one step alone cannot deliver the desired outcome, just like a single egg cannot make a cake.

Organizations without data orchestration are effectively flying blind. Disconnected processes run out of order and issues are discovered by frustrated stakeholders. Resource-constrained data teams spend their time firefighting instead of delivering new insights. The result is delays in decision-making, higher operating costs, and an erosion of trust in data.

If data orchestration is so important, why do organizations go without it? We often hear some common objections:

Many organizations have not heard of data orchestration and tool vendors rarely highlight this need. It’s only after a painful experience that they realize this essential need.

It’s true that data orchestration adds another layer, but without it, you have disconnected, siloed processes. The real cost comes from chaos, not from coordination.

Vendor sprawl can indeed introduce additional risks. That is why all-in-one platforms like Datacoves reduce integration overhead by bundling enterprise-grade orchestration, like Airflow, without increasing vendor lock-in. Explore Datacoves’ Integrated Orchestration Platform.

Data value chains are inherently complex, with multiple data sources, ingestion processes, transformations, and data consumers. Data orchestration does not introduce complexity; it provides visibility and control over this complexity.

It may seem reasonable to postpone data orchestration in the short term. But every mature data organization, both large and small, eventually needs to scale. By building data orchestration into the data platform from the start, you set up your teams for success, reduce firefighting, and avoid costly and time-consuming rework. Most importantly, the business receives trustworthy insights faster.

Implementing data orchestration doesn’t have to be complicated. The key is to approach it strategically, ensuring that every process is aligned, visible, and scalable.

Begin by mapping your existing data processes and identifying where inefficiencies or risks exist. Knowing exactly how data flows across teams and tools allows you to prioritize the areas that will benefit most from orchestration.
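One lightweight way to start the mapping exercise is to write the flows down as a dependency map and ask what sits downstream of each step. The sketch below assumes a hypothetical `dependencies` dictionary (step name to the set of steps it reads from) and a made-up helper `downstream_of`. It is a starting point for discussion, not a lineage tool.

```python
from collections import deque


def downstream_of(step, dependencies):
    """Return every step that directly or indirectly depends on `step`."""
    # Invert the map: upstream step -> steps that read from it.
    children = {}
    for name, upstreams in dependencies.items():
        for upstream in upstreams:
            children.setdefault(upstream, set()).add(name)

    seen = set()
    queue = deque([step])
    while queue:
        current = queue.popleft()
        for child in children.get(current, ()):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return seen


if __name__ == "__main__":
    # Hypothetical flow: one ingestion feeds a transform used by two consumers.
    dependencies = {
        "transform": {"ingest"},
        "dashboard": {"transform"},
        "export": {"transform"},
    }
    print(sorted(downstream_of("ingest", dependencies)))
```

Steps with the largest downstream sets are usually the ones where a silent failure does the most damage, which makes them reasonable first candidates for orchestration.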

Key outcomes:

Focus first on automating repetitive and error-prone steps such as data collection, cleaning, and routing. Automation reduces manual effort, frees up your team for higher-value work, and ensures processes run consistently.

Key outcomes:

Implement dashboards or monitoring tools that provide executives and teams with real-time visibility into data flows. Early detection of errors prevents costly mistakes and increases confidence in the insights being delivered.
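A simple form of this visibility is a freshness check: flag any pipeline whose last successful run is older than an agreed threshold. The sketch below is a minimal illustration. The helper `stale_pipelines` and the pipeline names are hypothetical, and it assumes that timezone-aware timestamps of the last successful runs are already available from whatever scheduler is in use.

```python
from datetime import datetime, timedelta, timezone


def stale_pipelines(last_success, max_age, now=None):
    """Return the names of pipelines whose last success is older than max_age."""
    now = now or datetime.now(timezone.utc)
    return sorted(
        name
        for name, finished_at in last_success.items()
        if now - finished_at > max_age
    )


if __name__ == "__main__":
    reference = datetime(2025, 1, 2, 12, 0, tzinfo=timezone.utc)
    # Made-up timestamps for two hypothetical pipelines.
    last_success = {
        "ingest": reference - timedelta(hours=1),
        "transform": reference - timedelta(hours=30),
    }
    print(stale_pipelines(last_success, timedelta(hours=24), now=reference))
```

The same list could feed an alert or a dashboard tile, so that stale data is noticed by the data team before a stakeholder reports it.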

Key outcomes:

Start small with high-impact processes and expand orchestration across more workflows over time. Scaling gradually ensures that teams adopt the changes effectively and that processes remain manageable as data volume grows.

Key outcomes:

Select tools that integrate well with your existing systems and provide flexibility for future growth. Airflow is a popular orchestration tool and is often paired with dbt for transformations, but the best choice depends on your organization’s specific workflows and needs. Explore how these capabilities come packaged in the Datacoves Platform Features overview.

Key outcomes:

Investing in data orchestration delivers tangible business value. Organizations that implement orchestration gain efficiency, reliability, and confidence in their decision-making.

Data orchestration reduces manual work, prevents duplicated efforts, and streamlines processes. Teams can focus on higher-value initiatives instead of firefighting data issues.

With coordinated workflows and monitoring, executives and stakeholders can trust the data they rely on. Decisions are backed by accurate, timely, and actionable insights.

By embedding data orchestration early, organizations avoid expensive rework, reduce errors, and prevent the accumulation of technical debt from ad hoc solutions.

Data orchestration ensures that data pipelines scale smoothly as the organization grows. Teams can launch new analytics initiatives faster, confident that their underlying processes are robust and repeatable.

Executives gain a clear view of the entire data lifecycle, enabling better oversight, risk management, and strategic planning.

Data orchestration should not be seen as a “nice to have” feature that can be postponed. Mature organizations understand that it is the foundation needed to deliver trusted insights faster. Without it, companies risk siloed tools, more data firefighting, and eroding trust in both the data and the data team. With it, organizations gain visibility, agility, and the confidence that insights fueling decisions are accurate.

The real question for strategic leaders is whether to try to piece together disconnected solutions, focusing only on short-term wins, or invest in data orchestration early and unlock the full potential of a connected ecosystem.

For executives, prioritizing data orchestration will mean fewer data fires, accelerated innovation, and an environment where trusted insights flow as reliably as the business demands.

To see how orchestration is built into the Datacoves platform, visit our Integrated Orchestration page.

Don’t wait until complexity forces your hand. Your team deserves to move faster and fight fewer fires.

Book a personalized demo to see how data orchestration with Datacoves helps leaders unlock value from day one.

The Databricks AI Summit 2025 revealed a major shift toward simpler, AI-ready, and governed data platforms. From no-code analytics to serverless OLTP and agentic workflows, the announcements show Databricks is building for a unified future.

In this post, we break down the six most impactful features announced at the summit and what they mean for the future of data teams.

Databricks One (currently in private preview) introduces a no-code analytics platform aimed at democratizing access to insights across the organization. Powered by Genie, users can now interact with business data through natural language Q&A, no SQL or dashboards required. By lowering the barrier to entry, tools like Genie can drive better, faster decision-making across all functions.

Datacoves Take: As with any AI we have used to date, having a solid foundation is key. AI cannot solve ambiguous metrics and a lack of knowledge. As we have mentioned, there are some dangers in trusting AI, and these caveats still exist.

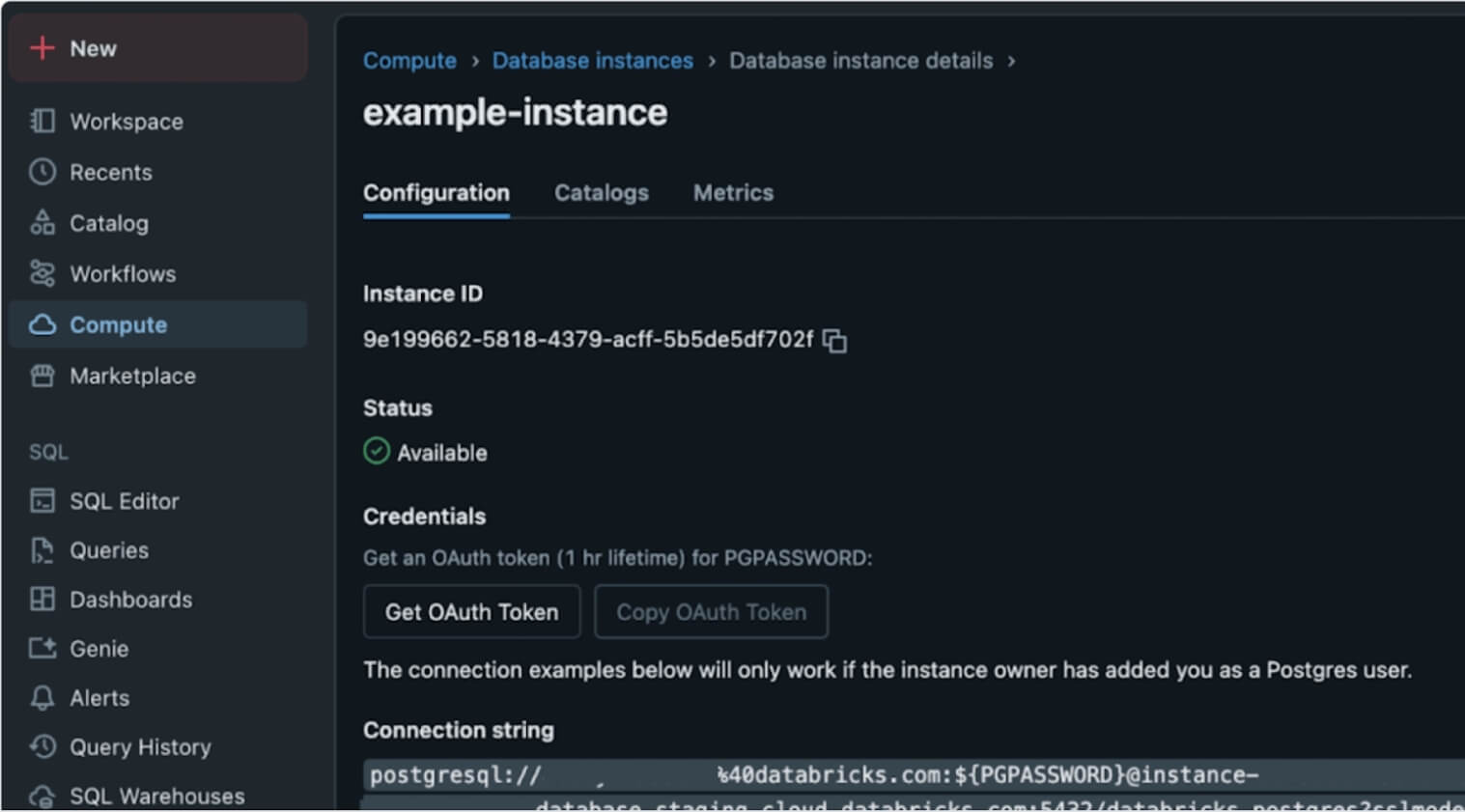

In a bold move, Databricks launched Lakebase, a Postgres-compatible, serverless OLTP database natively integrated into the lakehouse. Built atop the foundations laid by the NeonDB acquisition, Lakebase reimagines transactional workloads within the unified lakehouse architecture. This is more than just a database release; it’s a structural shift that brings transactional (OLTP) and analytical (OLAP) workloads together, unlocking powerful agentic and AI use cases without architectural sprawl.

Datacoves Take: We see both Databricks and Snowflake integrating Postgres into their offerings. DuckLake is also demonstrating a simpler future for Iceberg catalogs. Postgres has a strong future ahead, and the unification of OLAP and OLTP seems certain.

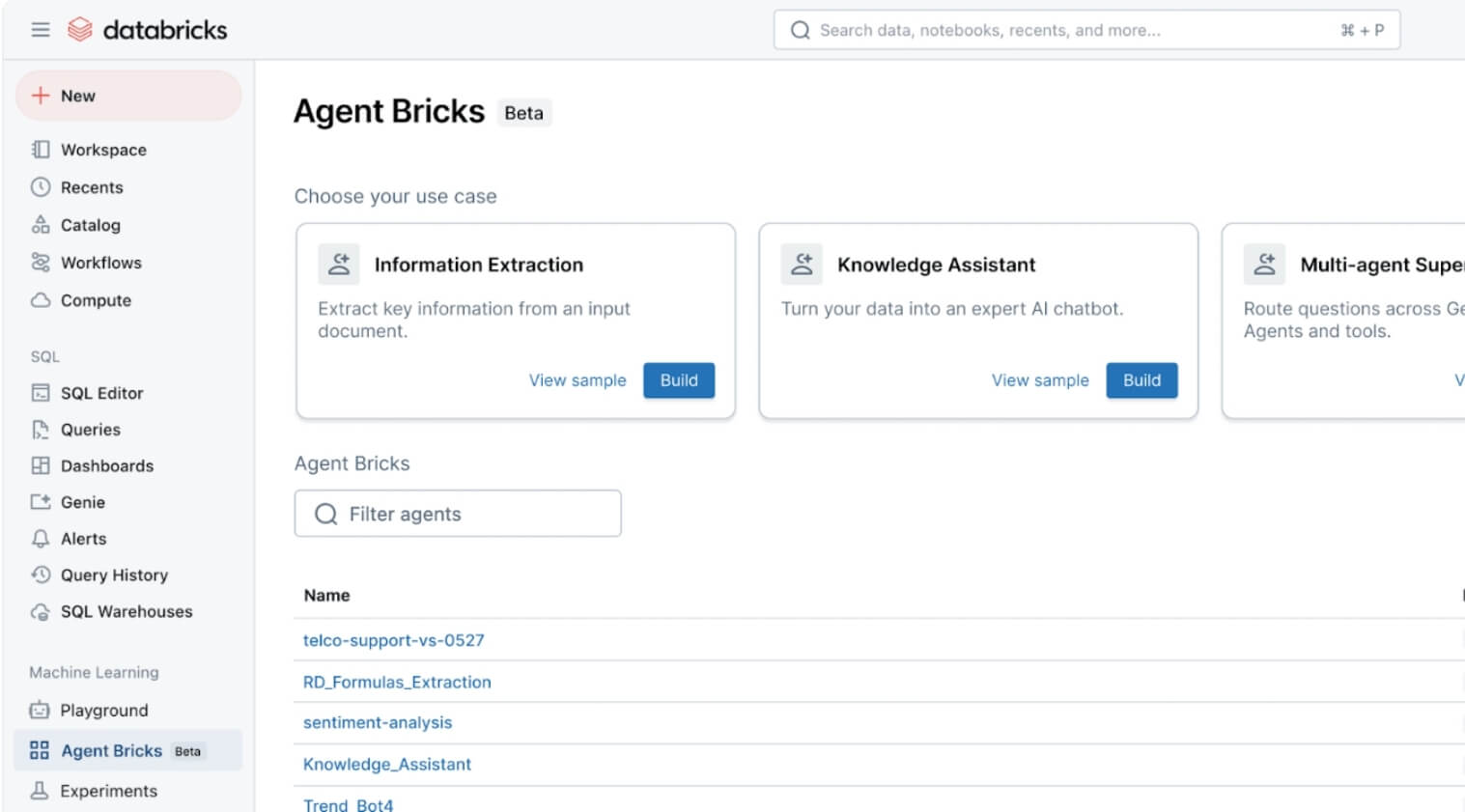

With the introduction of Agent Bricks, Databricks is making it easier to build, evaluate, and operationalize agents for AI-driven workflows. What sets this apart is the use of built-in “judges” - LLMs that automatically assess agent quality and performance. This moves agents from hackathon demos into the enterprise spotlight, giving teams a foundation to develop production-grade AI assistants grounded in company data and governance frameworks.

Datacoves Take: This looks interesting, and the key here still lies in having a strong data foundation with good processes. Reproducibility is also key. Testing and proving that the right actions are performed will be important for any organization implementing this feature.

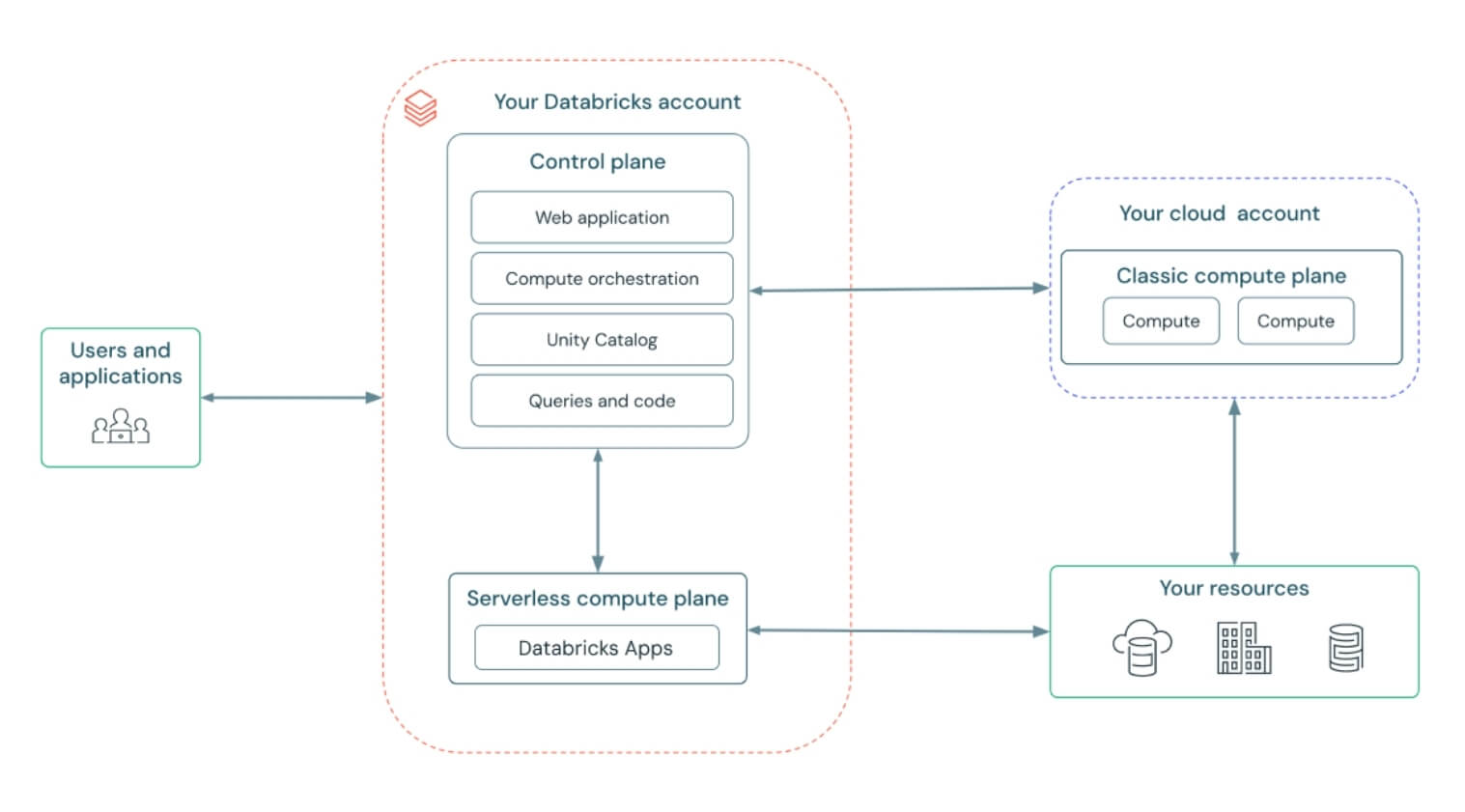

Databricks introduced Databricks Apps, allowing developers to build custom user interfaces that automatically respect Unity Catalog permissions and metadata. A standout demo showed glossary terms appearing inline inside Chrome, giving business users governed definitions directly in the tools they use every day. This bridges the gap between data consumers and governed metadata, making governance feel less like overhead and more like embedded intelligence.

Datacoves Take: Metadata and catalogs are important for AI, so we see both Databricks and Snowflake investing in this area. As with any of these changes, technology is not the only change needed in the organization. Change management is also important. Without proper stewardship, ownership, and review processes, apps can’t provide the experience promised.

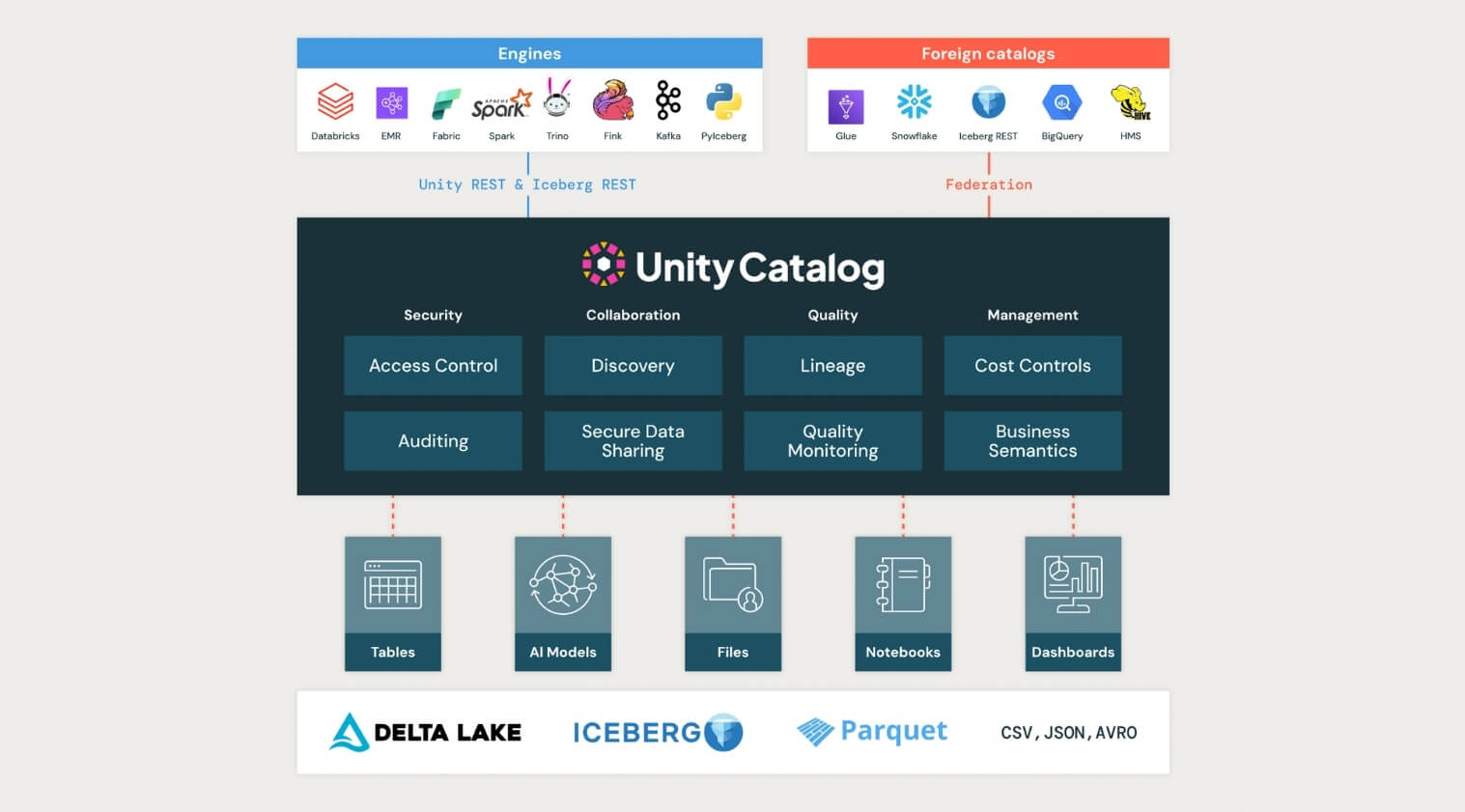

Unity Catalog took a major step forward at the Databricks AI Summit 2025, now supporting managed Apache Iceberg tables, cross-engine interoperability, and introducing Unity Catalog Metrics to define and track business logic across the organization.

This kind of standardization is critical for teams navigating increasingly complex data landscapes. By supporting both Iceberg and Delta formats, enabling two-way sync, and contributing to the open-source ecosystem, Unity Catalog is positioning itself as the true backbone for open, interoperable governance.

Datacoves Take: The Iceberg data format has the momentum behind it; now it is up to the platforms to enable true interoperability. Organizations are expecting a future where a table can be written and read from any platform. DuckLake is also getting in the game, simplifying how metadata is managed and how multi-table transactions are enabled. It will be interesting to see if Unity and Polaris take some of the DuckLake learnings and integrate them in the next few years.

In a community-building move, Databricks introduced a forever-free edition of the platform and committed $100 million toward AI and data training. This massive investment creates a pipeline of talent ready to use and govern AI responsibly. For organizations thinking long-term, this is a wake-up call: governance, security, and education need to scale with AI adoption, not follow behind.

Datacoves Take: This feels like a good way to get more people to try Databricks without a big commitment. Hopefully, competitors take note and do the same. This will benefit the entire data community.

Read the full post from Databricks here:

https://www.databricks.com/blog/summary-dais-2025-announcements-through-lens-games

With tools like Databricks One and Genie enabling no-code, natural language analytics, data leaders must prioritize making insights accessible beyond technical teams to drive faster, data-informed decisions at every level.

Lakebase’s integration of transactional and analytical workloads signals a move toward simpler, more efficient data stacks. Leaders should rethink their architectures to reduce complexity and support real-time, AI-driven applications.

Agent Bricks and built-in AI judges highlight the shift from experimental AI agents to production-ready, measurable workflows. Data leaders need to invest in frameworks and governance to safely scale AI agents across use cases.

Unity Catalog’s expanded support for Iceberg, Delta, and cross-engine interoperability emphasizes the need for unified governance frameworks that handle diverse data formats while maintaining business logic and compliance.

The launch of a free tier and $100M training fund underscores the growing demand for skilled data and AI practitioners. Data leaders should plan for talent development and operational readiness to fully leverage evolving platforms.

The Databricks AI Summit 2025 signals a fundamental shift: from scattered tools and isolated workflows to unified, governed, and AI-native platforms. It’s not just about building smarter systems; it’s about making those systems accessible, efficient, and scalable for the entire organization.

While these innovations are promising, putting them into practice takes more than vision; it requires infrastructure that balances speed, control, and usability.

That’s where Datacoves comes in.

Our platform accelerates the adoption of modern tools like dbt, Airflow, and emerging AI workflows, without the overhead of managing complex environments. We help teams operationalize best practices from day one, reducing total cost of ownership while enabling faster delivery, tighter governance, and AI readiness at scale. Datacoves supports Databricks, Snowflake, BigQuery, and any data platform with a dbt adapter. We believe in an open and interoperable future where tools are integrated without increasing vendor lock-in. Talk to us to find out more.

Want to learn more? Book a demo with Datacoves.

Large Language Models (LLMs) like ChatGPT and Claude are becoming common in modern data workflows. From writing SQL queries to summarizing dashboards, they offer speed and support across both technical and non-technical teams. But as organizations begin to rely more heavily on these tools, the risks start to surface.

The dangers of AI are not in what it cannot do, but in what it does too confidently. LLMs are built to sound convincing, even when the information they generate is inaccurate or incomplete. In the context of data analytics, this can lead to hallucinated metrics, missed context, and decisions based on misleading outputs. Without human oversight, these issues can erode trust, waste time, and create costly setbacks.

This article explores where LLMs tend to go wrong in analytics workflows and why human involvement remains essential. Drawing from current industry perspectives and real-world examples, we will look at how to use LLMs effectively without sacrificing accuracy, accountability, or completeness.

LLMs like ChatGPT and Claude are excellent at sounding smart. That’s the problem.

They’re built to generate natural-sounding language, not truth. So, while they may give you a SQL query or dashboard summary in seconds, that doesn’t mean it’s accurate. In fact, many LLMs can hallucinate metrics, invent dimensions, or produce outputs that seem plausible but are completely wrong.

You wouldn’t hand over your revenue targets to someone who “sounds confident,” right? So why are so many teams doing that with AI?

Here's the challenge. Even experienced data professionals can be fooled. The more fluent the output, the easier it is to miss the flaws underneath.

This isn’t just theory. Castor warns that “most AI-generated queries will work, but they won’t always be right.” That tiny gap between function and accuracy is where risk lives.
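To make the gap between "works" and "right" concrete, here is a minimal sketch using Python's built-in `sqlite3` module. The `orders` and `order_items` tables and their values are hypothetical, invented only for illustration. The first query is the kind of plausible-looking SQL an assistant might produce: it runs without error, but the join repeats each order once per line item, so revenue is overstated.

```python
import sqlite3

# Hypothetical schema and data, used only to illustrate the failure mode.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE orders (order_id INTEGER PRIMARY KEY, amount REAL);
CREATE TABLE order_items (order_id INTEGER, sku TEXT);
INSERT INTO orders VALUES (1, 100.0), (2, 50.0);
INSERT INTO order_items VALUES (1, 'A'), (1, 'B'), (2, 'C');
""")

# Runs cleanly, but order 1 has two items, so its amount is counted twice.
plausible_total = conn.execute(
    "SELECT SUM(o.amount) "
    "FROM orders o JOIN order_items i ON o.order_id = i.order_id"
).fetchone()[0]

# Summing at the order grain gives the real figure.
correct_total = conn.execute("SELECT SUM(amount) FROM orders").fetchone()[0]

print(plausible_total, correct_total)  # prints: 250.0 150.0
```

Nothing in the first query signals a problem. Only someone who knows the grain of each table would spot that the total is inflated.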

If you’re leading a data-driven team or making decisions based on LLM-generated outputs, these are the real risks you need to watch out for.

LLMs can fabricate filters, columns, and logic that don’t exist. In the moment, you might not notice, but if those false insights inform a slide for a board meeting or a product decision, the damage is done.

Here is an example in which ChatGPT was given an image and asked to point out the three differences between the two pictures, as illustrated below:

Here is the output from ChatGPT:

Here are the 3 differences between the two images:

Let me know if you want these highlighted visually!

When asked to highlight the differences, ChatGPT produced the following image:

As you can see, ChatGPT skewed the information and exaggerated the differences in the image. Only one of the three (the shirt stripe) was correct. It missed the differences in the sock and the hair, and it even changed the author in the copyright!
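The same kind of fabrication shows up in generated SQL as columns that do not exist. One cheap guard is to ask the database to plan a generated query before anyone runs or trusts it. The sketch below is an illustration, not a Datacoves feature: it uses SQLite as a stand-in for a warehouse, and the `sales` table and the helper name `check_sql_against_schema` are assumptions made for the example. It catches references to missing tables or columns, but it cannot catch logic that is valid SQL and still wrong.

```python
import sqlite3


def check_sql_against_schema(conn, sql):
    """Return (ok, message) by having SQLite plan the query without running it."""
    try:
        # EXPLAIN compiles the statement against the real schema,
        # so unknown tables or columns raise an error here.
        conn.execute("EXPLAIN " + sql)
        return True, "ok"
    except sqlite3.Error as exc:
        return False, str(exc)


# Hypothetical table: note there is no "region" column.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (order_id INTEGER, amount REAL, order_date TEXT)")

valid = check_sql_against_schema(conn, "SELECT SUM(amount) FROM sales")
fabricated = check_sql_against_schema(
    conn, "SELECT region, SUM(amount) FROM sales GROUP BY region"
)

print(valid)
print(fabricated)
```

A check like this belongs in review or CI alongside human sign-off. It filters out the obvious fabrications so reviewers can spend their attention on business logic.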

AI doesn’t know your KPIs, fiscal calendars, or market pressures. Business context still requires a human lens to interpret properly. Without that, you risk misreading what the data is trying to say.

AI doesn’t give sources or confidence scores. You often can’t tell where a number came from. Secoda notes that teams still need to double-check model outputs before trusting them in critical workflows.

One of the great things about LLMs is how accessible they are. But that also means anyone can generate analytics even if they don’t understand the data underneath. This creates a gap between surface-level insight and actual understanding.

Over-relying on LLMs can create more cleanup work than if a skilled analyst had done the job from the start, and that cleanup slows teams down. As we noted in Modern Data Stack Acceleration, true speed comes from workflows designed with governance and best practices.

If you’ve ever skimmed an LLM-generated response and thought, “That’s not quite right,” you already know the value of human oversight.

AI is fast, but it doesn’t understand what matters to your stakeholders. It can’t distinguish between a seasonal dip and a business-critical loss. And it won’t ask follow-up questions when something looks off.

Think of LLMs like smart interns. They can help you move faster, but they still need supervision. Your team’s expertise, your mental model of how data maps to outcomes, is irreplaceable. Tools like Datacoves embed governance throughout the data journey to ensure humans stay in the loop. Or as Scott Schlesinger says, “AI is the accelerator, not the driver.” It’s a reminder that human hands still need to stay on the wheel to ensure we’re heading in the right direction.

Datacoves helps teams enforce best practices around documentation, version control, and human-in-the-loop development. By giving analysts structure and control, Datacoves makes it easier to integrate AI tools without losing trust or accountability. Here are some examples where Datacoves’ integrated GenAI can boost productivity while keeping you in control.

Want to keep the speed of LLMs and the trust of your team? Here’s how to make that balance work.

AI can enhance analytics but should not be used blindly. LLMs bring speed, scale, and support, but without human oversight they can also introduce costly errors that undermine trust in decision-making.

Human judgment remains essential. It provides the reliability, context, and accountability that AI alone cannot deliver. Confidence doesn’t equal correctness, and sounding right isn’t the same as being right.

The best results come from collaboration between humans and AI. Use LLMs as powerful partners, not replacements. How is your team approaching this balance? Explore how platforms like Datacoves can help you create workflows that keep humans in the loop.

It is clear that Snowflake is positioning itself as an all-in-one platform—from data ingestion, to transformation, to AI. The announcements covered a wide range of topics, with AI mentioned over 60 times during the 2-hour keynote. While time will tell how much value organizations get from these features, one thing remains clear: a solid foundation and strong governance are essential to deliver on the promise of AI.

Conversational AI via natural language at ai.snowflake.com, powered by Anthropic/OpenAI LLMs and Cortex Agents, unifying insights across structured and unstructured data. Access is available through your account representative.

Datacoves Take: Companies with strong governance—including proper data modeling, clear documentation, and high data quality—will benefit most from this feature. AI cannot solve foundational issues, and organizations that skip governance will struggle to realize its full potential.

An AI companion for automating ML workflows—covering data prep, feature engineering, model training, and more.

Datacoves Take: This could be a valuable assistant for data scientists, augmenting rather than replacing their skills. As always, we'll be better able to assess its value once it's generally available.

Enables multimodal AI processing (like images, documents) within SQL syntax, plus enhanced Document AI and Cortex Search.

Datacoves Take: The potential here is exciting, especially for teams working with unstructured data. But given historical challenges with Document AI, we’ll be watching closely to see how this performs in real-world use cases.

No-code monitoring tools for generative AI apps, supporting LLMs from OpenAI (via Azure), Anthropic, Meta, Mistral, and others.

Datacoves Take: Observability and security are critical for LLM-based apps. We’re concerned that the current rush to AI could lead to technical debt and security risks. Organizations must establish monitoring and mitigation strategies now, before issues arise 12–18 months down the line.

Managed, extensible multimodal data ingestion service built on Apache NiFi with hundreds of connectors, simplifying ETL and change-data capture.

Datacoves Take: While this simplifies ingestion, GUI tools often hinder CI/CD and code reviews. We prefer code-first tools like DLT that align with modern software development practices. Note: Openflow requires additional AWS setup beyond Snowflake configuration.

Native dbt development, execution, monitoring with Git integration and AI-assisted code in Snowsight Workspaces.

Datacoves Take: While this makes dbt more accessible for newcomers, it’s not a full replacement for the flexibility and power of VS Code. Our customers rely on VS Code not just for dbt, but also for Python ingestion development, managing security as code, orchestration pipelines, and more. Datacoves provides an integrated environment that supports all of this—and more. See this walkthrough for details: https://www.youtube.com/watch?v=w7C7OkmYPFs

Read/write Iceberg tables via Open Catalog, dynamic pipelines, VARIANT support, and Merge-on-Read functionality.

Datacoves Take: Interoperability is key. Many of our customers use both Snowflake and Databricks, and Iceberg helps reduce vendor lock-in. Snowflake’s support for Iceberg with advanced features like VARIANT is a big step forward for the ecosystem.

Custom Git URLs, Terraform provider now GA, and Python 3.9 support in Snowflake Notebooks.

Datacoves Take: Python 3.9 is a good start, but we’d like to see support for newer versions. With PyPI integration, teams must carefully vet packages to manage security risks. Datacoves offers guardrails to help organizations scale Python workflows safely.

Define business metrics inside Snowflake for consistent, AI-friendly semantic modeling.

Datacoves Take: A semantic layer is only as good as the underlying data. Without solid governance, it becomes another failure point. Datacoves helps teams implement the foundations—testing, deployment, ownership—that make semantic layers effective.

Hardware and performance upgrades delivering ~2.1× faster analytics for updates, deletes, merges, and table scans.

Datacoves Take: Performance improvements are always welcome, especially when easy to adopt. Still, test carefully—these upgrades can increase costs, and in some cases existing warehouses may still be the better fit.

Free, automated migration of legacy data warehouses, BI systems, and ETL pipelines with code conversion and validation.

Datacoves Take: These tools are intriguing, but migrating platforms is a chance to rethink your approach—not just lift and shift legacy baggage. Datacoves helps organizations modernize with intention.

Enrich native apps with real-time content from publishers like USA TODAY, AP, Stack Overflow, and CB Insights.

Datacoves Take: Powerful in theory, but only effective if your core data is clean. Before enrichment, organizations must resolve entities and ensure quality.