Large Language Models (LLMs) like ChatGPT and Claude are becoming common in modern data workflows. From writing SQL queries to summarizing dashboards, they offer speed and support across both technical and non-technical teams. But as organizations begin to rely more heavily on these tools, the risks start to surface.

The dangers of AI are not in what it cannot do, but in what it does too confidently. LLMs are built to sound convincing, even when the information they generate is inaccurate or incomplete. In the context of data analytics, this can lead to hallucinated metrics, missed context, and decisions based on misleading outputs. Without human oversight, these issues can erode trust, waste time, and create costly setbacks.

This article explores where LLMs tend to go wrong in analytics workflows and why human involvement remains essential. Drawing from current industry perspectives and real-world examples, we will look at how to use LLMs effectively without sacrificing accuracy, accountability, or completeness.

LLMs like ChatGPT and Claude are excellent at sounding smart. That’s the problem.

They’re built to generate natural-sounding language, not truth. So, while they may give you a SQL query or dashboard summary in seconds, that doesn’t mean it’s accurate. In fact, many LLMs can hallucinate metrics, invent dimensions, or produce outputs that seem plausible but are completely wrong.

You wouldn’t hand over your revenue targets to someone who “sounds confident,” right? So why are so many teams doing that with AI?

Here's the challenge. Even experienced data professionals can be fooled. The more fluent the output, the easier it is to miss the flaws underneath.

This isn’t just theory. Castor warns that “most AI-generated queries will work, but they won’t always be right.” That tiny gap between function and accuracy is where risk lives.

If you’re leading a data-driven team or making decisions based on LLM-generated outputs, these are the real risks you need to watch out for.

LLMs can fabricate filters, columns, and logic that don’t exist. In the moment, you might not notice, but if those false insights inform a slide for a board meeting or a product decision, the damage is done.

Here is an example in which ChatGPT was given an image and asked to point out the three differences between the two pictures, as illustrated below:

Here is the output from ChatGPT:

Here are the 3 differences between the two images:

Let me know if you want these highlighted visually!

When asked to highlight the differences, ChatGPT produced the following image:

As you can see, ChatGPT skewed the information and exaggerated the differences in the image. Only one of the three (the shirt stripe) was correct. It missed the differences in the sock and the hair, and it even changed the author in the copyright!
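Fabricated columns are one kind of hallucination that can be caught mechanically before a human reviews the logic. The sketch below is a minimal illustration, not a complete safeguard: it compiles a generated query against an empty in-memory SQLite copy of the schema and reports any table or column that does not resolve. The `orders` schema, both queries, and the helper name `check_query_against_schema` are hypothetical, and SQLite's dialect will differ from your warehouse's.

```python
import sqlite3
from typing import Tuple


def check_query_against_schema(schema_ddl: str, query: str) -> Tuple[bool, str]:
    """Compile `query` against an empty in-memory copy of the schema.

    Returns (True, "") if every table and column resolves, otherwise
    (False, <SQLite error message>). This only catches references to
    objects that do not exist; it says nothing about business logic.
    """
    conn = sqlite3.connect(":memory:")
    try:
        conn.executescript(schema_ddl)
        # EXPLAIN makes SQLite compile the statement without running the query itself
        conn.execute(f"EXPLAIN {query}")
        return True, ""
    except sqlite3.Error as exc:
        return False, str(exc)
    finally:
        conn.close()


# Hypothetical schema and queries, for illustration only
SCHEMA = (
    "CREATE TABLE orders ("
    "order_id INTEGER, customer_id INTEGER, amount REAL, order_date TEXT);"
)
good_query = "SELECT customer_id, SUM(amount) FROM orders GROUP BY customer_id"
hallucinated_query = "SELECT region, SUM(net_revenue) FROM orders GROUP BY region"

ok_good, _ = check_query_against_schema(SCHEMA, good_query)
ok_bad, error = check_query_against_schema(SCHEMA, hallucinated_query)
print(ok_good, ok_bad, error)
```

A query that passes this check can still be wrong: it may join on the wrong key or apply the wrong filter. The check narrows what a reviewer has to look at; it does not replace the review.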

AI doesn’t know your KPIs, fiscal calendars, or market pressures. Business context still requires a human lens to interpret properly. Without that, you risk misreading what the data is trying to say.

AI doesn’t give sources or confidence scores. You often can’t tell where a number came from. Secoda notes that teams still need to double-check model outputs before trusting them in critical workflows.

One of the great things about LLMs is how accessible they are. But that also means anyone can generate analytics even if they don’t understand the data underneath. This creates a gap between surface-level insight and actual understanding.

Over-relying on LLMs often creates more cleanup work than if a skilled analyst had done the job from the start, and that cleanup slows teams down. As we noted in Modern Data Stack Acceleration, true speed comes from workflows designed with governance and best practices.

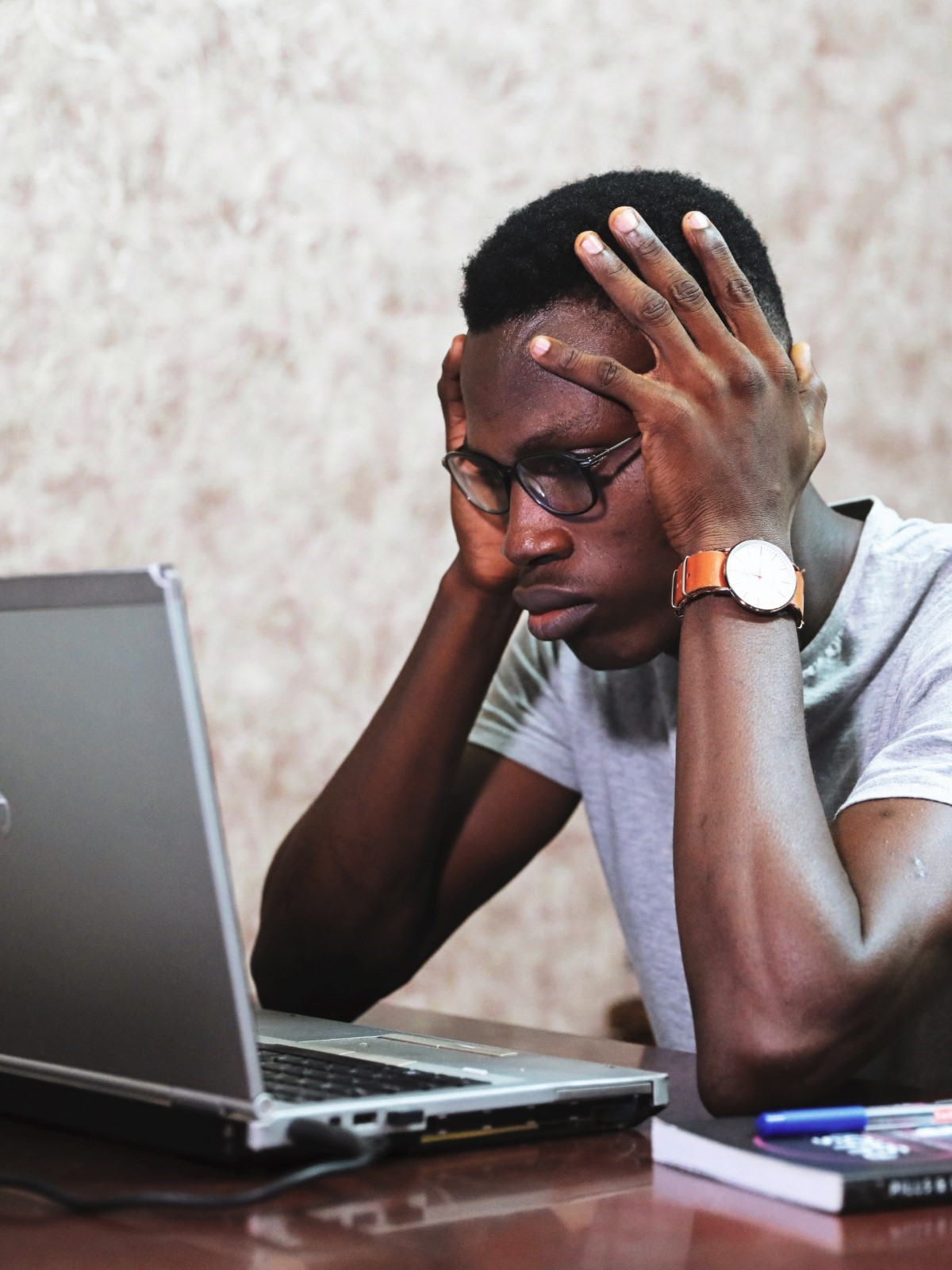

If you’ve ever skimmed an LLM-generated response and thought, “That’s not quite right,” you already know the value of human oversight.

AI is fast, but it doesn’t understand what matters to your stakeholders. It can’t distinguish between a seasonal dip and a business-critical loss. And it won’t ask follow-up questions when something looks off.

Think of LLMs like smart interns. They can help you move faster, but they still need supervision. Your team’s expertise, your mental model of how data maps to outcomes, is irreplaceable. Tools like Datacoves embed governance throughout the data journey to ensure humans stay in the loop. Or as Scott Schlesinger says, “AI is the accelerator, not the driver.” It’s a reminder that human hands still need to stay on the wheel to ensure we’re heading in the right direction.

Datacoves helps teams enforce best practices around documentation, version control, and human-in-the-loop development. By giving analysts structure and control, Datacoves makes it easier to integrate AI tools without losing trust or accountability. Here are some examples where Datacoves’ integrated GenAI can boost productivity while keeping you in control.

Want to keep the speed of LLMs and the trust of your team? Here’s how to make that balance work.

AI can enhance analytics but should not be used blindly. LLMs bring speed, scale, and support, but without human oversight they can also introduce costly errors that undermine trust in decision-making.

Human judgment remains essential. It provides the reliability, context, and accountability that AI alone cannot deliver. Confidence doesn’t equal correctness, and sounding right isn’t the same as being right.

The best results come from collaboration between humans and AI. Use LLMs as powerful partners, not replacements. How is your team approaching this balance? Explore how platforms like Datacoves can help you create workflows that keep humans in the loop.


The world of data moves at a lightning-fast pace, and you may be looking to keep up by migrating your data to a modern infrastructure. As you plan your data migration, you’ll quickly see the many moving parts involved, from data compatibility and security to performance optimization. Choosing the right partner is critical: making the wrong choice can lead to data loss or corruption, compliance failures, project delays, hidden costs, and more. At worst, you could end up with a costly new process that fails to gain user adoption! This article provides 10 key factors to consider in a partner to ensure these pitfalls don’t happen to you, guiding you toward a smooth and successful migration. Let’s dive in!

Data migration is the process of moving data pipelines from one platform to another. This process can include upgrading or replacing legacy platforms, performing critical maintenance, or transitioning to new infrastructure such as a cloud platform. Whether it's moving data to a modern data center or migrating workloads to the cloud, data migration is a pivotal undertaking that demands meticulous planning and execution.

Organizations may embark on this complex journey for many reasons. A common driver is the need to modernize and adopt cutting-edge solutions like cloud platforms such as Snowflake, which offer unparalleled scalability, performance, and the flexibility of ephemeral resources. Data migration may also be necessitated by mergers and acquisitions, where consolidating and standardizing data across multiple systems becomes essential for unified operations. Additionally, organizations might pursue migration to improve security, streamline workflows, or boost analytics capabilities.

Done right, data migration can be transformative, enhancing data usage and enabling organizations to unlock new opportunities for efficiency, deeper insights, and strategic growth.

Migrating data is a complex undertaking with many moving parts that vary based on your current system and the target system. Careful assessment of your current state and your desired future state is a critical step that should never be overlooked in this planning process. Key considerations include data security, optimizing configurations in the new environment, and transitioning existing pipelines seamlessly. Joe Reis and Matt Housley often emphasize that much of data engineering revolves around "plumbing"—the foundational connections and data flows—which must be meticulously managed for any successful migration.

A lift-and-shift approach, where pipelines are simply moved without modifications, should be avoided as much as possible. This method often undermines the purpose of migrating in the first place: to capitalize on modern features and enhancements offered by newer tools, such as dbt, to improve data quality, documentation, and impact analysis. Moving to dbt without re-thinking how data is cleansed and transformed can lead to outcomes that are worse than your current state such as increased compute costs and difficulty in debugging issues.

Given these complexities, detailed planning, skilled execution, prioritization, decommissioning unused assets, and effective risk management are crucial for a successful migration. Achieving this demands experienced professionals who can execute flawlessly while remaining adaptable to unexpected challenges.

As we have seen above, there are many complexities when it comes to data migration, making the selection of the right partner paramount. Choosing the wrong partner can potentially lead to longer implementation times, hidden costs, project failure, compliance failures, data loss and corruption, and lost opportunity costs. Let’s discuss each of these in a little more detail.

Inexperienced partners can cause significant delays due to suboptimal choices in planning, technology selection, and execution. These inefficiencies can lead to frequent setbacks, resource mismanagement, and potential catastrophic roadblocks. Prolonged implementation timelines may also result in missed opportunities to capture market value and reduce time-to-insight, while eroding trust in a system that has yet to be fully implemented.

Hiring the wrong partner often results in unforeseen costs due to extended project timelines as mentioned above, poor resource allocation, and the need for rework when initial efforts fall short. These hidden costs may include increased labor expenses, additional technology investments to rectify poor initial solutions, and higher costs associated with resolving data security or compliance issues. Budget overruns and unexpected expenses from lack of foresight, poor risk management, and inefficiency can quickly erode ROI.

A poorly executed data migration can lead to a new process that underperforms, costs more, or fails to gain user adoption. When users reject a poorly implemented system, organizations may be forced to maintain legacy systems, further compounding costs and delaying innovation. Worse still, critical data may be unusable or inconsistent, undermining trust in data-driven initiatives.

Hiring the right partner is essential for ensuring compliance with data regulations, industry standards, and security best practices. Without expertise in these areas, there is a heightened risk of data breaches, non-compliance fines, and reputational damage due to mishandling sensitive information. Such failures can lead to costly legal ramifications, operational downtime, and diminished customer trust.

Inadequate planning, testing, or execution can result in the loss or corruption of critical data during migration. Poor data management practices, such as insufficient backups, improper mapping of data fields, or inadequate validation procedures, can compromise data integrity and create gaps in your data sets. Data loss and corruption can disrupt business operations, degrade analytics capabilities, and require extensive rework to correct.
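One common validation step is to compare row counts and content checksums between the source and the migrated table. The sketch below is a minimal illustration under simplifying assumptions: the rows are small in-memory tuples, the sample data is hypothetical, and `table_fingerprint` is an illustrative helper rather than part of any migration tool.

```python
import hashlib


def table_fingerprint(rows):
    """Return (row_count, digest) for an iterable of row tuples.

    Each row is hashed on its own and the hashes are sorted before being
    combined, so the result does not depend on the order of the rows.
    """
    row_hashes = sorted(
        hashlib.sha256(repr(row).encode("utf-8")).hexdigest() for row in rows
    )
    combined = hashlib.sha256("".join(row_hashes).encode("utf-8")).hexdigest()
    return len(row_hashes), combined


# Hypothetical sample data, for illustration only
source_rows = [(1, "alice", 120.0), (2, "bob", 75.5), (3, "carol", 10.0)]
migrated_ok = [(3, "carol", 10.0), (1, "alice", 120.0), (2, "bob", 75.5)]
migrated_bad = [(1, "alice", 120.0), (2, "bob", 75.0)]  # one row lost, one value changed

matches_ok = table_fingerprint(source_rows) == table_fingerprint(migrated_ok)
matches_bad = table_fingerprint(source_rows) == table_fingerprint(migrated_bad)
print(matches_ok, matches_bad)
```

Because the fingerprint hashes each value's Python representation, a type change between systems (for example, `120` versus `120.0`) shows up as a mismatch. That strictness can be useful, but in practice values are usually normalized to an agreed format before hashing, and large tables are compared inside the databases rather than in application memory.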

Choosing the wrong partner can lead to missed opportunities for optimizing data processes, modernizing workflows, and unlocking valuable business insights. Every moment spent fixing issues or addressing inefficiencies due to poor implementation represents lost time that could have been invested in enhancing data quality, streamlining operations, and driving strategic initiatives. This opportunity cost is often overlooked but can be the difference between gaining a competitive edge and falling behind.

Datacoves does not perform data migrations, but we often see customers hire partners to do this work as they implement our platform. Through our experience, we have compiled a list of 10 key factors to consider when selecting a data migration partner. Carefully evaluating these factors can significantly increase the likelihood of success for your data migration plan and ensure a smoother overall process.

When selecting a data migration partner, it’s crucial to thoroughly review their case studies, references, and client testimonials. Focus on case studies that feature companies with similar starting points and objectives to your own. Approach client testimonials with a discerning eye and validate their claims by contacting references. This is an excellent opportunity to determine whether the partner is merely focused on checking tasks off a to-do list or genuinely dedicated to setting things up correctly the first time, with a passion for leaving your organization in a strong position. While this may seem like a considerable effort, such diligence is essential for investing in your data’s success and ensuring the partner can deliver on their promises.

Building on the importance of a proven track record from above, this factor emphasizes the need for technical depth. Verify that your potential partner is proficient in overarching data terminology and best practices, with deep familiarity in areas such as data architecture, data modeling, data governance, data integration, and security protocols. A qualified data partner must have the expertise necessary to successfully guide you through every phase of your data migration. Skipping this crucial step can lead to poorly structured data, compromised system performance, and numerous missed opportunities for optimization.

Project management and communication are often overlooked when selecting a data migration partner, yet they play a critical role in ensuring a successful project. When evaluating potential partners, consider asking the following questions to assess their project management and communication capabilities:

This is by no means an exhaustive list of questions but rather a great starting point. The right partner should feel like a leader rather than a liability, demonstrating their expertise in a proactive manner. This ensures you don’t have to constantly direct their work but can trust them to drive the project forward effectively.

A common theme for a successful partnership is deep expertise, and this is especially true for industry-specific knowledge. Every industry has its unique challenges and pitfalls when it comes to data. It is important to seek out partners who are experts in your industry and have a proven track record of successfully guiding similar organizations to their goals. For example, if your organization operates within the Health and Life Sciences sector, a partner with experience exclusively in Retail may lack the nuanced understanding required for your specific data needs, such as handling PII data, adhering to stringent regulatory compliance, or managing complex clinical trial data. While industry familiarity shouldn’t necessarily be a dealbreaker for every organization, it can be critical for sectors like Health and Life Sciences due to their high regulatory demands. Other industries may find it less restrictive, which is why it remains a key factor to consider when finding the right fit. See how Datacoves helped J&J achieve a 66% reduction in data processing with their Modern Data Platform, best practices, and accelerators.

A partner's ability to minimize downtime, prevent data loss, and mitigate security risks throughout the migration process is essential to avoiding catastrophic consequences such as prolonged system outages, data breaches, or compliance failures. A comprehensive risk mitigation strategy ensures that every aspect of your data migration is thoughtfully planned and executed with contingencies in place. Ask potential partners how they approach risk assessment, what protocols they follow to maintain data integrity, and how they handle unexpected issues. The right partner will proactively identify potential risks and implement measures to address them, providing you with peace of mind during what can be an otherwise complex and challenging process.

A successful data migration partner should offer tailored solutions rather than relying on one-size-fits-all approaches. Every organization’s data needs are unique, and flexibility in meeting those needs is extremely important. Consider how a partner adapts their strategy and tools to align with your specific requirements, workflows, and constraints. Do they take the time to understand your goals and develop a plan accordingly, or do they push prepackaged solutions? The ability to customize their approach can be the difference between a migration that delivers optimal business value and one that merely "gets the job done."

Data migration doesn’t end with the initial project. A strong partner should offer ongoing support, optimization, and strategic guidance post-migration to ensure continued value from your data infrastructure. Ask about their approach to post-migration support: Will they provide continued monitoring, performance optimization, and assistance—and for how long? The best partners view your success as an ongoing journey, bringing the expertise needed to continuously refine and enhance your data systems. Their commitment to getting things right the first time minimizes future issues and demonstrates a vested interest in your long-term success. By prioritizing a forward-thinking approach, they ensure your data systems are built to last, rather than quickly implemented and forgotten. This is why Datacoves goes beyond just providing tools; we offer accelerators and best practices designed to help you implement dbt successfully, ensuring a strong foundation for your data transformation journey. We work with strategic migration partners that will help you set things up the right way and are around for the long haul.

For many organizations, the geographic location of a data migration partner can impact communication and project efficiency. Consider whether the partner’s working hours overlap with yours. How will they handle urgent requests or collaboration across different time zones? Effective time zone alignment can enhance communication, reduce delays, and ensure faster resolution of issues. The last thing you want is to find an issue and not be able to get an answer until the next day.

Successful data migration extends beyond the technical execution and tooling—it also requires effective change management. A capable partner will help your organization navigate the changes associated with data migration, including new processes, systems, and ways of working. How do they support employee training, communication, and adoption of new tools? Do they provide resources and strategies to ensure a smooth transition? Partners with a strong change management focus will work with you to minimize disruptions and maximize user adoption.

When evaluating potential partners, keep in mind that while their team lead may be highly technical, the team members you’ll work with day-to-day might not always match that level of expertise. Ensure that the team members working on your project possess relevant certifications for the key technologies you use. Certifications, such as dbt Certification, Snowflake Certification, or other relevant credentials, demonstrate expertise and a commitment to staying current with industry standards and best practices. Ask potential partners to provide proof of certification and inquire about how their team keeps pace with evolving technologies. While certifications alone don’t guarantee proficiency, they offer a solid starting point for assessing skill and commitment. This assurance of expertise can significantly impact the success of your project.

Cost should not be the determining factor when hiring a migration partner. It is an essential consideration because it directly affects the project budget, but you must also consider the total cost of ownership of your new platform. How the initial migration is carried out will affect your ongoing costs over the long term. A low-cost partner will often lack several of the items listed above, and your migration team may be staffed with inexperienced team members. The migration will be done, but how much technical debt will you accumulate along the way?

Avoid simply searching for the lowest-cost vendor. Though this may lower upfront expenses, it often results in higher costs over time due to errors, inefficiencies, and the need for rework. Projects that are rushed or handled without proper expertise tend to exceed their budgets, take longer to complete, and are more challenging to maintain in the long run because they weren’t done correctly or optimized from the start. Experienced partners bring significant value by ensuring work is done right and to a high standard from the beginning. It is obvious that contracting a partner that meets most, if not all, of the key factors mentioned above most likely requires a monetary investment. This should be viewed as an investment in expertise that helps mitigate long-term costs and risks.

Choosing the right data migration partner is key to minimizing risks and ensuring optimal outcomes for your organization. The complexities and challenges of data migration demand a partner with proven expertise, industry-specific knowledge, effective communication, flexibility, and a commitment to long-term support. Each of the factors outlined above plays a vital role in determining the success of your migration project—potentially saving your organization from costly delays, hidden expenses, compliance pitfalls, and lost business opportunities.

Carefully evaluate potential partners using these key considerations to ensure you select a partner who will not only meet your immediate data migration needs but also support your organization’s continued success and growth. 📈

Datacoves has built-in best practices and accelerators built from our deep expertise in dbt, Airflow, and Snowflake. Our platform is designed to simplify your data transformation journey while providing excellent value by reducing your reliance on costly consultants. With our baked-in best practices, our customers have achieved faster implementations, enhanced efficiency, and long-term scalability.

The reason companies fail at leveraging analytics stems from the fact that people tend to focus on the destination instead of the journey that will lead to the solutions that will have the most impact on the business. Time and time again, I see people focus on the so-called shiny objects, like new tools, new techniques, or even new people, that appear to be the silver bullet everyone needs. The truth is, if you go back to the first principles and start with true alignment, good data processes, and user-centric experiences, project success and satisfaction are achievable.

Every project I have been a part of started with a sense of optimism and excitement. The honeymoon phase was great. Everyone was united; we had gotten the funding, selected vendor partners, and purchased whatever technology was part of the solution. We all spoke the same language, everyone got to work, management started getting progress updates, and everyone thought we were off to a great start.

It wasn't until real decisions needed to be made that we realized the honeymoon was over. In every single instance, an excessive amount of time was spent in meetings arguing and reaching some level of consensus until the next decision. This happened because we didn't really spend the time to get on the same page. People assumed that we were aligned because, at a high level, we were all talking about the key points of the given initiative: digital transformation, self-service analytics, customer mastering, data lakes, etc.

But we were not really thinking the same things. Everyone had different backgrounds and had expertise on different parts of the solution: regulatory requirements, technology limitations, end-user needs, etc. There were also things no one knew at the start, and we didn't have a north star to guide these decisions. We all appeared to be saying the same things, but we were thinking very differently.

I have seen the pressure to get started on a project and show progress lead to delays and ultimate dissatisfaction with the end result. On projects where we have spent a couple of weeks getting aligned using a structured approach to product discovery, we ended up with better estimates and better overall satisfaction.

In any analytics-related project, the same things apply: the team needs to understand the business objectives, the current state (so the new process isn't worse), the risks, and prioritize the high-level features. Most importantly, the team needs to align on what's NOT in the new solution and the prioritizing criteria such as quality, feature completeness, or usability that will be used when making decisions. Agile does not mean no planning.

Trust starts by listening to people and creating a shared vision that sets the right expectations from day one. You can create an achievable plan if everyone knows what you are trying to achieve.

Let's face it, your data processes get no love. This is usually because they are seen as "too technical." Your users don't care about databases, schemas, tables, or columns, let alone the process of converting raw facts into business-ready insights. It's easy for management to see a fancy dashboard and get excited about the possibility of machine learning, but talk about data and people's eyes glaze over.

It kind of makes sense; most people don't understand how the power grid works. We all take it for granted. We flip a switch, the light turns on, and we move forward. No one cares about electricity until something goes wrong. In a lot of organizations, things go wrong with data more often than you would think. Sometimes people notice right away, but other times failures are silent. When something does go wrong, everyone goes into firefighting mode. Meetings are held, issues are discovered, and patches to "prevent" the failure are put in place. The time to think about the inevitable is not once things break; you need to anticipate failure and design for resilience.

The issue here is that we don't think of the process of going from raw data to insights as a single system. It is all interconnected and needs to be treated as such. When it comes to analytics, sometimes it feels like companies want to build a mansion on a foundation atop quicksand. Initially, all seems fine, and everyone is in the house decorating until someone notices that a corner of the house is sinking. Everyone goes outside, props up the corner, and they happily go back inside to decide what color to paint the next room.

You can't build a house on quicksand; you need to set up repeatable processes with quality built in from the start. If we want collaboration, we have to build it in. If you want to be able to do impact analysis, guess what? You can't retrofit that later if you didn't do it from the start. Having documented analytics is not magic; you need this to be part of the culture and part of the process. The good thing is that many smart people have faced the same issues, and there are examples we can see where people are doing things right.

If you want users to trust data analytics, they need to trust the data, and they need to believe in a solid process that is built on a solid foundation.

When you try to please everyone, you please no one, and in many companies, technical teams try to do everything they are asked. They jump through hoops to deliver projects, but it is very common for people to be dissatisfied with the end results. I have also seen new tools used like old ones. Teams sometimes take the approach that the new process is just affecting some part of the current broken process, so they only incrementally change it. I have seen Tableau dashboards that are essentially Excel on the web with some automation.

Instead of asking users what they want, we need to understand what they need and why. What are they trying to accomplish? What's wrong with how they do things today? Is the new process / tool you are putting in place better than what they already have? Sometimes it makes more sense to leave a current process as-is until other parts of the system are improved.

When you understand the real need for an omni-channel dashboard or a sales dashboard, you design the solution to help you achieve that goal. If your users need to quickly get in and out of the tool, you can find ways to reduce the number of clicks it takes them to get there. You simplify access, and you surface the most important information first. You build the solution around them, and more importantly, you are able to justify your decisions and why certain things need to be de-prioritized. When users see that you empathize with them, they trust you. They don't push back on every choice, because you have demonstrated time and again that you have their best interests at heart.

Getting decision-makers to trust data analytics is no different than getting anyone to trust anything. You need to start with alignment and set the right expectations; you need to build end-to-end processes that are robust; and you need to deliver the tools that facilitate the job users do.


There is no doubt about the transformative potential of big data and analytics. This is particularly true for the Life Science sector, where implementing technologies and ideologies can revolutionize drug development, tailor medicines to individual needs, dramatically improve patient care, and more. The data supports this: the longest-running report of Fortune 1000 CIOs, by Wavestone, shows that 87.9% of companies believe “investments in Data & Analytics are a Top Organizational Priority.”

Such great promise and high buy-in should mean easy cultural adoption, right? Well, not exactly. The 2024 report shows there has been a notable improvement; the percentage of top executives facing significant challenges in culture, people, and process/organization decreased from a staggering 90% in 2020 to 77.6% in 2024. While this reduction signifies a positive trend towards addressing these issues, the fact that more than three-quarters of leaders still encounter these problems underscores the widespread nature of these challenges. The persistently high percentage highlights the need for continued and focused efforts to overcome these barriers.

In an effort to help further lower the 77.6%, this article aims to cover the benefits of data in the Life Science sector, highlight common culture issues, and provide some solutions to the problem. If your organization is among those facing these struggles, know that you are not alone.

The life science sector stands on the brink of a digital transformation, powered by the strategic use of data. It's clear that its impact is far-reaching, transforming every facet from research and development to patient care and beyond. Below are some examples of what this data-driven culture can improve.

As we identified earlier in this article, culture, people, and process/organization challenges are the biggest obstacles for companies to achieve digital transformation and become data-driven. Identifying specific challenges is key to developing a solution.

Pharma companies tend to be risk-averse due to the nature of the data they are responsible for. This leads to limitations and constraints on innovative solutions. These constraints are often accepted without understanding or challenging the rationale behind them. New technology offers ways to manage data that were not available in the past. A company that insists on remaining the most risk-averse and the most conservative will stifle innovation. The key takeaway is that it is not only about technology but about the new process potential that this technology provides.

This goes back to the foundation. Technology changes, initiatives change, but data truths do not. These truths involve thinking about end-to-end processes, data quality, documentation, good guidelines and conventions, data governance, reducing points of failure, etc. It is easy to get swept up by the latest trend and want to jump in, but you cannot build a house on quicksand; new trends like Gen AI still require these things underneath.

This starts with aligning the team. Alignment is one of the 3 core pillars to a data-driven culture. People will start out thinking they are on the same page because they are using the same terminology. But we all have different ideas influenced by our experiences. So, it is important to gather all stakeholders and go through a true alignment process which includes figuring out the current state, pain points, and aligning on the solution.

Fundamental alignment includes adopting a top-down approach. The efforts one team is making are sure to affect the efforts of another. Leadership must align and connect the dots between all moving parts and understand that things are not living in isolation. This will solve headaches downstream and ensure a smoother process.

Alignment alone won’t be enough to combat culture challenges. True alignment can lead to overly ambitious projects that may prove difficult to execute. This is the classic trap of trying to do too much at once. It is important to start with manageable, small-scale projects that can provide immediate benefits. These quick wins are vital for positively influencing the organizational culture. Additionally, they help maintain momentum: if a larger initiative is slow to yield results, these smaller successes ensure continuous progress. By allowing for ongoing adjustments and reprioritizations, these projects help prevent initiatives from being abandoned.

While the potential of big data and analytics in transforming the Life Science sector is undeniable, and investment in data is on the rise, the journey towards a data-driven culture remains one of the biggest challenges for data integration. The path forward requires a balanced approach that combines technology with fundamental changes in culture and processes. By embracing these changes, the life sciences sector can overcome existing barriers and fully harness the power of data to advance medical science and improve patient outcomes. At Datacoves we are passionate about helping companies achieve a data-driven culture. See how Datacoves helped Johnson & Johnson innovate their tech stack.

This article was inspired by RAN BioLinks’ podcast episode “Why Life Science Organizations Fail to Implement Effective Data Strategies”. The detailed conversation with Noel Gomez, a seasoned expert in data management within the life science industry, explores the critical challenges and innovative solutions for effective data strategies in healthcare.

Companies are investing heavily to become data-driven and to democratize data access. However, many are not achieving the transformative outcomes they expected.

The core issue? A lack of trust.

This mistrust stems from a lack of focus on core aspects that ensure a robust data-driven culture and critical mistakes in these areas.

Fortunately, these mistakes are self-inflicted which means they can be fixed, and this article aims to help highlight and address these pitfalls. By understanding and adhering to the core pillars of a data-driven culture and avoiding the common mistakes, organizations can develop and maintain a data-driven culture that people can trust.

It is no secret that there is power and opportunity in data, and a data-driven culture is the approach that aims to take advantage of that.

A data-driven culture is not about hastily adopting the latest tools or technologies in the hope of resolving data challenges. This common mistake often leads to a focus on immediate results or 'shiny objects', such as acquiring cutting-edge technology or hiring new talent. Unfortunately, this approach tends to overlook essential priorities and gradually erodes the foundation of a data-driven culture: Trust in the data.

Many companies struggle with effectively using analytics because they overemphasize these immediate goals – the 'destination' – rather than appreciating the foundational journey necessary for impactful analytics. This journey involves more than just technology; it requires a shift in mindset and approach.

Data-driven culture represents an organizational approach where data is the cornerstone of decision-making processes. In such a culture, decisions are primarily informed by data analysis, rather than relying exclusively on intuition or past experiences. This approach involves strategically employing data at every level of the organization. It fosters an environment where data is not just an asset but the main driver of strategy, innovation, and operational choices. By harnessing the power and opportunities offered by data, a data-driven culture ensures that decisions across the organization are grounded in solid evidence and analytical insight, enhancing the overall decision-making quality and efficacy.

Empowered Decision Making: Decisions are based on data analysis, leading to objective and impactful outcomes.

Accessibility of Data: Data is accessible across the organization, breaking down silos and empowering all employees.

Investment in Technology: Adequate tools and technologies are provided for effective data collection and analysis.

Data Literacy: Continuous training is provided to enhance the workforce's understanding and use of data.

Quality and Governance: High standards of data accuracy and security are maintained.

Agility: The organization adapts quickly to insights derived from data.

Collaborative Integration: Data insights are shared and integrated across various functions.

Outcome-Focused: Emphasis on measurable results driven by data insights.

All of that sounds great, but how do we achieve a data-driven culture?

As mentioned earlier in the article, true success in analytics comes not from merely chasing new tools or methodologies but from establishing three core pillars as part of a data-driven culture: fundamental alignment, user-focused solutions, and efficient data management.

By refocusing on these foundational elements, businesses can drive more meaningful and sustainable results from their analytic endeavors, leading to overall project success and satisfaction.

Let's dive deeper into the core pillars and examine the common pitfalls within each pillar that I have observed lead to challenges.

Fundamental alignment is about synchronizing analytics strategies with the organization's core business objectives. This ensures everyone involved, from executives to frontline employees, shares a common vision and understanding of what analytics aims to achieve. This alignment is crucial for creating a unified direction in data-driven initiatives and ensuring that every analytics effort contributes meaningfully to the overall business strategy.

This sounds great, right? So much so that every project I've participated in began with high hopes and enthusiasm. Initially, there was a sense of unity: funding secured, partnerships with vendors established, and the latest technology acquired. This honeymoon phase of the data-driven transformation, filled with optimism, had everyone working diligently, with management receiving regular updates and a general belief that we were on the right track.

The real test emerged when critical decisions were required. This was the point where the honeymoon phase often faded, revealing a lack of true alignment. Meetings became prolonged discussions where the team struggled to reach consensus. This challenge stemmed from either not spending enough time initially to ensure everyone was on the same page or not conducting a discovery phase at the start of the project.

Although we agreed on high-level objectives like digital transformation and self-service analytics, there was a misalignment in our deeper understanding and perspectives. We were each influenced by our varied backgrounds and expertise in different aspects of the project.

This led me to a crucial fact: the importance of alignment before action. In projects where we dedicated time upfront for structured alignment and thorough product discovery, we not only achieved better estimations but also greater overall satisfaction. It became evident that successful analytics projects require a deep understanding of business objectives, the current state, potential risks, and a clear prioritization of features. This was because we developed a clear understanding and set up expectations that people could rely on throughout the course of implementation.

Crucially, alignment also involves clarity on what the project will not address, alongside the criteria for prioritization such as quality, completeness of features, and usability. Embracing agility does not mean forgoing thorough planning.

Ultimately, building trust in any project begins with listening, creating a shared vision, and setting the right expectations from the start. A well-defined and achievable plan, understood and agreed upon by all, is the foundation of success.

The end goal of analytics should be to serve the user's needs and involve designing practical solutions that add real value and enhance decision-making processes. This means creating analytics tools and processes that are intuitively aligned with how users work and make decisions, ensuring that these tools are not just technically proficient but also practically useful.

There are two pitfalls to avoid.

1. Trying to please everyone often leads to pleasing no one.

This is a common scenario in many companies where technical teams strive to meet all demands. Despite their efforts to deliver on projects, dissatisfaction with the end results is frequent.

2. Not addressing the actual user pain points.

This happens when the user does not actually get a good working solution out of the process.

During discovery it is important to discuss what is in scope, out of scope, essential, and nice to have. By categorizing this way you can better understand the needs of the group and use it to guide the process. With this process done, you can move forward with confidence that you are addressing the most important pain points.
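One lightweight way to record the outcome of that discovery conversation is a small shared backlog in which every request carries one of the four labels. The sketch below is purely illustrative: the `Scope` labels mirror the categories above, while the `Request` class, the `group_by_scope` helper, and the sample requests are hypothetical and not tied to any particular tool.

```python
from collections import defaultdict
from dataclasses import dataclass
from enum import Enum


class Scope(Enum):
    # The four discovery categories discussed above.
    IN_SCOPE = "in scope"
    OUT_OF_SCOPE = "out of scope"
    ESSENTIAL = "essential"
    NICE_TO_HAVE = "nice to have"


@dataclass(frozen=True)
class Request:
    title: str
    scope: Scope


def group_by_scope(requests):
    """Return {Scope: [titles]} so each bucket can be reviewed with stakeholders."""
    grouped = defaultdict(list)
    for request in requests:
        grouped[request.scope].append(request.title)
    return dict(grouped)


# Hypothetical requests gathered during a discovery workshop.
requests = [
    Request("Daily sales refresh", Scope.ESSENTIAL),
    Request("Export to PDF", Scope.NICE_TO_HAVE),
    Request("Regional filter", Scope.IN_SCOPE),
    Request("Real-time streaming", Scope.OUT_OF_SCOPE),
    Request("Row-level security", Scope.ESSENTIAL),
]

grouped = group_by_scope(requests)
for scope in Scope:
    print(f"{scope.value}: {grouped.get(scope, [])}")
```

Writing the "out of scope" bucket down explicitly is the useful part: it gives the team something to point to when a request resurfaces mid-project.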

Now that we have defined the pain points, the next step is to fully understand them. The key is to understand not only the users' needs but also the reasons behind them. What are the goals they're trying to achieve? What are the shortcomings of their current methods? Is the new process or tool genuinely an improvement over what they currently have? For example, if users need to navigate a tool quickly, finding ways to reduce unnecessary clicks and simplify access becomes important. Sometimes, it's more practical to keep an existing process unchanged until other parts are enhanced. By bringing the most critical information to the forefront, the solution becomes more user-centric.

It is important to have these needs in mind at the beginning of the project and strive to truly understand them. If not, you risk investing time, money, and resources in a tool that users don't need, and this can have a detrimental effect on the overall culture.

More importantly, this approach allows you to justify your decisions and explain why certain aspects are prioritized over others. When users see that their needs and challenges are understood and addressed, they are more likely to trust and accept the solutions provided. This trust is built through consistently demonstrating that their best interests are at heart.

Efficient data management involves implementing robust processes to ensure data accuracy, accessibility, and understandability. This pillar is key to informed decision-making as it underpins the reliability of data-driven insights. Effective data management includes organizing, storing, and safeguarding data to make it readily available and useful for users across the organization.

Let's face it, your data processes get no love. This is usually because they are "too technical." Users often do not concern themselves with databases, schemas, tables, or columns, let alone the process that turns raw facts into business-ready insights. It is easy for management to get excited about a fancy dashboard and the potential of Machine Learning and Gen AI, but when it comes to the actual data, interest tends to wane.

It makes sense; most people don't understand how the power grid works. We take it for granted that we flip a switch and expect the lights to turn on. We move on without a second thought. No one really cares about electricity until something goes wrong. Similarly, in many organizations, data issues often go unnoticed until a failure occurs. Sometimes these issues are immediately apparent, but other times they are silent. When a failure does happen, there is a scramble to fix it. Meetings are held, issues are identified, and patches are implemented to "prevent" future failures. However, the best time to think about potential problems isn't after they happen, but before — building systems that anticipate and are designed for resilience.

The real issue is that the process from raw data to insights isn't often viewed as a single system. It is all interconnected and should be treated as such. In the world of analytics, it sometimes feels like companies are trying to build a mansion on a foundation of quicksand. Initially, everything seems fine, and everyone is busy with their tasks, but when the foundation starts to give way, the focus shifts to propping up the weak points. You can't effectively build on quicksand; you need solid, repeatable processes from the start.

The focus should be on building systems that anticipate challenges and are designed for resilience. This involves integrating data management practices into the company's culture from the start, ensuring users trust the data and the processes that generate insights. If you want effective collaboration and impact analysis, these are difficult to retrofit later — they need to be part of the initial plan. Documented analytics isn't a magical solution; it needs to be ingrained in the culture and process from the beginning. The good news is that there are many examples and best practices from those who have navigated these challenges successfully.
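As a small illustration of designing for failure up front, the checks below run against each batch of data before it reaches a dashboard, so that silent problems surface early. This is a minimal sketch, assuming rows arrive as dictionaries; the column names (`order_id`, `amount`) and the `check_batch` helper are hypothetical, and in practice teams usually express rules like these in whatever transformation or testing tool they already use.

```python
def check_batch(rows, required=("order_id", "amount")):
    """Return a list of human-readable problems found in a batch of rows."""
    problems = []
    if not rows:
        problems.append("batch is empty")
        return problems

    seen_ids = set()
    for i, row in enumerate(rows):
        # Completeness: every required column must be present and non-null.
        for column in required:
            if row.get(column) is None:
                problems.append(f"row {i}: missing {column}")
        # Uniqueness: the same order should not appear twice.
        order_id = row.get("order_id")
        if order_id is not None:
            if order_id in seen_ids:
                problems.append(f"row {i}: duplicate order_id {order_id}")
            seen_ids.add(order_id)
        # Validity: amounts should never be negative.
        amount = row.get("amount")
        if amount is not None and amount < 0:
            problems.append(f"row {i}: negative amount {amount}")
    return problems


# Hypothetical batch containing one null, one duplicate, and one negative value.
batch = [
    {"order_id": 1, "amount": 20.0},
    {"order_id": 2, "amount": None},
    {"order_id": 2, "amount": -5.0},
]
for problem in check_batch(batch):
    print(problem)
```

The point is less the specific rules than where they run: checks that are part of the pipeline from day one catch the silent failures that otherwise only show up when a number on a dashboard looks wrong.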

For users to truly trust in analytics, they need to have faith in the data and the processes that generate it. They need to see and believe in a robust system built on a solid foundation.

To achieve a data-driven culture, companies must refocus on three core pillars (fundamental alignment, user-focused solutions, and efficient data management) and avoid the common mistakes in each of these areas. Success in analytics isn't about chasing new tools or methodologies but about building a robust system from the ground up, aligning everyone's vision, and creating practical, value-added solutions. Prioritizing foundational elements over immediate shiny objects will lead to more meaningful, sustainable results and will build trust in the analytics process.

As the world of data management continues to grow, terms and new concepts are constantly popping up. It's important for data professionals to stay up to date with terms such as Data Mesh and data observability. For those coming into the field from other areas, it’s also good to understand terminology to communicate more effectively with others.

In this blog post, we've put together an extensive table that breaks down and explains the essential terms in modern data engineering, analytics, and architecture. This resource is designed to help both experienced data professionals and newcomers alike to navigate and understand the ever-evolving language of data.

We've covered basic concepts like data warehouses and ETL pipelines and advanced ideas like Data Mesh. Each of these terms is crucial in shaping today's data ecosystems. Think about how these terms apply to your business and can enhance your understanding. Have we missed any terms that you were hoping to see defined, or do you think we could improve the definitions of some of the terms already defined? Please share your thoughts with us by providing feedback through our contact page.

Interested in modern data solutions? Accelerate your journey to a modern data stack with Datacoves' managed solution, designed to streamline your data processes and implement best practices efficiently. Discover how Datacoves can help you quickly add value and transform your data strategy, ensuring you make the most informed decisions for your specific needs, by scheduling a demo.

Digital transformation is often seen through the lens of technological advancement and process optimization. Most blog posts and guides out there revolve around implementing new software, automating tasks, and digitizing operations. Yet, there's a pivotal element that's frequently overlooked in these discussions, especially when it comes to an enterprise: the mindset and culture within an organization. This article aims to shed light on why this is crucial in achieving true digital transformation. But first, let's investigate what digital transformation is and why it is important.

Digital transformation is the integration of digital technology into all areas of a business, fundamentally changing how it operates and delivers value to customers. It is more than just a technological upgrade; it is a cultural shift that requires organizations to continually challenge the status quo, experiment, and get comfortable with failure. This often means walking away from long-standing business processes that companies were built upon to embrace new ways of working. Most organizations find this part the most challenging.

To achieve digital transformation in an enterprise, 9 times out of 10 there must be a change in company culture. However, changing a company's culture is a formidable task. It is rare to hear statements like, “We need to fundamentally change our problem-solving approach.” This became clear to me through my past experiences, as I noticed that managers often lacked the influence to drive change at the highest organizational levels. Additionally, the pressure to deliver quick results within budget cycles frequently hindered genuine cultural transformation.

During my tenure at various companies, under numerous managers, the consistent message was the need for improvement. However, I have come to understand that organizations, much like fireflies, develop their own rhythms. It is this unique rhythm that sets apart innovative and transformative companies from those that merely follow without achieving similar success. What do I mean by this? Let’s turn to nature for an explanation.

Nature is fascinating, especially when observing how hundreds or thousands of fireflies can synchronize their flashes.

In organizations, a similar phenomenon occurs. People will sync up and follow the status quo, even if it is not what is best for the organization. This dramatically hinders digital transformation because the loudest are not always right and yet they cause others to sync up with them. This will cause innovation to be stopped in its tracks.

In addition to this firefly phenomenon, often action differs from ambition. I recall a staff meeting with a former CIO discussing a future less dependent on Microsoft and more open to non-Windows devices. It was clear that iPhones were going to change the corporate landscape. Despite this, every new tool implemented was still optimized for Internet Explorer. This discrepancy between ambition and action often drives analytical people like me to frustration. To effect change, persistence is key. I have had ideas initially dismissed as “not my job,” only to see one later turn into a patented invention.

This manifests itself in other ways as well; have you ever seen a company advocate for fewer meetings while simultaneously criticizing those who do not include “everyone” in decision-making? I have been in such situations and can attest that decision-making by committee is not inherently superior. In fact, the more people involved in an initiative, the less effective it tends to be. This, I believe, is due to the Dunning-Kruger effect.

The more people you involve in a transformation initiative, the more likely the discussions are to deteriorate into bikeshedding. When there is a disconnect between what is said and what is done, people take notice, and it breeds discontent.

Even in my most successful transformation initiatives, the radius of transformation has been limited to my sphere of influence. Sure, some of my tools and processes got global and cross-functional acceptance, but the underlying principles never took hold because they were too radical for the organization at the time. I was not part of the IT organization so the things I did were typically seen as shadow IT. Instead of focusing on what I should not be doing, it would have been more progressive for them to see how I was practicing Agile principles. They could have inquired about how my project was doing DevOps before that was in style, or how it was that this non-sanctioned product was extremely well received and people sought me out to help them improve their processes.

This means if you want the organization to be more innovative, you need to find the obstacles that hold people back from being innovative. Often politics and bureaucracy impact an initiative more than the solution itself. If you force everyone to comply with existing tools and processes, then you are imposing a constraint on the team that will limit innovation.

A typical way this manifests itself is leadership pushing the idea that one platform or process can solve every need. This can take the form of requiring that a particular group handle all data transformation, or that a single visualization tool be the way everyone does analytics. I have never seen one tool that is good at everything, and you end up balancing the single solution with an unmanageable array of tools and processes. A healthy organization is a learning organization that is always open to improvement. When management encourages pushing boundaries and not taking anything as fact, the company can innovate.

A great example of driving innovation is seen in the approach of Steve Jobs, co-founder of Apple Inc. Jobs was known for his ability to challenge conventional wisdom and existing standards in the technology industry. He emphasized the importance of understanding the fundamental principles underlying a problem to innovate and create groundbreaking solutions. One notable instance was the development of the iPhone, which revolutionized the smartphone industry. Jobs and his team did not just improve on existing phones; they rethought what a phone could be, focusing on user experience and simplicity. This approach led to a product that dramatically altered how people interact with technology.

As a leader, you need to look for the fireflies who are using first principles like Steve Jobs to deliver innovative solutions and nurture, or create, a corporate culture that truly challenges what has been done without artificial constraints.

Reasoning by first principles removes the impurity of assumptions and conventions. What remains is the essentials. It’s one of the best mental models you can use to improve your thinking because the essentials allow you to see where reasoning by analogy might lead you astray.

The transformative and innovative thinkers will either comply or leave, both of which are undesirable. In my case I tended to leave. In every organization where I have worked, I have managed to make a significant impact, often through sheer determination. During my time at one such company, our goal was to introduce a data catalog. By analyzing the problem, I was able to discern what was essential for our organization versus an elaborate and idealistic vision of a catalog capable of doing everything. While the IT organization felt it would be better to create a home-grown catalog, I understood that our biggest obstacle was getting people to use a catalog in the first place, so time to market was critical. I found that Alation met the needs we had, while IT kept to their vision of building an all-encompassing catalog. In 3 months I had deployed Alation, and 1.5 years later the home-grown solution was a tenth as good. This approach of breaking down the problem to its basic elements and building up from there was critical. It is often underestimated how challenging it is to develop and maintain custom software. This experience highlights the effectiveness of first principles thinking in deploying practical and efficient solutions.

The reality is that not everyone possesses the tenacity to advocate for change, especially in the face of substantial resistance. Not only that, but I have also witnessed people being ostracized for thinking differently, while others were promoted for fitting in. It is crucial to seek out divergent thinkers and consider the validity of their perspectives, instead of forcing them to conform. This is why true digital transformation necessitates a shift in culture.

When an individual, much like a firefly that does not flash in unison with the rest, finds themselves out of sync with the collective rhythm, they face a decision: conform and synchronize with the group or venture out to find a new collective that resonates with their unique spark.

True transformational change must come from the top. Achieving enterprise digital transformation requires a deep and bold questioning of the status quo. We must critically assess our processes: Is a particular task truly necessary for a certain group? Can we identify and eliminate inefficiencies? Will adding another layer of approval or inspection genuinely enhance outcomes? It is essential to remember that human behavior often has a more profound impact than any technology or process we implement. When decision-making is centralized within one group, solutions are inevitably skewed to reflect their viewpoint. Too often, I have witnessed decisions justified by cost considerations that, upon closer inspection, proved detrimental in the broader context. An effective strategy involves analyzing the entire system, recognizing that optimizing the whole may require accepting lower efficiency in some areas.

The key is to align with the needs of users and the organization and engage leadership in this journey. With a united front, tackling the 'corporate dragons' becomes a more manageable endeavor. One practical approach is employing methodologies like the 'Job to be Done' framework.

Company culture and change management are frequently overlooked in the pursuit of process improvement. Employees operate within their limitations, while management ponders the lack of innovation and agility compared to other companies. The simpler path might seem to be increasing staff or updating technology, but the heart of transformation lies in the mindset of the organization. Leaders aiming for a lasting impact must embrace first principles thinking, ready to scrutinize and challenge established norms. Transformational change rarely stems from incremental improvements; truly innovative companies are those that dare to think and act differently. The organization thus faces a pivotal choice: will it adapt to a new rhythm, or compel its 'new fireflies' to fall in line with the existing order?

In the age of data-driven decision-making, companies grapple with the mammoth task of setting up a robust Modern Data Stack. On-premises legacy systems struggle to keep up, and standing up a Modern Data Stack (MDS) isn't just a tech upgrade; it's an essential pivot, ensuring businesses extract actionable insights from the raw data they encounter. However, the road to achieving this is complex and slower than the line at the DMV.

If the responsibility of establishing a Modern Data Stack falls on your shoulders and you're feeling the weight of its time-, resource-, and knowledge-intensive nature, this post offers insights and solutions. We explore the hurdles businesses encounter while shaping their data infrastructure and how you can streamline and expedite the process.

A Modern Data Stack refers to a suite of tools and digital technologies specifically designed for data management. Within this stack, some tools specialize in collecting data, while others focus on storing or processing it. As data moves through this system, it's transformed from raw input into actionable insights.

Many of these tools come from various providers and must be seamlessly integrated to ensure optimal performance. Leveraging the latest technologies, the modern data stack efficiently manages the entire data lifecycle, from collection to analysis. This stack is both scalable and flexible, ensuring it can adapt and grow with the ever-evolving demands of a business, and provide consistent performance regardless of data volume or complexity.
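To make the flow from raw input to insight concrete, here is a toy sketch of the stages in plain Python. It is an illustration only: in a real stack each stage would be handled by a separate tool, and the function names (`collect`, `store`, `transform`, `analyze`), the in-memory `warehouse` dictionary, and the sample records are all hypothetical.

```python
def collect():
    """Collection: pull raw records from a source system (hard-coded here)."""
    return [
        {"region": "east", "amount": "120.50"},
        {"region": "west", "amount": "80.00"},
        {"region": "east", "amount": "30.25"},
    ]


def store(raw_records, warehouse):
    """Storage: land the raw records unchanged so they can be reprocessed later."""
    warehouse["raw_sales"] = list(raw_records)


def transform(warehouse):
    """Processing: convert text amounts to numbers so the data is ready for analysis."""
    warehouse["sales"] = [
        {"region": r["region"], "amount": float(r["amount"])}
        for r in warehouse["raw_sales"]
    ]


def analyze(warehouse):
    """Analysis: aggregate cleaned rows into totals per region."""
    totals = {}
    for row in warehouse["sales"]:
        totals[row["region"]] = totals.get(row["region"], 0.0) + row["amount"]
    return totals


warehouse = {}  # stands in for a cloud data warehouse
store(collect(), warehouse)
transform(warehouse)
totals = analyze(warehouse)
print(totals)
```

Keeping the raw records separate from the cleaned ones is a deliberate choice in this sketch: if a transformation rule turns out to be wrong, the data can be reprocessed without going back to the source system.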

Below you can see an example Modern Data Architecture Diagram.

The path to a comprehensive end-to-end enterprise data platform is not without challenges. Embarking on such a journey requires diligent research, because the process of migrating to a Modern Data Stack or establishing it from the ground up is intricate and piecemeal. Since there are many individual tools in the Modern Data Stack, you may have to tackle each tool individually so you can focus on setting it up correctly. Given the complexity of the endeavor, even with a skilled team on board, it can take 6 to 9 months to build a complete end-to-end data solution. This may be frustrating, but understanding the pain points in setting up a Modern Data Stack can help you make educated decisions that accelerate the process.

A strong data platform is the backbone of good decision-making. It helps us see clear insights fast and strengthens our data teams. When creating or choosing such a system, keep these principles in mind:

Following these rules can help us get the most from our data and make the best decisions.

Understanding the challenges and intricacies of setting up a Modern Data Stack makes it clear why we need efficient solutions. In the data world things move fast and speed is imperative. While there are numerous tools available that cater to specific components, Datacoves offers a more comprehensive approach, addressing the end-to-end data stack. Datacoves could reduce the setup of your Enterprise Data Platform from the usual 6-9 months to just 2-3 weeks. But how does it achieve this feat?

Datacoves is not just another platform; it's a game-changer. Its project-based structure integrates seamlessly with any git repository, and it can be swiftly deployed in a private cloud to connect with existing tools. Each project provides multiple environments, facilitating role-based access and ensuring user-specific needs are met.

Datacoves aims to simplify, reduce friction, enhance collaboration, and inject software engineering practices into data operations. It seeks to empower teams, enabling swift productivity and ensuring teams function cohesively.

Intrigued by Datacoves? Dive deeper by watching the full video below or book a demo to experience its magic first-hand.