Not long ago, the data analytics world relied on monolithic infrastructures: tightly coupled systems that were difficult to scale, maintain, and adapt to changing needs. These legacy setups often resulted in operational bottlenecks, delayed insights, and high maintenance costs. To overcome these challenges, the industry shifted toward what became known as the Modern Data Stack (MDS), a suite of focused tools optimized for specific stages of the data engineering lifecycle.

This modular approach was revolutionary, allowing organizations to select best-in-class tools like Airflow for orchestration, or a managed version of Airflow from Astronomer or Amazon, without the need to build custom solutions. While the MDS improved scalability, reduced complexity, and enhanced flexibility, it also reshaped the build vs. buy decision for analytics platforms. Today, instead of deciding whether to create a component from scratch, data teams face a new question: Should they build the infrastructure to host open-source tools like Apache Airflow and dbt Core, or purchase their managed counterparts? This article focuses on these two components because pipeline orchestration and data transformation lie at the heart of any organization’s data platform.

When we say build in terms of open-source solutions, we mean building the infrastructure to self-host and manage mature open-source tools like Airflow and dbt. These two tools are popular because they have been vetted by thousands of companies. In addition to hosting and managing them, engineers must ensure the tools interoperate within their stack and must handle security, scalability, and reliability. Needless to say, building is a huge undertaking that should not be taken lightly.

dbt and Airflow both started out as open-source tools, which were freely available to use due to their permissive licensing terms. Over time, cloud-based managed offerings of these tools were launched to simplify the setup and development process. These managed solutions build upon the open-source foundation, incorporating proprietary features like enhanced user interfaces, automation, security integration, and scalability. The goal is to make the tools more convenient and reduce the burden of maintaining infrastructure while lowering overall development costs. In other words, paid versions arose out of the pain points of self-managing the open-source tools.

This raises the important question: Should you self-manage or pay for your open-source analytics tools?

As with most things, both options come with trade-offs, and the “right” decision depends on your organization’s needs, resources, and priorities. By understanding the pros and cons of each approach, you can choose the option that aligns with your goals, budget, and long-term vision.

A team building Airflow in-house may spend weeks configuring a Kubernetes-backed deployment, managing Python dependencies, and setting up DAG file synchronization via S3 or Git. While the outcome can be tailored to their needs, the time and expertise required represent a significant investment.

Before moving on to the buy tradeoffs, it is important to set the record straight. You may have noticed that we did not include “the tool is free to use” as one of our pros for building with open-source. You might have guessed by reading the title of this section, but many people incorrectly believe that building their MDS using open-source tools like dbt is free. In reality, there are many factors that contribute to the true dbt pricing, and the same is true for Airflow.

How can that be? Well, setting up everything you need and managing infrastructure for Airflow and dbt isn’t necessarily plug and play. Day-to-day work such as managing Python virtual environments, keeping dependencies in check, and tackling scaling challenges requires ongoing expertise and attention. Hiring a team to handle this will be critical, particularly as you scale. Experienced engineers who can avoid costly mistakes command high salaries and benefits; this can easily cost anywhere from $5,000 to $26,000+/month depending on the size of your team.

In addition to the cost of salaries, let’s look at other possible hidden costs that come with using open-source tools.

The time it takes to configure, customize, and maintain a complex open-source solution is often underestimated. It’s not until your team is deep in the weeds (resolving issues, figuring out integrations, and troubleshooting configurations) that the actual costs start to surface. With each passing day your ROI is threatened. You want to start gathering insights from your data as soon as possible. Datacoves helped Johnson and Johnson set up their data stack in weeks, and when issues arise, you will need expertise to accelerate the time to resolution.

And then there’s the learning curve. Not all engineers on your team will be seniors, and turnover is inevitable. New hires will need time to get up to speed before they can contribute effectively. This is the human side of technology: while the tools themselves might move fast, people don’t. That ramp-up period, filled with training and trial-and-error, represents a hidden cost.

Security and compliance add another layer of complexity. With open-source tools, your team is responsible for implementing best practices—like securely managing sensitive credentials with a solution like AWS Secrets Manager. Unlike managed solutions, these features don’t come prepackaged and need to be integrated with the system.
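As a minimal sketch of what securely managing credentials can look like, the helper below reads warehouse credentials from AWS Secrets Manager at runtime instead of hardcoding them. The secret name `analytics/warehouse` and the JSON keys `user` and `password` are hypothetical, and the client is passed in so the function can be exercised without AWS access.

```python
import json


def load_warehouse_credentials(client, secret_id):
    """Fetch a JSON secret and return it as a dict.

    `client` is expected to behave like boto3.client("secretsmanager"),
    whose get_secret_value call returns the payload under "SecretString".
    """
    response = client.get_secret_value(SecretId=secret_id)
    secret = json.loads(response["SecretString"])
    # The required keys are an assumption made for this example.
    missing = {"user", "password"} - set(secret)
    if missing:
        raise KeyError(f"secret {secret_id!r} is missing keys: {sorted(missing)}")
    return secret


class FakeSecretsClient:
    """Stand-in for the real client so the sketch runs without AWS access."""

    def __init__(self, secrets):
        self._secrets = secrets

    def get_secret_value(self, SecretId):
        return {"SecretString": json.dumps(self._secrets[SecretId])}


fake = FakeSecretsClient({"analytics/warehouse": {"user": "svc_dbt", "password": "example"}})
creds = load_warehouse_credentials(fake, "analytics/warehouse")
print(creds["user"])
```

In a real deployment the client would be created with `boto3.client("secretsmanager")`, and the role running Airflow or dbt would need permission to read that secret. Setting up and auditing those permissions is part of the work your team takes on.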

Compliance is no different. Ensuring your solution meets enterprise governance requirements takes time, research, and careful implementation. It’s a process of iteration and refinement, and every hour spent here is another hidden cost. If it is not done correctly, it also puts security at risk.

Scaling open-source tools is where things often get complicated. Beyond everything already mentioned, your team will need to ensure the solution can handle growth. For many organizations, this means deploying on Kubernetes. But with Kubernetes comes steep learning curves and operational challenges. Making sure you always have a knowledgeable engineer available to handle unexpected issues and downtime can become a challenge. Extended downtime is another hidden cost, since business users who have come to rely on your insights are directly impacted.
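To make the hidden costs above concrete, here is a back-of-the-envelope sketch in Python. Only the $5,000 to $26,000 monthly staffing range comes from this article; the infrastructure, ramp-up, and downtime figures are placeholder assumptions that you should replace with your own numbers.

```python
from dataclasses import dataclass


@dataclass
class MonthlyCosts:
    staffing: float        # salaries and benefits for the platform team
    infrastructure: float  # cloud compute, storage, networking (assumed)
    ramp_up: float         # onboarding and training time (assumed)
    downtime: float        # cost of stalled business users (assumed)

    def total(self) -> float:
        return self.staffing + self.infrastructure + self.ramp_up + self.downtime


def annual_cost(costs: MonthlyCosts) -> float:
    """Project a monthly cost profile over twelve months."""
    return 12 * costs.total()


# Low and high scenarios; every figure except staffing is illustrative.
low = MonthlyCosts(staffing=5_000, infrastructure=500, ramp_up=0, downtime=0)
high = MonthlyCosts(staffing=26_000, infrastructure=3_000, ramp_up=2_000, downtime=1_500)

print(f"Estimated yearly cost: ${annual_cost(low):,.0f} to ${annual_cost(high):,.0f}")
```

Even with rough inputs, laying the numbers out this way makes it easier to compare a self-hosted build against the subscription price of a managed offering.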

A managed solution for Airflow and dbt can solve many of the problems that come with building your own solution from open-source tools, offering benefits such as hassle-free maintenance, an improved UI/UX, and integrated functionality. Let’s take a look at the pros.

Using a solution like MWAA, teams can leverage managed Airflow and eliminate most infrastructure worries. However, additional configuration and development will be needed to use it with dbt and to troubleshoot infrastructure issues such as containers running out of memory.

For data teams, the allure of a custom-built solution often lies in its promise of complete control and customization. However, building this requires significant time, expertise, and ongoing maintenance. Datacoves bridges the gap between custom-built flexibility and the simplicity of managed services, offering the best of both worlds.

With Datacoves, teams can leverage managed Airflow and pre-configured dbt environments to eliminate the operational burden of infrastructure setup and maintenance. This allows data teams to focus on what truly matters—delivering insights and driving business decisions—without being bogged down by tool management.

Unlike other managed solutions for dbt or Airflow, which often compromise on flexibility for the sake of simplicity, Datacoves retains the adaptability that custom builds are known for. By combining this flexibility with the ease and efficiency of managed services, Datacoves empowers teams to accelerate their analytics workflows while ensuring scalability and control.

Datacoves doesn’t just run the open-source solutions; through real-world implementations, the platform has been molded to handle enterprise complexity while simplifying project onboarding. With Datacoves, teams don’t have to compromise on features like Datacoves-Mesh (aka dbt-mesh), column-level lineage, GenAI, Semantic Layer, etc. Best of all, the company’s goal is to make you successful and remove hosting complexity without introducing vendor lock-in. What Datacoves does, you can do yourself if given enough time, experience, and money. Finally, for security-conscious organizations, Datacoves is the only solution on the market that can be deployed in your private cloud with white-glove enterprise support.

Datacoves isn’t just a platform—it’s a partnership designed to help your data team unlock their potential. With infrastructure taken care of, your team can focus on what they do best: generating actionable insights and maximizing your ROI.

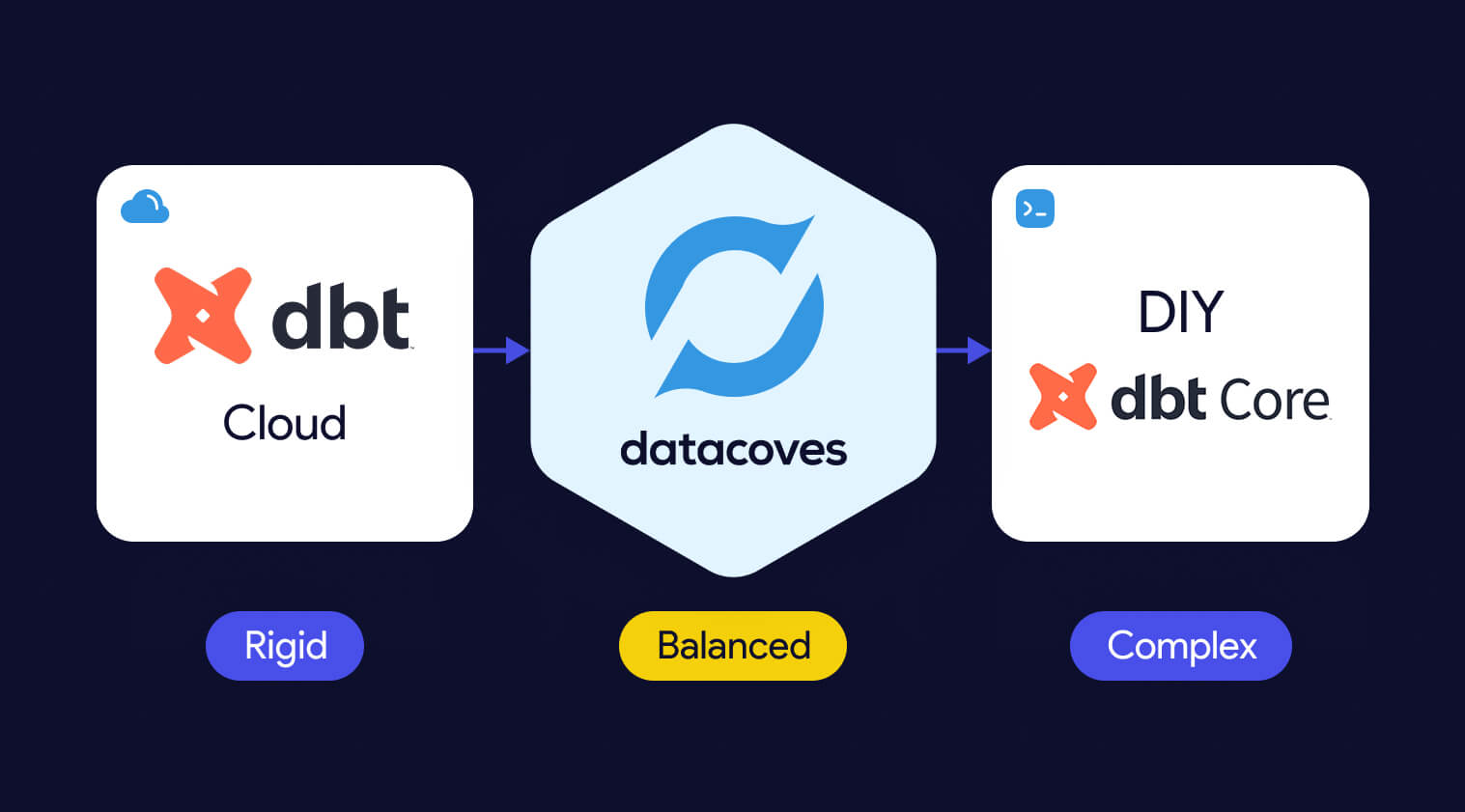

The build vs. buy debate has long been a challenge for data teams, with building offering flexibility at the cost of complexity, and buying sacrificing flexibility for simplicity. As discussed earlier in the article, solutions like dbt and Airflow are powerful, but managing them in-house requires significant time, resources, and expertise. On the other hand, managed offerings like dbt Cloud and MWAA simplify operations but often limit customization and control.

Datacoves bridges this gap, providing a managed platform that delivers the flexibility and control of a custom build without the operational headaches. By eliminating the need to manage infrastructure, scaling, and security, Datacoves enables data teams to focus on what matters most: delivering actionable insights and driving business outcomes.

As highlighted in Fundamentals of Data Engineering, data teams should prioritize extracting value from data rather than managing the tools that support them. Datacoves embodies this principle, making the argument to build obsolete. Why spend weeks—or even months—building when you can have the customization and adaptability of a build with the ease of a buy? Datacoves is not just a solution; it’s a rethinking of how modern data teams operate, helping you achieve your goals faster, with fewer trade-offs.

The top dbt alternatives include Datacoves, SQLMesh, Bruin Data, Dataform, and visual ETL tools such as Alteryx, Matillion, and Informatica. Code-first engines offer stronger rigor, testing, and CI/CD, while GUI platforms emphasize ease of use and rapid prototyping. Teams choose these alternatives when they need more security, governance, or flexibility than dbt Core or dbt Cloud provide.

Teams explore dbt alternatives when they need stronger governance, private deployments, or support for Python and code-first workflows that go beyond SQL. Many also prefer GUI-based ETL tools for faster onboarding. Recent market consolidation, including Fivetran acquiring SQLMesh and merging with dbt Labs, has increased concerns about vendor lock-in, which makes tool neutrality and platform flexibility more important than ever.

Teams look for dbt alternatives when they need stronger orchestration, consistent development environments, Python support, or private cloud deployment options that dbt Cloud does not provide.

Organizations evaluating dbt alternatives typically compare tools across three categories. Each category reflects a different approach to data transformation, development preferences, and organizational maturity.

Organizations consider alternatives to dbt Cloud when they need more flexibility, stronger security, or support for development workflows that extend beyond dbt. Teams comparing platform options often begin by evaluating the differences between dbt Cloud vs dbt Core.

Running enterprise-scale ELT pipelines often requires a full orchestration layer, consistent development environments, and private deployment options that dbt Cloud does not provide. Costs can also increase at scale (see our breakdown of dbt pricing considerations), and some organizations prefer to avoid features that are not open source to reduce long-term vendor lock-in.

This category includes platforms that deliver the benefits of dbt Cloud while providing more control, extensibility, and alignment with enterprise data platform requirements.

Datacoves provides a secure, flexible platform that supports dbt, SQLMesh, and Bruin in a unified environment with private cloud or VPC deployment.

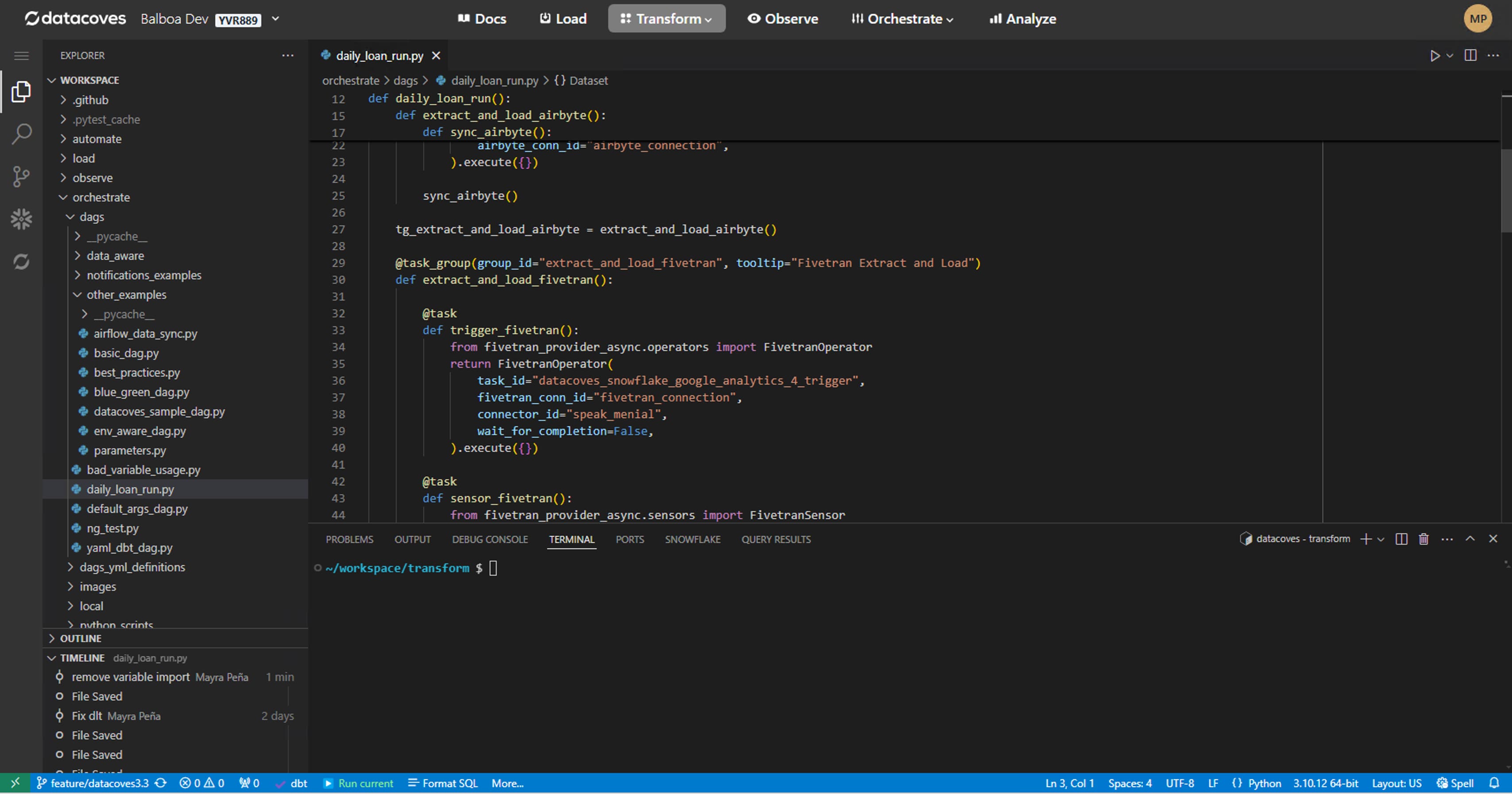

Datacoves is an enterprise data platform that serves as a secure, flexible alternative to dbt Cloud. It supports dbt Core, SQLMesh, and Bruin inside a unified development and orchestration environment, and it can be deployed in your private cloud or VPC for full control over data access and governance.

Benefits

Flexibility and Customization:

Datacoves provides a customizable in-browser VS Code IDE, Git workflows, and support for Python libraries and VS Code extensions. Teams can choose the transformation engine that fits their needs without being locked into a single vendor.

Handling Enterprise Complexity:

Datacoves includes managed Airflow for end-to-end orchestration, making it easy to run dbt and Airflow together without maintaining your own infrastructure. It standardizes development environments, manages secrets, and supports multi-team and multi-project workflows without platform drift.

Cost Efficiency:

Datacoves reduces operational overhead by eliminating the need to maintain separate systems for orchestration, environments, CI, logging, and deployment. Its pricing model is predictable and designed for enterprise scalability.

Data Security and Compliance:

Datacoves can be deployed fully inside your VPC or private cloud. This gives organizations complete control over identity, access, logging, network boundaries, and compliance with industry and internal standards.

Reduced Vendor Lock-In:

Datacoves supports dbt, SQLMesh, and Bruin Data, giving teams long-term optionality. This avoids being locked into a single transformation engine or vendor ecosystem.

Running dbt Core yourself is a flexible option that gives teams full control over how dbt executes. It is also the most resource-intensive approach. Teams choosing DIY dbt Core must manage orchestration, scheduling, CI, secrets, environment consistency, and long-term platform maintenance on their own.

Benefits

Full Control:

Teams can configure dbt Core exactly as they want and integrate it with internal tools or custom workflows.

Cost Flexibility:

There are no dbt Cloud platform fees, but total cost of ownership often increases as the system grows.

Considerations

High Maintenance Overhead:

Teams must maintain Airflow or another orchestrator, build CI pipelines, manage secrets, and keep development environments consistent across users.

Requires Platform Engineering Skills:

DIY dbt Core works best for teams with strong Kubernetes, CI, Python, and DevOps expertise. Without this expertise, the environment becomes fragile over time.

Slow to Scale:

As more engineers join the team, keeping dbt environments aligned becomes challenging. Onboarding, upgrades, and platform drift create operational friction.

Security and Compliance Responsibility:

Identity, permissions, logging, and network controls must be designed and maintained internally, which can be significant for regulated organizations.

Teams that prefer code-first tools often look for dbt alternatives that provide strong SQL modeling, Python support, and seamless integration with CI/CD workflows and automated testing. These are part of a broader set of data transformation tools. Code-based ETL tools give developers greater control over transformations, environments, and orchestration patterns than GUI platforms. Below are four code-first contenders that organizations should evaluate.

Code-first dbt alternatives like SQLMesh, Bruin Data, and Dataform provide stronger CI/CD integration, automated testing, and more control over complex transformation workflows.

SQLMesh is an open-source framework for SQL and Python-based data transformations. It provides strong visibility into how changes impact downstream models and uses virtual data environments to preview changes before they reach production.

Benefits

Efficient Development Environments:

Virtual environments reduce unnecessary recomputation and speed up iteration.

Considerations

Part of the Fivetran Ecosystem:

SQLMesh was acquired by Fivetran, which may influence its future roadmap and level of independence.

Dataform is a SQL-based transformation framework built specifically for BigQuery. It enables teams to create table definitions, manage dependencies, document models, and configure data quality tests inside the Google Cloud ecosystem. It also provides version control and integrates with GitHub and GitLab.

Benefits

Centralized BigQuery Development:

Dataform keeps all modeling and testing within BigQuery, reducing context switching and making it easier for teams to collaborate using familiar SQL workflows.

Considerations

Focused Only on the GCP Ecosystem:

Because Dataform is geared toward BigQuery, it may not be suitable for organizations that use multiple cloud data warehouses.

AWS Glue is a serverless data integration service that supports Python-based ETL and transformation workflows. It works well for organizations operating primarily in AWS and provides native integration with services like S3, Lambda, and Athena.

Benefits

Python-First ETL in AWS:

Glue supports Python scripts and PySpark jobs, making it a good fit for engineering teams already invested in the AWS ecosystem.

Considerations

Requires Engineering Expertise:

Glue can be complex to configure and maintain, and its Python-centric approach may not be ideal for SQL-first analytics teams.

Bruin is a modern SQL-based data modeling framework designed to simplify development, testing, and environment-aware deployments. It offers a familiar SQL developer experience while adding guardrails and automation to help teams manage complex transformation logic.

Benefits

Modern SQL Modeling Experience:

Bruin provides a clean SQL-first workflow with strong dependency management and testing.

Considerations

Growing Ecosystem:

Bruin is newer than dbt and has a smaller community and fewer third-party integrations.

While code-based transformation tools provide the most flexibility and long-term maintainability, some organizations prefer graphical user interface (GUI) tools. These platforms use visual, drag-and-drop components to build data integration and transformation workflows. Many of these platforms fall into the broader category of no-code ETL tools. GUI tools can accelerate onboarding for teams less comfortable with code editors and may simplify development in the short term. Below are several GUI-based options that organizations often consider as dbt alternatives.

GUI-based dbt alternatives such as Matillion, Informatica, and Alteryx use drag-and-drop interfaces that simplify development and accelerate onboarding for mixed-skill teams.

Matillion is a cloud-based data integration platform that enables teams to design ETL and transformation workflows through a visual, drag-and-drop interface. It is built for ease of use and supports major cloud data warehouses such as Amazon Redshift, Google BigQuery, and Snowflake.

Benefits

User-Friendly Visual Development:

Matillion simplifies pipeline building with a graphical interface, making it accessible for users who prefer low-code or no-code tooling.

Considerations

Limited Flexibility for Complex SQL Modeling:

Matillion’s visual approach can become restrictive for advanced transformation logic or engineering workflows that require version control and modular SQL development.

Informatica is an enterprise data integration platform with extensive ETL capabilities, hundreds of connectors, data quality tooling, metadata-driven workflows, and advanced security features. It is built for large and diverse data environments.

Benefits

Enterprise-Scale Data Management:

Informatica supports complex data integration, governance, and quality requirements, making it suitable for organizations with large data volumes and strict compliance needs.

Considerations

High Complexity and Cost:

Informatica’s power comes with a steep learning curve, and its licensing and operational costs can be significant compared to lighter-weight transformation tools.

Alteryx is a visual analytics and data preparation platform that combines data blending, predictive modeling, and spatial analysis in a single GUI-based environment. It is designed for analysts who want to build workflows without writing code and can be deployed on-premises or in the cloud.

Benefits

Powerful GUI Analytics Capabilities:

Alteryx allows users to prepare data, perform advanced analytics, and generate insights in one tool, enabling teams without strong coding skills to automate complex workflows.

Considerations

High Cost and Limited SQL Modeling Flexibility:

Alteryx is one of the more expensive platforms in this category and is less suited for SQL-first transformation teams who need modular modeling and version control.

Azure Data Factory (ADF) is a fully managed, serverless data integration service that provides a visual interface for building ETL and ELT pipelines. It integrates natively with Azure storage, compute, and analytics services, allowing teams to orchestrate and monitor pipelines without writing code.

Benefits

Strong Integration for Microsoft-Centric Teams:

ADF connects seamlessly with other Azure services and supports a pay-as-you-go model, making it ideal for organizations already invested in the Microsoft ecosystem.

Considerations

Limited Transformation Flexibility:

ADF excels at data movement and orchestration but offers limited capabilities for complex SQL modeling, making it less suitable as a primary transformation engine.

Talend provides an end-to-end data management platform with support for batch and real-time data integration, data quality, governance, and metadata management. Talend Data Fabric combines these capabilities into a single low-code environment that can run in cloud, hybrid, or on-premises deployments.

Benefits

Comprehensive Data Quality and Governance:

Talend includes built-in tools for data cleansing, validation, and stewardship, helping organizations improve the reliability of their data assets.

Considerations

Broad Platform, Higher Operational Complexity:

Talend’s wide feature set can introduce complexity, and teams may need dedicated expertise to manage the platform effectively.

SQL Server Integration Services (SSIS) is part of the Microsoft SQL Server ecosystem and provides data integration and transformation workflows. It supports extracting, transforming, and loading data from a wide range of sources, and offers graphical tools and wizards for designing ETL pipelines.

Benefits

Strong Fit for SQL Server-Centric Teams:

SSIS integrates deeply with SQL Server and other Microsoft products, making it a natural choice for organizations with a Microsoft-first architecture.

Considerations

Not Designed for Modern Cloud Data Warehouses:

SSIS is optimized for on-premises SQL Server environments and is less suitable for cloud-native architectures or modern ELT workflows.

Recent consolidation, including Fivetran acquiring SQLMesh and merging with dbt Labs, has increased concerns about vendor lock-in and pushed organizations to evaluate more flexible transformation platforms.

Organizations explore dbt alternatives when dbt no longer meets their architectural, security, or workflow needs. As teams scale, they often require stronger orchestration, consistent development environments, mixed SQL and Python workflows, and private deployment options that dbt Cloud does not provide.

Some teams prefer code-first engines for deeper CI/CD integration, automated testing, and strong guardrails across developers. Others choose GUI-based tools for faster onboarding or broader integration capabilities. Recent market consolidation, including Fivetran acquiring SQLMesh and merging with dbt Labs, has also increased concerns about vendor lock-in.

These factors lead many organizations to evaluate tools that better align with their governance requirements, engineering preferences, and long-term strategy.

DIY dbt Core offers full control but requires significant engineering work to manage orchestration, CI/CD, security, and long-term platform maintenance.

Running dbt Core yourself can seem attractive because it offers full control and avoids platform subscription costs. However, building a stable, secure, and scalable dbt environment requires significantly more than executing dbt build on a server. It involves managing orchestration and CI/CD, keeping development environments consistent, and maintaining the platform over the long term, all of which require mature DataOps practices.

The true question for most organizations is not whether they can run dbt Core themselves, but whether it is the best use of engineering time. This is essentially a build vs. buy question for your data platform. DIY dbt platforms often start simple and gradually accumulate technical debt as teams grow, pipelines expand, and governance requirements increase.

For many organizations, DIY works in the early stages but becomes difficult to sustain as the platform matures.

The right dbt alternative depends on your team’s skills, governance requirements, pipeline complexity, and long-term data platform strategy.

Selecting the right dbt alternative depends on your team’s skills, security requirements, and long-term data platform strategy. Each category of tools solves different problems, so it is important to evaluate your priorities before committing to a solution.

If these are priorities, a platform with secure deployment options or multi-engine support may be a better fit than dbt Cloud.

Recent consolidation in the ecosystem has raised concerns about vendor dependency. Organizations that want long-term flexibility often look for:

Consider platform fees, engineering maintenance, onboarding time, and the cost of additional supporting tools such as orchestrators, IDEs, and environment management.

dbt remains a strong choice for SQL-based transformations, but it is not the only option. As organizations scale, they often need stronger orchestration, consistent development environments, Python support, and private deployment capabilities that dbt Cloud or DIY dbt Core may not provide. Evaluating alternatives helps ensure that your transformation layer aligns with your long-term platform and governance strategy.

Code-first tools like SQLMesh, Bruin Data, and Dataform offer strong engineering workflows, while GUI-based tools such as Matillion, Informatica, and Alteryx support faster onboarding for mixed-skill teams. The right choice depends on the complexity of your pipelines, your team’s technical profile, and the level of security and control your organization requires.

Datacoves provides a flexible, secure alternative that supports dbt, SQLMesh, and Bruin in a unified environment. With private cloud or VPC deployment, managed Airflow, and a standardized development experience, Datacoves helps teams avoid vendor lock-in while gaining an enterprise-ready platform for analytics engineering.

Selecting the right dbt alternative is ultimately about aligning your transformation approach with your data architecture, governance needs, and long-term strategy. Taking the time to assess these factors will help ensure your platform remains scalable, secure, and flexible for your future needs.

There's a lot of buzz around Microsoft Fabric these days. Some people are all-in, singing its praises from the rooftops, while others are more skeptical, waving the "buyer beware" flag. After talking with the community and observing Fabric in action, we're leaning toward caution. Why? Well, like many things in the Microsoft ecosystem, it's a jack of all trades but a master of none. Many of the promises seem to be more marketing hype than substance, leaving you with "marketecture" instead of solid architecture. While the product has admirable, lofty goals, Microsoft has many wrinkles to iron out.

In this article, we'll dive into 10 reasons why Microsoft Fabric might not be the best fit for your organization in 2025. By examining both the promises and the current realities of Microsoft Fabric, we hope to equip you with the information needed to make an informed decision about its adoption.

Microsoft Fabric is marketed as a unified, cloud-based data platform developed to streamline data management and analytics within organizations. Its goal is to integrate various Microsoft services into a single environment and to centralize and simplify data operations.

This means that Microsoft Fabric is positioning itself as an all-in-one analytics platform designed to handle a wide range of data-related tasks: data engineering, data integration, data warehousing, data science, real-time analytics, and business intelligence. A one-stop shop, if you will. By consolidating these functions, Fabric hopes to provide a seamless experience for organizations to manage, analyze, and gather insights from their data.

Fabric presents itself as an all-in-one solution, but is it really? Let’s break down where the marketing meets reality.

While Microsoft positions Fabric as an innovative step forward, much of it is clever marketing and repackaging of existing tools. Here’s what’s claimed and the reality behind these claims:

Claim: Fabric combines multiple services into a seamless platform, aiming to unify and simplify workflows, reduce tool sprawl, and make collaboration easier with a one-stop shop.

Reality:

Claim: Fabric offers a scalable and flexible platform.

Reality: In practice, managing scalability in Fabric can be difficult. Scaling isn’t a one‑click, all‑services solution—instead, it requires dedicated administrative intervention. For example, you often have to manually pause and un-pause capacity to save money, a process that is far from ideal if you’re aiming for automation. Although there are ways to automate these operations, setting up such automation is not straightforward. Additionally, scaling isn’t uniform across the board; each service or component must be configured individually, meaning that you must treat them on a case‑by‑case basis. This reality makes the promise of scalability and flexibility a challenge to realize without significant administrative overhead.
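One way to approach that automation is to separate the decision (should the capacity be running right now?) from the call that actually pauses or resumes it. The sketch below covers only the decision. The weekday business-hours window is an assumed policy, and the pause or resume call itself is deliberately left out because it depends on how your Azure environment is set up.

```python
from datetime import datetime, time


def capacity_should_run(now: datetime,
                        start: time = time(7, 0),
                        end: time = time(19, 0)) -> bool:
    """Return True during weekday business hours (an assumed policy)."""
    is_weekday = now.weekday() < 5  # Monday=0 ... Friday=4
    return is_weekday and start <= now.time() < end


def desired_action(now: datetime, currently_running: bool) -> str:
    """Map the schedule and current state to 'pause', 'resume', or 'none'."""
    should_run = capacity_should_run(now)
    if should_run and not currently_running:
        return "resume"
    if not should_run and currently_running:
        return "pause"
    return "none"


# Example: a Monday evening with the capacity still running.
print(desired_action(datetime(2025, 3, 3, 22, 0), currently_running=True))
```

A scheduler would call `desired_action` periodically and trigger the matching operation. Someone still has to build, secure, and monitor that job, which is the administrative overhead described above.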

Claim: Fabric offers predictable, cost-effective pricing.

Reality: While Fabric's pricing structure appears straightforward, several hidden costs and adoption challenges can impact overall expenses and efficiency:

All this to say that the pricing model works well only if you can predict with great accuracy exactly how much you will spend every single day, and who knows that? Check out this article on the hidden cost of Fabric, which goes into detail and cost comparisons.

Claim: Fabric supports a wide range of data tools and integrations.

Reality: Fabric is built around tight integration with other Fabric services and Microsoft tools such as Office 365 and Power BI, making it less ideal for organizations that prefer a “best-of-breed” approach or rely on tools like Tableau, Looker, open-source solutions like Lightdash, or other non-Microsoft solutions. This can severely limit flexibility and complicate future migrations.

While third-party connections are possible, they don’t integrate as smoothly as those in the MS ecosystem like Power BI, potentially forcing organizations to switch tools just to make Fabric work.

Claim: Fabric simplifies automation and deployment for data teams by supporting modern DataOps workflows.

Reality: Despite some scripting support, many components remain heavily UI‑driven. This hinders full automation and integration with established best practices for CI/CD pipelines (e.g., using Terraform, dbt, or Airflow). Organizations that want to mature their data operations with agile DataOps practices find themselves forced into manual workarounds and struggle to integrate Fabric tools into their CI/CD processes. Unlike tools such as dbt, Fabric has no built-in Data Quality or Unit Testing, so additional tools would need to be added to achieve this functionality.

Claim: Microsoft Fabric provides enterprise-grade security, compliance, and governance features.

Reality: While Microsoft Fabric offers robust security measures like data encryption, role-based access control, and compliance with various regulatory standards, there are some concerns organizations should consider.

One major complaint is that access permissions do not always persist consistently across Fabric services, leading to unintended data exposure.

For example, users can still retrieve restricted data from reports due to how Fabric handles permissions at the semantic model level. Even when specific data is excluded from a report, built-in features may allow users to access the data, creating compliance risks and potential unauthorized access. Read more: Zenity - Inherent Data Leakage in Microsoft Fabric.

While some of these security risks can be mitigated, they require additional configurations and ongoing monitoring, making management more complex than it should be. Ideally, these protections should be unified and work out of the box rather than requiring extra effort to lock down sensitive data.

Claim: Fabric is presented as a mature, production-ready analytics platform.

Reality: The good news for Fabric is that it is still evolving. The bad news is, it's still evolving. That evolution impacts users in several ways:

Claim: Fabric automates many complex data processes to simplify workflows.

Reality: Fabric is heavy on abstractions and this can be a double‑edged sword. While at first it may appear to simplify things, these abstractions lead to a lack of visibility and control. When things go wrong it is hard to debug and it may be difficult to fine-tune performance or optimize costs.

For organizations that need deep visibility into query performance, workload scheduling, or resource allocation, Fabric lacks the granular control offered by competitors like Databricks or Snowflake.

Claim: Fabric offers comprehensive resource governance and robust alerting mechanisms, enabling administrators to effectively manage and troubleshoot performance issues.

Reality: Fabric currently lacks fine-grained resource governance features making it challenging for administrators to control resource consumption and mitigate issues like the "noisy neighbor" problem, where one service consumes disproportionate resources, affecting others.

The platform's alerting mechanisms are also underdeveloped. While some basic alerting features exist, they often fail to provide detailed information about which processes or users are causing issues. This can make debugging an absolute nightmare. For example, users have reported challenges in identifying specific reports causing slowdowns due to limited visibility in the capacity metrics app. This lack of detailed alerting makes it difficult for administrators to effectively monitor and troubleshoot performance issues, and it often requires adopting third-party tools for more granular governance and alerting capabilities. In other words, not so all-in-one in this case.

Claim: Fabric aims to be an all-in-one platform that covers every aspect of data management.

Reality: Despite its broad ambitions, key features are missing such as:

While these are just a couple of examples, it's important to note that missing features will compel users to seek third-party tools to fill the gaps, introducing additional complexity. Integrating external solutions is not always straightforward with Microsoft products and often introduces a lot of overhead. Alternatively, users will have to go without the features and create workarounds or add more tools, which we know will lead to issues down the road.

Microsoft Fabric promises a lot, but its current execution falls short. Instead of an innovative new platform, Fabric repackages existing services, often making things more complex rather than simpler.

That’s not to say Fabric won’t improve—Microsoft has the resources to refine the platform. But as of 2025, the downsides outweigh the benefits for many organizations.

If your company values flexibility, cost control, and seamless third-party integrations, Fabric may not be the best choice. There are more mature, well-integrated, and cost-effective alternatives that offer the same features without the Microsoft lock-in.

Time will tell if Fabric evolves into the powerhouse it aspires to be. For now, the smart move is to approach it with a healthy dose of skepticism.

👉 Before making a decision, thoroughly evaluate how Fabric fits into your data strategy. Need help assessing your options? Check out this data platform evaluation worksheet.


Enterprises are increasingly relying on dbt (Data Build Tool) for their data analytics; however, dbt wasn’t designed to be an enterprise-ready platform on its own. This leads to struggles with scalability, orchestration, governance, and operational efficiency when implementing dbt at scale. But if dbt is so amazing, why is this the case? As our title suggests, you need more than just dbt to have a successful dbt analytics implementation. Keep reading to learn exactly what you need to supercharge your data analytics with dbt.

dbt is popular because it solves problems facing the data analytics world. Enterprises today are dealing with growing volumes of data, making efficient data transformation a critical part of their analytics strategy. Traditionally, data transformation was handled using complex ETL (Extract, Transform, Load) processes, where data engineers wrote custom scripts to clean, structure, and prepare data before loading it into a warehouse. However, this approach has several challenges:

dbt (Data Build Tool) transforms this paradigm by enabling SQL-based, modular, and version-controlled transformations directly inside the data warehouse. By following the ELT (Extract, Load, Transform) approach, dbt allows raw data to be loaded into the warehouse first, then transformed within the warehouse itself—leveraging the scalability and processing power of modern cloud data platforms.

Unlike traditional ETL tools, dbt applies software engineering best practices to SQL-based transformations, making it easier to develop, test, document, and scale data pipelines. This shift has made dbt a preferred solution for enterprises looking to empower analysts, improve collaboration, and create maintainable data workflows.

With these benefits it is clear why over 40,000 companies are leveraging dbt today!

Despite dbt’s strengths, enterprises face several challenges when implementing it at scale for a variety of reasons:

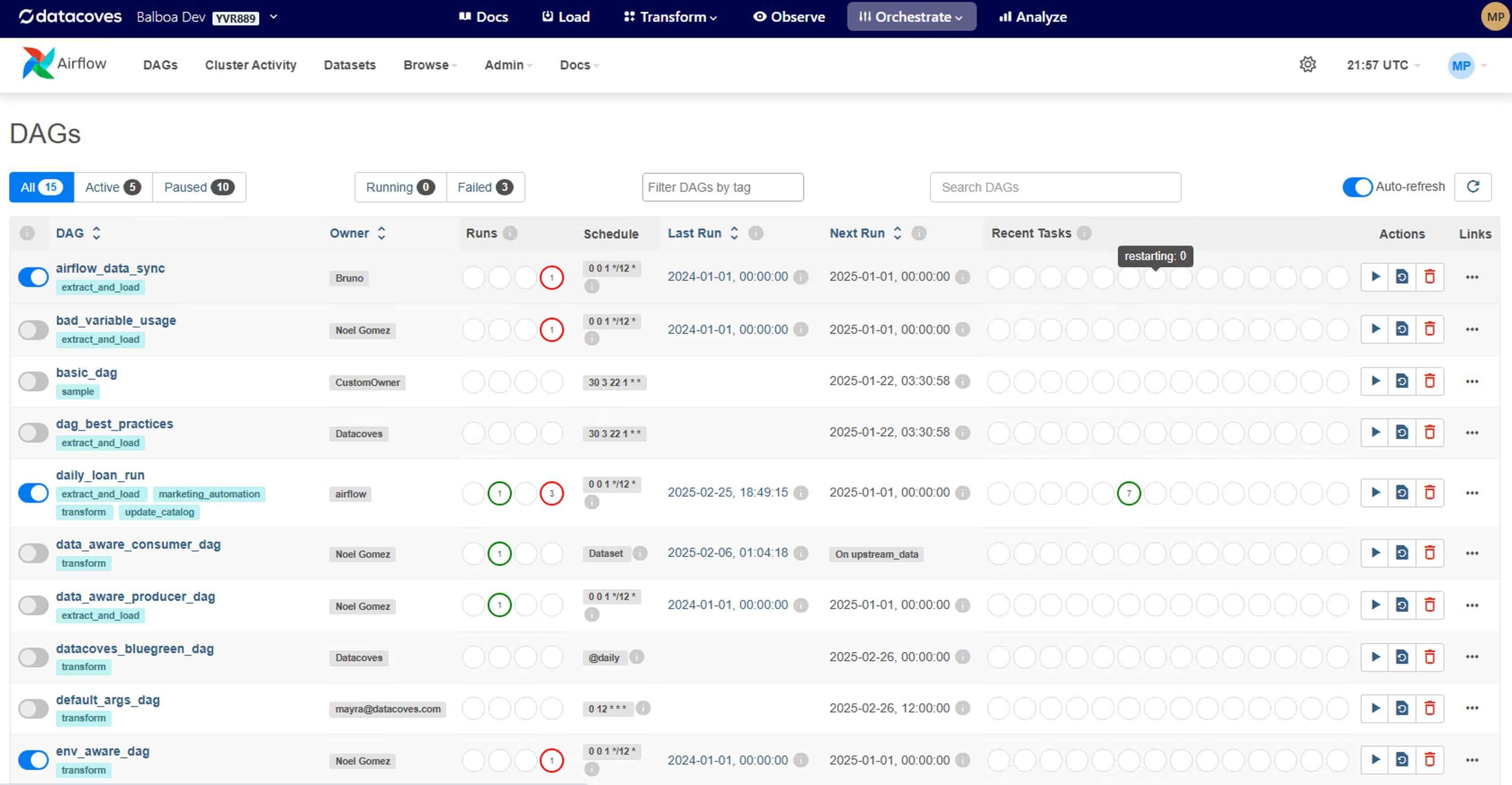

Running dbt in production requires robust orchestration beyond simple scheduled jobs. dbt only manages transformations, but a complete end-to-end pipeline includes Extracting, Loading and Visualizing of data. To manage the full end-to-end data pipeline (ELT + Viz) organizations will need a full-fledged orchestrator like Airflow. While there are other orchestration options on the market, Airflow and dbt are a common pattern.

CI/CD pipelines are essential for dbt at the enterprise level, yet one of dbt Core’s major limitations is the lack of a built-in CI/CD pipeline for managing deployments. This makes workflows more complex and increases the likelihood of errors reaching production. To address this, teams can implement external tools like Jenkins, GitHub Actions, or GitLab Workflows that provide a flexible and customizable CI/CD process to automate deployments and enforce best practices.

While dbt Cloud does offer an out-of-the-box CI/CD solution, it lacks customization options. Some organizations find that their use cases demand greater flexibility, requiring them to build their own CI/CD processes instead.
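As a rough illustration of what a custom CI step might run, the sketch below assembles a dbt command that builds only modified models and their children, deferring everything else to production artifacts. The function name, the `prod-artifacts` directory, and the `ci` target are hypothetical; the flags themselves (`--select state:modified+`, `--defer`, `--state`, `--target`) are standard dbt CLI options. A CI tool such as GitHub Actions or Jenkins would call a script like this in its job definition.

```python
import shlex


def build_ci_command(state_dir, target="ci"):
    """Return a dbt invocation that builds modified models plus their children.

    `state_dir` is assumed to hold the manifest from the last production run.
    """
    return [
        "dbt", "build",
        "--select", "state:modified+",  # changed models and everything downstream
        "--defer",                      # resolve unchanged parents from production
        "--state", state_dir,
        "--target", target,
    ]


if __name__ == "__main__":
    # Print the command so a CI job can log exactly what it is about to run.
    print(shlex.join(build_ci_command("prod-artifacts")))
```

Keeping the command in a small function makes it easy to unit test and to reuse across CI providers.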

Enterprises seek alternative solutions that provide greater control, scalability, and security over their data platform. However, this comes with the responsibility of managing their own infrastructure, which introduces significant operational overhead ($$$). Solutions like dbt Cloud do not offer Virtual Private Cloud (VPC) deployment, full CI/CD flexibility, or a fully-fledged orchestrator, leaving organizations to handle additional platform components.

We saw a need for a middle ground that combined the best of both worlds; something as flexible as dbt Core and Airflow, but fully managed like dbt Cloud. This led to Datacoves which provides a seamless experience with no platform maintenance overhead or onboarding hassles. Teams can focus on generating insights from data and not worry about the platform.

Vendor lock-in is a major concern for organizations that want to maintain flexibility and avoid being tied to a single provider. The ability to switch out tools easily without excessive cost or effort is a key advantage of the modern data stack. Enterprises benefit from mixing and matching best-in-class solutions that meet their specific needs.

Datacoves is a fully managed enterprise platform for dbt, solving the challenges outlined above. Below is how Datacoves' features align with enterprise needs:

Datacoves offers flexible deployment and pricing options to accommodate various enterprise needs:

Datacoves is committed to delivering enterprise-grade support and resources through our white-glove service:

Enterprises need more than just dbt to achieve scalable and efficient analytics. While dbt is a powerful tool for data transformation, it lacks the necessary infrastructure, governance, and orchestration capabilities required for enterprise-level deployments. Datacoves fills these gaps by providing a fully managed environment that integrates dbt Core, VS Code, Airflow, and Kubernetes-based deployments, making it the ultimate solution for organizations looking to scale dbt successfully.

The latest release, dbt 1.9, introduces some exciting features and updates meant to enhance functionality and tackle some pain points of dbt. With improvements like the microbatch incremental strategy, snapshot enhancements, Iceberg table format support, and streamlined CI workflows, dbt 1.9 continues to help data teams work smarter, faster, and with greater precision. All the more reason to start using dbt today!

We looked through the release notes, so you don’t have to. This article highlights the key updates in dbt 1.9, giving you the insights needed to upgrade confidently and unlock new possibilities for your data workflows. If you need a flexible dbt and Airflow experience, Datacoves might be right for your organization. Lower your total cost of ownership by 50% and shorten your time to market today!

If you are upgrading from dbt 1.7 or earlier, you will need to install both dbt-core and the appropriate adapter. This requirement stems from the decoupling introduced in dbt 1.8, a change that enhances modularity and flexibility in dbt’s architecture. These updates demonstrate dbt’s commitment to providing a streamlined and adaptable experience for its users while ensuring compatibility with modern tools and workflows.

pip install dbt-core dbt-snowflake

In dbt 1.9, the microbatch incremental strategy is a new way to process massive datasets. In earlier versions of dbt, incremental materialization was available to process datasets that were too large to drop and recreate at every build. However, it struggled with very large datasets that are too large to process in a single query. This limitation led to timeouts and complex query management.

The microbatch incremental strategy comes to the rescue by breaking large datasets into smaller chunks for processing using the batch_size, event_time, and lookback configurations to automatically generate the necessary filters for you. However, at the time of this publication this feature is only available on the following adapters: Postgres, Redshift, Snowflake, BigQuery, Spark, and Databricks, with more on the way.

Using the event_time, lookback, and batch_size configurations, dbt will generate the necessary filters for each batch. One less thing to worry about!

dbt splits the data into batches based on the batch_size you set. Each batch is processed separately and in parallel, unless you disable this feature using the +concurrent_batches config. This independence in batch processing improves performance, minimizes the risk of query failures, allows you to retry failed batches using the dbt retry command, and provides the granularity to load specific batches. Gotta love the control without the extra legwork!
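To make the batching idea concrete, here is a simplified sketch of how daily batch windows and their filters could be derived from an event-time column, a batch size of one day, and a lookback. This is an illustration only, not dbt's actual implementation; the function names and the `ordered_at` column are hypothetical.

```python
from datetime import datetime, timedelta


def daily_batches(checkpoint, now, lookback=1):
    """Return [start, end) windows, one per day.

    Starts `lookback` days before the day containing `checkpoint` and
    ends with the day containing `now`.
    """
    day = timedelta(days=1)
    start = checkpoint.replace(hour=0, minute=0, second=0, microsecond=0) - lookback * day
    windows = []
    while start <= now:
        windows.append((start, start + day))
        start += day
    return windows


def batch_filter(event_time_column, start, end):
    """Build the filter for one batch as a half-open date interval."""
    return (
        f"{event_time_column} >= '{start:%Y-%m-%d}' "
        f"and {event_time_column} < '{end:%Y-%m-%d}'"
    )


if __name__ == "__main__":
    last_loaded = datetime(2024, 10, 3, 8, 0)    # latest event already in the table
    run_started = datetime(2024, 10, 4, 12, 0)   # when this run begins
    for start, end in daily_batches(last_loaded, run_started, lookback=1):
        print(batch_filter("ordered_at", start, end))
```

Because each window is independent, any single batch can be retried or reloaded without touching the others, which is the property the microbatch strategy relies on.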

To take advantage of the microbatch incremental strategy, first upgrade to dbt 1.9 and ensure your project is configured correctly. By default, dbt will handle the microbatch logic for you, as explained above. However, if you’re using custom logic, such as a custom microbatch macro, don’t forget to set the require_batched_execution_for_custom_microbatch_strategy behavior flag to True in your dbt_project.yml file. This prevents deprecation warnings and ensures dbt knows how to handle your custom configuration.

If you have a custom microbatch implementation but wish to migrate, it's important to note that earlier versions required setting the environment variable DBT_EXPERIMENTAL_MICROBATCH to enable microbatching, but this is no longer needed. Starting with Core 1.9, the microbatch strategy works seamlessly out of the box, so you can remove the variable.

With dbt 1.9, snapshots have become easier to use than ever! This is great news for dbt users since snapshots in dbt allow you to capture the state of your data at specific points in time, helping you track historical changes and maintain a clear picture of how your data evolves. Below are a couple of improvements to implement or be aware of.

With the snapshot_meta_column_names config, you now have the option to rename metadata fields to match your project's naming conventions. This added flexibility helps ensure consistency across your data models and simplifies collaboration within teams.

By default, the dbt_valid_to column is set to NULL for current records, but you can now configure it to a date with the dbt_valid_to_current config. It is important to note that dbt will not automatically adjust the current value in the existing dbt_valid_to column. This means that any existing current records will still have dbt_valid_to set to NULL, while new records will have this value set to your configured date. You will have to manually update existing data to match. Fewer NULL values to handle downstream!

The --empty flag is now supported for the dbt snapshot command, allowing you to execute snapshot operations without processing data. This enhancement is particularly useful in Continuous Integration (CI) environments, enabling the execution of unit tests for models downstream of snapshots without requiring actual data processing, which streamlines the testing process. The empty flag, introduced in dbt 1.8, also has some powerful applications in Slim CI for optimizing your CI/CD that are worth checking out.

The hard_deletes configuration enhances the management of deleted records in snapshots. This feature offers three methods: the default ignore, which takes no action on deleted records; invalidate, which replaces the invalidate_hard_deletes=true config and marks deleted records as invalid by setting their dbt_valid_to timestamp to the current time; and new_record, which tracks deletions by inserting a new record with the dbt_is_deleted column set to True.

It's important to note some migration efforts will be required for this. While the invalidate_hard_deletes configuration is still supported for existing snapshots, it cannot be used alongside hard_deletes. For new snapshots, it's recommended to use hard_deletes instead of the legacy invalidate_hard_deletes. If you switch an existing snapshot to use hard_deletes without migrating your data, you may encounter inconsistent or incorrect results, such as a mix of old and new data formats. Keep this in mind when implementing these new configs.
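The three hard_deletes modes can be pictured with a small in-memory simulation. This sketch is not how dbt implements snapshots; it only mirrors the behavior described above on a list of dictionaries. The helper name is hypothetical, and it assumes that new_record also closes the previous version of the row before inserting the deletion record.

```python
from datetime import datetime


def apply_hard_delete(snapshot_rows, deleted_id, mode, now):
    """Return new snapshot rows after the source row `deleted_id` disappears."""
    if mode not in ("ignore", "invalidate", "new_record"):
        raise ValueError(f"unknown hard_deletes mode: {mode}")
    rows = [dict(row) for row in snapshot_rows]  # copy so the input is untouched
    if mode == "ignore":
        return rows
    current = [r for r in rows if r["id"] == deleted_id and r["dbt_valid_to"] is None]
    for row in current:
        row["dbt_valid_to"] = now  # close the current version
    if mode == "new_record":
        for row in current:
            # Assumption: the deletion is recorded as a fresh, open-ended row.
            rows.append({**row, "dbt_valid_from": now,
                         "dbt_valid_to": None, "dbt_is_deleted": True})
    return rows


if __name__ == "__main__":
    loaded = datetime(2024, 1, 1)
    deleted = datetime(2024, 6, 1)
    snapshot = [{"id": 1, "dbt_valid_from": loaded, "dbt_valid_to": None}]
    for mode in ("ignore", "invalidate", "new_record"):
        print(mode, apply_hard_delete(snapshot, 1, mode, deleted))
```

Running the three modes side by side shows why mixing them on one snapshot without migrating data can leave records in inconsistent shapes.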

Testing is a vital part of maintaining high data quality and ensuring your data models work as intended. Unit testing was introduced in dbt 1.8 and has seen continued improvement in dbt 1.9.

You can now select specific unit tests with the unit_test: selector. This feature enables more granular control over test execution, allowing you to focus on particular tests without running the entire suite, thereby saving time and resources.

dbt test --select unit_test:my_project.my_unit_test

dbt build --select unit_test:my_project.my_unit_test

The results of dbt list --resource-type test now correctly include only data tests, excluding unit tests. This distinction enhances clarity and precision when managing different test types within your project. Unit tests can still be listed with the selector:

dbt ls --select unit_test:my_project.my_unit_test

In dbt version 1.9, the state:modified selector has been enhanced to improve the accuracy of Slim CI workflows. Previously, dynamic configurations, such as setting the database based on the environment, could lead dbt to perceive changes in models even when the actual model remained unchanged. These false positives caused Slim CI to rebuild all models unnecessarily.

By comparing unrendered configuration values, dbt now accurately detects genuine modifications, eliminating false positives during state comparisons. This improvement ensures that only truly modified models are selected for rebuilding, streamlining your CI processes.
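The difference between comparing rendered and unrendered values can be shown with a toy example. The `render` function below is a tiny stand-in for Jinja that only understands `{{ target.<key> }}` placeholders; it and `looks_modified` are hypothetical helpers for illustration, not part of dbt.

```python
def render(value, target):
    """Very small stand-in for Jinja: fill in {{ target.<key> }} placeholders."""
    for key, replacement in target.items():
        value = value.replace("{{ target." + key + " }}", replacement)
    return value


def looks_modified(prod_config, ci_config, prod_target, ci_target, compare_unrendered):
    """Decide whether a model's config counts as changed between two environments."""
    if compare_unrendered:
        # Compare the config exactly as written in the project files.
        return prod_config != ci_config
    # Compare the values after each environment has rendered them.
    rendered_prod = {k: render(v, prod_target) for k, v in prod_config.items()}
    rendered_ci = {k: render(v, ci_target) for k, v in ci_config.items()}
    return rendered_prod != rendered_ci


if __name__ == "__main__":
    config = {"database": "{{ target.database }}"}  # identical in both branches
    prod = {"database": "analytics"}
    ci = {"database": "analytics_ci"}
    print(looks_modified(config, config, prod, ci, compare_unrendered=False))
    print(looks_modified(config, config, prod, ci, compare_unrendered=True))
```

With rendered comparison the unchanged model looks modified simply because the environments differ; with unrendered comparison it does not.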

To enable this feature, set the state_modified_compare_more_unrendered_values flag to True in your dbt_project.yml file:

flags:
  state_modified_compare_more_unrendered_values: True

In dbt 1.9, the dbt docs serve command is more customizable thanks to a new --host flag. This flag allows users to specify the host address for serving documentation. Previously, dbt docs serve defaulted to binding the server to 127.0.0.1 (localhost) without an option to override this setting.

Users can now specify a custom host address using the --host flag when running dbt docs serve. This enhancement provides the flexibility to bind the documentation server to any desired address, accommodating various deployment needs. The --host flag continues to default to 127.0.0.1, ensuring backward compatibility and secure defaults.

dbt 1.9 includes several updates aimed at improving performance, usability, and compatibility across projects. These changes ensure a smoother experience for users while keeping dbt aligned with modern standards.

The dbt clone command now executes clone operations concurrently, enhancing efficiency and reducing execution time.

The dbt show and dbt compile commands now support parseable JSON and text outputs when run in quiet mode, facilitating easier integration with other tools and scripts by providing machine-readable outputs.

A new behavior change flag, skip_nodes_if_on_run_start_fails, has been introduced to gracefully handle failures in on-run-start hooks. When enabled, if an on-run-start hook fails, subsequent hooks and nodes are skipped, preventing partial or inconsistent runs.

dbt 1.9 introduces a range of powerful features and enhancements, reaffirming its role as a cornerstone tool for modern data transformations. The enhancements in this release reflect the community's commitment to innovation and excellence as well as its strength and vitality. There's no better time to join this dynamic ecosystem and elevate your data workflows!

If you're looking to implement dbt efficiently, consider partnering with Datacoves. We can help you reduce your total cost of ownership by 50% and accelerate your time to market. Book a call with us today to discover how we can help your organization in building a modern data stack with minimal technical debt.

Check out the full release notes.

dbt and Airflow are cornerstone tools in the modern data stack, each excelling in different areas of data workflows. Together, dbt and Airflow provide the flexibility and scalability needed to handle complex, end-to-end workflows.

This article delves into what dbt and Airflow are, why they work so well together, and the challenges teams face when managing them independently. It also explores how Datacoves offers a fully managed solution that simplifies operations, allowing organizations to focus on delivering actionable insights rather than managing infrastructure.

dbt (Data Build Tool) is an open-source analytics engineering framework that transforms raw data into analysis-ready datasets using SQL. It enables teams to write modular, version-controlled workflows that are easy to test and document, bridging the gap between analysts and engineers.

Apache Airflow is an open-source platform designed to orchestrate workflows and automate tasks. Initially created for ETL processes, it has evolved into a versatile solution for managing any sequence of tasks in data engineering, machine learning, or beyond.

While dbt excels at SQL-based data transformations, it has no built-in scheduler, and solutions like dbt Cloud’s scheduling capabilities are limited to triggering jobs in isolation or getting a trigger from an external source. This approach risks running transformations on stale or incomplete data if upstream processes fail. Airflow eliminates this risk by orchestrating tasks across the entire pipeline, ensuring transformations occur at the right time as part of a cohesive, integrated workflow.

Tools like Airbyte and Fivetran also provide built-in schedulers, but these are designed to load data at a given time and optionally trigger a dbt pipeline. As complexity grows and organizations need to trigger dbt pipelines after data loads from different tools such as dlt and Fivetran, this simple approach does not scale. It is also common to trigger operations after a dbt pipeline, and scheduling with the data loading tool will not handle that complexity. With dbt and Airflow, a team can connect the entire process and ensure that processes don’t run if upstream tasks fail or are delayed.

Airflow centralizes orchestration, automating the timing and dependencies of tasks—extracting and loading data, running dbt transformations, and delivering outputs. This connected approach reduces inefficiencies and ensures workflows run smoothly with minimal manual intervention.
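The core idea, running tasks in dependency order and holding back anything whose upstream failed, can be sketched in plain Python. This is not Airflow's API; it uses the standard library's `graphlib` (Python 3.9+), and the task names and the `run_pipeline` helper are hypothetical.

```python
from graphlib import TopologicalSorter


def run_pipeline(dependencies, tasks):
    """Run tasks in dependency order; skip any task whose upstream did not succeed.

    `dependencies` maps each task name to the set of tasks it waits on.
    `tasks` maps each task name to a zero-argument callable.
    """
    status = {}
    for name in TopologicalSorter(dependencies).static_order():
        upstream = dependencies.get(name, ())
        if any(status[u] != "success" for u in upstream):
            status[name] = "skipped"
            continue
        try:
            tasks[name]()
            status[name] = "success"
        except Exception:
            status[name] = "failed"
    return status


def failing_load():
    raise RuntimeError("source API unavailable")


if __name__ == "__main__":
    dependencies = {
        "load_fivetran": set(),
        "load_dlt": set(),
        "dbt_build": {"load_fivetran", "load_dlt"},
        "refresh_dashboard": {"dbt_build"},
    }
    tasks = {
        "load_fivetran": lambda: None,
        "load_dlt": failing_load,           # simulate a failed upstream load
        "dbt_build": lambda: None,
        "refresh_dashboard": lambda: None,
    }
    print(run_pipeline(dependencies, tasks))
```

In this run the dbt build and the dashboard refresh are skipped because one load failed, so transformations never run on incomplete data. An orchestrator like Airflow adds scheduling, retries, and visibility on top of the same dependency model.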

Modern data workflows extend beyond SQL transformations. Airflow complements dbt by supporting complex, multi-stage processes such as integrating APIs, executing Python scripts, and training machine learning models. This flexibility allows pipelines to adapt as organizational needs evolve.

Airflow also provides a centralized view of pipeline health, offering data teams complete visibility. With its ability to trace issues and manage dependencies, Airflow helps prevent cascading failures and keeps workflows reliable.

By combining dbt’s transformation strengths with Airflow’s orchestration capabilities, teams can move past fragmented processes. Together, these tools enable scalable, efficient analytics workflows, helping organizations focus on delivering actionable insights without being bogged down by operational hurdles.

In our previous article, we discussed building vs buying your Airflow and dbt infrastructure. There are many cons associated with self-hosting these two tools, but Datacoves takes the complexity out of managing dbt and Airflow by offering a fully integrated, managed solution. Datacoves has given many organizations the flexibility of open-source tools with the freedom of managed tools. See how we helped Johnson and Johnson MedTech migrate to our managed dbt and Airflow platform.

Datacoves offers the most flexible and robust managed dbt Core environment on the market, enabling teams to fully harness the power of dbt without the complexities of infrastructure management, environment setup, or upgrades. Here’s why our customers choose Datacoves to implement dbt:

Datacoves offers a fully managed Airflow environment, designed for scalability, reliability, and simplicity. Whether you're orchestrating complex ETL workflows, triggering dbt transformations, or integrating with third-party APIs, Datacoves takes care of the heavy lifting by managing the Kubernetes infrastructure, monitoring, and scaling. Here’s what sets Datacoves apart as a managed Airflow solution:

dbt and Airflow are a natural pair in the Modern Data Stack. dbt’s powerful SQL-based transformations enable teams to build clean, reliable datasets, while Airflow orchestrates these transformations within a larger, cohesive pipeline. Their combination allows teams to focus on delivering actionable insights rather than managing disjointed processes or stale data.

However, managing these tools independently can introduce challenges, from infrastructure setup to scaling and ongoing maintenance. That’s where platforms like Datacoves make a difference. For organizations seeking to unlock the full potential of dbt and Airflow without the operational overhead, solutions like Datacoves provide the scalability and efficiency needed to modernize data workflows and accelerate insights.

Book a call today to see how Datacoves can help your organization realize the power of Airflow and dbt.

Not long ago, the data analytics world relied on monolithic infrastructures—tightly coupled systems that were difficult to scale, maintain, and adapt to changing needs. These legacy setups often resulted in operational bottlenecks, delayed insights, and high maintenance costs. To overcome these challenges, the industry shifted toward what was deemed the Modern Data Stack (MDS)—a suite of focused tools optimized for specific stages of the data engineering lifecycle.

This modular approach was revolutionary, allowing organizations to select best-in-class tools like Airflow for Orchestration or a managed version of Airflow from Astronomer or Amazon without the need to build custom solutions. While the MDS improved scalability, reduced complexity, and enhanced flexibility, it also reshaped the build vs. buy decision for analytics platforms. Today, instead of deciding whether to create a component from scratch, data teams face a new question: Should they build the infrastructure to host open-source tools like Apache Airflow and dbt Core, or purchase their managed counterparts? This article focuses on these two components because pipeline orchestration and data transformation lie at the heart of any organization’s data platform.

When we say build in terms of open-source solutions, we mean building infrastructure to self-host and manage mature open-source tools like Airflow and dbt. These two tools are popular because they have been vetted by thousands of companies! In addition to hosting and managing, engineers must also ensure interoperability of these tools within their stack, handle security, scalability, and reliability. Needless to say, building is a huge undertaking that should not be taken lightly.

dbt and Airflow both started out as open-source tools, which were freely available to use due to their permissive licensing terms. Over time, cloud-based managed offerings of these tools were launched to simplify the setup and development process. These managed solutions build upon the open-source foundation, incorporating proprietary features like enhanced user interfaces, automation, security integration, and scalability. The goal is to make the tools more convenient and reduce the burden of maintaining infrastructure while lowering overall development costs. In other words, paid versions arose out of the pain points of self-managing the open-source tools.

This raises an important question: Should you self-manage or pay for your open-source analytics tools?

As with most things, both options come with trade-offs, and the “right” decision depends on your organization’s needs, resources, and priorities. By understanding the pros and cons of each approach, you can choose the option that aligns with your goals, budget, and long-term vision.

A team building Airflow in-house may spend weeks configuring a Kubernetes-backed deployment, managing Python dependencies, and setting up DAG file synchronization via S3 or Git. While the outcome can be tailored to their needs, the time and expertise required represent a significant investment.

Before moving on to the buy tradeoffs, it is important to set the record straight. You may have noticed that we did not include “the tool is free to use” as one of our pros for building with open-source. You might have guessed by reading the title of this section, but many people incorrectly believe that building their MDS using open-source tools like dbt is free. In reality, many factors contribute to the true dbt pricing, and the same is true for Airflow.

How can that be? Well, setting up everything you need and managing infrastructure for Airflow and dbt isn’t necessarily plug and play. There is day-to-day work, from managing Python virtual environments and keeping dependencies in check to tackling scaling challenges, all of which requires ongoing expertise and attention. Hiring a team to handle this will be critical, particularly as you scale. High salaries and benefits are needed to avoid costly mistakes; this can easily cost anywhere from $5,000 to $26,000+/month depending on the size of your team.

In addition to the cost of salaries, let’s look at other possible hidden costs that come with using open-source tools.

The time it takes to configure, customize, and maintain a complex open-source solution is often underestimated. It’s not until your team is deep in the weeds (resolving issues, figuring out integrations, and troubleshooting configurations) that the actual costs start to surface. With each passing day your ROI is threatened. You want to start gathering insights from your data as soon as possible. Datacoves helped Johnson and Johnson set up their data stack in weeks, and when issues arise, you will need expertise to accelerate the time to resolution.

And then there’s the learning curve. Not all engineers on your team will be seniors, and turnover is inevitable. New hires will need time to get up to speed before they can contribute effectively. This is the human side of technology: while the tools themselves might move fast, people don’t. That ramp-up period, filled with training and trial-and-error, represents a hidden cost.

Security and compliance add another layer of complexity. With open-source tools, your team is responsible for implementing best practices—like securely managing sensitive credentials with a solution like AWS Secrets Manager. Unlike managed solutions, these features don’t come prepackaged and need to be integrated with the system.
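As one small example of the integration work involved, the sketch below reads warehouse credentials from AWS Secrets Manager instead of hardcoding them. It assumes the secret is stored as a JSON string; the secret name `analytics/warehouse` and the helper name are hypothetical. The client is passed in so the function can be exercised without AWS access; in practice it would be created with `boto3.client("secretsmanager")`.

```python
import json


def load_warehouse_credentials(client, secret_id):
    """Fetch a JSON secret and return it as a dictionary.

    `client` is expected to behave like a boto3 Secrets Manager client,
    i.e. expose get_secret_value(SecretId=...) returning a "SecretString".
    """
    response = client.get_secret_value(SecretId=secret_id)
    return json.loads(response["SecretString"])


# Typical usage (requires boto3 and AWS credentials, shown as a comment only):
#   import boto3
#   creds = load_warehouse_credentials(
#       boto3.client("secretsmanager"), "analytics/warehouse"
#   )
#   # pass creds["user"] / creds["password"] to dbt or Airflow via environment variables
```

Even a helper this small still leaves rotation, access policies, and auditing for your team to design and maintain.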

Compliance is no different. Ensuring your solution meets enterprise governance requirements takes time, research, and careful implementation. It’s a process of iteration and refinement, and every hour spent here is another hidden cost, with security at risk if it is not done correctly.

Scaling open-source tools is where things often get complicated. Beyond everything already mentioned, your team will need to ensure the solution can handle growth. For many organizations, this means deploying on Kubernetes. But with Kubernetes comes steep learning curves and operational challenges. Making sure you always have a knowledgeable engineer available to handle unexpected issues and downtimes can become a challenge. Extended downtime due to this is a hidden cost since business users are impacted as they become reliant on your insights.

A managed solution for Airflow and dbt can solve many of the problems that come with building your own solution from open-source tools by offering hassle-free maintenance, an improved UI/UX, and integrated functionality. Let’s take a look at the pros.

Using a solution like MWAA, teams can leverage managed Airflow and eliminate most infrastructure worries. However, additional configuration and development will be needed to use it with dbt and to troubleshoot infrastructure issues such as containers running out of memory.

For data teams, the allure of a custom-built solution often lies in its promise of complete control and customization. However, building this requires significant time, expertise, and ongoing maintenance. Datacoves bridges the gap between custom-built flexibility and the simplicity of managed services, offering the best of both worlds.

With Datacoves, teams can leverage managed Airflow and pre-configured dbt environments to eliminate the operational burden of infrastructure setup and maintenance. This allows data teams to focus on what truly matters—delivering insights and driving business decisions—without being bogged down by tool management.

Unlike other managed solutions for dbt or Airflow, which often compromise on flexibility for the sake of simplicity, Datacoves retains the adaptability that custom builds are known for. By combining this flexibility with the ease and efficiency of managed services, Datacoves empowers teams to accelerate their analytics workflows while ensuring scalability and control.

Datacoves doesn’t just run the open-source solutions; through real-world implementations, the platform has been molded to handle enterprise complexity while simplifying project onboarding. With Datacoves, teams don’t have to compromise on features like Datacoves-Mesh (aka dbt-mesh), column-level lineage, GenAI, Semantic Layer, etc. Best of all, the company’s goal is to make you successful and remove hosting complexity without introducing vendor lock-in. What Datacoves does, you can do yourself if given enough time, experience, and money. Finally, for security-conscious organizations, Datacoves is the only solution on the market that can be deployed in your private cloud with white-glove enterprise support.

Datacoves isn’t just a platform—it’s a partnership designed to help your data team unlock their potential. With infrastructure taken care of, your team can focus on what they do best: generating actionable insights and maximizing your ROI.

The build vs. buy debate has long been a challenge for data teams, with building offering flexibility at the cost of complexity, and buying sacrificing flexibility for simplicity. As discussed earlier in the article, solutions like dbt and Airflow are powerful, but managing them in-house requires significant time, resources, and expertise. On the other hand, managed offerings like dbt Cloud and MWAA simplify operations but often limit customization and control.

Datacoves bridges this gap, providing a managed platform that delivers the flexibility and control of a custom build without the operational headaches. By eliminating the need to manage infrastructure, scaling, and security, Datacoves enables data teams to focus on what matters most: delivering actionable insights and driving business outcomes.

As highlighted in Fundamentals of Data Engineering, data teams should prioritize extracting value from data rather than managing the tools that support them. Datacoves embodies this principle, making the argument to build obsolete. Why spend weeks—or even months—building when you can have the customization and adaptability of a build with the ease of a buy? Datacoves is not just a solution; it’s a rethinking of how modern data teams operate, helping you achieve your goals faster, with fewer trade-offs.

Organizations often opt for open-source tools because "free" seems like an easy decision, especially compared to the higher price of managed versions of the same tooling. However, as with many things, there is no such thing as a free lunch. When choosing these open-source tools, it is easy to say that Airflow and dbt pricing is $0 and therefore a cost-saving choice, but hidden expenses that are hard to ignore quickly reveal themselves.

dbt Core and Apache Airflow are a natural pair in modern data analytics. dbt Core simplifies SQL-based data transformations, empowering data teams to create and maintain clean, well-documented, structured pipelines. Apache Airflow takes care of orchestrating these workflows, automating the movement and processing of data through the data engineering life cycle. Together, they can drive a powerful analytics stack that’s flexible and scalable—when used correctly. But this flexibility often comes at a price.

In this article, we’ll examine the build vs. buy dilemma, highlighting the flexibility and true costs of open-source tools like dbt Core and Apache Airflow. We’ll also compare those costs to the pricing of managed solutions such as dbt Cloud and Datacoves, providing the insights you need to evaluate the trade-offs and choose the best option for your organization.

The open-source tool dbt is free to download and use. However, the actual cost emerges when considering the technical resources required for effective implementation and management. Tasks such as setting up infrastructure, ensuring scalability, and maintaining the tool demand skilled engineers.

Assuming a team of 2–4 engineers is responsible for these tasks, with annual salaries ranging from $120,000 to $160,000 (approximately $10,000 to $13,000 per month), even dedicating 25–50% of their time to managing dbt Core results in a monthly cost of $5,000 to $26,000. As your use of dbt scales, you may need to hire a dedicated team to manage the open-source solution full-time, leading to costs equating to 100% of their combined salaries.
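The range above can be reproduced with a few lines of arithmetic. The following is a minimal sketch that uses only the figures stated in the paragraph; the function name `monthly_labor_cost` is a hypothetical helper introduced for illustration, and the model ignores benefits, overhead, and infrastructure spend.

```python
def monthly_labor_cost(engineers: int, monthly_salary: float, time_fraction: float) -> float:
    """Labor cost per month for engineers spending part of their time on a tool."""
    return engineers * monthly_salary * time_fraction


# Low end: 2 engineers at about $10,000 per month, 25% of their time.
low = monthly_labor_cost(engineers=2, monthly_salary=10_000, time_fraction=0.25)

# High end: 4 engineers at about $13,000 per month, 50% of their time.
high = monthly_labor_cost(engineers=4, monthly_salary=13_000, time_fraction=0.50)

print(f"${low:,.0f} to ${high:,.0f} per month")  # $5,000 to $26,000 per month
```

Substituting your own headcount, salaries, and time allocation gives a rough first estimate of what self-managing dbt Core might cost your team.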

So we can begin to see the true cost of open-source dbt, especially at scale. Beyond engineering labor, there are other costs, such as the time and effort required to maintain and scale the platform. More on that later.

On engineering cost alone, we can begin to compare the open-source and managed solutions. dbt Labs offers a hosted solution, dbt Cloud, with added features and tiered pricing options.

Opting for a managed solution will allow your organization to cut engineering costs or free your engineers to focus on other projects. However, while dbt Cloud reduces the infrastructure burden somewhat, it only focuses on the T of ELT. This means you still need engineers to manage the other pieces of the stack, which can result in a disconnected data pipeline.

It is worth noting that some companies decide to use dbt Cloud for its scheduler feature, which can quickly become limiting as workflows become more complex. The next step is usually a full-fledged orchestrator such as Airflow.

Just like dbt Core, Apache Airflow is also free to use, but the true cost comes from deploying and maintaining it securely and at scale, which requires significant expertise, particularly in areas like Kubernetes, dependency management, and high-availability configurations.

Assuming 2–4 engineers with annual salaries between $130,000 and $170,000 (around $11,000 to $14,000 per month) dedicate 25–50% of their time to Airflow, the monthly cost ranges from $5,500 to $28,000. The pattern we saw with dbt Core rings true here as well. As your workflows grow, hiring a dedicated team to manage Airflow becomes necessary, leading to costs equating to 100% of their salaries.

For teams looking to sidestep the complexities of managing Airflow in-house, managed solutions provide an appealing alternative:

A managed Airflow solution typically costs between $5,000 and $15,000 per year, depending on workload, resource requirements, and the number of Airflow instances. By choosing a managed solution, organizations can save on infrastructure maintenance and reduce the day-to-day operational burden on their engineers.
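To put the two sets of Airflow figures on the same footing, the self-hosted labor estimate can be annualized and set beside the managed price range. This is a rough sketch using only the numbers quoted in this article; the function name is hypothetical, and it assumes the managed fee is the only managed-side cost, which understates reality because some engineering time is still needed for configuration and troubleshooting.

```python
MONTHS_PER_YEAR = 12


def annual_self_hosted_labor(engineers: int, monthly_salary: float, time_fraction: float) -> float:
    """Yearly labor cost for engineers spending part of their time running Airflow."""
    return engineers * monthly_salary * time_fraction * MONTHS_PER_YEAR


# Self-hosted range from the article: 2 to 4 engineers, $11,000 to $14,000 per month, 25% to 50% of their time.
self_hosted_low = annual_self_hosted_labor(2, 11_000, 0.25)
self_hosted_high = annual_self_hosted_labor(4, 14_000, 0.50)

# Managed Airflow range quoted in the article (subscription only, an assumption of this sketch).
managed_low, managed_high = 5_000, 15_000

print(f"Self-hosted labor: ${self_hosted_low:,.0f} to ${self_hosted_high:,.0f} per year")
print(f"Managed fee:       ${managed_low:,.0f} to ${managed_high:,.0f} per year")
```

Even at the low end of the self-hosted estimate, labor alone exceeds the upper end of the quoted managed range, which is why the comparison should start from engineering time rather than license cost.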

Setting up and managing infrastructure for Airflow and dbt Core isn’t as straightforward, or as “free,” as it might seem. The day-to-day work of managing Python virtual environments, keeping dependencies in check, and tackling scaling challenges requires ongoing expertise and attention. On top of salaries and benefits, what starts as an open-source experiment can quickly morph into significant operational overhead full of hidden costs. Let’s dive into how by looking at time and expertise, security and compliance, and scaling complexities, which, if not considered, can lead to side effects such as extended downtime and security issues.

The time it takes to configure, customize, and maintain a complex open-source solution is often underestimated. It’s not until your team is deep in the weeds, resolving issues, figuring out integrations, and troubleshooting configurations, that the actual costs start to surface. With each passing day your ROI is threatened. You want to start gathering insights from your data as soon as possible. Datacoves helped Johnson & Johnson set up their data stack in weeks.

And then there’s the learning curve. Not all engineers on your team will be senior, and turnover is inevitable. New hires will need time to get up to speed before they can contribute effectively. This is the human side of technology: while the tools themselves might move fast, people don’t. That ramp-up period, filled with training and trial-and-error, represents yet another hidden cost.

Security and compliance add another layer of complexity. With open-source tools, your team is responsible for implementing best practices, like securely managing sensitive credentials with a solution like AWS Secrets Manager. Unlike managed solutions, these features don’t come prepackaged and need to be built and integrated with the system.
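As one illustration of that integration work, Airflow can be pointed at AWS Secrets Manager through its secrets backend setting. The sketch below only builds the two environment variables involved. It assumes the `apache-airflow-providers-amazon` package is installed in the Airflow environment, and the prefixes `airflow/connections` and `airflow/variables` are example values, not requirements. The helper name `secrets_backend_env` is hypothetical.

```python
import json


def secrets_backend_env(connections_prefix: str, variables_prefix: str) -> dict[str, str]:
    """Build the environment variables that tell Airflow to read secrets from AWS Secrets Manager."""
    backend_kwargs = {
        "connections_prefix": connections_prefix,
        "variables_prefix": variables_prefix,
    }
    return {
        # Class provided by the Amazon provider package for Airflow.
        "AIRFLOW__SECRETS__BACKEND": (
            "airflow.providers.amazon.aws.secrets.secrets_manager.SecretsManagerBackend"
        ),
        # Airflow expects the backend arguments as a JSON string.
        "AIRFLOW__SECRETS__BACKEND_KWARGS": json.dumps(backend_kwargs),
    }


# Example prefixes (assumed for illustration).
env = secrets_backend_env("airflow/connections", "airflow/variables")
for name, value in env.items():
    print(f"export {name}='{value}'")
```

Setting the variables is the easy part. Your team still owns the IAM permissions, secret naming conventions, rotation, and auditing that make the setup secure in practice.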

Compliance is no different. Ensuring your solution meets enterprise governance requirements takes time, research, and careful implementation. It’s a process of iteration and refinement, and every hour spent here is another hidden cost. Getting it wrong also puts security at risk.

Scaling open-source tools is where things often get complicated. Beyond everything already mentioned, your team will need to ensure the solution can handle growth. For many organizations, this means deploying on Kubernetes. But with Kubernetes come steep learning curves and operational challenges. Making sure you always have a knowledgeable engineer available to handle unexpected issues and downtime can become a challenge. Any resulting extended downtime is a hidden cost, since business users who have come to rely on your insights are directly impacted.

Throughout this article, we have uncovered the true costs of open-source tools, bringing us to the critical decision between building in-house or buying a managed solution. Even with the actual cost of open-source in view, the decision isn’t just about price. It’s also about the flexibility a custom build offers.

Managed solutions often adopt a one-size-fits-all approach designed to attract the widest range of customers. While this can simplify implementation for many organizations, it may not always meet the specific needs of your team. To make an informed decision, let’s examine the key advantages and challenges of each approach.

Pros:

Cons:

Example:

A team building Airflow in-house may spend weeks configuring a Kubernetes-backed deployment, managing Python dependencies, and setting up DAG file synchronization via S3 or Git. While the outcome can be tailored to their needs, the time and expertise required represent a significant investment.

Pros:

Cons:

Example:

Using a solution like MWAA, teams can leverage managed Airflow and eliminate most infrastructure worries. However, it may not have the flexibility or interoperability they need with other parts of their stack.

With a solution like Datacoves, by contrast, teams can leverage managed Airflow and pre-configured environments for dbt Core. This eliminates the need for infrastructure setup, simplifies day-to-day operations, and allows teams to focus on deriving value from their analytics, not maintaining the tools that support them.

There is no universal right answer to the build vs. buy dilemma—every use case is unique. However, it’s important to recognize that many problems have already been solved. Unless there is a compelling reason to reinvent the wheel, leveraging existing solutions can save time, money, and effort.

In Fundamentals of Data Engineering, Joe Reis and Matt Housley emphasize the importance of focusing on delivering insights rather than getting entangled in the complexities of building and maintaining data infrastructure. They advocate for using existing solutions wherever possible to streamline processes and allow teams to concentrate on extracting value from data. The key question to ask is: Will building this solution provide your organization with a competitive edge? If the answer is no, it’s worth seeking out an existing solution that fits your needs. Managed platforms can reduce the need for dedicated personnel as we saw above and provide predictable costs, making them an attractive option for many teams.

This philosophy underpins why we built Datacoves. We believe data teams shouldn’t be bogged down by the operational complexities of tools like dbt and Airflow. We also believe that data teams should have access to the flexibility a custom-built solution has to offer. Datacoves offers the flexibility these tools are known for while removing the infrastructure burden, enabling your team to focus on what really matters: generating actionable insights that drive your organization forward.

Datacoves delivers the best of both worlds: the flexibility of a custom-built open-source solution combined with the rich features and zero-infrastructure maintenance of a managed platform—all with minimal vendor lock-in. How does Datacoves achieve this? By focusing on open-source tools and eliminating the burden of maintenance. Datacoves has already done the challenging work of identifying the best tools for the job, configuring them to work seamlessly together, and optimizing performance.