Not long ago, the data analytics world relied on monolithic infrastructures: tightly coupled systems that were difficult to scale, maintain, and adapt to changing needs. These legacy setups often resulted in operational bottlenecks, delayed insights, and high maintenance costs. To overcome these challenges, the industry shifted toward what was dubbed the Modern Data Stack (MDS), a suite of focused tools optimized for specific stages of the data engineering lifecycle.

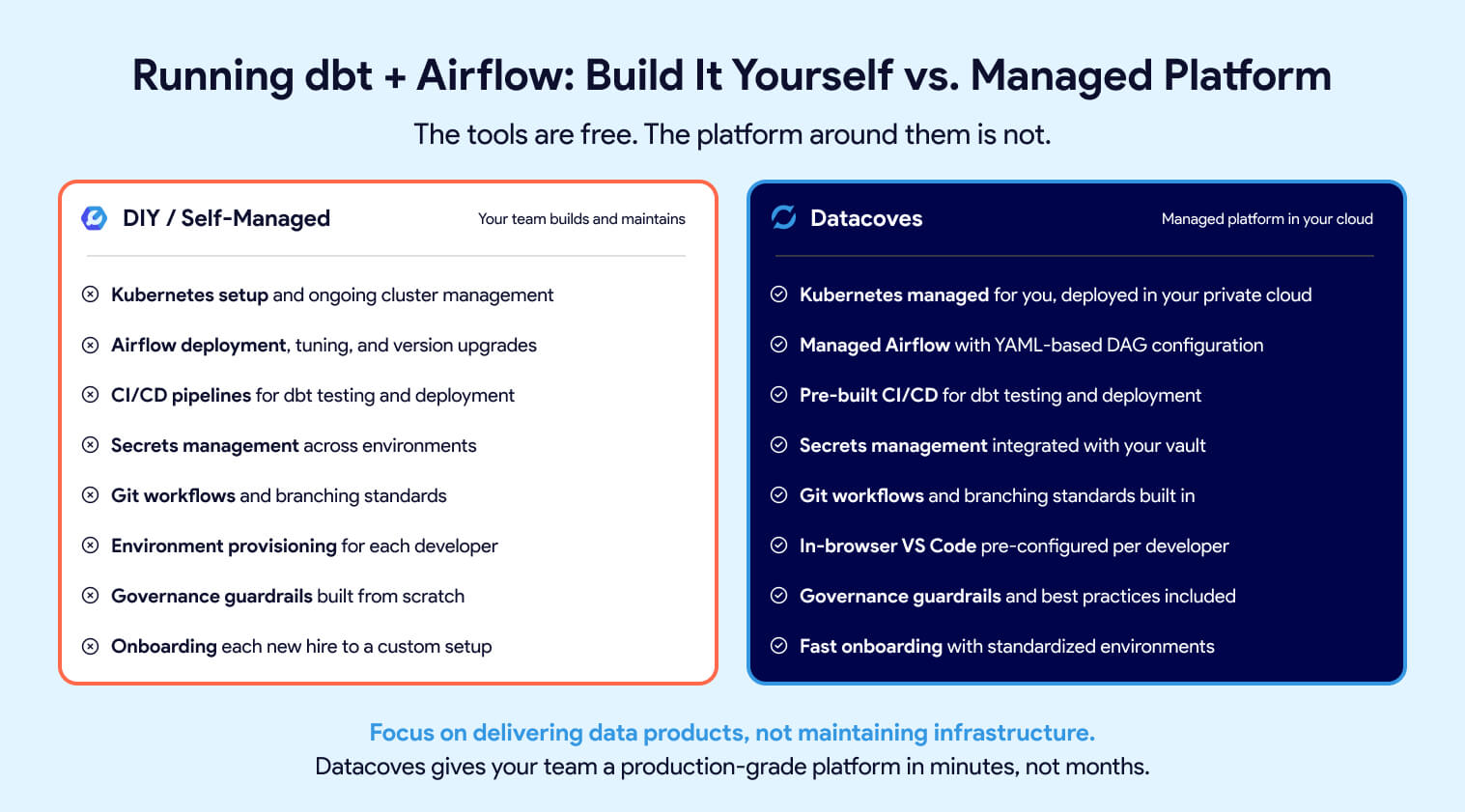

This modular approach was revolutionary, allowing organizations to select best-in-class tools like Airflow for orchestration, or a managed version of Airflow from Astronomer or Amazon, without the need to build custom solutions. While the MDS improved scalability, reduced complexity, and enhanced flexibility, it also reshaped the build vs. buy decision for analytics platforms. Today, instead of deciding whether to create a component from scratch, data teams face a new question: Should they build the infrastructure to host open-source tools like Apache Airflow and dbt Core, or purchase their managed counterparts? This article focuses on these two components because pipeline orchestration and data transformation lie at the heart of any organization’s data platform.

When we say build in terms of open-source solutions, we mean building infrastructure to self-host and manage mature open-source tools like Airflow and dbt. These two tools are popular because they have been vetted by thousands of companies! In addition to hosting and managing these tools, engineers must ensure they interoperate with the rest of the stack and handle security, scalability, and reliability. Needless to say, building is a huge undertaking that should not be taken lightly.

dbt and Airflow both started out as open-source tools, which were freely available to use due to their permissive licensing terms. Over time, cloud-based managed offerings of these tools were launched to simplify the setup and development process. These managed solutions build upon the open-source foundation, incorporating proprietary features like enhanced user interfaces, automation, security integration, and scalability. The goal is to make the tools more convenient and reduce the burden of maintaining infrastructure while lowering overall development costs. In other words, paid versions arose out of the pain points of self-managing the open-source tools.

This raises the important question: Should you self-manage or pay for your open-source analytics tools?

As with most things, both options come with trade-offs, and the “right” decision depends on your organization’s needs, resources, and priorities. By understanding the pros and cons of each approach, you can choose the option that aligns with your goals, budget, and long-term vision.

A team building Airflow in-house may spend weeks configuring a Kubernetes-backed deployment, managing Python dependencies, and setting up DAG file synchronization via S3 or Git. While the outcome can be tailored to their needs, the time and expertise required represent a significant investment.

Before moving on to the buy tradeoffs, it is important to set the record straight. You may have noticed that we did not include “the tool is free to use” as one of our pros for building with open-source. You might have guessed by reading the title of this section, but many people incorrectly believe that building their MDS using open-source tools like dbt is free. In reality, many factors contribute to the true cost of running dbt, and the same is true for Airflow.

How can that be? Well, setting up everything you need and managing infrastructure for Airflow and dbt isn’t necessarily plug and play. Day-to-day work such as managing Python virtual environments, keeping dependencies in check, and tackling scaling challenges requires ongoing expertise and attention. Hiring a team to handle this will be critical, particularly as you scale. Experienced engineers who can avoid costly mistakes command high salaries and benefits; this can easily cost anywhere from $5,000 to $26,000+/month depending on the size of your team.
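To put the monthly range quoted above in annual terms, here is a minimal sketch. The `SelfHostingEstimate` class and its field names are hypothetical and exist only for illustration. The infrastructure figure is set to zero so the output reflects staffing alone; substitute your own numbers.

```python
from dataclasses import dataclass


@dataclass
class SelfHostingEstimate:
    """Rough monthly cost inputs for self-hosting Airflow and dbt (hypothetical model)."""

    monthly_staffing_usd: float        # salaries and benefits attributable to platform work
    monthly_infrastructure_usd: float  # compute, storage, networking (assumed input)

    def annual_cost(self) -> float:
        """Return the combined yearly cost of staffing and infrastructure."""
        return 12 * (self.monthly_staffing_usd + self.monthly_infrastructure_usd)


# Staffing bounds are the $5,000 and $26,000 monthly figures from the text.
low = SelfHostingEstimate(monthly_staffing_usd=5_000, monthly_infrastructure_usd=0)
high = SelfHostingEstimate(monthly_staffing_usd=26_000, monthly_infrastructure_usd=0)

print(f"Annual staffing cost: ${low.annual_cost():,.0f} to ${high.annual_cost():,.0f}")
# prints "Annual staffing cost: $60,000 to $312,000"
```

Even before infrastructure, licensing, or downtime are counted, the staffing line alone works out to roughly $60,000 to $312,000 per year.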

In addition to the cost of salaries, let’s look at other possible hidden costs that come with using open-source tools.

The time it takes to configure, customize, and maintain a complex open-source solution is often underestimated. It’s not until your team is deep in the weeds (resolving issues, figuring out integrations, and troubleshooting configurations) that the actual costs start to surface. With each passing day your ROI is threatened. You want to start gathering insights from your data as soon as possible. Datacoves helped Johnson and Johnson set up their data stack in weeks, and when issues arise, you will need expertise to accelerate the time to resolution.

And then there’s the learning curve. Not all engineers on your team will be seniors, and turnover is inevitable. New hires will need time to get up to speed before they can contribute effectively. This is the human side of technology: while the tools themselves might move fast, people don’t. That ramp-up period, filled with training and trial-and-error, represents a hidden cost.

Security and compliance add another layer of complexity. With open-source tools, your team is responsible for implementing best practices—like securely managing sensitive credentials with a solution like AWS Secrets Manager. Unlike managed solutions, these features don’t come prepackaged and need to be integrated with the system.
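As a rough illustration of what "integrating it yourself" can look like, the sketch below reads a JSON secret through a Secrets Manager style client. The function name, the secret ID `prod/warehouse`, and the credential fields are assumptions made for this example. The only real API relied on is boto3's `get_secret_value(SecretId=...)`, which returns the payload under `"SecretString"`; a fake client stands in so the snippet runs without AWS access.

```python
import json
from typing import Any


def load_warehouse_credentials(client: Any, secret_id: str) -> dict:
    """Fetch a JSON-formatted secret and return it as a dict.

    `client` is expected to behave like boto3.client("secretsmanager").
    """
    response = client.get_secret_value(SecretId=secret_id)
    return json.loads(response["SecretString"])


class FakeSecretsClient:
    """Stand-in for the real client so this sketch runs offline (illustrative only)."""

    def __init__(self, secrets: dict):
        self._secrets = secrets

    def get_secret_value(self, SecretId: str) -> dict:
        # Mirrors the shape of the real response for string secrets.
        return {"SecretString": json.dumps(self._secrets[SecretId])}


# Hypothetical secret name and contents, not real credentials.
fake = FakeSecretsClient(
    {"prod/warehouse": {"user": "dbt_runner", "password": "example-only"}}
)
creds = load_warehouse_credentials(fake, "prod/warehouse")
print(creds["user"])  # prints "dbt_runner"
```

In a real deployment you would pass `boto3.client("secretsmanager")` instead of the fake, and you would still need to handle IAM permissions, rotation, and injecting the values into Airflow and dbt, which is the integration work the paragraph above refers to.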

Compliance is no different. Ensuring your solution meets enterprise governance requirements takes time, research, and careful implementation. It’s a process of iteration and refinement, and every hour spent here is another hidden cost. Mistakes along the way can also put security at risk.

Scaling open-source tools is where things often get complicated. Beyond everything already mentioned, your team will need to ensure the solution can handle growth. For many organizations, this means deploying on Kubernetes. But with Kubernetes comes a steep learning curve and operational challenges. Making sure you always have a knowledgeable engineer available to handle unexpected issues and downtime can become a challenge. Extended downtime is a hidden cost of its own, because business users who have come to rely on your insights are directly impacted.

A managed solution for Airflow and dbt can solve many of the problems that come with building your own solution from open-source tools, offering benefits such as hassle-free maintenance, an improved UI/UX, and integrated functionality. Let’s take a look at the pros.

Using a solution like MWAA, teams can leverage managed Airflow and avoid most infrastructure worries. However, additional configuration and development will be needed to use it with dbt and to troubleshoot infrastructure issues such as containers running out of memory.

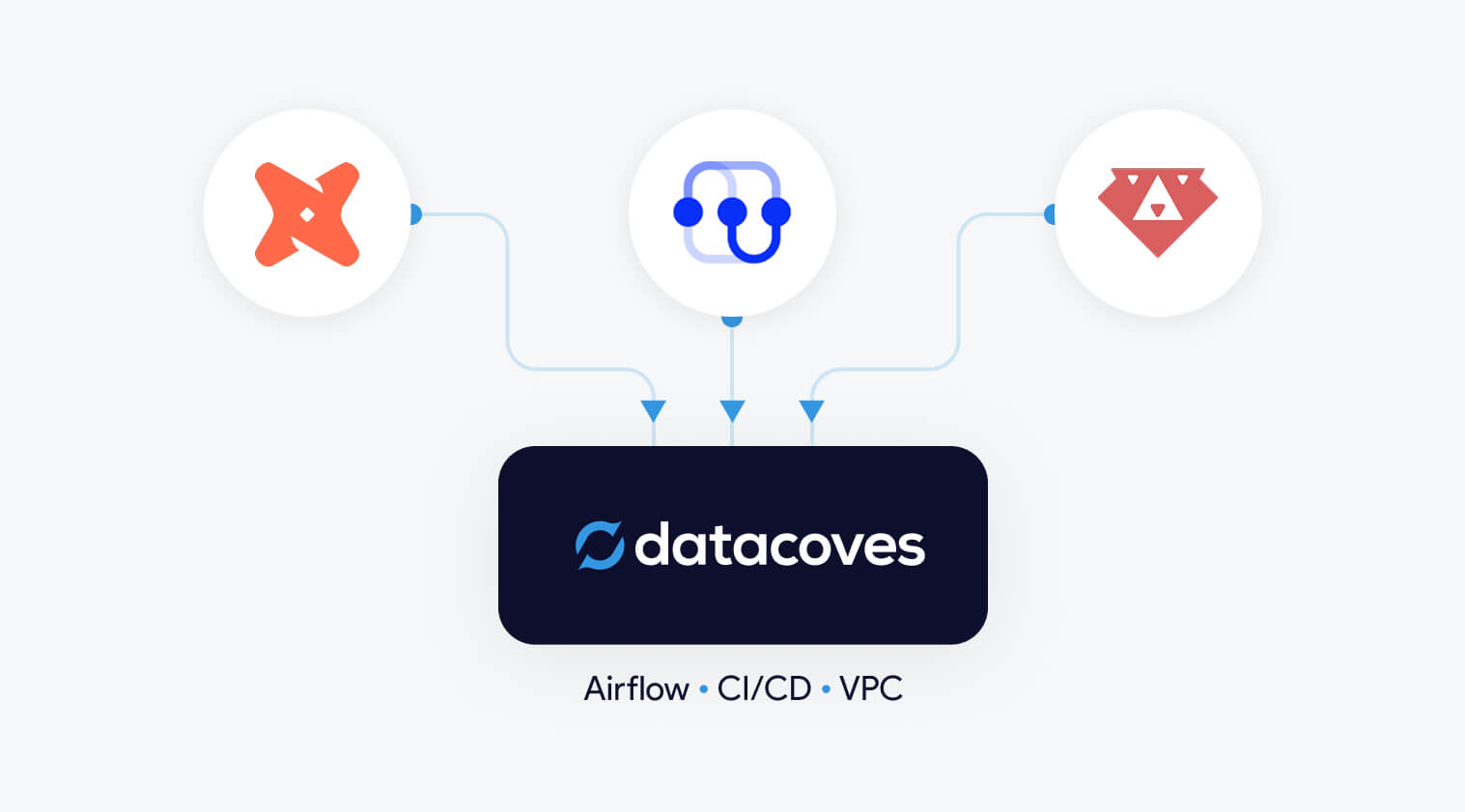

For data teams, the allure of a custom-built solution often lies in its promise of complete control and customization. However, building this requires significant time, expertise, and ongoing maintenance. Datacoves bridges the gap between custom-built flexibility and the simplicity of managed services, offering the best of both worlds.

With Datacoves, teams can leverage managed Airflow and pre-configured dbt environments to eliminate the operational burden of infrastructure setup and maintenance. This allows data teams to focus on what truly matters—delivering insights and driving business decisions—without being bogged down by tool management.

Unlike other managed solutions for dbt or Airflow, which often compromise on flexibility for the sake of simplicity, Datacoves retains the adaptability that custom builds are known for. By combining this flexibility with the ease and efficiency of managed services, Datacoves empowers teams to accelerate their analytics workflows while ensuring scalability and control.

Datacoves doesn’t just run the open-source solutions; through real-world implementations, the platform has been molded to handle enterprise complexity while simplifying project onboarding. With Datacoves, teams don’t have to compromise on features like Datacoves-Mesh (aka dbt-mesh), column-level lineage, GenAI, Semantic Layer, etc. Best of all, the company’s goal is to make you successful and remove hosting complexity without introducing vendor lock-in. What Datacoves does, you can do yourself if given enough time, experience, and money. Finally, for security-conscious organizations, Datacoves is the only solution on the market that can be deployed in your private cloud with white-glove enterprise support.

Datacoves isn’t just a platform—it’s a partnership designed to help your data team unlock their potential. With infrastructure taken care of, your team can focus on what they do best: generating actionable insights and maximizing your ROI.

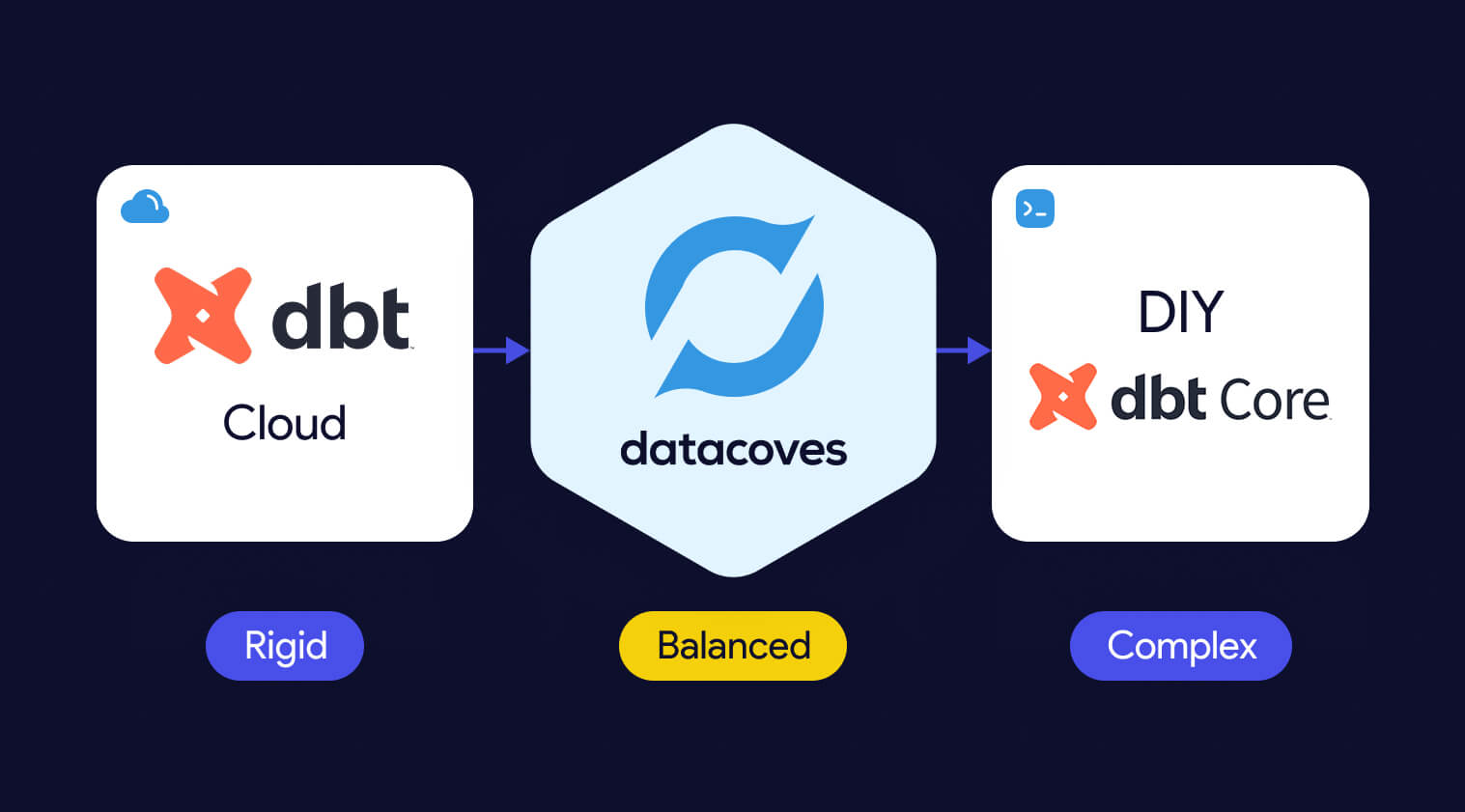

The build vs. buy debate has long been a challenge for data teams, with building offering flexibility at the cost of complexity, and buying sacrificing flexibility for simplicity. As discussed earlier in the article, solutions like dbt and Airflow are powerful, but managing them in-house requires significant time, resources, and expertise. On the other hand, managed offerings like dbt Cloud and MWAA simplify operations but often limit customization and control.

Datacoves bridges this gap, providing a managed platform that delivers the flexibility and control of a custom build without the operational headaches. By eliminating the need to manage infrastructure, scaling, and security, Datacoves enables data teams to focus on what matters most: delivering actionable insights and driving business outcomes.

As highlighted in Fundamentals of Data Engineering, data teams should prioritize extracting value from data rather than managing the tools that support them. Datacoves embodies this principle, making the argument to build obsolete. Why spend weeks—or even months—building when you can have the customization and adaptability of a build with the ease of a buy? Datacoves is not just a solution; it’s a rethinking of how modern data teams operate, helping you achieve your goals faster, with fewer trade-offs.

The top dbt alternatives include Datacoves, SQLMesh, Bruin Data, Dataform, and visual ETL tools such as Alteryx, Matillion, and Informatica. Code-first engines offer stronger rigor, testing, and CI/CD, while GUI platforms emphasize ease of use and rapid prototyping. Teams choose these alternatives when they need more security, governance, or flexibility than dbt Core or dbt Cloud provide.


Teams explore dbt alternatives when they need stronger governance, private deployments, or support for Python and code-first workflows that go beyond SQL. Many also prefer GUI-based ETL tools for faster onboarding. Recent market consolidation, including Fivetran acquiring SQLMesh and merging with dbt Labs, has increased concerns about vendor lock-in, which makes tool neutrality and platform flexibility more important than ever.

Teams look for dbt alternatives when they need stronger orchestration, consistent development environments, Python support, or private cloud deployment options that dbt Cloud does not provide.

Organizations evaluating dbt alternatives typically compare tools across three categories. Each category reflects a different approach to data transformation, development preferences, and organizational maturity.

Organizations consider alternatives to dbt Cloud when they need more flexibility, stronger security, or support for development workflows that extend beyond dbt. Teams comparing platform options often begin by evaluating the differences between dbt Cloud vs dbt Core.

Running enterprise-scale ELT pipelines often requires a full orchestration layer, consistent development environments, and private deployment options that dbt Cloud does not provide. Costs can also increase at scale (see our breakdown of dbt pricing considerations), and some organizations prefer to avoid features that are not open source to reduce long-term vendor lock-in.

This category includes platforms that deliver the benefits of dbt Cloud while providing more control, extensibility, and alignment with enterprise data platform requirements.

Datacoves provides a secure, flexible platform that supports dbt, SQLMesh, and Bruin in a unified environment with private cloud or VPC deployment.

Datacoves is an enterprise data platform that serves as a secure, flexible alternative to dbt Cloud. It supports dbt Core, SQLMesh, and Bruin inside a unified development and orchestration environment, and it can be deployed in your private cloud or VPC for full control over data access and governance.

Benefits

Flexibility and Customization:

Datacoves provides a customizable in-browser VS Code IDE, Git workflows, and support for Python libraries and VS Code extensions. Teams can choose the transformation engine that fits their needs without being locked into a single vendor.

Handling Enterprise Complexity:

Datacoves includes managed Airflow for end-to-end orchestration, making it easy to run dbt and Airflow together without maintaining your own infrastructure. It standardizes development environments, manages secrets, and supports multi-team and multi-project workflows without platform drift.

Cost Efficiency:

Datacoves reduces operational overhead by eliminating the need to maintain separate systems for orchestration, environments, CI, logging, and deployment. Its pricing model is predictable and designed for enterprise scalability.

Data Security and Compliance:

Datacoves can be deployed fully inside your VPC or private cloud. This gives organizations complete control over identity, access, logging, network boundaries, and compliance with industry and internal standards.

Reduced Vendor Lock-In:

Datacoves supports dbt, SQLMesh, and Bruin Data, giving teams long-term optionality. This avoids being locked into a single transformation engine or vendor ecosystem.

Running dbt Core yourself is a flexible option that gives teams full control over how dbt executes. It is also the most resource-intensive approach. Teams choosing DIY dbt Core must manage orchestration, scheduling, CI, secrets, environment consistency, and long-term platform maintenance on their own.

Benefits

Full Control:

Teams can configure dbt Core exactly as they want and integrate it with internal tools or custom workflows.

Cost Flexibility:

There are no dbt Cloud platform fees, but total cost of ownership often increases as the system grows.

Considerations

High Maintenance Overhead:

Teams must maintain Airflow or another orchestrator, build CI pipelines, manage secrets, and keep development environments consistent across users.

Requires Platform Engineering Skills:

DIY dbt Core works best for teams with strong Kubernetes, CI, Python, and DevOps expertise. Without this expertise, the environment becomes fragile over time.

Slow to Scale:

As more engineers join the team, keeping dbt environments aligned becomes challenging. Onboarding, upgrades, and platform drift create operational friction.

Security and Compliance Responsibility:

Identity, permissions, logging, and network controls must be designed and maintained internally, which can be significant for regulated organizations.

Teams that prefer code-first tools often look for dbt alternatives that provide strong SQL modeling, Python support, and seamless integration with CI/CD workflows and automated testing. These are part of a broader set of data transformation tools. Code-based ETL tools give developers greater control over transformations, environments, and orchestration patterns than GUI platforms. Below are four code-first contenders that organizations should evaluate.

Code-first dbt alternatives like SQLMesh, Bruin Data, and Dataform provide stronger CI/CD integration, automated testing, and more control over complex transformation workflows.

SQLMesh is an open-source framework for SQL and Python-based data transformations. It provides strong visibility into how changes impact downstream models and uses virtual data environments to preview changes before they reach production.

Benefits

Efficient Development Environments:

Virtual environments reduce unnecessary recomputation and speed up iteration.

Considerations

Part of the Fivetran Ecosystem:

SQLMesh was acquired by Fivetran, which may influence its future roadmap and level of independence.

Dataform is a SQL-based transformation framework built specifically for BigQuery. It enables teams to create table definitions, manage dependencies, document models, and configure data quality tests inside the Google Cloud ecosystem. It also provides version control and integrates with GitHub and GitLab.

Benefits

Centralized BigQuery Development:

Dataform keeps all modeling and testing within BigQuery, reducing context switching and making it easier for teams to collaborate using familiar SQL workflows.

Considerations

Focused Only on the GCP Ecosystem:

Because Dataform is geared toward BigQuery, it may not be suitable for organizations that use multiple cloud data warehouses.

AWS Glue is a serverless data integration service that supports Python-based ETL and transformation workflows. It works well for organizations operating primarily in AWS and provides native integration with services like S3, Lambda, and Athena.

Benefits

Python-First ETL in AWS:

Glue supports Python scripts and PySpark jobs, making it a good fit for engineering teams already invested in the AWS ecosystem.

Considerations

Requires Engineering Expertise:

Glue can be complex to configure and maintain, and its Python-centric approach may not be ideal for SQL-first analytics teams.

Bruin is a modern SQL-based data modeling framework designed to simplify development, testing, and environment-aware deployments. It offers a familiar SQL developer experience while adding guardrails and automation to help teams manage complex transformation logic.

Benefits

Modern SQL Modeling Experience:

Bruin provides a clean SQL-first workflow with strong dependency management and testing.

Considerations

Growing Ecosystem:

Bruin is newer than dbt and has a smaller community and fewer third-party integrations.

While code-based transformation tools provide the most flexibility and long-term maintainability, some organizations prefer graphical user interface (GUI) tools. These platforms use visual, drag-and-drop components to build data integration and transformation workflows. Many of these platforms fall into the broader category of no-code ETL tools. GUI tools can accelerate onboarding for teams less comfortable with code editors and may simplify development in the short term. Below are several GUI-based options that organizations often consider as dbt alternatives.

GUI-based dbt alternatives such as Matillion, Informatica, and Alteryx use drag-and-drop interfaces that simplify development and accelerate onboarding for mixed-skill teams.

Matillion is a cloud-based data integration platform that enables teams to design ETL and transformation workflows through a visual, drag-and-drop interface. It is built for ease of use and supports major cloud data warehouses such as Amazon Redshift, Google BigQuery, and Snowflake.

Benefits

User-Friendly Visual Development:

Matillion simplifies pipeline building with a graphical interface, making it accessible for users who prefer low-code or no-code tooling.

Considerations

Limited Flexibility for Complex SQL Modeling:

Matillion’s visual approach can become restrictive for advanced transformation logic or engineering workflows that require version control and modular SQL development.

Informatica is an enterprise data integration platform with extensive ETL capabilities, hundreds of connectors, data quality tooling, metadata-driven workflows, and advanced security features. It is built for large and diverse data environments.

Benefits

Enterprise-Scale Data Management:

Informatica supports complex data integration, governance, and quality requirements, making it suitable for organizations with large data volumes and strict compliance needs.

Considerations

High Complexity and Cost:

Informatica’s power comes with a steep learning curve, and its licensing and operational costs can be significant compared to lighter-weight transformation tools.

Alteryx is a visual analytics and data preparation platform that combines data blending, predictive modeling, and spatial analysis in a single GUI-based environment. It is designed for analysts who want to build workflows without writing code and can be deployed on-premises or in the cloud.

Benefits

Powerful GUI Analytics Capabilities:

Alteryx allows users to prepare data, perform advanced analytics, and generate insights in one tool, enabling teams without strong coding skills to automate complex workflows.

Considerations

High Cost and Limited SQL Modeling Flexibility:

Alteryx is one of the more expensive platforms in this category and is less suited for SQL-first transformation teams who need modular modeling and version control.

Azure Data Factory (ADF) is a fully managed, serverless data integration service that provides a visual interface for building ETL and ELT pipelines. It integrates natively with Azure storage, compute, and analytics services, allowing teams to orchestrate and monitor pipelines without writing code.

Benefits

Strong Integration for Microsoft-Centric Teams:

ADF connects seamlessly with other Azure services and supports a pay-as-you-go model, making it ideal for organizations already invested in the Microsoft ecosystem.

Considerations

Limited Transformation Flexibility:

ADF excels at data movement and orchestration but offers limited capabilities for complex SQL modeling, making it less suitable as a primary transformation engine.

Talend provides an end-to-end data management platform with support for batch and real-time data integration, data quality, governance, and metadata management. Talend Data Fabric combines these capabilities into a single low-code environment that can run in cloud, hybrid, or on-premises deployments.

Benefits

Comprehensive Data Quality and Governance:

Talend includes built-in tools for data cleansing, validation, and stewardship, helping organizations improve the reliability of their data assets.

Considerations

Broad Platform, Higher Operational Complexity:

Talend’s wide feature set can introduce complexity, and teams may need dedicated expertise to manage the platform effectively.

SQL Server Integration Services is part of the Microsoft SQL Server ecosystem and provides data integration and transformation workflows. It supports extracting, transforming, and loading data from a wide range of sources, and offers graphical tools and wizards for designing ETL pipelines.

Benefits

Strong Fit for SQL Server-Centric Teams:

SSIS integrates deeply with SQL Server and other Microsoft products, making it a natural choice for organizations with a Microsoft-first architecture.

Considerations

Not Designed for Modern Cloud Data Warehouses:

SSIS is optimized for on-premises SQL Server environments and is less suitable for cloud-native architectures or modern ELT workflows.

Recent consolidation, including Fivetran acquiring SQLMesh and merging with dbt Labs, has increased concerns about vendor lock-in and pushed organizations to evaluate more flexible transformation platforms.

Organizations explore dbt alternatives when dbt no longer meets their architectural, security, or workflow needs. As teams scale, they often require stronger orchestration, consistent development environments, mixed SQL and Python workflows, and private deployment options that dbt Cloud does not provide.

Some teams prefer code-first engines for deeper CI/CD integration, automated testing, and strong guardrails across developers. Others choose GUI-based tools for faster onboarding or broader integration capabilities. Recent market consolidation, including Fivetran acquiring SQLMesh and merging with dbt Labs, has also increased concerns about vendor lock-in.

These factors lead many organizations to evaluate tools that better align with their governance requirements, engineering preferences, and long-term strategy.

DIY dbt Core offers full control but requires significant engineering work to manage orchestration, CI/CD, security, and long-term platform maintenance.

Running dbt Core yourself can seem attractive because it offers full control and avoids platform subscription costs. However, building a stable, secure, and scalable dbt environment requires significantly more than executing dbt build on a server. It involves managing orchestration and CI/CD, keeping development environments consistent, and maintaining the platform over the long term, all of which require mature DataOps practices.

The true question for most organizations is not whether they can run dbt Core themselves, but whether it is the best use of engineering time. This is essentially a question of whether to build or buy your data platform. DIY dbt platforms often start simple and gradually accumulate technical debt as teams grow, pipelines expand, and governance requirements increase.

For many organizations, DIY works in the early stages but becomes difficult to sustain as the platform matures.

The right dbt alternative depends on your team’s skills, governance requirements, pipeline complexity, and long-term data platform strategy.

Selecting the right dbt alternative depends on your team’s skills, security requirements, and long-term data platform strategy. Each category of tools solves different problems, so it is important to evaluate your priorities before committing to a solution.

If these are priorities, a platform with secure deployment options or multi-engine support may be a better fit than dbt Cloud.

Recent consolidation in the ecosystem has raised concerns about vendor dependency. Organizations that want long-term flexibility often look for:

Consider platform fees, engineering maintenance, onboarding time, and the cost of additional supporting tools such as orchestrators, IDEs, and environment management.

dbt remains a strong choice for SQL-based transformations, but it is not the only option. As organizations scale, they often need stronger orchestration, consistent development environments, Python support, and private deployment capabilities that dbt Cloud or DIY dbt Core may not provide. Evaluating alternatives helps ensure that your transformation layer aligns with your long-term platform and governance strategy.

Code-first tools like SQLMesh, Bruin Data, and Dataform offer strong engineering workflows, while GUI-based tools such as Matillion, Informatica, and Alteryx support faster onboarding for mixed-skill teams. The right choice depends on the complexity of your pipelines, your team’s technical profile, and the level of security and control your organization requires.

Datacoves provides a flexible, secure alternative that supports dbt, SQLMesh, and Bruin in a unified environment. With private cloud or VPC deployment, managed Airflow, and a standardized development experience, Datacoves helps teams avoid vendor lock-in while gaining an enterprise-ready platform for analytics engineering.

Selecting the right dbt alternative is ultimately about aligning your transformation approach with your data architecture, governance needs, and long-term strategy. Taking the time to assess these factors will help ensure your platform remains scalable, secure, and flexible for your future needs.

The dbt-utils package enhances the dbt experience by offering a suite of utility macros. Designed to tackle common SQL modeling patterns, it streamlines complex operations, allowing users to focus on data transformation rather than the intricacies of SQL. dbt-utils is a must-have tool for dbt aficionados!

The dbt-utils package is a gem in the world of data transformations. Let this cheat sheet guide you swiftly through its features, ensuring you get the most out of dbt-utils. Enjoy!

The SQL generators in the dbt-utils package streamline your modeling tasks. By automating common SQL patterns, they minimize manual coding and guarantee consistent, high-quality queries. Think of it as a handy toolkit for every dbt user's SQL endeavors!

Within the dbt-utils package lies a set of generic tests, designed to validate your data effortlessly. These tests ensure consistency and quality, checking for common issues without the need to craft custom validation rules. It's data integrity made simple for dbt users.

The introspective macros within the dbt-utils package are a window into your data's metadata. They empower you to dynamically interact with and understand the underlying structure of your datasets. It's like having a magnifying glass for the intricacies of your dbt projects!

Please contact us with any errors or suggestions.

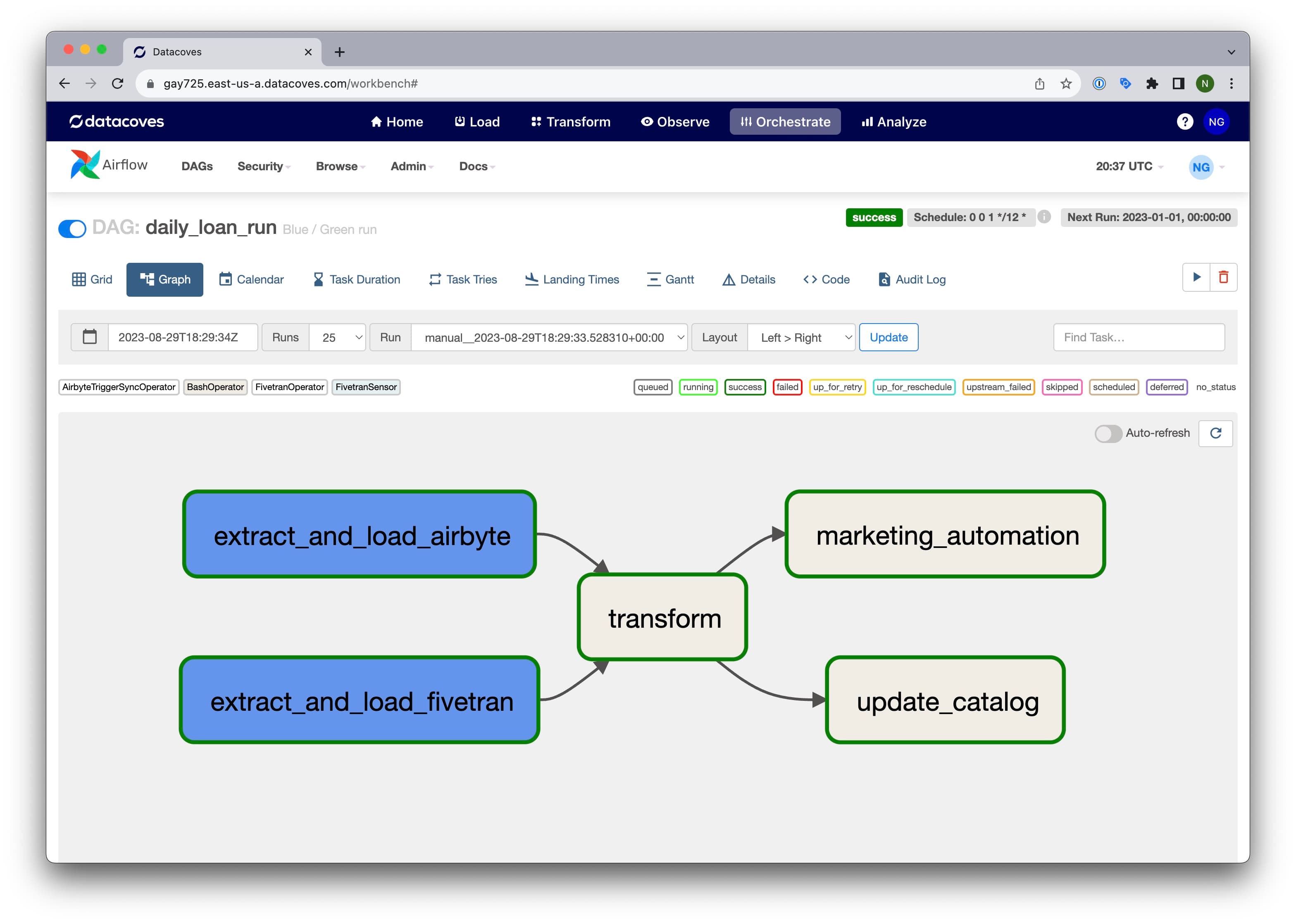

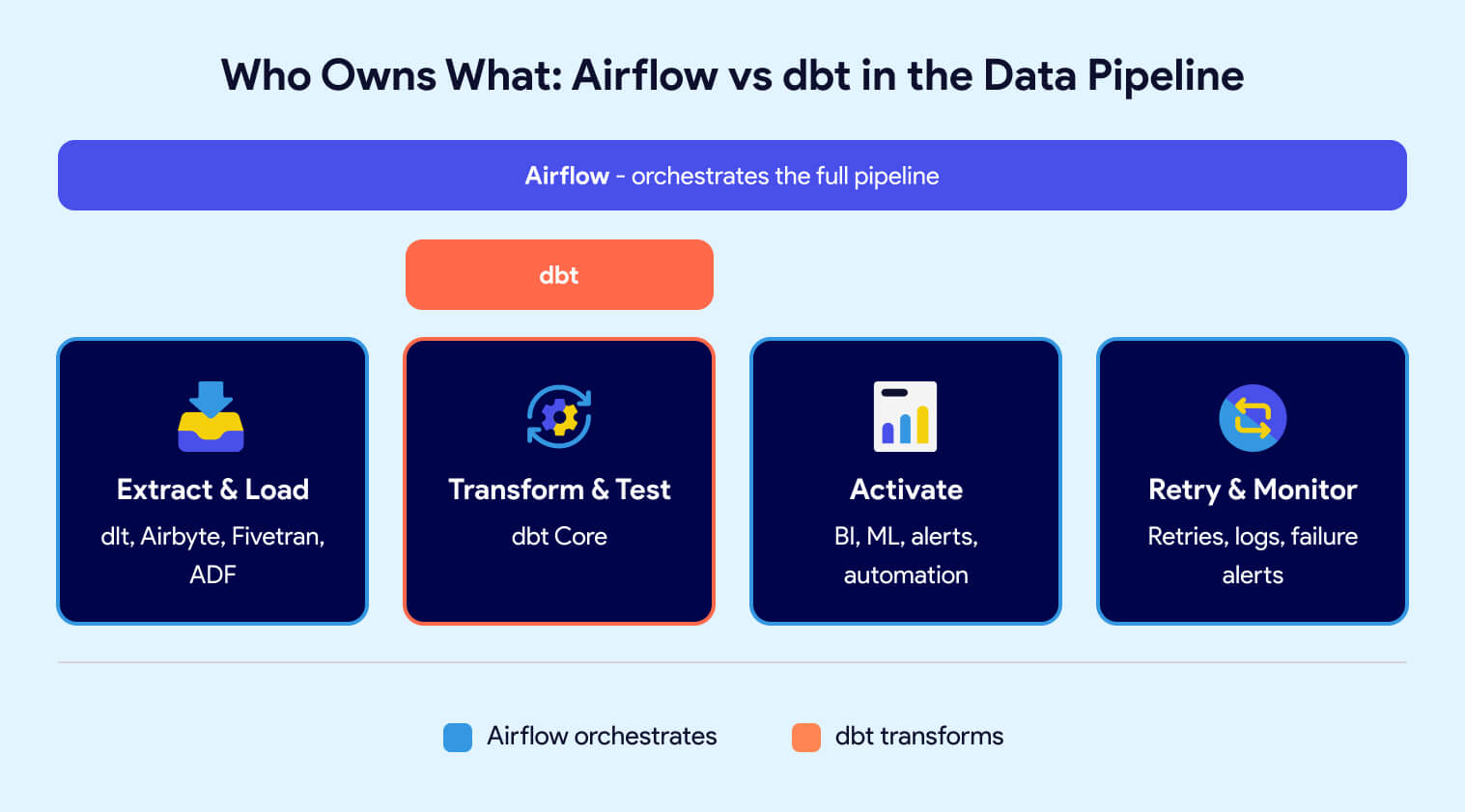

dbt transforms data inside your warehouse using SQL. Airflow orchestrates workflows across your entire data pipeline. They are not competing tools -- they solve different problems. dbt handles the "T" in ELT: modeling, data testing, unit testing, documentation, and lineage. Airflow handles the scheduling, sequencing, retries, failure notifications, observability, and coordination of every step in the pipeline, including ingesting data, triggering dbt, and activation steps. Most production data teams use both. The real decision is not which tool to pick, but how to run them together without drowning in infrastructure overhead.

Airflow is a workflow scheduler, but it is only one part of a broader data orchestration problem that includes dependencies, retries, visibility, and ownership across the entire data lifecycle.

Imagine a scenario where you have a series of tasks: Task A, Task B, and Task C. These tasks need to be executed in sequence every day at a specific time. Airflow enables you to programmatically define the sequence of steps as well as what each step does. With Airflow you can also monitor the execution of each step and get alerts when something fails.
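The Task A, Task B, Task C scenario can be sketched in plain Python to show the core ideas: ordered execution, retries, and a failure alert. This is a toy illustration, not Airflow's API. The `run_pipeline` function, its parameters, and the task names are all hypothetical, and the daily schedule itself is left out.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Set


def run_pipeline(
    tasks: Dict[str, Callable[[], None]],
    upstream: Dict[str, Set[str]],
    retries: int = 1,
    on_failure: Callable[[str, Exception], None] = lambda name, exc: None,
) -> List[str]:
    """Run tasks in dependency order and return the names that completed."""
    completed: List[str] = []
    for name in TopologicalSorter(upstream).static_order():
        for attempt in range(retries + 1):
            try:
                tasks[name]()
                completed.append(name)
                break
            except Exception as exc:
                if attempt == retries:
                    # Out of retries: alert and stop so downstream tasks never run.
                    on_failure(name, exc)
                    return completed
    return completed


attempts = {"task_b": 0}


def task_a() -> None:
    pass


def task_b() -> None:
    # Fails on the first attempt to simulate a transient error.
    attempts["task_b"] += 1
    if attempts["task_b"] == 1:
        raise RuntimeError("transient failure")


def task_c() -> None:
    pass


alerts: List[str] = []
order = run_pipeline(
    tasks={"task_a": task_a, "task_b": task_b, "task_c": task_c},
    upstream={"task_a": set(), "task_b": {"task_a"}, "task_c": {"task_b"}},
    retries=1,
    on_failure=lambda name, exc: alerts.append(name),
)
print(order)  # prints ['task_a', 'task_b', 'task_c']
```

Here `task_b` succeeds on its retry, so no alert fires and `task_c` still runs. A real orchestrator adds scheduling, persistence of run state, a UI, and distributed execution on top of this basic loop.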

Airflow's core strength is flexibility. You define the code behind each task, not just the sequence and schedule. Whether it's triggering an extraction job, calling a Python script, or integrating with an external platform, Airflow lets you control exactly how each step executes.

But that flexibility is a double-edged sword. Just because you can code everything inside Airflow doesn't mean you should. Overly complex task logic inside Airflow makes workflows harder to debug and maintain. The best practice: use Airflow as the orchestrator, not the execution engine. Let specialized tools handle the heavy lifting. Use tools like dbt for transformation and tools like Azure Data Factory, dlt, Fivetran or Airbyte for extraction. Let Airflow handle the scheduling, coordination, observability, and failure notification.

dbt (data build tool) is an open-source framework that uses SQL and Jinja templating to transform data inside the warehouse. Developed by dbt Labs, dbt handles the "T" in ELT: transforming, testing, documenting, and tracking lineage of data models. It brings software engineering practices like version control, CI/CD, and modular code to analytics workflows.

dbt lets you write transformation logic as SQL select statements, enhanced with Jinja templating for dynamic execution. Each transformation is called a "model." When you run dbt, it compiles those models into raw SQL and executes them against your warehouse, creating or replacing tables and views. dbt resolves dependencies between models automatically, running them sequentially or in parallel as needed.
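To make the compile-and-order step more concrete, here is a deliberately simplified sketch of the idea. It is not dbt's implementation: real dbt renders full Jinja and resolves `ref` to a database-qualified relation. The regex, the `build_order` and `compile_model` helpers, the model names, and the `analytics` schema are assumptions made for illustration.

```python
import re
from graphlib import TopologicalSorter
from typing import Dict, List

# Matches {{ ref('model_name') }} with single or double quotes.
REF_PATTERN = re.compile(r"\{\{\s*ref\(\s*['\"](\w+)['\"]\s*\)\s*\}\}")


def build_order(models: Dict[str, str]) -> List[str]:
    """Infer dependencies from ref() calls and return a valid run order."""
    graph = {name: set(REF_PATTERN.findall(sql)) for name, sql in models.items()}
    return list(TopologicalSorter(graph).static_order())


def compile_model(sql: str, schema: str) -> str:
    """Replace each ref() with a schema-qualified table name."""
    return REF_PATTERN.sub(lambda m: f"{schema}.{m.group(1)}", sql)


# Hypothetical two-model project.
models = {
    "stg_orders": "select id, amount from raw.orders",
    "orders_summary": "select count(*) as n from {{ ref('stg_orders') }}",
}

order = build_order(models)
compiled = compile_model(models["orders_summary"], schema="analytics")

print(order)     # prints ['stg_orders', 'orders_summary']
print(compiled)  # prints "select count(*) as n from analytics.stg_orders"
```

Because dependencies are declared inside the SQL itself, the run order falls out of the models rather than being maintained by hand, which is the property the paragraph above describes.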

Unlike traditional ETL tools that abstract SQL behind drag-and-drop interfaces, dbt treats SQL as the primary language for transformation. This makes it immediately accessible to anyone who already knows SQL. But dbt goes further by adding Jinja templating for dynamic scripting, conditional logic, and reusable macros.

A few things set dbt apart from alternatives:

dbt excels at the "T" in ELT but requires complementary tools for extraction, loading, and orchestration.

A common misunderstanding in the data community is equating "dbt" with "dbt Cloud." When people say dbt, they typically mean dbt Core -- the open-source framework. dbt Cloud is a commercial product from dbt Labs built on top of dbt Core that adds a scheduler, hosted IDE, monitoring, and managed infrastructure.

The key distinction: you can use dbt Core without paying for dbt Cloud, but you won't get a scheduler, hosted environment, or managed CI/CD. That means you'll need to solve orchestration, deployment, and infrastructure yourself. This is typically done with a tool like Airflow and a platform to tie it all together.

For a deeper comparison, see our article on dbt Cloud vs. dbt Core.

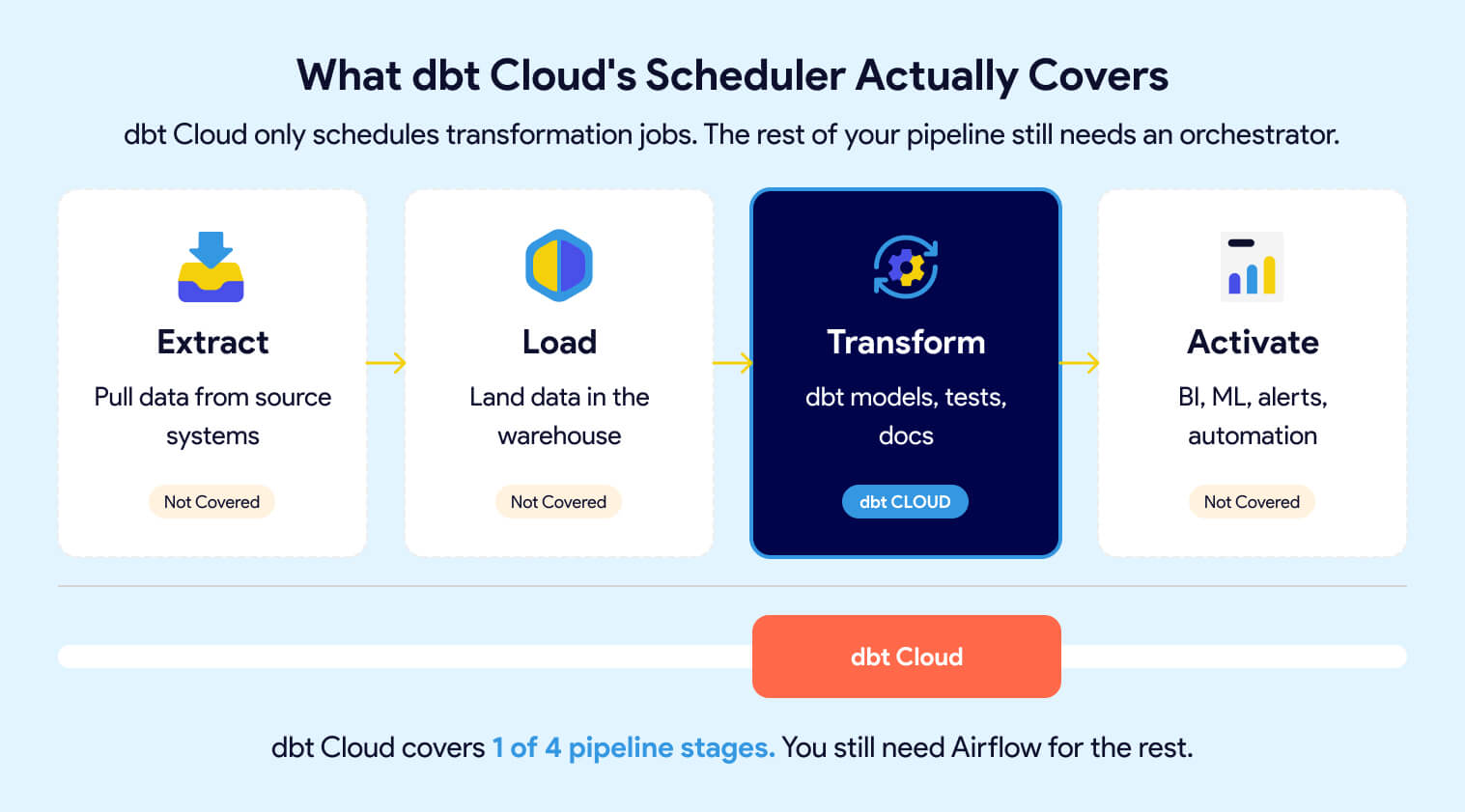

dbt Cloud includes a scheduler that automates dbt runs on a defined cadence. This keeps your transformation models fresh and your data reliable. But it only schedules dbt jobs, nothing else.

Your Extract and Load steps, downstream processes like BI refreshes, ML model retraining, marketing automation, and any cross-system coordination still need an orchestrator. dbt Cloud's scheduler does not replace Airflow. It replaces the need to cron-schedule dbt runs manually, but leaves the rest of the pipeline unmanaged.

Do you still need Airflow alongside dbt Cloud? Yes, unless your entire pipeline is just dbt transformations with nothing before or after them.

dbt Cloud schedules and runs your dbt jobs. That covers transformation, testing, and documentation. But most production pipelines involve more than that: extracting data from source systems, loading it into the warehouse, refreshing BI dashboards, triggering ML model retraining, sending alerts, and coordinating dependencies across all of these steps.

dbt Cloud does not orchestrate any of those. If your pipeline looks like this:

Extract → Load → Transform → Activate

Then dbt Cloud only covers one step. You still need an orchestrator like Airflow to tie the full pipeline together, manage dependencies between stages, handle retries, and provide end-to-end visibility when something breaks.
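As a rough illustration of what "tying the pipeline together" means, here is a toy sketch of an orchestrator that runs the four stages in order and retries a failing stage. It does not use Airflow's API; the function names, the step functions, and the simulated failure are all hypothetical, and a real orchestrator adds scheduling, logging, and alerting on top of this.

```python
import time


def run_with_retries(name, func, retries=2, delay_seconds=0.0):
    """Run one step, retrying on failure; re-raise after the last attempt."""
    for attempt in range(1, retries + 2):
        try:
            result = func()
            print(f"{name}: succeeded on attempt {attempt}")
            return result
        except Exception as exc:
            print(f"{name}: attempt {attempt} failed ({exc})")
            if attempt > retries:
                raise
            time.sleep(delay_seconds)


def run_pipeline(steps, retries=2):
    """Run steps in order; a step that exhausts its retries stops the pipeline."""
    completed = []
    for name, func in steps:
        run_with_retries(name, func, retries=retries)
        completed.append(name)
    return completed


# Hypothetical steps. In practice each would call a specialized tool.
calls = {"load": 0}


def flaky_load():
    """Fail on the first call to simulate a transient warehouse error."""
    calls["load"] += 1
    if calls["load"] < 2:
        raise RuntimeError("warehouse temporarily unavailable")


steps = [
    ("extract", lambda: None),
    ("load", flaky_load),
    ("transform", lambda: None),  # e.g. where a dbt run would be triggered
    ("activate", lambda: None),
]
completed = run_pipeline(steps)
```

The point of the sketch is that the transform step is only one entry in the list; the retry and ordering logic applies to every stage, which is the part a dbt-only scheduler does not cover.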

For small teams with a simple pipeline (one source, one warehouse, one dashboard), dbt Cloud's scheduler may be enough to start. But as soon as you add sources, downstream consumers, or cross-system dependencies, you'll need Airflow or a platform that includes it.

The hidden cost of choosing open-source dbt Core and Airflow is the platform you have to build around them. CI/CD pipelines, secrets management, environment provisioning, Git workflows, Kubernetes tuning, Airflow upgrades -- this is real engineering work that pulls your team away from delivering data products.

Datacoves eliminates that overhead. It provides managed dbt Core and managed Airflow in a unified environment deployed in your private cloud. Developers get an in-browser VS Code editor with dbt, Python, extensions, and Git pre-configured. Orchestration is handled through Datacoves' managed Airflow, which includes a simplified YAML-based DAG configuration that connects Extract, Load, and Transform steps without writing boilerplate Python.

Best practices, governance guardrails, and accelerators are built in, so teams get a production-grade data platform in minutes instead of months. For a deeper look at the tradeoffs, see our guide on building vs. buying your analytics platform.

To learn more about Datacoves, check out our product page.

This is not an either-or decision. dbt and Airflow solve different problems, and trying to force one tool to do the other's job creates fragile, hard-to-maintain pipelines.

Use dbt for transformation, testing, documentation, and lineage. Use Airflow for scheduling, dependency management, retries, and end-to-end pipeline coordination. The comparison table below shows where each tool fits.

If you need to pick one tool to start with, here's the decision framework:

Start with dbt if your immediate priority is transforming raw data into reliable, tested models in the warehouse. dbt gives you version control, testing, documentation, and lineage from day one. For small teams with simple pipelines, a cron job or dbt Cloud's scheduler can handle automation in the short term.

Start with Airflow if you already have multiple systems that need coordination: extraction from SaaS sources, loading into the warehouse, transformation, and downstream activation. Airflow gives you end-to-end visibility and control across the full pipeline.

The reality: most teams outgrow a single-tool approach quickly. Once your pipeline spans more than one step, you need both. The question isn't which tool to pick, it's how soon you'll need the other one. If you're still evaluating transformation tools, check out our dbt alternatives comparison.

In a production pipeline, the tools fit together like this:

Airflow owns the "when" and "what order." dbt owns the "how" for transformation. Neither tool steps on the other's responsibilities.

The challenge is running them together. Deploying Airflow and dbt Core in a shared, production-grade environment requires Kubernetes, CI/CD, secrets management, environment provisioning, and ongoing maintenance. For teams that don't want to build and maintain that platform layer themselves, a managed solution like Datacoves provides both tools pre-integrated in your private cloud -- so your team focuses on data, not infrastructure.

dbt and Airflow are not competing tools. dbt transforms your data. Airflow orchestrates your pipeline. Trying to use one without the other leaves gaps that show up as fragile pipelines, manual workarounds, and constant firefighting. The teams that struggle most are the ones who pick the right tools but never invest in the platform layer that makes them work together.

The real question is not "dbt vs Airflow," it's how you run them together without building and maintaining a platform from scratch. That infrastructure layer (Kubernetes, CI/CD, secrets, environments, Git workflows, upgrades) is where most teams burn time and budget.

Datacoves gives you managed dbt Core and managed Airflow in a unified environment, deployed in your private cloud, with best practices and governance built in. Your team focuses on delivering data products, not maintaining the platform underneath them.

dbt, also known as data build tool, is a data transformation framework that leverages templated SQL to transform and test data. dbt is part of the modern data stack and helps practitioners apply software development best practices on data pipelines. Some of these best practices include code modularity, version control, and continuous testing via its built-in data quality framework. In this article we will focus on how data can be tested with dbt via built-in functionality and with additional dbt packages and libraries.

Adding tests to workflows does more than ensure code and data integrity; it facilitates a continuous dialogue with your data, enhancing understanding and responsiveness. By integrating testing into your regular workflows, you can:

By embedding testing into the development cycle and consuming the results diligently, teams not only safeguard the functionality of their data transformations but also enhance their overall data literacy and operational efficiency. This proactive approach to testing ensures that the insights derived from data are both accurate and actionable.

In dbt, there are two main categories of tests: data tests and unit tests.

Data tests are meant to be executed with every pipeline run to validate the integrity of the data and can be further divided into two types: Generic tests and Singular tests.

Regardless of the type of data test, the process is the same behind the scenes: dbt will compile the code to a SQL SELECT statement and execute it against your database. If any rows are returned by the query, this indicates a failure to dbt.

Unit tests, on the other hand, are meant to validate your transformation logic. They rely on predefined data for comparison to ensure your logic is returning an expected result. Unlike data tests, which are meant to be run with every pipeline execution, unit tests are typically run during the CI (Continuous Integration) step when new code is introduced. Unit tests were incorporated in dbt Core as of version 1.8.


These are foundational tests provided by dbt-core, focusing on basic schema validation and source freshness. These tests are ideal for ensuring that your data sources remain valid and up-to-date.

dbt-core provides four built-in generic tests that are essential for data modeling and ensuring data integrity:

unique: verifies that every value in a column (e.g. customer_id) is unique. This is useful for finding records that may inadvertently be duplicated in your data.

not_null: checks that the values for a given column are always present. This can help you find cases where data in a column suddenly arrives without being populated.

accepted_values: validates that every value in a column belongs to a defined set. For example, a column called payment_status may contain values like pending, failed, accepted, rejected, etc. This test verifies that each row contains one of these payment statuses and nothing else. This is useful for detecting changes in the data, such as accepted being replaced with approved.

relationships: these tests check referential integrity. This type of test is useful when you have related columns (e.g. the customer identifier) in two different tables. One table serves as the “parent” and the other is the “child” table. This is common when one table has a transaction and only lists a customer_id and the other table has the details for that customer. With this test we can verify that every row in the transaction table has a corresponding record in the dimension/details table. For example, if you have orders for customer_ids 1, 2, 3 we can validate that we have information about each of these customers in the customer details table.
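To make the four built-in tests concrete, here is a minimal sketch of the kind of query each one boils down to, run against an in-memory SQLite database standing in for the warehouse. The tables, columns, and test names are hypothetical, and the SQL is a simplified approximation rather than the exact SQL dbt generates. Each query returns the offending rows, so any returned row signals a failure.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    create table customers (customer_id integer, name text);
    create table orders (
        order_id integer, customer_id integer, payment_status text
    );
    insert into customers values (1, 'Ana'), (2, 'Ben'), (2, 'Ben again');
    insert into orders values
        (10, 1, 'accepted'),
        (11, 2, 'approved'),
        (12, 3, 'pending'),
        (13, null, 'failed');
""")

# Each test is a SELECT that returns the rows violating the assertion.
TESTS = {
    "unique_customers_customer_id": """
        select customer_id from customers
        group by customer_id having count(*) > 1
    """,
    "not_null_orders_customer_id": """
        select * from orders where customer_id is null
    """,
    "accepted_values_orders_payment_status": """
        select * from orders
        where payment_status not in
            ('pending', 'failed', 'accepted', 'rejected')
    """,
    "relationships_orders_customer_id": """
        select o.customer_id from orders o
        left join customers c on o.customer_id = c.customer_id
        where o.customer_id is not null and c.customer_id is null
    """,
}


def run_tests(connection, tests):
    """Return the number of failing rows for each test."""
    return {
        name: len(connection.execute(sql).fetchall())
        for name, sql in tests.items()
    }


results = run_tests(conn, TESTS)
for name, failures in results.items():
    status = "PASS" if failures == 0 else f"FAIL ({failures} rows)"
    print(f"{name}: {status}")
```

The sample data is deliberately dirty: it contains a duplicated customer, an order with no customer, an unexpected status, and an order pointing at a customer that does not exist, so every test reports a failure.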

Using a generic test is done by adding it to the model's property (yml) file.

Generic tests can accept additional test configurations such as a where clause to apply the test on a subset of rows. This can be useful on large tables by limiting the test to recent data or excluding rows based on the value of another column. Since an error will stop a dbt build or dbt test of the project, it is also possible to assign a severity to a test and optionally a threshold where errors will be treated as warning instead of errors. Finally, since dbt will automatically generate a name for the test, it may be useful to override the auto generated test name for simplicity. Here's the same property file from above with the additional configurations defined.
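The interaction between severity and thresholds can be sketched in a few lines of Python. This models our understanding of the documented behavior of dbt's `severity`, `warn_if`, and `error_if` configurations; the function names and the tiny condition parser are hypothetical simplifications, not dbt code.

```python
import operator

# Comparison operators supported by this simplified condition parser.
# Longer symbols come first so ">=" is not mistaken for ">".
_OPS = [
    (">=", operator.ge),
    ("<=", operator.le),
    ("!=", operator.ne),
    (">", operator.gt),
    ("<", operator.lt),
    ("=", operator.eq),
]


def condition_met(condition, failures):
    """Evaluate a condition string such as '>10' or '!=0' against a count."""
    text = condition.strip()
    for symbol, compare in _OPS:
        if text.startswith(symbol):
            return compare(failures, int(text[len(symbol):]))
    raise ValueError(f"unsupported condition: {condition!r}")


def classify(failures, severity="error", warn_if="!=0", error_if="!=0"):
    """Return 'error', 'warn', or 'pass' for a given number of failing rows."""
    # With severity 'error', the error threshold is checked first.
    if severity == "error" and condition_met(error_if, failures):
        return "error"
    # Otherwise (or with severity 'warn') only the warning threshold applies.
    if condition_met(warn_if, failures):
        return "warn"
    return "pass"


print(classify(5, warn_if=">0", error_if=">10"))
print(classify(50, warn_if=">0", error_if=">10"))
print(classify(50, severity="warn"))
```

With thresholds like these, a handful of bad rows produces a warning while a larger number stops the build, which is often a more practical policy for large tables than failing on the first bad row.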

Singular tests allow for the customization of testing parameters to create tailored tests when the default generic ones (or the ones in the packages discussed below) do not meet your needs. These tests are simple SQL queries that express assertions about your data. An example of this type of test can be a more complex assertion such as having sales for one product be within +/- 10% of another product. The SQL simply needs to return the rows that do not meet this condition.
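As a sketch of the +/- 10% example, the query below returns the months in which one product's sales fall outside 10% of another's. The table, column, and product names are hypothetical, and SQLite stands in for the warehouse; in a dbt project only the SELECT statement would be saved as the singular test.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    create table monthly_sales (month text, product text, amount real);
    insert into monthly_sales values
        ('2024-01', 'product_a', 100.0),
        ('2024-01', 'product_b', 105.0),
        ('2024-02', 'product_a', 100.0),
        ('2024-02', 'product_b', 130.0);
""")

# Return the rows that violate the assertion: product_b sales must stay
# within 10% of product_a sales for the same month.
SINGULAR_TEST = """
    select a.month, a.amount as product_a_sales, b.amount as product_b_sales
    from monthly_sales a
    join monthly_sales b on a.month = b.month
    where a.product = 'product_a'
      and b.product = 'product_b'
      and abs(b.amount - a.amount) > 0.10 * a.amount
"""

failing_rows = conn.execute(SINGULAR_TEST).fetchall()
print(failing_rows)
```

January passes because the difference is 5% of product_a's sales; February is returned because the difference is 30%.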

In dbt, it is also possible to define your own custom generic tests. This may be useful when you find yourself creating similar Singular tests. A custom generic test is essentially the same as a dbt macro which has at least a model as a parameter, and optionally column_name, if the test will apply to a column. Once the generic test is defined, it can be applied many times just like the generic tests shipped with dbt Core. It is also possible to pass additional parameters to a custom generic test.

As our data transformations become more complex, the need for testing becomes increasingly important. The concept of unit testing is already well established in software development, where tests confirm that individual units of code work as intended. Recognizing this, dbt 1.8 introduced unit testing.

Unlike the data tests we have above, which ensure that incoming data meets specific criteria and are run at every data refresh, unit tests are designed to verify that the transformation logic itself produces the expected results. In the context of dbt, unit tests validate transformation logic by comparing the test results against predefined data typically defined using seeds (CSV files) or SQL queries. Unit tests should only be executed when new data transformation code is introduced and implemented since they are designed to help catch potential issues early in the development process. It is recommended to run unit tests only during the CI step. Running them in production would be a redundant use of compute resources because the expected outcomes do not change. Unit testing is only available in 1.8 or higher, but there are community packages (dbt-unit-testing, dbt_datamocktool, dbt-unittest) that have worked to solve this problem and are worth exploring if you are not using dbt 1.8.
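The idea behind a unit test (fixed input, fixed expected output, no production data) can be sketched as follows. This is not dbt's unit testing syntax; it is an illustration in Python with SQLite, and the model SQL, table, and helper function are hypothetical.

```python
import sqlite3

# Hypothetical transformation under test: total order amount per customer.
MODEL_SQL = """
    select customer_id, sum(amount) as total_amount
    from orders
    group by customer_id
    order by customer_id
"""


def run_unit_test(model_sql, given_rows, expected_rows):
    """Load mock input rows, run the model SQL, and compare with expectations."""
    conn = sqlite3.connect(":memory:")
    conn.execute("create table orders (customer_id integer, amount real)")
    conn.executemany("insert into orders values (?, ?)", given_rows)
    actual = conn.execute(model_sql).fetchall()
    conn.close()
    return actual == expected_rows, actual


passed, actual = run_unit_test(
    MODEL_SQL,
    given_rows=[(1, 10.0), (1, 5.0), (2, 7.5)],
    expected_rows=[(1, 15.0), (2, 7.5)],
)
print("PASS" if passed else f"FAIL: got {actual}")
```

Because both the input and the expected output are fixed, the result only changes when the transformation logic changes, which is why running this kind of test on every production refresh adds cost without adding information.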

While not technically a dbt test, a freshness check validates the timeliness of source data. The freshness check in dbt Core is designed to monitor the timeliness of the data. It helps ensure that the data loaded into your warehouse is updated regularly and remains relevant for decision-making processes. This is valuable because sometimes data will stop getting refreshed and the data pipelines will continue to run with a silent failure. To assure that you are alerted when a data delivery SLA is not met, simply add a freshness check to your sources.
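The logic of a freshness check is simple enough to sketch: compare the most recent load timestamp against a warning and an error threshold. The function and parameter names below are hypothetical and only loosely mirror the warn and error thresholds used in dbt's source freshness configuration.

```python
from datetime import datetime, timedelta, timezone


def freshness_status(max_loaded_at, now, warn_after, error_after):
    """Classify source data as 'pass', 'warn', or 'error' based on its age."""
    age = now - max_loaded_at
    if age > error_after:
        return "error"
    if age > warn_after:
        return "warn"
    return "pass"


# Hypothetical SLA: warn after 12 hours without new data, error after 24.
now = datetime(2024, 5, 2, 12, 0, tzinfo=timezone.utc)
last_load = datetime(2024, 5, 1, 18, 0, tzinfo=timezone.utc)  # 18 hours ago

status = freshness_status(
    max_loaded_at=last_load,
    now=now,
    warn_after=timedelta(hours=12),
    error_after=timedelta(hours=24),
)
print(status)
```

In a real project `max_loaded_at` would come from a timestamp column in the source table, so the check catches a stalled load even when every downstream job still reports success.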

This comprehensive suite of testing capabilities in dbt Core ensures that data teams can build, maintain, and verify the reliability and accuracy of their data models effectively.

In addition to the generic tests that can be found within dbt Core, there are a lot more in the dbt ecosystem. These tests are found in dbt packages. Packages are libraries of reusable SQL code created by organizations of the dbt community. We will briefly go over some of the tests that can be found in these packages.

The dbt-utils package, created by dbt Labs, contains generic dbt tests, SQL generators, and macros. Its generic tests include:

not_accepted_values: this test is the opposite of the accepted_values test and is used to check that specific values are NOT present in a particular range of rows.

equal_rowcount: this test checks that two different tables have the same number of rows. This is a useful test that can assure that a transformation step does not accidentally introduce additional rows in the target table.

fewer_rows_than: this test is used to verify that a target table contains fewer rows than a source table. For example, if you are aggregating a table, you expect that the target table will have fewer rows than the table you are aggregating. This test can help you validate this condition.

There are 17 generic dbt tests available in the dbt-utils package.

Another awesome package that can accelerate your data testing is dbt-expectations. This package is a port of the great Python library Great Expectations. For those not familiar, Great Expectations is an open-source Python library that is used for automated testing. dbt-expectations is modeled after this library and was developed by Calogica so dbt practitioners would have access to an additional set of pre-created Generic tests without adding another tool to the data platform. Tests in dbt-expectations are divided into seven categories encompassing a total of 62 generic dbt tests:

You can find detailed information on all the dbt-expectations generic tests in their documentation.

Created by Snowflake, dbt_constraints adds primary and foreign key constraints to dbt models. When incorporated into a dbt project, this package automatically creates unique keys for all existing unique and dbt_utils.unique_combination_of_columns tests, along with foreign keys for existing relationship tests and not null constraints for not_null tests. It provides three flexible tests - primary_key, unique_key, and foreign_key - which can be used inline, out-of-line, and support multiple columns.

The elementary tool offers 10 generic dbt tests that help in detecting schema changes, validating JSON schemas, and monitoring anomalies in source freshness, among other functionalities.

dbt-fihr focuses on the healthcare sector, providing 20 generic dbt tests for validating HL7® FHIR® (Fast Healthcare Interoperability Resources) data types, a standard for exchanging healthcare information across different systems.

Maintained by Google, the fhir-dbt-analytics package includes tests that ensure the quality of clinical data. These tests might involve counting the number of FHIR resources to verify expected counts or checking references between FHIR resources.

By leveraging these diverse dbt testing packages, data teams can significantly enhance their data validation processes, ensuring that their data pipelines are robust, accurate, and reliable.

While the tests above run against production data and are run even when none of the dbt code has changed, there are some tests that should be applied during development. This will improve a project's long term maintainability, assure project governance, and validate transformation logic in isolation of production data.

This dbt-meta-testing package contains macros to assert test and documentation coverage leveraging a configuration defined in the dbt_project.yml configuration settings.

While dbt tests are great to test with "real" data, sometimes you may want to test the logic of a transformation with "fake" data. This type of test is called a unit test. The dbt-unit-testing package has all you need to do proper dbt unit testing. (Side note: native unit testing is part of dbt Core as of version 1.8, although it does not work exactly as it does in this package.)

dbt_datamocktool can be used to create mock CSV seeds to stand in for the sources and refs that your models use and test that the model produces the expected output as compared with another CSV seed.

The dbt-unittest is a dbt package to enhance dbt package development by providing unit testing macros.

Incorporating automated data validation into CI/CD pipelines helps catch issues early and ensures data accuracy before deployment. By integrating tests into every code change, teams can prevent bad data from reaching production and maintain reliable data pipelines.

dbt-checkpoint is a library that can be leveraged during the development and release life-cycle to assure a level of governance of the dbt project. Typical validations include assuring that dbt models and/or their columns have descriptions and that all the columns in a dbt model (sql) are present in a property file (yml).

Recce is an open-source data validation toolkit for comprehensive PR review in dbt projects. Recce helps to validate the data impact of code changes during development and PR review by enabling you to compare data structure, profiling statistics, and queries between two dbt environments, such as dev and prod. By performing Recce checks, you are able to identify unexpected data impact, validate expected impact, and prevent bad merges and incorrect data entering production.

Recce checks can be performed during development, automatically as part of CI, and as part of PR review for root cause analysis. The suite of tools in Recce enables you to perform:

Record the results of your data validations in the Checklist and share as part of PR review or discussion with stakeholders.

For full coverage, use Recce’s automated ‘preset checks’ that are triggered with each pull request and automatically post an impact summary to your PR comment.

Recce Cloud users can also take advantage of check-syncing and PR merge-blocking until the reviewer or stakeholders have approved the check results.

By default, dbt will not store the results of a dbt test execution. There is a configuration that can be set for the dbt project or at the specific model level which will have dbt store the failures of the test in a table in the data warehouse. While this is a good start, these test results get overridden each time dbt tests are run. To overcome this deficiency, tools have been developed in the community that store results longitudinally and even provide dashboards of test results.
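The difference between overwriting results and storing them longitudinally comes down to appending each run with a timestamp. The sketch below shows the idea with SQLite; the table layout, function name, and test names are hypothetical and do not reflect how any particular tool stores its results.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    create table test_history (
        run_at text, test_name text, failures integer
    )
""")


def record_run(connection, run_at, results):
    """Append one row per test for this run instead of replacing old rows."""
    connection.executemany(
        "insert into test_history values (?, ?, ?)",
        [(run_at, name, failures) for name, failures in results.items()],
    )


# Two hypothetical runs on consecutive days.
record_run(conn, "2024-05-01T06:00:00", {
    "unique_orders_order_id": 0,
    "not_null_orders_customer_id": 3,
})
record_run(conn, "2024-05-02T06:00:00", {
    "unique_orders_order_id": 0,
    "not_null_orders_customer_id": 0,
})

# The history of a single test across runs.
history = conn.execute("""
    select run_at, failures from test_history
    where test_name = 'not_null_orders_customer_id'
    order by run_at
""").fetchall()
print(history)
```

With the history preserved, it becomes possible to answer questions such as when a test started failing and how long it took to recover.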

Elementary is an open source data observability tool for dbt. It simplifies the capture of dbt test results over time, enables testing without having to manually add tests to all your dbt model columns, and has a user interface for viewing test results as well as dbt lineage.

Elementary also provides advanced configurations for generating Slack alerts for dbt tests, enhancing how teams monitor and respond to data quality issues. You can configure alerts based on test results, test statuses, and test durations. Additionally, you can set up recurring alerts based on a schedule that you define, ensuring continuous oversight without constant manual checking.

Key features include:

This comprehensive suite of tools not only sends notifications but also allows for significant customization, ensuring that alerts are meaningful and actionable. The integration of these features into your workflow facilitates better data management and quicker response to potential data discrepancies, streamlining your project's efficiency and reliability.

This dbt Data Quality package is a Snowflake only package that helps users access and report on the outputs from dbt source freshness and dbt test results.

The dbt-tools package makes it simple to store and visualize dbt test results in a BI dashboard.

re_data is an open-source data reliability framework for the modern data stack.

When migrating data from one system to another, validating that tables match is incredibly important. For this we recommend datacompy to get the job done.

Getting started with dbt testing is simple thanks to the predefined generic dbt tests found within dbt Core and the additional generic tests found in dbt-utils and dbt-expectations. In addition to these juggernauts of the dbt community, other organizations have contributed additional generic tests, tools to improve dbt development, libraries that can help with validation and governance before releasing code to production, and tools that can improve data quality observability. If you are using dbt Cloud or dbt Core, you may be interested in reading more about dbt alternatives such as Datacoves, which falls under the managed dbt Core solutions.

You now know what dbt (data build tool) is all about. You are being productive, but you forgot what `dbt build` does or you forgot what the @ dbt graph operator does. This handy dbt cheat sheet has it all in one place.

With the advent of dbt 1.6, we updated the awesome dbt cheat sheet created originally by Bruno de Lima.

We have also moved the dbt Jinja cheat sheet to a dedicated post.

This reference summarizes all the dbt commands you may need as you run your dbt jobs or study for your dbt certification.

If you ever wanted to know what the difference between +model and @model is in your dbt run, you will find the answer. Whether you are trying to understand dbt graph operators or what the dbt retry command does, this cheat sheet has you covered. Check it out below.

These are the principal commands you will use most frequently with dbt. Not all of these will be available on dbt Cloud.

The dbt commands above have options that allow you to select and exclude models as well as defer to another environment like production instead of building dependent models for a given run. This table shows which options are available for each dbt command.

By combining the arguments above like "-s" with the options below, you can tell dbt which items you want to select or exclude. This can be a specific dbt model, everything in a specific folder, or now with the latest versions of dbt, the specific version of a model you are interested in.

dbt graph operators provide a powerful syntax that allows you to home in on the specific items you want dbt to process.
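To illustrate how the `+` and `@` graph operators differ, here is a small sketch that applies them to a hypothetical DAG. It models our understanding of the selection semantics (`+model` adds ancestors, `model+` adds descendants, `@model` adds descendants plus all ancestors of those descendants) and is not dbt's own selection code; the model names and helper functions are assumptions.

```python
# Hypothetical DAG: each model maps to the models it depends on (its parents).
PARENTS = {
    "stg_customers": set(),
    "stg_orders": set(),
    "stg_payments": set(),
    "customer_orders": {"stg_customers", "stg_orders"},
    "order_payments": {"stg_orders", "stg_payments"},
    "revenue_report": {"order_payments"},
}


def ancestors(model):
    """All models upstream of the given model."""
    found = set()
    stack = list(PARENTS[model])
    while stack:
        node = stack.pop()
        if node not in found:
            found.add(node)
            stack.extend(PARENTS[node])
    return found


def descendants(model):
    """All models downstream of the given model."""
    return {name for name in PARENTS if model in ancestors(name)}


def select(selector):
    """Resolve '+model', 'model+', '+model+', '@model', or 'model'."""
    if selector.startswith("@"):
        model = selector[1:]
        group = descendants(model) | {model}
        result = set(group)
        for node in group:
            result |= ancestors(node)
        return result
    model = selector.strip("+")
    result = {model}
    if selector.startswith("+"):
        result |= ancestors(model)
    if selector.endswith("+"):
        result |= descendants(model)
    return result


print(sorted(select("stg_payments+")))
print(sorted(select("@stg_payments")))
```

In this DAG, `stg_payments+` leaves out `stg_orders`, while `@stg_payments` includes it because `order_payments` cannot be built without it. That is the practical reason to reach for `@` when building a model and everything downstream in a fresh environment.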

The following commands are used less frequently and perform actions like initializing a dbt project, installing dependencies, or validating that you can connect to your database.

The flags below immediately follow the dbt command and go before the subcommand e.g. dbt <FLAG> run

Read the official dbt documentation

As a managed dbt Core solution, the Datacoves platform simplifies the dbt Core experience and retains its inherent flexibility. It effectively bridges the gap, capturing many benefits of dbt Cloud while mitigating the challenges tied to a pure dbt Core setup. See if Datacoves dbt pricing is right for your organization or visit our product page.

Please contact us with any errors or suggestions.

I read an article on Anchor Data Modeling, more specifically, Atomic modeling where the author proposes a different way of Data Modeling. The rationale for this change is that there is a lack of skills to model data well. We are giving powerful tools to novices, and that is bound to lead to problems.

From the article:

"we are in a distressful situation both concerning the art as a whole but also its place in modern architectures"

Is this the case? Do we have a big problem on the horizon that requires us to make this big shift?

I'd say I am open-minded and expose myself to different ways of thinking so I can broaden my views. A few years ago, I learned a bit about COBOL, not because I had any real use for it but because I was curious. I found it very interesting and even saw its similarities with SQL. I approached the topic with no preconceived ideas; this is the first time I heard of Atomic Modeling.

The issues I see with ideas like Atomic data modeling are not in their goal. I am 100% aligned with the goal; the problem is the technology, process, and people needed to get there.

What we see in the market is a direct result of a backlash against doing things "perfectly." But why is this the case? I believe it is because we haven't communicated how we will achieve this vision of ideas like atomic data. The author even says a key phrase in the first paragraph:

"practitioners shying away from its complexity"

If doing anchor data modeling is "complex," how are we going to up-skill people? Is this feasible? I am happy if I can get more people to use SQL vs a GUI tool like Alteryx 😁

I am by no means an expert. Yet, I am fairly technical, and if I am not convinced, how will we convince decision-makers?

As I read this article, here's what I am thinking:

1. First, I will need to deconstruct the data I get from some source like material master data from SAP. That will be a bunch of tables, and who is going to do all this data modeling? It sounds expensive and time-consuming.

2. I am going to need some tooling for this, and I am either going to build it or use something a few others are using. Will my company want to take a chance on something this early? This sounds risky.

3. After I deconstruct all this data, I need to catalog all these atoms. I now have a lot of metadata, and that's good, but is the metadata business-friendly? We can't get people to add table descriptions, so how is this going to happen with this explosion of objects? Who will maintain it? How will we expose this? Is there a catalog for it already? Does that catalog have the other features people need? It sounds like I need to figure out a bunch of things, the biggest one being the change management aspect.

4. What sort of database will I use to store this? This is a great use case for a graph database. But graph databases are not widely adopted, and I have seen graph databases choke at scale. We can use a relational database, but these joins are going to be complex. Someone may have figured all this out, but there's more tech and learning needed. It sounds like this will also add time and expense.

5. When I have managed to do all the above, I will need to construct what people can actually use. We need objects that work with tools that are available. I need to make relational tables I can query with SQL and viz tools, which are more user-friendly. This sounds like more work, more time, and more money.

I may have missed some steps and oversimplified what's needed for this type of change. I am also aware that I may not know what exists to solve all the above. However, if I don't know it, then there are a ton of other people who also don't know it and this is where we need to start. We need to understand how we will tactically achieve this "better" world.

I've had conversations on metadata-driven automation, and like atomic modeling, I am not clear on who we are helping and how. What are we improving and in what timeframe? In the end, it feels like we have optimized for something only a few companies can do. To do anchor modeling well would be a huge expense, and when things go wrong, there are several points of failure. When we look at business problems, we need to be sure to optimize the end-to-end system. We can't locally optimize one area because we are likely moving the problem somewhere else. This can be in terms of time, money, or usability.

Decision-makers are not interested in data modeling. They are expecting results and a faster time to market. It's hard enough getting people to do things "better." This is why I find it hard to imagine that we can get to this level of maturity any time soon.

There are incremental steps we can take to incorporate best practices into the modern data stack. We need to help people mature their data practice faster, and we should not let perfection get in the way of good. Most companies are not large enterprises with millions of dollars to spend on initiatives like atomic modeling. That being said, I have yet to see anchor modeling in practice, so I welcome the opportunity to learn. I remember years ago the debates about how Ruby on Rails was teaching people "bad practices." The other side of that argument is that Rails helped companies like Twitter and GitHub launch businesses faster. Rails was also better than the alternative at the time, which included messy PHP code. Others advocated for well-crafted "scalable" and expensive Java applications. Rails may not be the powerhouse it once was, but it has had a huge influence on how we build software. I even see its influence in dbt even if it might not have been intentional or direct.

Tools like Snowflake and dbt allow us to build processes that are much better than what most people have. Should we focus on all the "bad" things that may come with the modern data stack? Should we focus on how practitioners are not well educated, and so we need to throw all they are doing out?

I don't think so; I believe that we can help companies mature their data practices faster. Will we have the best data models? Maybe not. Will users do things perfectly? Nope. But can we help them move faster and guide them along their journey to avoid big pitfalls? I think we can. Getting people to use git, automating testing, and creating DataOps processes is a huge step forward for many organizations. Let's start there.

There's a reason Data Mesh and the Modern Data Stack resonate with so many people. There's a desire to do things faster with more autonomy at many companies, not just the ones with multi-million-dollar budgets. Let's focus on what is achievable, do the best we can, and help people mature along the way. We don't need more complexity; we need less.

Using dbt with Snowflake is one of the most popular and powerful data stacks today. Are you facing one of these situations:

- You just joined a data team using dbt Core with Snowflake, and you want to set up your local dbt development environment.

- Your company is already using Snowflake and wants to try out dbt.

- You are using dbt with another data warehouse and you want to try dbt with Snowflake before migrating.

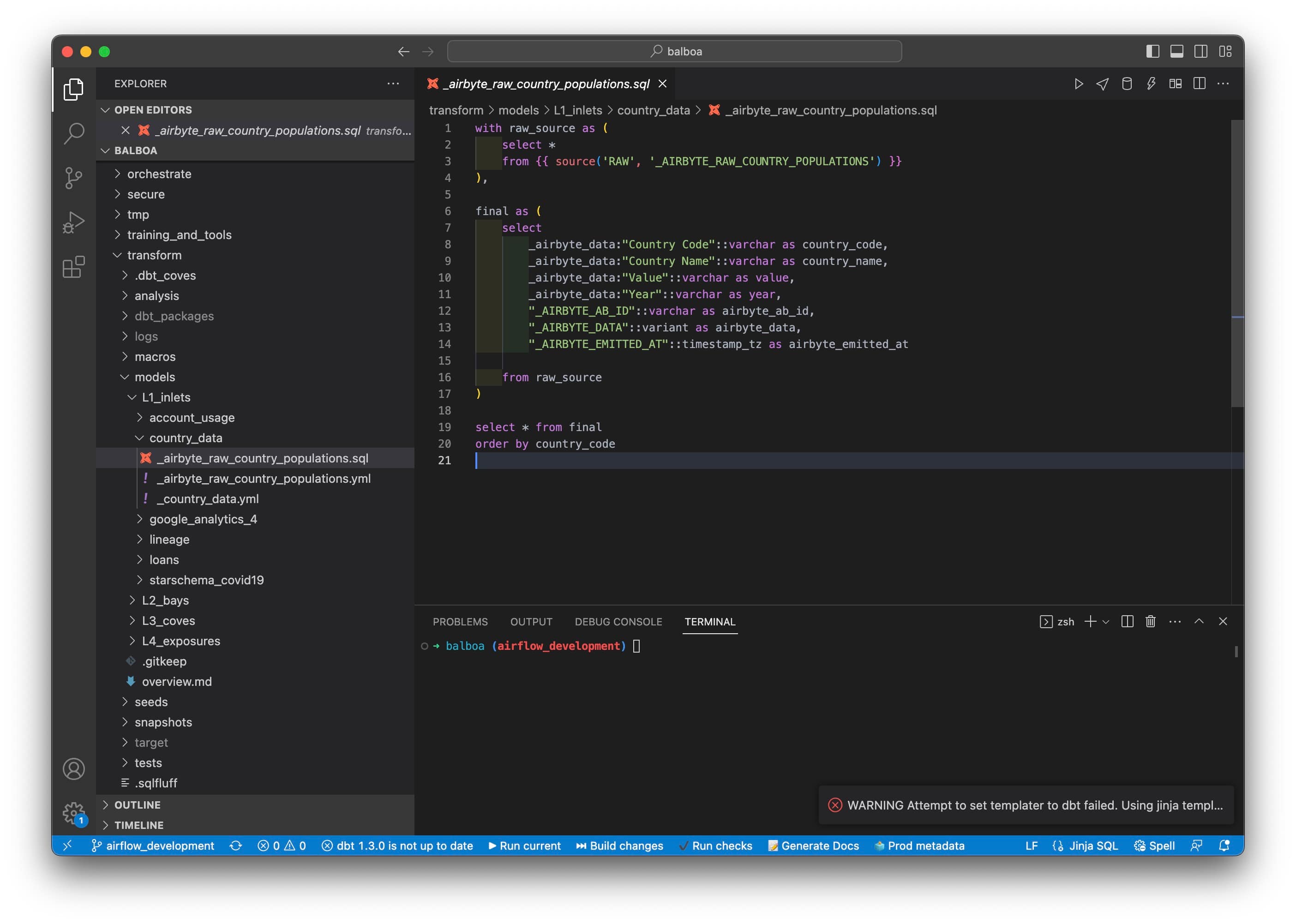

If you are facing any of these, this guide will help you set up your dbt environment for Snowflake using VS Code as your dbt development environment. We will also cover useful Python libraries and VS Code extensions that will make you more productive. Want to become a dbt on Snowflake ninja? Keep reading.

To get started, you need to set up your dbt development environment. This includes Python, dbt, and VS Code. You will also need to set up your Snowflake account properly so dbt can work its magic.

While dbt supports Python 3.7 and later, some other tools like Snowpark require Python 3.8, so we recommend you stick with that version. You can find the installer for your particular operating system on this page. Finding the right Windows installer can be confusing, so look for a link titled Windows installer (64-bit). If you are on a Mac, you can use the macOS 64-bit universal2 installer.

When using dbt, you will also need Git. To set up Git, follow this handy guide.

The preferred IDE for dbt is VS Code. You can find it on the official Microsoft site. Click the big Download button and install it like any other app.

Installing dbt is done using pip. You can find more information on this page.

For dbt with Snowflake, simply run `pip install dbt-snowflake`.

This will install the latest version of dbt Core along with the dbt adapter for Snowflake. If you need to use an older version of dbt, you will need to specify it when you run pip. However, the version of the dbt adapter may not match the version of dbt Core.

For example, as of this writing, the last version of dbt Core 1.3.x is 1.3.4. However, dbt-snowflake for 1.3.x is 1.3.2. So, if you want to install the latest dbt 1.3.x for Snowflake, you would run `pip install dbt-snowflake==1.3.2`.

This will install dbt-snowflake 1.3.2 along with dbt-core 1.3.4.

The final prerequisite is to set up a Snowflake user that has been granted a role with the right access to a database where dbt will create views and tables. The role should also have access to a Snowflake warehouse for compute. Here is a handy guide that gives you the basics. We would recommend a more comprehensive setup for a production deployment, but this will get you going for now.

The key items not covered in that guide are that you should create a role ANALYST and a database ANALYTICS_DEV, and grant OWNERSHIP of that database to the ANALYST role. The ANALYST role should also be granted USAGE on the TRANSFORMING warehouse. You also need to grant the ANALYST role to your specific user. Don’t run as ACCOUNTADMIN.

All of this is needed because, when dbt runs, it will create a schema for your user in the ANALYTICS_DEV database, and it will use the TRANSFORMING warehouse whenever compute is needed, such as when dbt creates tables.
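The role, database, and grants described above can be sketched as a short SQL script. The snippet below only writes the statements to a file (the file name `snowflake_dbt_setup.sql` is hypothetical) so they can be reviewed and then run in a Snowflake worksheet by someone with the required administrative privileges. `MY_USER` is a placeholder for your own user, and the TRANSFORMING warehouse is assumed to exist already.

```shell
# Write the role and grant statements to a file for review before running them.
# MY_USER is a placeholder; replace it with your Snowflake user name.
cat > snowflake_dbt_setup.sql <<'SQL'
-- Role that dbt will use during development
CREATE ROLE IF NOT EXISTS ANALYST;

-- Development database, owned by ANALYST so dbt can create schemas in it
CREATE DATABASE IF NOT EXISTS ANALYTICS_DEV;
GRANT OWNERSHIP ON DATABASE ANALYTICS_DEV TO ROLE ANALYST;

-- Compute for dbt runs (assumes the TRANSFORMING warehouse already exists)
GRANT USAGE ON WAREHOUSE TRANSFORMING TO ROLE ANALYST;

-- Let your user assume the role
GRANT ROLE ANALYST TO USER MY_USER;
SQL
```

If the database already exists and has grants on it, the ownership transfer may need extra options, so treat this as a starting point for a fresh development setup.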

If all of this seems confusing or tedious, you should consider a managed dbt solution like dbt Cloud or Datacoves. For more information, check out our in-depth article where we compare dbt Cloud vs dbt Core as well as managed dbt Core.

Now with all the prerequisites out of the way, let’s configure dbt to connect with Snowflake.

To initialize your dbt project in Snowflake, dbt has a handy command: dbt init. You can configure your dbt Snowflake profile using the dbt init command for both a new and an existing dbt project. First, you will need to clone your repo using git. Then, simply run the dbt init command and go through the prompts.

Once you get your project set up, consider adding a profile_template.yml to your project. Using a profile template will simplify the dbt init process for users on your team, because shared values are filled in for them and they are only prompted for what is specific to them.
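As a rough illustration, a profile template for the setup in this guide might look like the sketch below. The `fixed` and `prompts` sections follow the layout described in the dbt documentation; the account identifier, the email hint, and the choice of `externalbrowser` authentication are assumptions you should replace with your own values.

```shell
# Run from the root of your dbt project (next to dbt_project.yml).
cat > profile_template.yml <<'YAML'
fixed:
  type: snowflake
  account: my_account        # placeholder: your Snowflake account identifier
  authenticator: externalbrowser
  role: ANALYST
  database: ANALYTICS_DEV
  warehouse: TRANSFORMING
prompts:
  user:
    type: string
    hint: yourname@example.com
  schema:
    type: string
    hint: usually dbt_<yourname>
  threads:
    type: int
    hint: number of parallel threads
    default: 8
YAML
```

With this file committed, teammates running dbt init in the cloned repo are only asked for the values listed under `prompts`.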

To make sure dbt can connect to Snowflake, run dbt debug. If dbt can connect to your Snowflake account, you should see “All checks passed!” If you have problems, join the dbt Community, search the forums, or ask a question in the #db-snowflake channel.

Even though dbt set up your profiles.yml to connect to Snowflake with your credentials, it only provides the basic setup. This page provides additional parameters that you can configure for the Snowflake connection in your profiles.yml file.

If you want to configure those parameters, you will need to open and edit the profiles.yml file. The profiles.yml file created by dbt init will be in your user’s home directory in a subdirectory called .dbt.

One handy configuration parameter to change is reuse_connections. Also, if you use SSO authentication with external browser, you should consider setting up connection caching on Snowflake, otherwise you will be prompted to authenticate for every connection dbt opens to the database.
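For reference, here is a sketch of what a Snowflake target with `reuse_connections` enabled could look like. It is written to a scratch file (`profiles.example.yml`, a hypothetical name) so that your real profiles.yml is left untouched; the profile name, account, user, and schema are placeholders.

```shell
# Write an example to a scratch file; copy the keys you need into
# the profiles.yml in the .dbt folder of your home directory.
cat > profiles.example.yml <<'YAML'
my_project:                      # placeholder: must match `profile:` in dbt_project.yml
  target: dev
  outputs:
    dev:
      type: snowflake
      account: my_account        # placeholder
      user: yourname@example.com # placeholder
      authenticator: externalbrowser
      role: ANALYST
      database: ANALYTICS_DEV
      warehouse: TRANSFORMING
      schema: dbt_yourname       # placeholder
      threads: 8
      reuse_connections: true    # reuse idle connections instead of opening new ones
YAML

# Connection caching is an account-level Snowflake setting that an
# administrator enables with:
#   ALTER ACCOUNT SET ALLOW_ID_TOKEN = TRUE;
```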

Now that you have set up your dbt connection to Snowflake, there are some other options you can configure when dbt runs against your Snowflake account. These include overriding the default warehouse for a specific model, adding query tags, and copying grants. This handy page has a lot more information on these advanced dbt Snowflake configurations.

Now that you have dbt connecting to your database, let’s talk about some Python libraries you should set up to improve how you work with dbt.
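To make those three options concrete, the sketch below creates a throwaway model that sets `snowflake_warehouse`, `query_tag`, and `copy_grants` in its config block. The model name, the TRANSFORMING_LARGE warehouse, and the tag value are hypothetical; only the config keys come from the dbt Snowflake adapter.

```shell
# Create an example model that overrides Snowflake-specific settings.
mkdir -p models
cat > models/large_model_example.sql <<'SQL'
{{
  config(
    materialized='table',
    snowflake_warehouse='TRANSFORMING_LARGE',
    query_tag='dbt_large_model',
    copy_grants=true
  )
}}

select 1 as id
SQL
```

The same keys can also be set for whole folders of models in dbt_project.yml, which is usually easier to maintain than repeating them per model.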

dbt-coves is an open-source library created by Datacoves to complement dbt development by simplifying tedious tasks like generating staging models. It is a must-have tool for any dbt practitioner who wants to improve their efficiency. dbt-coves will automatically create your source.yml and staging models as well as their corresponding YAML (property) files. It also has utilities for backing up Airbyte and Fivetran configurations.

SQLFluff is a Python library for linting SQL code. SQLFluff seamlessly integrates with dbt using a templater, and it is the only linter compatible with dbt. If you have not heard of code linting, it helps you enforce rules on how your SQL code is formatted. For example, should everyone use leading or trailing commas? Should SQL keywords be upper or lower case? We recommend everyone use a linter, as this will improve code readability and long-term maintainability.
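A minimal SQLFluff configuration for a dbt project on Snowflake might look like the sketch below. It assumes the `sqlfluff` and `sqlfluff-templater-dbt` packages are installed and that the file lives in the root of a project that does not yet have a `.sqlfluff` file. The two rule sections answer the comma and keyword-case questions above; the choices shown (trailing commas, lowercase keywords) are only examples.

```shell
# Create a starter .sqlfluff file in the dbt project root.
cat > .sqlfluff <<'INI'
[sqlfluff]
templater = dbt
dialect = snowflake

[sqlfluff:templater:dbt]
project_dir = ./

# Rule names below follow SQLFluff 2.x; older releases use codes such as L010 and L019.
[sqlfluff:rules:layout.commas]
line_position = trailing

[sqlfluff:rules:capitalisation.keywords]
capitalisation_policy = lower
INI
```

With the file in place, running sqlfluff lint against your models folder reports any violations of these rules.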

pre-commit with dbt-checkpoint

dbt-checkpoint is a tool that allows you to make sure your dbt project complies with certain governance rules. For example, you can have a rule that validates whether every dbt model has a description. You can also ensure that every column is documented, among many other rules. We also recommend the use of dbt-checkpoint, as it helps ensure developers don’t add technical debt from the start of a project.

In addition to these Python libraries, at Datacoves we set up the development environment with other handy libraries like Snowpark and Streamlit. We believe that flexibility is important, especially in enterprise environments. If you want to learn what to consider when selecting a managed dbt Core platform, check out our guide.
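The two rules mentioned above map to dbt-checkpoint hooks that run through pre-commit. The sketch below writes a starter `.pre-commit-config.yaml`; the `rev` value is an assumption, so pin it to whichever dbt-checkpoint release you have vetted, and note that the file would replace an existing pre-commit configuration.

```shell
# Create a starter pre-commit configuration in the dbt project root.
cat > .pre-commit-config.yaml <<'YAML'
repos:
  - repo: https://github.com/dbt-checkpoint/dbt-checkpoint
    rev: v1.2.0   # assumption: pin to a release you have vetted
    hooks:
      - id: dbt-parse                      # builds the manifest the checks read
      - id: check-model-has-description    # every model needs a description
      - id: check-model-columns-have-desc  # every column needs a description
YAML

# After installing pre-commit, register the hooks for this repo with:
#   pre-commit install
```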

In addition to Python libraries, you can improve your dbt workflow with Snowflake by installing these VS Code extensions.

The official Snowflake VS Code extension keeps you in the flow by bringing the Snowflake interface to VS Code. With it you can explore your database, run queries, and even upload and download files to Snowflake stages. It is a must-have for any Snowflake user.

dbt Power User is a VS Code extension that improves the dbt development experience by adding handy shortcuts to run dbt models and tests. It even lets you preview the result of a dbt model or a CTE within that model.

The SQLFluff VS Code extension is the companion to the SQLFluff Python library. It improves the development experience by highlighting linting errors right in line with your SQL code. It even has a handy hover which describes the linting error and links to the SQLFluff documentation.

There are many other great VS Code extensions, and at Datacoves we are always improving the dbt developer’s experience by pre-installing them. One recent addition, demonstrated in the video below, is a ChatGPT extension that improves the dbt experience by writing documentation, among other functions.

Getting started with dbt and Snowflake is not hard, and knowing how to streamline the development experience when working with dbt and Snowflake will maximize developer happiness.

Some users may run into issues configuring their development environment. If that happens, check out the #sqlfluff, #tools-dbt-libraries, and #tools-vscode channels on the dbt Slack community. There are many helpful people there always ready to help.

As you can see, there are a lot of steps and potential gotchas to get a dbt environment properly configured. This gets more complicated as the number of dbt users increases. Upgrading everyone can also pose a challenge. These reasons and more are why we created the most flexible managed dbt Core environment. If you want your developers to be up and running in minutes with no installation required, reach out and we can show you how we can streamline your team’s dbt experience with best practices from the start.

Since its inception, dbt has served the needs of small to mid-sized companies to transform data into actionable insights. Now, as larger enterprises look to adopt dbt, they must consider the complexities unique to their environments. These include integrating with existing systems behind a corporate firewall, orchestrating end-to-end data pipelines, and implementing DataOps processes to transform how teams collaborate across the organization.

This article compares three popular dbt development and deployment options: leveraging dbt Cloud, using dbt Core alone, or using a dbt Core managed platform other than dbt Cloud. To learn more about specific dbt Cloud features and how to implement them with dbt Core and other open-source tools, check out dbt Core vs dbt Cloud - Key Differences.

dbt Cloud is a hosted environment to develop and deploy dbt Core projects. dbt Cloud leverages dbt Core, the open-source data transformation framework we all know as dbt. dbt Cloud pricing starts at $100 per developer for teams; if you are a single developer, they offer a free single seat with limited features.

When companies are ramping up with dbt, one of the hardest parts is setting up and managing dbt IDE environments. Analytics engineers coming to dbt may not be familiar with concepts like version control with git or using the command line. The dbt Cloud IDE simplifies developer onboarding by providing a web-based SQL IDE to team members so they can easily write, test, and refine data transformation code without having to install anything on their computers. Complexities like starting a git branch are tucked behind a big colorful button so users know that is the first step in their new feature journey.

Developers who are accustomed to more versatile local IDEs, such as VS Code, may find the dbt Cloud experience limiting as they cannot leverage extensions such as those from the VS Code Marketplace nor can they extend dbt Core using the vast array of Python libraries. An example of a common library employed in the dbt community is SQLFluff. With SQLFluff, teams can ensure a minimum level of code quality is achieved across developers.

DataOps is a set of practices that combines data management and software engineering to improve the quality, speed, and collaboration of data analytics teams. In an enterprise environment, implementing DataOps processes early on in a project's lifecycle is crucial.

dbt Cloud can be paired with Continuous Integration (CI) tools like GitHub Actions to validate data transformations before they are added to a production environment.

If CI checks include tools like SQLFluff and dbt-checkpoint, there is currently no way for users to run these validations in dbt Cloud before the automated CI checks execute in GitHub Actions. This may lead to a lot of back and forth between failed GitHub Actions runs and the dbt Cloud IDE to fix and commit the changes. Aspects such as code reviews and approvals will occur in the CI/CD tool of choice, such as GitHub, and dbt Cloud can report job execution status back to GitHub Actions. This allows teams to know when it is safe to merge changes to their code.

Companies that have tools like Bitbucket, Jira, and Jenkins within their corporate firewall may find it challenging to integrate with dbt Cloud.

dbt Cloud makes deploying a dbt Core project simple. It allows you to define custom environment variables and the specific dbt commands (seed, run, test) that you want to run during production runs. The dbt Cloud scheduler can be configured to trigger at specific intervals using an intuitive UI.

dbt Cloud is primarily focused on running dbt projects. Therefore, if a data pipeline has more dependencies, an external orchestration tool may be required. One such case is when you need to connect the Extract and Load step to the transformation happening in dbt. Without connecting these steps, you have no assurance that the load is complete before the transformation starts. This can be mitigated with freshness checks, but without an explicit connection between the load step and the transformation, you may be delaying transformation unnecessarily.

Fortunately, if you do use an external orchestrator, dbt Cloud offers an API to trigger dbt Cloud jobs from your orchestrator. Another reason an external orchestrator may be needed in an enterprise is when there are additional steps to run after the dbt transformation. These may include sending data to downstream BI tools, marketing campaign tools, MDM systems, or refreshing machine learning models. dbt Cloud recently announced a webhooks API that would allow for triggering steps after transformation. However, if these endpoints reside within a corporate firewall, this may not be an option and an orchestrator like Airflow may be necessary.
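As a rough sketch of what triggering a job from an orchestrator task can look like, the shell function below calls the dbt Cloud v2 job run endpoint with curl. The function name is hypothetical, the token is read from a `DBT_CLOUD_API_TOKEN` environment variable (an assumed name), and the host shown is the default multi-tenant one, which may differ for your account.

```shell
# Trigger a dbt Cloud job through the v2 REST API.
# Usage: trigger_dbt_cloud_job <account_id> <job_id>
# Expects DBT_CLOUD_API_TOKEN to be set in the environment.
trigger_dbt_cloud_job() {
  curl --fail --silent --show-error \
    --request POST \
    --header "Authorization: Token ${DBT_CLOUD_API_TOKEN}" \
    --header "Content-Type: application/json" \
    --data '{"cause": "Triggered by external orchestrator"}' \
    "https://cloud.getdbt.com/api/v2/accounts/$1/jobs/$2/run/"
}
```

An orchestrator such as Airflow would typically call this after the load step succeeds, then poll the run status before starting any downstream steps.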