Not long ago, the data analytics world relied on monolithic infrastructures: tightly coupled systems that were difficult to scale, maintain, and adapt to changing needs. These legacy setups often resulted in operational bottlenecks, delayed insights, and high maintenance costs. To overcome these challenges, the industry shifted toward what became known as the Modern Data Stack (MDS), a suite of focused tools optimized for specific stages of the data engineering lifecycle.

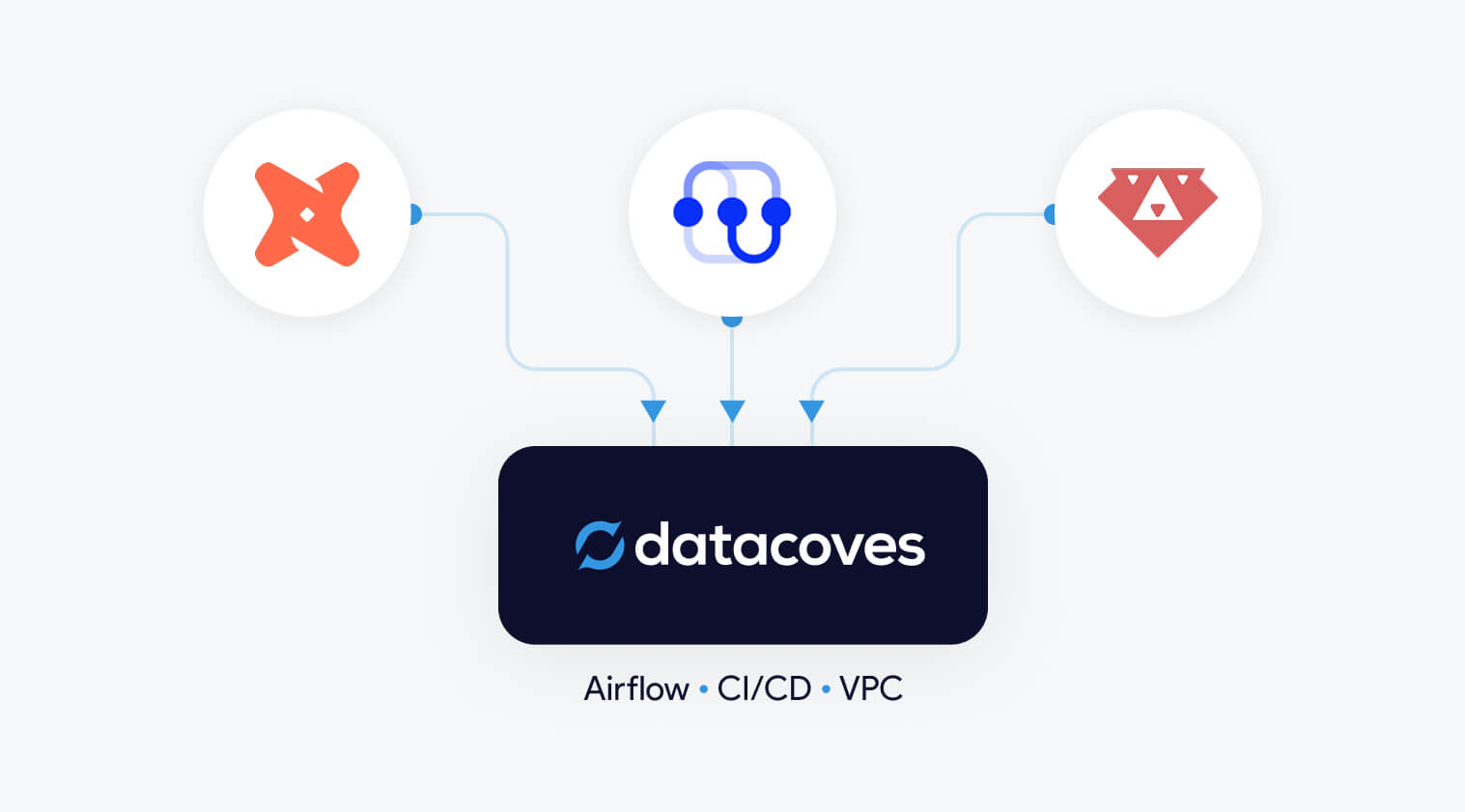

This modular approach was revolutionary, allowing organizations to select best-in-class tools for each stage, such as Airflow for orchestration (or a managed version of Airflow from Astronomer or Amazon), without the need to build custom solutions. While the MDS improved scalability, reduced complexity, and enhanced flexibility, it also reshaped the build vs. buy decision for analytics platforms. Today, instead of deciding whether to create a component from scratch, data teams face a new question: should they build the infrastructure to host open-source tools like Apache Airflow and dbt Core, or purchase their managed counterparts? This article focuses on these two components because pipeline orchestration and data transformation lie at the heart of any organization’s data platform.

When we say build in the context of open-source solutions, we mean building the infrastructure to self-host and manage mature open-source tools like Airflow and dbt. Both tools are widely adopted and have been vetted by thousands of companies. Beyond hosting and managing them, engineers must also ensure these tools interoperate with the rest of the stack and handle security, scalability, and reliability. Needless to say, building is a huge undertaking that should not be taken lightly.

dbt and Airflow both started out as open-source tools, which were freely available to use due to their permissive licensing terms. Over time, cloud-based managed offerings of these tools were launched to simplify the setup and development process. These managed solutions build upon the open-source foundation, incorporating proprietary features like enhanced user interfaces, automation, security integration, and scalability. The goal is to make the tools more convenient and reduce the burden of maintaining infrastructure while lowering overall development costs. In other words, paid versions arose out of the pain points of self-managing the open-source tools.

This raises the important question: should you self-manage or pay for your open-source analytics tools?

As with most things, both options come with trade-offs, and the “right” decision depends on your organization’s needs, resources, and priorities. By understanding the pros and cons of each approach, you can choose the option that aligns with your goals, budget, and long-term vision.

A team building Airflow in-house may spend weeks configuring a Kubernetes-backed deployment, managing Python dependencies, and setting up DAG file synchronization via S3 or Git. While the outcome can be tailored to their needs, the time and expertise required represent a significant investment.
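To make the DAG synchronization step concrete, here is a minimal Python sketch. It assumes DAG files are `.py` files in a local checkout of a Git repository (or a downloaded copy of an S3 prefix) that must be mirrored into the scheduler's DAG folder. The function names `plan_dag_sync` and `apply_dag_sync` are hypothetical; real deployments typically rely on tools such as git-sync or `aws s3 sync` for this.

```python
import hashlib
import shutil
from pathlib import Path


def file_digest(path: Path) -> str:
    """Return the SHA-256 hex digest of a file's contents."""
    return hashlib.sha256(path.read_bytes()).hexdigest()


def snapshot(folder: Path) -> dict[str, str]:
    """Map each .py file under folder (as a relative POSIX path) to its digest."""
    return {p.relative_to(folder).as_posix(): file_digest(p) for p in folder.rglob("*.py")}


def plan_dag_sync(source: Path, dest: Path) -> dict[str, list[str]]:
    """Work out which DAG files must be copied or deleted so dest mirrors source."""
    src, dst = snapshot(source), snapshot(dest)
    return {
        # New files, or files whose contents changed since the last sync.
        "copy": sorted(name for name, digest in src.items() if dst.get(name) != digest),
        # Files removed from the repository that the scheduler would otherwise keep parsing.
        "delete": sorted(name for name in dst if name not in src),
    }


def apply_dag_sync(source: Path, dest: Path) -> dict[str, list[str]]:
    """Apply the plan and return it, so callers can log what changed."""
    plan = plan_dag_sync(source, dest)
    for name in plan["copy"]:
        target = dest / name
        target.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(source / name, target)
    for name in plan["delete"]:
        (dest / name).unlink()
    return plan
```

Separating the plan from its application makes it easy to log or review what a sync would change before touching the scheduler's folder.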

Before moving on to the buy trade-offs, it is important to set the record straight. You may have noticed that we did not include “the tool is free to use” as one of the pros of building with open-source tools. That was deliberate: many people incorrectly believe that building their MDS using open-source tools like dbt is free. In reality, many factors contribute to the true cost of dbt, and the same is true for Airflow.

How can that be? Setting up and managing the infrastructure for Airflow and dbt isn’t plug and play. The day-to-day work of managing Python virtual environments, keeping dependencies in check, and tackling scaling challenges requires ongoing expertise and attention. Hiring a team to handle this becomes critical, particularly as you scale, and avoiding costly mistakes means paying the high salaries and benefits that experienced engineers command. This can easily cost anywhere from $5,000 to $26,000+/month depending on the size of your team.
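“Keeping dependencies in check” often comes down to detecting drift between the versions a team has pinned and what is actually installed in a given environment. Below is a minimal sketch using the standard library's `importlib.metadata`. The pins shown are hypothetical examples, and the injectable `lookup` parameter exists only so the check can be tested without those packages installed.

```python
from importlib import metadata
from typing import Callable, Optional


def find_dependency_drift(
    pins: dict[str, str],
    lookup: Optional[Callable[[str], str]] = None,
) -> dict[str, tuple[str, Optional[str]]]:
    """Return {package: (pinned, installed)} for every package that does not match its pin.

    `installed` is None when the package is missing from the environment entirely.
    """
    lookup = lookup or metadata.version  # default: query the current environment
    drift: dict[str, tuple[str, Optional[str]]] = {}
    for name, pinned in pins.items():
        try:
            installed: Optional[str] = lookup(name)
        except metadata.PackageNotFoundError:
            installed = None
        if installed != pinned:
            drift[name] = (pinned, installed)
    return drift


if __name__ == "__main__":
    # Hypothetical pins; in practice these would come from a lock or requirements file.
    print(find_dependency_drift({"dbt-core": "1.7.0", "apache-airflow": "2.8.1"}))
```

Running a check like this in every developer environment and on the scheduler is one way to catch the “works on my machine” drift that accumulates in self-managed setups.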

In addition to the cost of salaries, let’s look at other possible hidden costs that come with using open-source tools.

The time it takes to configure, customize, and maintain a complex open-source solution is often underestimated. It’s not until your team is deep in the weeds (resolving issues, figuring out integrations, and troubleshooting configurations) that the actual costs start to surface. With each passing day, your ROI is threatened, and you want to start gathering insights from your data as soon as possible. Datacoves helped Johnson and Johnson set up their data stack in weeks, and when issues arise, you will need expertise on hand to shorten the time to resolution.

And then there’s the learning curve. Not all engineers on your team will be seniors, and turnover is inevitable. New hires will need time to get up to speed before they can contribute effectively. This is the human side of technology: while the tools themselves might move fast, people don’t. That ramp-up period, filled with training and trial-and-error, represents a hidden cost.

Security and compliance add another layer of complexity. With open-source tools, your team is responsible for implementing best practices—like securely managing sensitive credentials with a solution like AWS Secrets Manager. Unlike managed solutions, these features don’t come prepackaged and need to be integrated with the system.
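As an illustration of that integration work, here is a minimal Python sketch that loads warehouse credentials from AWS Secrets Manager at runtime instead of hard-coding them. It assumes the secret is stored as a JSON string containing `user` and `password` keys, and that `boto3` is installed with AWS credentials configured; the secret name and function name are hypothetical. `get_secret_value` and its `SecretString` field belong to the boto3 Secrets Manager client.

```python
import json
from typing import Callable, Optional


def load_db_credentials(
    secret_id: str,
    fetch: Optional[Callable[[str], str]] = None,
) -> dict:
    """Fetch a JSON secret and return it as a dict, failing fast if keys are missing."""
    if fetch is None:
        import boto3  # imported lazily so tests can inject a fake fetcher

        client = boto3.client("secretsmanager")

        def fetch(sid: str) -> str:
            return client.get_secret_value(SecretId=sid)["SecretString"]

    creds = json.loads(fetch(secret_id))
    missing = {"user", "password"} - set(creds)
    if missing:
        # Report only key names; never put secret values in errors or logs.
        raise KeyError(f"secret {secret_id!r} is missing keys: {sorted(missing)}")
    return creds
```

A call such as `load_db_credentials("analytics/warehouse")` would then be wired into dbt profiles or Airflow connections, and deciding how that wiring happens, who can read the secret, and how it is rotated is exactly the work a managed platform would otherwise handle.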

Compliance is no different. Ensuring your solution meets enterprise governance requirements takes time, research, and careful implementation. It’s a process of iteration and refinement; every hour spent here is another hidden cost, and getting it wrong puts your security at risk.

Scaling open-source tools is where things often get complicated. Beyond everything already mentioned, your team will need to ensure the solution can handle growth. For many organizations, this means deploying on Kubernetes, which comes with a steep learning curve and operational challenges. Making sure a knowledgeable engineer is always available to handle unexpected issues and outages can itself become a challenge. Extended downtime is another hidden cost, because business users who rely on your insights are directly affected.

A managed solution for Airflow and dbt can solve many of the problems that come with building your own platform from open-source tools, offering hassle-free maintenance, an improved UI/UX, and integrated functionality. Let’s take a look at the pros.

With a solution like MWAA (Amazon Managed Workflows for Apache Airflow), teams can use managed Airflow without worrying about most of the underlying infrastructure. However, additional configuration and development are still needed to use it with dbt and to troubleshoot infrastructure issues such as containers running out of memory.

For data teams, the allure of a custom-built solution often lies in its promise of complete control and customization. However, building this requires significant time, expertise, and ongoing maintenance. Datacoves bridges the gap between custom-built flexibility and the simplicity of managed services, offering the best of both worlds.

With Datacoves, teams can leverage managed Airflow and pre-configured dbt environments to eliminate the operational burden of infrastructure setup and maintenance. This allows data teams to focus on what truly matters—delivering insights and driving business decisions—without being bogged down by tool management.

Unlike other managed solutions for dbt or Airflow, which often compromise on flexibility for the sake of simplicity, Datacoves retains the adaptability that custom builds are known for. By combining this flexibility with the ease and efficiency of managed services, Datacoves empowers teams to accelerate their analytics workflows while ensuring scalability and control.

Datacoves doesn’t just run the open-source tools; through real-world implementations, the platform has been shaped to handle enterprise complexity while simplifying project onboarding. With Datacoves, teams don’t have to compromise on features like Datacoves-Mesh (aka dbt-mesh), column-level lineage, GenAI, Semantic Layer, etc. Best of all, the company’s goal is to make you successful and remove hosting complexity without introducing vendor lock-in. Anything Datacoves does, you could do yourself given enough time, experience, and money. Finally, for security-conscious organizations, Datacoves is the only solution on the market that can be deployed in your private cloud with white-glove enterprise support.

Datacoves isn’t just a platform—it’s a partnership designed to help your data team unlock their potential. With infrastructure taken care of, your team can focus on what they do best: generating actionable insights and maximizing your ROI.

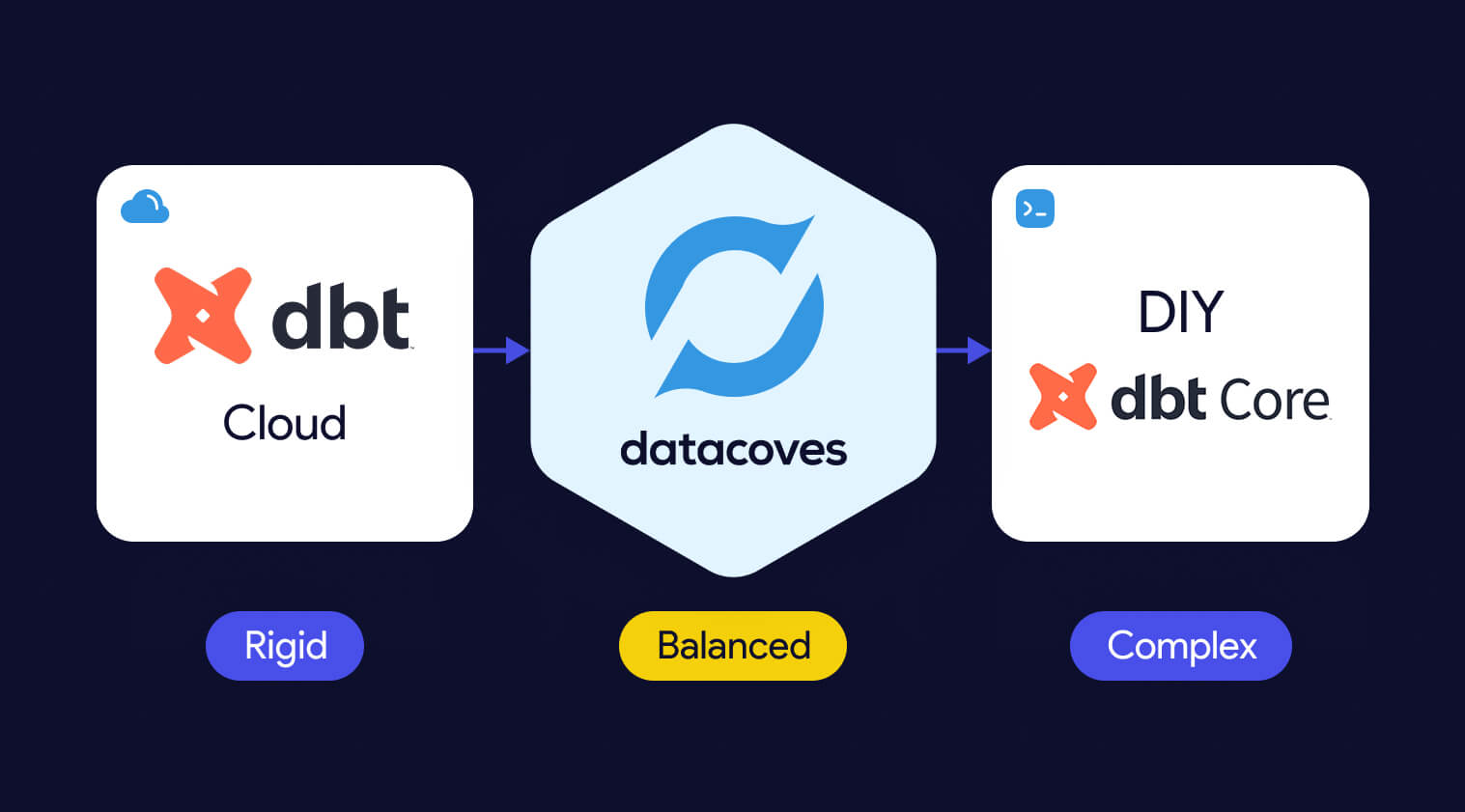

The build vs. buy debate has long been a challenge for data teams, with building offering flexibility at the cost of complexity, and buying sacrificing flexibility for simplicity. As discussed earlier in the article, solutions like dbt and Airflow are powerful, but managing them in-house requires significant time, resources, and expertise. On the other hand, managed offerings like dbt Cloud and MWAA simplify operations but often limit customization and control.

Datacoves bridges this gap, providing a managed platform that delivers the flexibility and control of a custom build without the operational headaches. By eliminating the need to manage infrastructure, scaling, and security, Datacoves enables data teams to focus on what matters most: delivering actionable insights and driving business outcomes.

As highlighted in Fundamentals of Data Engineering, data teams should prioritize extracting value from data rather than managing the tools that support them. Datacoves embodies this principle, making the argument to build obsolete. Why spend weeks—or even months—building when you can have the customization and adaptability of a build with the ease of a buy? Datacoves is not just a solution; it’s a rethinking of how modern data teams operate, helping you achieve your goals faster, with fewer trade-offs.

The top dbt alternatives include Datacoves, SQLMesh, Bruin Data, Dataform, and visual ETL tools such as Alteryx, Matillion, and Informatica. Code-first engines offer stronger rigor, testing, and CI/CD, while GUI platforms emphasize ease of use and rapid prototyping. Teams choose these alternatives when they need more security, governance, or flexibility than dbt Core or dbt Cloud provide.


Teams explore dbt alternatives when they need stronger governance, private deployments, or support for Python and code-first workflows that go beyond SQL. Many also prefer GUI-based ETL tools for faster onboarding. Recent market consolidation, including Fivetran acquiring SQLMesh and merging with dbt Labs, has increased concerns about vendor lock-in, which makes tool neutrality and platform flexibility more important than ever.

Teams look for dbt alternatives when they need stronger orchestration, consistent development environments, Python support, or private cloud deployment options that dbt Cloud does not provide.

Organizations evaluating dbt alternatives typically compare tools across three categories. Each category reflects a different approach to data transformation, development preferences, and organizational maturity.

Organizations consider alternatives to dbt Cloud when they need more flexibility, stronger security, or support for development workflows that extend beyond dbt. Teams comparing platform options often begin by evaluating the differences between dbt Cloud and dbt Core.

Running enterprise-scale ELT pipelines often requires a full orchestration layer, consistent development environments, and private deployment options that dbt Cloud does not provide. Costs can also increase at scale (see our breakdown of dbt pricing considerations), and some organizations prefer to avoid features that are not open source to reduce long-term vendor lock-in.

This category includes platforms that deliver the benefits of dbt Cloud while providing more control, extensibility, and alignment with enterprise data platform requirements.

Datacoves provides a secure, flexible platform that supports dbt, SQLMesh, and Bruin in a unified environment with private cloud or VPC deployment.

Datacoves is an enterprise data platform that serves as a secure, flexible alternative to dbt Cloud. It supports dbt Core, SQLMesh, and Bruin inside a unified development and orchestration environment, and it can be deployed in your private cloud or VPC for full control over data access and governance.

Benefits

Flexibility and Customization:

Datacoves provides a customizable in-browser VS Code IDE, Git workflows, and support for Python libraries and VS Code extensions. Teams can choose the transformation engine that fits their needs without being locked into a single vendor.

Handling Enterprise Complexity:

Datacoves includes managed Airflow for end-to-end orchestration, making it easy to run dbt and Airflow together without maintaining your own infrastructure. It standardizes development environments, manages secrets, and supports multi-team and multi-project workflows without platform drift.

Cost Efficiency:

Datacoves reduces operational overhead by eliminating the need to maintain separate systems for orchestration, environments, CI, logging, and deployment. Its pricing model is predictable and designed for enterprise scalability.

Data Security and Compliance:

Datacoves can be deployed fully inside your VPC or private cloud. This gives organizations complete control over identity, access, logging, network boundaries, and compliance with industry and internal standards.

Reduced Vendor Lock-In:

Datacoves supports dbt, SQLMesh, and Bruin Data, giving teams long-term optionality. This avoids being locked into a single transformation engine or vendor ecosystem.

Running dbt Core yourself is a flexible option that gives teams full control over how dbt executes. It is also the most resource-intensive approach. Teams choosing DIY dbt Core must manage orchestration, scheduling, CI, secrets, environment consistency, and long-term platform maintenance on their own.

Benefits

Full Control:

Teams can configure dbt Core exactly as they want and integrate it with internal tools or custom workflows.

Cost Flexibility:

There are no dbt Cloud platform fees, but total cost of ownership often increases as the system grows.

Considerations

High Maintenance Overhead:

Teams must maintain Airflow or another orchestrator, build CI pipelines, manage secrets, and keep development environments consistent across users.

Requires Platform Engineering Skills:

DIY dbt Core works best for teams with strong Kubernetes, CI, Python, and DevOps expertise. Without this expertise, the environment becomes fragile over time.

Slow to Scale:

As more engineers join the team, keeping dbt environments aligned becomes challenging. Onboarding, upgrades, and platform drift create operational friction.

Security and Compliance Responsibility:

Identity, permissions, logging, and network controls must be designed and maintained internally, which can be significant for regulated organizations.
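Building a CI pipeline for DIY dbt Core usually starts with a command that builds and tests only what a pull request changed. The sketch below assumes a production `manifest.json` has already been downloaded into a local state directory and that a `ci` target exists in `profiles.yml`. The helper names are hypothetical; `--select state:modified+`, `--state`, `--defer`, and `--target` are standard dbt Core flags.

```python
import subprocess
from typing import Sequence


def dbt_ci_command(state_dir: str, target: str = "ci", defer: bool = True) -> list[str]:
    """Build a `dbt build` invocation limited to modified nodes and their descendants."""
    cmd = [
        "dbt", "build",
        "--select", "state:modified+",  # changed nodes plus everything downstream
        "--state", state_dir,           # directory holding the production manifest.json
        "--target", target,             # isolated CI target from profiles.yml
    ]
    if defer:
        # Resolve refs to unchanged upstream models against production instead of rebuilding them.
        cmd.append("--defer")
    return cmd


def run(cmd: Sequence[str]) -> int:
    """Run the command and return its exit code; the CI job should fail when it is non-zero."""
    return subprocess.run(list(cmd)).returncode
```

Even this small step implies more infrastructure: something has to publish the production manifest after each deployment, provision the CI schema, and clean it up afterward.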

Teams that prefer code-first tools often look for dbt alternatives that provide strong SQL modeling, Python support, and seamless integration with CI/CD workflows and automated testing. These are part of a broader set of data transformation tools. Code-based ETL tools give developers greater control over transformations, environments, and orchestration patterns than GUI platforms. Below are four code-first contenders that organizations should evaluate.

Code-first dbt alternatives like SQLMesh, Bruin Data, and Dataform provide stronger CI/CD integration, automated testing, and more control over complex transformation workflows.

SQLMesh is an open-source framework for SQL and Python-based data transformations. It provides strong visibility into how changes impact downstream models and uses virtual data environments to preview changes before they reach production.
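The idea behind virtual data environments can be illustrated with a simplified Python sketch: each model gets a fingerprint derived from its SQL and its upstream fingerprints, and any fingerprint that already has a physical table can be reused instead of recomputed. This is a conceptual model with hypothetical names only. It is not SQLMesh's API or its actual algorithm, which, among other things, distinguishes breaking from non-breaking changes.

```python
import hashlib


def fingerprint(sql: str, upstream: list[str]) -> str:
    """Hash a model's SQL together with its upstream fingerprints."""
    h = hashlib.sha256(sql.encode())
    for fp in sorted(upstream):
        h.update(fp.encode())
    return h.hexdigest()[:12]


def plan_environment(
    models: dict[str, tuple[str, list[str]]],
    existing: set[str],
) -> dict[str, tuple[str, str]]:
    """Return {model: (fingerprint, "reuse" | "build")}.

    `models` maps name -> (sql, upstream model names) and must be in dependency order.
    `existing` holds fingerprints that already have physical tables (e.g. from production).
    """
    plan: dict[str, tuple[str, str]] = {}
    for name, (sql, deps) in models.items():
        # A change upstream changes this fingerprint too, so downstream models are rebuilt.
        fp = fingerprint(sql, [plan[d][0] for d in deps])
        plan[name] = (fp, "reuse" if fp in existing else "build")
    return plan
```

In this model, editing one staging model in a development environment rebuilds only that model and its descendants, while untouched models point back at tables that already exist.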

Benefits

Efficient Development Environments:

Virtual environments reduce unnecessary recomputation and speed up iteration.

Considerations

Part of the Fivetran Ecosystem:

SQLMesh was acquired by Fivetran, which may influence its future roadmap and level of independence.

Dataform is a SQL-based transformation framework focused specifically on BigQuery. It enables teams to create table definitions, manage dependencies, document models, and configure data quality tests inside the Google Cloud ecosystem. It also provides version control and integrates with GitHub and GitLab.

Benefits

Centralized BigQuery Development:

Dataform keeps all modeling and testing within BigQuery, reducing context switching and making it easier for teams to collaborate using familiar SQL workflows.

Considerations

Focused Only on the GCP Ecosystem:

Because Dataform is geared toward BigQuery, it may not be suitable for organizations that use multiple cloud data warehouses.

AWS Glue is a serverless data integration service that supports Python-based ETL and transformation workflows. It works well for organizations operating primarily in AWS and provides native integration with services like S3, Lambda, and Athena.

Benefits

Python-First ETL in AWS:

Glue supports Python scripts and PySpark jobs, making it a good fit for engineering teams already invested in the AWS ecosystem.

Considerations

Requires Engineering Expertise:

Glue can be complex to configure and maintain, and its Python-centric approach may not be ideal for SQL-first analytics teams.

Bruin is a modern SQL-based data modeling framework designed to simplify development, testing, and environment-aware deployments. It offers a familiar SQL developer experience while adding guardrails and automation to help teams manage complex transformation logic.

Benefits

Modern SQL Modeling Experience:

Bruin provides a clean SQL-first workflow with strong dependency management and testing.

Considerations

Growing Ecosystem:

Bruin is newer than dbt and has a smaller community and fewer third-party integrations.

While code-based transformation tools provide the most flexibility and long-term maintainability, some organizations prefer graphical user interface (GUI) tools. These platforms use visual, drag-and-drop components to build data integration and transformation workflows. Many of these platforms fall into the broader category of no-code ETL tools. GUI tools can accelerate onboarding for teams less comfortable with code editors and may simplify development in the short term. Below are several GUI-based options that organizations often consider as dbt alternatives.

GUI-based dbt alternatives such as Matillion, Informatica, and Alteryx use drag-and-drop interfaces that simplify development and accelerate onboarding for mixed-skill teams.

Matillion is a cloud-based data integration platform that enables teams to design ETL and transformation workflows through a visual, drag-and-drop interface. It is built for ease of use and supports major cloud data warehouses such as Amazon Redshift, Google BigQuery, and Snowflake.

Benefits

User-Friendly Visual Development:

Matillion simplifies pipeline building with a graphical interface, making it accessible for users who prefer low-code or no-code tooling.

Considerations

Limited Flexibility for Complex SQL Modeling:

Matillion’s visual approach can become restrictive for advanced transformation logic or engineering workflows that require version control and modular SQL development.

Informatica is an enterprise data integration platform with extensive ETL capabilities, hundreds of connectors, data quality tooling, metadata-driven workflows, and advanced security features. It is built for large and diverse data environments.

Benefits

Enterprise-Scale Data Management:

Informatica supports complex data integration, governance, and quality requirements, making it suitable for organizations with large data volumes and strict compliance needs.

Considerations

High Complexity and Cost:

Informatica’s power comes with a steep learning curve, and its licensing and operational costs can be significant compared to lighter-weight transformation tools.

Alteryx is a visual analytics and data preparation platform that combines data blending, predictive modeling, and spatial analysis in a single GUI-based environment. It is designed for analysts who want to build workflows without writing code and can be deployed on-premises or in the cloud.

Benefits

Powerful GUI Analytics Capabilities:

Alteryx allows users to prepare data, perform advanced analytics, and generate insights in one tool, enabling teams without strong coding skills to automate complex workflows.

Considerations

High Cost and Limited SQL Modeling Flexibility:

Alteryx is one of the more expensive platforms in this category and is less suited for SQL-first transformation teams who need modular modeling and version control.

Azure Data Factory (ADF) is a fully managed, serverless data integration service that provides a visual interface for building ETL and ELT pipelines. It integrates natively with Azure storage, compute, and analytics services, allowing teams to orchestrate and monitor pipelines without writing code.

Benefits

Strong Integration for Microsoft-Centric Teams:

ADF connects seamlessly with other Azure services and supports a pay-as-you-go model, making it ideal for organizations already invested in the Microsoft ecosystem.

Considerations

Limited Transformation Flexibility:

ADF excels at data movement and orchestration but offers limited capabilities for complex SQL modeling, making it less suitable as a primary transformation engine.

Talend provides an end-to-end data management platform with support for batch and real-time data integration, data quality, governance, and metadata management. Talend Data Fabric combines these capabilities into a single low-code environment that can run in cloud, hybrid, or on-premises deployments.

Benefits

Comprehensive Data Quality and Governance:

Talend includes built-in tools for data cleansing, validation, and stewardship, helping organizations improve the reliability of their data assets.

Considerations

Broad Platform, Higher Operational Complexity:

Talend’s wide feature set can introduce complexity, and teams may need dedicated expertise to manage the platform effectively.

SQL Server Integration Services (SSIS) is part of the Microsoft SQL Server ecosystem and provides data integration and transformation workflows. It supports extracting, transforming, and loading data from a wide range of sources, and offers graphical tools and wizards for designing ETL pipelines.

Benefits

Strong Fit for SQL Server-Centric Teams:

SSIS integrates deeply with SQL Server and other Microsoft products, making it a natural choice for organizations with a Microsoft-first architecture.

Considerations

Not Designed for Modern Cloud Data Warehouses:

SSIS is optimized for on-premises SQL Server environments and is less suitable for cloud-native architectures or modern ELT workflows.

Recent consolidation, including Fivetran acquiring SQLMesh and merging with dbt Labs, has increased concerns about vendor lock-in and pushed organizations to evaluate more flexible transformation platforms.

Organizations explore dbt alternatives when dbt no longer meets their architectural, security, or workflow needs. As teams scale, they often require stronger orchestration, consistent development environments, mixed SQL and Python workflows, and private deployment options that dbt Cloud does not provide.

Some teams prefer code-first engines for deeper CI/CD integration, automated testing, and strong guardrails across developers. Others choose GUI-based tools for faster onboarding or broader integration capabilities. Recent market consolidation, including Fivetran acquiring SQLMesh and merging with dbt Labs, has also increased concerns about vendor lock-in.

These factors lead many organizations to evaluate tools that better align with their governance requirements, engineering preferences, and long-term strategy.

DIY dbt Core offers full control but requires significant engineering work to manage orchestration, CI/CD, security, and long-term platform maintenance.

Running dbt Core yourself can seem attractive because it offers full control and avoids platform subscription costs. However, building a stable, secure, and scalable dbt environment requires significantly more than executing dbt build on a server. It involves managing orchestration and CI/CD, keeping development environments consistent, and maintaining the platform over the long term, all of which require mature DataOps practices.
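Even the “run dbt build on a server” part needs scaffolding once it runs unattended. Here is a minimal Python sketch of a job wrapper that captures output, logs failures, and retries with exponential backoff; the function name and retry policy are assumptions for illustration. Blindly re-running an entire `dbt build` can redo work that already succeeded, which is why dbt Core 1.6 and later also offer a `dbt retry` command that resumes from the point of failure.

```python
import subprocess
import sys
import time
from typing import Sequence


def run_with_retries(
    cmd: Sequence[str], retries: int = 2, backoff_s: float = 30.0
) -> subprocess.CompletedProcess:
    """Run cmd, retrying on a non-zero exit code; raise if every attempt fails."""
    for attempt in range(retries + 1):
        result = subprocess.run(list(cmd), capture_output=True, text=True)
        if result.returncode == 0:
            return result
        # Surface the failure in the job's logs before deciding whether to retry.
        print(f"attempt {attempt + 1} failed with exit code {result.returncode}", file=sys.stderr)
        print(result.stderr, file=sys.stderr)
        if attempt < retries:
            time.sleep(backoff_s * (2 ** attempt))  # 30s, 60s, 120s, ... by default
    raise RuntimeError(f"{cmd[0]} failed after {retries + 1} attempts")
```

A scheduled job might call `run_with_retries(["dbt", "build", "--target", "prod"])`. Alerting, log retention, and concurrency limits would still have to be built around it, which is the kind of accumulating work described above.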

The true question for most organizations is not whether they can run dbt Core themselves, but whether it is the best use of engineering time. This is essentially the question of whether to build or buy your data platform. DIY dbt platforms often start simple and gradually accumulate technical debt as teams grow, pipelines expand, and governance requirements increase.

For many organizations, DIY works in the early stages but becomes difficult to sustain as the platform matures.

The right dbt alternative depends on your team’s skills, governance requirements, pipeline complexity, and long-term data platform strategy.

Selecting the right dbt alternative depends on your team’s skills, security requirements, and long-term data platform strategy. Each category of tools solves different problems, so it is important to evaluate your priorities before committing to a solution.

If these are priorities, a platform with secure deployment options or multi-engine support may be a better fit than dbt Cloud.

Recent consolidation in the ecosystem has raised concerns about vendor dependency. Organizations that want long-term flexibility often look for:

Consider platform fees, engineering maintenance, onboarding time, and the cost of additional supporting tools such as orchestrators, IDEs, and environment management.
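These cost components can be combined into a rough annual estimate. The sketch below is a back-of-the-envelope model with hypothetical field names; every input is a placeholder to replace with your own figures, and it deliberately leaves out harder-to-quantify factors such as downtime and opportunity cost.

```python
from dataclasses import dataclass


@dataclass
class CostInputs:
    platform_fees_per_month: float      # subscription fees (0 for pure DIY)
    supporting_tools_per_month: float   # orchestrator hosting, IDEs, CI minutes, etc.
    maintenance_hours_per_month: float  # engineering time spent keeping the platform running
    hourly_rate: float                  # fully loaded cost of one engineering hour
    onboarding_hours_per_hire: float = 0.0
    hires_per_year: int = 0

    def annual_total(self) -> float:
        """Twelve months of recurring costs plus onboarding time for new hires."""
        monthly = (
            self.platform_fees_per_month
            + self.supporting_tools_per_month
            + self.maintenance_hours_per_month * self.hourly_rate
        )
        onboarding = self.onboarding_hours_per_hire * self.hires_per_year * self.hourly_rate
        return 12 * monthly + onboarding
```

With illustrative inputs of no platform fees, $500/month in supporting tools, 80 maintenance hours a month at $100/hour, and two hires a year needing 120 onboarding hours each, `annual_total()` returns 126000.0. The exact numbers matter less than making each assumption explicit so options can be compared on the same basis.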

dbt remains a strong choice for SQL-based transformations, but it is not the only option. As organizations scale, they often need stronger orchestration, consistent development environments, Python support, and private deployment capabilities that dbt Cloud or DIY dbt Core may not provide. Evaluating alternatives helps ensure that your transformation layer aligns with your long-term platform and governance strategy.

Code-first tools like SQLMesh, Bruin Data, and Dataform offer strong engineering workflows, while GUI-based tools such as Matillion, Informatica, and Alteryx support faster onboarding for mixed-skill teams. The right choice depends on the complexity of your pipelines, your team’s technical profile, and the level of security and control your organization requires.

Datacoves provides a flexible, secure alternative that supports dbt, SQLMesh, and Bruin in a unified environment. With private cloud or VPC deployment, managed Airflow, and a standardized development experience, Datacoves helps teams avoid vendor lock-in while gaining an enterprise-ready platform for analytics engineering.

Selecting the right dbt alternative is ultimately about aligning your transformation approach with your data architecture, governance needs, and long-term strategy. Taking the time to assess these factors will help ensure your platform remains scalable, secure, and flexible for your future needs.

Continuing from our previous blog post on the importance of implementing DataOps, we now turn our attention to the tools that can streamline your processes. We will also explore real-life examples of successful implementations that illustrate the tangible benefits of adopting DataOps practices.

Many DataOps tools can help you automate data processes, manage data pipelines, and ensure the quality of your data. These tools help data teams work faster, make fewer mistakes, and deliver data products sooner.

Here are some recommended tools needed for a robust DataOps process:

DataOps has been successfully used in the real world by companies of all sizes, from small startups to large corporations. The DataOps methodology is based on collaboration, automation, and monitoring throughout the entire data lifecycle, from collecting data to using it. Organizations can get insights faster, be more productive, and improve the quality of their data. DataOps has been used successfully in many industries, including finance, healthcare, retail, and technology.

Here are a few examples of real-world organizations that have used DataOps well:

DataOps has a bright future because more and more businesses are realizing how important data is to their success. With the exponential growth of data, managing it well is becoming increasingly important. More companies are likely to adopt DataOps as they try to streamline their data management processes and cut costs. Cloud-based data management platforms have made it easier for organizations to manage their data well; their main benefits are scalability, flexibility, and cost-effectiveness. With DataOps, teams can improve collaboration and agility and build trust in data by creating processes that test changes before they are rolled out to production.
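Here is a minimal Python sketch of testing changes before they reach production: a candidate dataset is promoted only if every data quality check passes. The `not_null` and `unique` checks mirror two of dbt's built-in generic tests (including `unique` ignoring nulls), but the functions, the row format (a list of dicts), and the `promote` callback are hypothetical stand-ins for a real warehouse and deployment step.

```python
from typing import Callable

Row = dict
Check = Callable[[list[Row]], list[str]]


def not_null(column: str) -> Check:
    """Fail when any row has a missing or null value in column."""
    def check(rows: list[Row]) -> list[str]:
        nulls = sum(1 for row in rows if row.get(column) is None)
        return [f"{column}: {nulls} null value(s)"] if nulls else []
    return check


def unique(column: str) -> Check:
    """Fail when a non-null value appears more than once in column."""
    def check(rows: list[Row]) -> list[str]:
        seen, dupes = set(), set()
        for row in rows:
            value = row.get(column)
            if value is None:
                continue  # nulls are left to not_null, as in dbt's unique test
            if value in seen:
                dupes.add(value)
            seen.add(value)
        return [f"{column}: duplicate value(s) {sorted(dupes)}"] if dupes else []
    return check


def promote_if_valid(
    rows: list[Row], checks: list[Check], promote: Callable[[list[Row]], None]
) -> list[str]:
    """Run every check; call promote only when none fail. Returns the failure messages."""
    failures = [message for check in checks for message in check(rows)]
    if not failures:
        promote(rows)
    return failures
```

Returning every failure message rather than stopping at the first one gives the team a complete picture of what blocked the release.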

With the development of modern data tools, companies can now adopt software development best practices in analytics. In today’s fast-paced world, it's important to give teams the tools they need to respond quickly to market changes using high-quality data. Companies should adopt DataOps if they want to manage data better and reduce the technical debt created by uncontrolled processes. Putting DataOps processes in place for the first time is easier said than done. DataOps requires a change in attitude, a willingness to try out new technologies and ways of doing things, and a commitment to continuous improvement. If an organization is serious about using DataOps, it must invest in the training, infrastructure, and cultural changes that are needed to make it work. With the right approach, companies can get the most out of DataOps and help their businesses deliver better outcomes.

At Datacoves, we offer a suite of DataOps tools to help organizations implement DataOps quickly and efficiently. We enable organizations to start automating simple processes and gradually build out more complex ones as their needs evolve. Our team has extensive experience guiding organizations through the DataOps implementation process.

Schedule a call with us, and we'll explain how dbt and DataOps can help you mature your data processes.

The amount of data produced today is mind-boggling and is expanding at an astonishing rate. This explosion of data has led to big data challenges that organizations must overcome to remain competitive. To derive actionable insights, organizations must manage data effectively and guarantee its accuracy and quality. This is where DataOps comes in. In this first post, we give an overview of DataOps; in our next post, we will discuss tooling that supports DataOps and look at companies that have benefited from implementing DataOps processes.

DataOps is a methodology that merges DevOps principles from software development with data management practices. This helps to improve the process of developing, deploying, and maintaining data-driven applications. The goal is to accelerate the delivery of new insights, and the approach emphasizes collaboration between data scientists, data engineers, data analysts, and analytics engineers.

Automation and continuous delivery are leveraged for faster deployment cycles. Organizations can enhance their data management procedures, decrease errors, and increase the accuracy and timeliness of their data by implementing DataOps.

The overarching goal is to improve an organization's capacity for decision-making by facilitating quicker, more precise data product deliveries. DataOps can speed up task completion with fewer errors by encouraging effective team collaboration, ultimately enhancing the organization's success.

DataOps is now feasible thanks to modern data stack tools. Modern data tools like Snowflake and dbt can enable delivery of new features more quickly while upholding IT governance standards. This empowers employees and improves their agility, all while helping to create a culture that values data-driven decision-making. Effective DataOps implementation enables businesses to maximize the value of their data and build a data-driven culture for greater success.

It is impossible to overstate the role that DataOps plays in overcoming big data challenges. Organizations must learn how to manage data effectively as it continues to increase if they want to stay competitive.

DataOps addresses these challenges by providing a set of best practices and tools that enable data teams to work more efficiently and effectively. Some of the key benefits of DataOps include:

In a world without DataOps, data-related processes can be slow and error-prone, and documentation is often outdated. DataOps provides the opportunity to rethink how data products are delivered. It promotes a culture of federated delivery, in which everyone in the organization with the necessary skills is empowered to participate in insight generation.

By learning from large, distributed open-source software projects like Linux, we can achieve comparable results while preserving governance over the delivery process. To ensure high-quality output, a good DataOps process emphasizes rules and procedures that are monitored and controlled by a core team.

To implement DataOps well, one needs to take a holistic approach that brings together people, processes, and technology. Here are the five most important parts of DataOps that organizations need to think about in order to implement it successfully:

Having identified what a good DataOps implementation requires, you may be wondering how to turn these ideas into actionable steps.

At Datacoves, we offer a comprehensive suite of DataOps tools to help organizations implement robust processes quickly and efficiently. One of the key benefits of using Datacoves is the ability to implement DataOps incrementally using our dynamic infrastructure. Organizations can start by automating simple processes, such as data collection and cleaning, and gradually build out more complex processes as their needs evolve.

Our agile methodology allows for iterative development and continuous deployment, ensuring that our tools and processes are tailored to meet the evolving needs of our clients. This approach allows organizations to see the benefits of DataOps quickly, without committing to a full-scale implementation upfront. We have helped teams implement mature DataOps processes in as little as six weeks.

The Datacoves platform is being used by top global organizations and our team has extensive experience in guiding organizations through the DataOps implementation process. We ensure that you benefit from best practices and avoid common pitfalls when implementing DataOps.

Set aside some time to speak with us to discuss how we can support you in maturing your data processes with dbt and DataOps.

Don't miss our latest blog post, where we showcase the best tools and provide real-life examples of successful implementations.

Get ready to take your data operations to the next level!

No one bakes for the sake of baking alone; cakes are meant to be shared. If no one bought, ate, or gifted someone with their delicious chocolate cake, then there would be no bakers. The same is true for data analytics. Our goal as data practitioners is to feed our organization with the information needed to make decisions.

If our cake doesn’t taste good or isn’t available when people want dessert, then it doesn’t matter that we made it from scratch. When it comes to big data, your goal should be to have the equivalent of a delicious cake - usable data - available when someone needs it.


Life would be simple if everyone were happy with a single flavor of cake. Metrics play a crucial role in our organization, and two of the most fundamental ones are ARR (Annual Recurring Revenue) and NRR (Net Revenue Retention). These metrics are like chocolate and vanilla: they remain popular and relevant. Yet these flavors alone are not enough. Just as we eventually want to try a new ice cream flavor, we eventually want new insights. It's important to experiment and explore different perspectives.

When something is novel, we love it. When we first start baking, we are not consistent. But, over time, quality improves. We go from ok, to good, and to great. Even with our newfound expertise, the chocolate cake will become boring. We all want something new.

With data, you often start with a simple metric. Having something is better than nothing, but that only lasts so long. We discover something new about our business and we translate that information into action. This is good for a while, but we will eventually see diminishing returns. While an LTV (Lifetime Value) analysis may have a significant impact today, its usefulness is likely to diminish within a short period of time. Your stakeholders will crave something new, something more innovative and updated.

Someone is going to ask for a deep dive into how CAC (Customer Acquisition Cost) impacts LTV, or they might ask for a new kind of icing on that cake you just made. Either way, the point remains: as those around you start using (or eating) what you’re providing, they will inevitably ask for something more.

It depends. Some organizations can do more than enough with the reports and dashboards built into their CRM, web analytics, or e-commerce system. This works for many companies. There is no need to spend extra time and money on more complex data systems when something simple will suffice. There is a reason that chocolate and vanilla are popular flavors; many people like their taste and know what they are getting with their order. The same can be said for your data infrastructure.

However, flexibility is the challenge. You are limited by the reporting options and data analytics available to you. You can have a cake you can eat, but it’s going to look a certain way, with only one type of icing, and it certainly will not have any premium fillings.

If you want those things, you need to look for something a bit more nuanced.

How do you start to accommodate your organization’s new demand for analysis or your family’s newly refined palate? By using new tools and techniques.

The Easy Bake Oven is the simplest first step – you get a pouch of ingredients, mix them, and within a few minutes, you have a cake. You might think it’s a children’s toy, but there are very creative recipes out there for the adventurous types. Unfortunately, you can only go so far; you’re limited by the size of the oven and the speed you can bake each item.

The equivalent in the data world is Excel: a fabulous tool, but one with limitations. While it is possible to extract data from your tools and manipulate it in a spreadsheet to make it more manageable, you are still limited by the pre-designed extracts offered by the vendor tools. Excel is flexible enough for many, but it is not a perfect end-state reporting solution for everyone.

Eventually, you’ll need to address inconsistencies between systems and automate the process of data prep before it gets into your spreadsheet.

We have a feel for the basics, but now we want to improve our process. There are certain things we do repeatedly, regardless of the recipes we’re making. We need to create an assembly line. Whether we’re calculating metrics or mixing the wet ingredients and the dry ingredients, there are steps we need to complete in a specific order and to a certain level of quality.

We’ve moved on from the Easy Bake Oven and are now baking using a full-size oven. We need to be more careful about our measurements, ensure the oven is at the correct temperature, and write down notes about the various steps in our more complicated recipes. We need to make sure that different cakes, fillings, and decorations are ready at the same time, even if they have wildly different prep times. We need consistent results every time we make the cake, and we need others to be able to make the same recipe and achieve the same results by following our instructions.

Documenting and transferring this knowledge to others is difficult. Sure, you can write things down, but it is easy to skip a step that you take for granted. Perhaps your handwriting is hard to read, or someone is having trouble with the oven. You may be aware that using a hair dryer while baking can cause the circuit breaker to trip, but unless you document this information, others will not know this quirk. If your recipe has changed, such as using individual ingredients instead of a pre-made cake mix, it is important to clearly specify these adjustments, otherwise your friends and family may struggle to replicate your delicious cake.

There are plenty of data analytics tools out there that assist at this stage; Alteryx, Tableau Prep, and Datameer are just a few of many. In large enterprise organizations, you might find Informatica PowerCenter, Talend, or Matillion. These tools have graphical user interfaces (GUIs) that give you the flexibility to extract, load, inspect, and transform data. Many enable you to define and calculate metrics. But they require you to work within each tool’s set of rules and constraints. This works well if you are just starting out and need something less complex.

The process that was once simple now is not; there are hidden assumptions, configurations, and requirements. You’re not using a pen-and-paper recipe anymore; now you’re working within a new system.

GUI-based tools are great for companies whose workflows fit the way the systems work. But because of the way some tools are licensed and the skill needed to use them, they are often available only to the IT organization. This leaves users to find shortcuts, develop workarounds, and become dependent on the business's shadow IT. Inevitably, you’re going to run into maintainability issues.

Recipes often have plenty of steps and ingredients; “data recipes” are no different. There are dependencies between operations, different run times for different transformations, different release cadences, and data availability SLAs. Your team might be able to manage your entire workflow quite well with one of these tools, but once your team starts introducing custom SQL logic or additional overlapping tools into the ecosystem, you introduce another layer of complexity. The result is an increase in the total cost of ownership.

Often, this complexity is opaque, too. It is not obvious what each GUI component is doing, yet the components are strung together to build something usable. Over time, the complexity continues to grow as custom SQL logic and more steps are added to the chain. Eventually, abstractions begin to form. The data engineers decide that these processing pipelines look quite similar and can be customized based on some basic configurations. Less overhead, more output.

You’re now on the path of building custom ELT (Extract, Load, and Transform) pipelines, stitched together within the constraints of a GUI-based system. For some companies, this is okay, and it works. But there is a hidden cost: it is harder to maintain high-quality inputs and outputs. The layers are tightly coupled, and a mistake in one step is not caught until the whole pipeline is complete.

IT may not be aware of downstream issues because they occur in other tools outside of their domain; one change here breaks something else there. This is like buying a ready-made cake mix and “enhancing it” with your custom ingredients. It works until it does not. One day, Duncan Hines changes their ingredients, and without you realizing, there is a bad reaction between your “enhancements” and the new mix. Your once great recipe is not so great anymore, but there was no way for Duncan Hines to know. They expected you to follow their instructions; everything has been going according to plan until now.

Even if your tool has strong version control built in, it’s often difficult to reverse the changes before it is too late. If your recipe calls for 1 cup of sugar but you accidentally add in 11, we don’t want to wait until the cake is baked to discover the error. We want to catch that mistake as soon as it happens.
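To make “catch it as soon as it happens” concrete, here is a minimal Python sketch, assuming a pipeline is simply a list of steps, each paired with a check that runs immediately after it. The step names, the recipe, and the allowed sugar range are made up for illustration; real tools attach checks in their own ways.

```python
from typing import Callable

# A step is (name, transform, check); everything here is illustrative.
Step = tuple[str, Callable[[dict], dict], Callable[[dict], bool]]


def run_pipeline(batch: dict, steps: list[Step]) -> dict:
    """Run each step, then validate its output immediately (fail fast)."""
    for name, transform, check in steps:
        batch = transform(dict(batch))  # hand each step a copy of the batch
        if not check(batch):
            raise ValueError(f"check failed after step '{name}': {batch}")
    return batch


def add_sugar(cups: float) -> Callable[[dict], dict]:
    def step(batch: dict) -> dict:
        batch["sugar_cups"] = cups
        return batch
    return step


def bake(batch: dict) -> dict:
    batch["baked"] = True
    return batch


def recipe(sugar_cups: float) -> list[Step]:
    return [
        # Hypothetical rule: between 0 and 2 cups of sugar is acceptable.
        ("add sugar", add_sugar(sugar_cups), lambda b: 0 < b["sugar_cups"] <= 2),
        ("bake", bake, lambda b: b["baked"]),
    ]


print(run_pipeline({}, recipe(1)))  # {'sugar_cups': 1, 'baked': True}
try:
    run_pipeline({}, recipe(11))
except ValueError as err:
    print(err)  # check failed after step 'add sugar': {'sugar_cups': 11}
```

Because the check runs right after the sugar step, the 11-cup mistake stops the pipeline before anything reaches the oven.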

Everything up to this point serves a specific profile of a business, but what happens when the business matures beyond what these tools offer? What happens when you know how to bake a cake, but struggle to consistently produce hundreds or thousands of the same quality cakes?

When we aim to expand our baking operations, it's crucial to maintain consistency among bakers, minimize accidental mistakes, and have the ability to swiftly recover from any errors that occur. We need enough mixers and ovens to support the demand for our cakes, and we need an organized pantry with the correct measuring cups and spoons. We need to know which ingredients are running low, which are delayed, and which have common allergens.

In data analytics, we have our own supply chain, often called ELT. Tools like Airbyte and Fivetran are common choices for this “ingredient delivery” of data. They manage data extraction and ingestion so you can skip the manual CSV downloads that once served you so well.

We want to ensure quality, have traceability, document our process, and successfully produce and deliver our cakes. To do this, we need a repeatable process, with clear sequential steps. In the world of baking, we use recipes to achieve this.

All baking recipes have a series of steps, some of which are common across different recipes. For example, creaming eggs and sugar, combining the wet and dry ingredients, and whipping the icing are repeatable steps that, when performed in the correct order, result in a delicious dessert. Recipes also follow a standard format: preheat the oven to the specified temperature, list the ingredients in the order in which they are used, and provide the preparation steps last. The sequence is intentional and provides a clear understanding of what to expect during the baking process.

We can apply this model to our data infrastructure by using a tool called dbt (data build tool). Instead of repeating miscellaneous transformation steps in various places, we can centralize our transformation logic into reusable components. Then we can reference those components throughout our project. We can also identify which data is stale, review the chain of dependencies between transformations, and capture the documentation alongside that logic.
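As a rough sketch of what the “chain of dependencies” means, the Python below models a hypothetical dbt project as a mapping from each model to the models it references with ref(), then derives a build order and one model's upstream lineage. The model names are invented, and dbt builds and manages its own graph internally; this only illustrates the idea. It uses graphlib from the standard library (Python 3.9+).

```python
from graphlib import TopologicalSorter

# Hypothetical project: each model -> the models it selects from via ref().
dependencies: dict[str, set[str]] = {
    "stg_orders": set(),
    "stg_customers": set(),
    "int_orders_enriched": {"stg_orders", "stg_customers"},
    "fct_revenue": {"int_orders_enriched"},
}

# Upstream models always come before the models that depend on them.
build_order = list(TopologicalSorter(dependencies).static_order())


def upstream(model: str, deps: dict[str, set[str]]) -> set[str]:
    """Walk the graph backwards to find every model that `model` depends on."""
    seen: set[str] = set()
    stack = [model]
    while stack:
        for parent in deps[stack.pop()]:
            if parent not in seen:
                seen.add(parent)
                stack.append(parent)
    return seen


print(build_order)
print(sorted(upstream("fct_revenue", dependencies)))
# ['int_orders_enriched', 'stg_customers', 'stg_orders']
```

The exact order of independent models (here the two staging models) may vary; only the parent-before-child guarantee matters.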

We no longer need a GUI-driven tool to review our data; instead, we use the process as defined in the code to inform our logic and documentation. Our new teammate can now create her cake to the same standard as everyone else, and she can be confident that she is avoiding common allergens, too.

Better yet, we have a history of changes to our process and “data recipe”. Version control and code reviews are an expectation, so we know when modifications to our ingredient list will cause a complete change in the final product. Our recipes are no longer scattered, but part of a structured system of reusable, composable steps.

Maturing the way we build data processes comes down to our readiness. When we started with our Easy Bake Oven, little could go wrong, but little could be tweaked. As we build a more robust system, we can take advantage of its increased flexibility, but we also need to maintain more pieces and ensure quality throughout a more complex process.

We need to know the difference between baking soda and baking powder. Which utensils are best, and which oven cooks most evenly? How do we best organize our new suite of recipes? How do you set up the kitchen and install the appliances? There are many decisions to make, and not every organization is ready to make them. All this can be daunting for even large organizations.

But you don’t have to do things all at once. Many organizations choose to make gradual improvements, transforming their big data process from disorganized to consistent.

You can subscribe to multiple SaaS services like Fivetran or Airbyte for data loading and use providers like dbt Cloud for dbt development. If your work grows increasingly complex, you can make use of another set of tools (such as Astronomer or Dagster) to orchestrate your end-to-end process. You will still need to develop the end-to-end flow, so what you gained in flexibility you have lost in simplicity.

This is what we focus on at Datacoves. We aim to help organizations create mature processes, even when they have neither the time nor resources to figure everything out.

We give you “a fully stocked kitchen” - all the appliances, recipes, and best practices to make them work cohesively. You can take the guess work out of your data infrastructure, and instead, use a suite of tools designed to help your team perform timely and efficient analytics.

Whether your company is early in its data analytics journey or ready to take your processes to the next level, we are here to help. If your organization has strong info-sec or compliance requirements, we can also deploy within your private cloud. Datacoves is designed to get you “baking delicious cakes” as soon as possible.

Set aside some time to speak with me and learn how Datacoves has helped both small and large companies deploy mature analytics processes from the start. Also check out our case studies to see some of our customers' journeys.

Imagine yourself baking the next great cake at your organization. You can do it quickly with our help.

When you are learning to use a new tool or technology, one of the hardest things is learning all the new terminology. As we pick up language throughout our lives, we associate words with our mental model of what they represent. The next time we see a word, that picture pops up in our head, and if the word is now being used to mean something new, we must create a new mental model. In this post, we introduce some core dbt (data build tool) terminology and how it all connects.

Language understanding is interesting in that once we have a mental model of a term, we have a hard time grasping a new association. I still remember the first time I spoke to someone about the Snowflake Data Warehouse and they used the term warehouse. To me, the term had two mental models. One was a place where we store a lot of physical goods. Type Costco Warehouse into Google and the first result is Costco Wholesale, a large retailer in the US that is so big it is literally a warehouse full of goods.

I have also worked in manufacturing, so I also associated a warehouse with the place where raw materials and finished goods are stored.

In programming, we would say we are overloading the term warehouse to mean different things.

In some programming languages, function overloading or method overloading is the ability to create multiple functions of the same name with different implementations – Wikipedia
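Python has no Java- or C++-style overloading, but functools.singledispatch from the standard library is a close analogue: one function name with several implementations, chosen by the type of the first argument. The describe function below is a made-up example.

```python
from functools import singledispatch


@singledispatch
def describe(value) -> str:
    # Fallback used for any type without a registered implementation.
    return f"something: {value!r}"


@describe.register
def _(value: int) -> str:
    return f"a number: {value}"


@describe.register
def _(value: str) -> str:
    return f"a word: {value}"


print(describe(3))       # a number: 3
print(describe("bass"))  # a word: bass
print(describe(2.5))     # something: 2.5
```

Same name, different meaning depending on context: exactly the situation with “warehouse.”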

We do this type of thing all the time and don’t think twice about it. However, if I say “I need a bass” do you know what I am talking about?

In my Snowflake example, I knew the context was technology and more specifically something to do with databases, so I already had a mental model for a warehouse. It’s even in Wikipedia's description of the company.

Snowflake Inc. is a cloud computing-based data warehousing company - Wikipedia

I knew of data warehouses from Teradata and Amazon (Redshift), so it was natural for me to think of a warehouse as a technology and a place where lots of data is stored. In my mind, I quickly thought of

For those new to the term warehouse, I may have lost you already. Maybe you are new to dbt and come from the world of tools like Microsoft Excel, Alteryx, Tableau, and Power BI. If you already know all this, grant me a few minutes to bring everyone up to speed.

Let’s step back and first define a database.

A database is an organized collection of structured information, or data, typically stored electronically in a computer system - Oracle

Ok, you probably know Excel. You have probably also seen an Excel Workbook with many sheets. If you organize your data neatly in Excel like the image below, we could consider that workbook a database.

Going back to the definition above, “organized collection of structured information,” you can see that we have structured information: a list of orders with a Date, Order Quantity, and Order Amount. We also have a collection of these, namely Orders and Invoices.

In database terms, we call each Excel sheet a table and each of the columns an attribute.
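To make the analogy concrete, here is a minimal sketch using Python's built-in sqlite3 module: each sheet becomes a table and each column an attribute. The rows are invented, and the invoices columns are assumptions for illustration.

```python
import sqlite3

# An in-memory database stands in for the workbook; each sheet becomes a table.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (order_date TEXT, order_quantity INTEGER, order_amount REAL)"
)
# Hypothetical invoice columns, assumed for illustration.
conn.execute("CREATE TABLE invoices (invoice_date TEXT, invoice_amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [("2023-01-05", 3, 45.0), ("2023-01-06", 1, 15.0)],  # made-up rows
)

# The sheet's columns are the table's attributes (name is field 1 of table_info).
attributes = [row[1] for row in conn.execute("PRAGMA table_info(orders)")]
total = conn.execute("SELECT SUM(order_amount) FROM orders").fetchone()[0]
print(attributes)  # ['order_date', 'order_quantity', 'order_amount']
print(total)       # 60.0
```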

Now back to a warehouse. This was my mental model of a warehouse.

A data warehouse (DW or DWH), also known as an enterprise data warehouse (EDW), is a system used for reporting and data analysis and is considered a core component of business intelligence. DWs are central repositories of integrated data from one or more disparate sources. They store current and historical data in one single place that are used for creating analytical reports for workers throughout the enterprise - Wikipedia

Again, if you are new to all this jargon, the above definition might not make much sense to you. Let's go back to our Excel example. In an organization, many people have their own “databases” like the example above. Jane has one, Mario has another, and Elena has a third. All contain valuable information we want to combine in order to make better decisions. So instead of keeping these Excel workbooks separate, we put them all together into a database, and now we call that a warehouse. We use this central repository for our “business intelligence.”
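Continuing the spreadsheet analogy, the sketch below combines made-up orders from Jane's, Mario's, and Elena's workbooks into one central table, keeping a source column so you can still tell where each row came from. SQLite simply stands in for the idea; a real warehouse such as Snowflake or Redshift works at a very different scale.

```python
import sqlite3

# Made-up (order_date, order_amount) rows from three separate "workbooks".
workbooks = {
    "Jane": [("2023-01-05", 45.0)],
    "Mario": [("2023-01-05", 20.0), ("2023-01-06", 15.0)],
    "Elena": [("2023-01-06", 30.0)],
}

warehouse = sqlite3.connect(":memory:")
warehouse.execute(
    "CREATE TABLE orders (source TEXT, order_date TEXT, order_amount REAL)"
)
for owner, rows in workbooks.items():
    # Tag every row with the workbook it came from.
    warehouse.executemany(
        "INSERT INTO orders VALUES (?, ?, ?)",
        [(owner, order_date, amount) for order_date, amount in rows],
    )

# One query now answers a question no single workbook could.
daily = warehouse.execute(
    "SELECT order_date, SUM(order_amount) FROM orders "
    "GROUP BY order_date ORDER BY order_date"
).fetchall()
print(daily)  # [('2023-01-05', 65.0), ('2023-01-06', 45.0)]
```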

So, knowing all of this, when I heard of a Snowflake warehouse the above is what I thought. It is the place where we have all the data, duh. Just like Redshift and Teradata. But look at what the people at Snowflake did, they changed the meaning on me.

A virtual warehouse, often referred to simply as a “warehouse”, is a cluster of compute resources in Snowflake. - Snowflake

The term warehouse here is no longer about the storage of things; it now means a “cluster of compute.” A what of what?

Ok, let’s break this down. You are probably reading this on a laptop or some other mobile device. That device stores all your documents, and when you perform some actions it “computes” certain things. In Snowflake, the storage of the information is separate from, and independent of, the computation performed on it. So you can store things once and connect different “computers” to them. Imagine you were performing a task on your laptop and it was slow. What if you could reach into your desk drawer, pull out a faster computer, and speed up that task? In Snowflake, you can. Also, instead of having just one computer do the work, a warehouse is a cluster of computers working together to get the job done even faster.

As you can see, language is tricky, and creating a shared understanding of it is crucial to advancing your understanding and mastery of the technology. Every Snowflake user develops the new mental model for a warehouse and using it is second nature, but we forget that these terms that are now natural to us may still be confusing to newcomers.

Let’s start with dbt. When you join the dbt Slack community, you will inevitably learn that the preferred way to write dbt is all lowercase. Not DBT, not Dbt, just dbt. I still don’t know exactly why, but you may have noticed that everyone in this space always writes “dbt (Data Build Tool).”

If you have some knowledge of behavioral therapy, you may already know that DBT has a different meaning: dialectical behavior therapy (DBT).

Dialectical behavioral therapy (DBT) is a type of cognitive-behavioral therapy. Cognitive-behavioral therapy tries to identify and change negative thinking patterns and pushes for positive behavioral changes.

Did you notice how they do the inverse? They spell out dialectical behavior therapy and put DBT in parentheses. So maybe the folks at Fishtown Analytics (now dbt Labs) came across this other meaning of DBT and chose to differentiate by using lowercase, or maybe it was to mess with all of the newbies, lol.

So update your auto-correct and don’t let dbt become DBT or Dbt or you will hear from someone in the community, haha.

Now let’s do a quick rundown of terms you will hear in dbt land which may confuse you as you start your dbt journey. I will link to the documentation with more information. My job here is to hopefully create a good mental model for you, not to teach you all the ins and outs of all of these things.

This is simply some data that you put into a file and make part of your project. You put it in the seeds folder within your dbt project. Don’t use seeds as the source to populate your data warehouse; they are typically small files you may use as lookup tables. If you are using an older version of dbt, the folder would be data instead of seeds. That was another source of confusion, so now the term seed and the seeds directory are more tightly connected. These files must be in CSV format; more information can be found via the link above.
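The following Python sketch approximates what dbt seed does with such a file: it reads the CSV header as column names and loads the remaining rows into a table. The file contents are invented, SQLite stands in for your warehouse, and every column is stored as TEXT for simplicity, whereas dbt infers column types.

```python
import csv
import io
import sqlite3

# A small lookup file like one you might keep in the seeds folder (made-up contents).
country_codes_csv = """country_code,country_name
US,United States
MX,Mexico
CA,Canada
"""


def load_seed(conn: sqlite3.Connection, table: str, csv_text: str) -> sqlite3.Connection:
    """Create a table from CSV text: header row -> columns, other rows -> data."""
    reader = csv.reader(io.StringIO(csv_text))
    header = next(reader)
    columns = ", ".join(f'"{name}" TEXT' for name in header)
    conn.execute(f'CREATE TABLE "{table}" ({columns})')
    placeholders = ", ".join("?" for _ in header)
    conn.executemany(f'INSERT INTO "{table}" VALUES ({placeholders})', reader)
    return conn


conn = load_seed(sqlite3.connect(":memory:"), "country_codes", country_codes_csv)
print(conn.execute(
    "SELECT country_name FROM country_codes WHERE country_code = 'MX'"
).fetchone())  # ('Mexico',)
```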

Jinja is a templating engine with syntax similar to the Python programming language that allows you to use special placeholders in your SQL code to make it dynamic. The stuff you see with {{ }} is Jinja.

Without Jinja, there is no dbt. I mean it is the combination of Jinja with SQL that gives us the power to do things that would otherwise be very difficult. So, when you see the lineage you get in the dbt documentation, you can thank Jinja for that.
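Here is a toy illustration of why templating makes lineage possible. It is not Jinja, just a regular expression that finds {{ ref('...') }} calls in a model's SQL, records them as dependencies, and swaps each one for a fully qualified table name. The database and schema names are hypothetical; real dbt renders the full template with a Jinja environment.

```python
import re

# Matches {{ ref('model') }} or {{ ref("model") }}, with optional spaces.
REF_PATTERN = re.compile(r"""\{\{\s*ref\(\s*['"](\w+)['"]\s*\)\s*\}\}""")


def compile_model(sql: str, database: str, schema: str) -> tuple[str, list[str]]:
    """Toy compile step: record ref() parents and replace each with a table name."""
    parents = REF_PATTERN.findall(sql)
    compiled = REF_PATTERN.sub(lambda m: f"{database}.{schema}.{m.group(1)}", sql)
    return compiled, parents


model_sql = "select * from {{ ref('base_cases') }} where cases is not null"
compiled, parents = compile_model(model_sql, "analytics_dev", "dbt_me")
print(parents)   # ['base_cases']
print(compiled)  # select * from analytics_dev.dbt_me.base_cases where cases is not null
```

The list of parents is what lets a tool draw the lineage graph; the substituted name is what actually runs in the database.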

I knew you would have this question. Well, a macro is simply a reusable piece of code. This too adds to the power of dbt. Every newcomer to dbt will quickly learn about the ref and source macros. These are the cornerstone of dbt. They help capture the relationship and sequence of all your data transformations. Sometimes you are using macros and you may not even realize it. Like the not_null test in your yml file, that’s a macro.

Behind the scenes, dbt takes the information in your yml file and passes it as parameters to this macro. In my example, the parameter model gets replaced with base_cases (along with the database name and schema name) and column_name gets replaced with cases. The compiled version of this test looks like this:

There are dbt packages like dbt-expectations that extend the core dbt tests by adding a bunch of test macros, so check it out.
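Under the hood, a dbt test like not_null is a query that selects the rows violating the rule; the test passes when that query returns no rows. The Python sketch below mimics that with SQLite. The SQL is only an approximation of the generic not_null test, and the rows in base_cases are made up.

```python
import sqlite3


def not_null_test_sql(model: str, column_name: str) -> str:
    # Approximate shape of a not_null test: select the rows that fail.
    return f"select {column_name} from {model} where {column_name} is null"


def run_test(conn: sqlite3.Connection, sql: str) -> str:
    failures = conn.execute(sql).fetchall()
    return "PASS" if not failures else f"FAIL {len(failures)}"


conn = sqlite3.connect(":memory:")
conn.execute("create table base_cases (cases integer)")
conn.executemany("insert into base_cases values (?)", [(10,), (None,), (7,)])

print(run_test(conn, not_null_test_sql("base_cases", "cases")))  # FAIL 1
conn.execute("delete from base_cases where cases is null")
print(run_test(conn, not_null_test_sql("base_cases", "cases")))  # PASS
```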

What do you do when you have a lot of great macros that you want to share with others in the community? You create a dbt package of course.

But what is a dbt package? A package is simply a mini dbt project that can be incorporated into your dbt project via the packages.yml file. There are a ton of great packages and the first one you will likely run into is dbt-utils. These are handy utilities that will make your life easier. Trust me, go see all the great things in the dbt-utils package.

Packages don’t just have macros though. Remember, they are mini dbt projects, so some packages incorporate some data transformations to help you do your analytics faster. If you and I both need to analyze the performance of our Google Ads, why should we both have to start from scratch? Well, the fine folks over at Fivetran thought the same thing and created a Google Ads package to help.

When you run the command dbt deps, dbt will look at your packages.yml file and download the specified packages to the dbt_packages directory of your dbt project. If you are on an older version of dbt, packages will be downloaded to the dbt_modules directory instead, but again you can see how this could be confusing hence the updated directory name.

There are many packages and new ones arrive regularly. You can see a full listing on dbt hub.

This is the website maintained by dbt Labs with a listing of dbt packages.

As a side note, we at Datacoves also maintain a similar listing of Python libraries that enhance the dbt experience in our dbt Libraries page. Check out all the libraries that exist. From additional database adapters to tools that can extract data from your BI tool and connect it with dbt, there’s a wealth of great open-source projects that take dbt to another level. Keep in mind that you cannot install Python libraries on dbt Cloud.

These are the SQL files you find in the models directory. These files specify how you want to transform your data. By default, each of these files creates a view in the database, but you can change the materialization of a model to something else and for example, have dbt create a table instead.

Materializations define what dbt will do when it runs your models. Basically, when you execute dbt run this is what happens.
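To illustrate what a materialization does, this minimal sketch takes an already compiled SELECT and wraps it in either a create view or a create table statement, then runs it in SQLite. Real dbt materializations do considerably more (for example, handling relations that already exist and generating adapter-specific SQL), and the table names here are hypothetical.

```python
import sqlite3


def materialize(compiled_select: str, relation: str, materialization: str = "view") -> str:
    """Wrap a compiled SELECT in DDL, loosely like dbt's view/table materializations."""
    if materialization == "view":
        return f"create view {relation} as {compiled_select}"
    if materialization == "table":
        return f"create table {relation} as {compiled_select}"
    raise ValueError(f"unsupported materialization: {materialization}")


conn = sqlite3.connect(":memory:")
conn.execute("create table raw_cases (cases integer)")
conn.executemany("insert into raw_cases values (?)", [(3,), (None,), (5,)])

select_sql = "select cases from raw_cases where cases is not null"
conn.execute(materialize(select_sql, "base_cases_view"))            # default: view
conn.execute(materialize(select_sql, "base_cases_table", "table"))  # overridden: table

# Ask SQLite what kind of object each model became.
kinds = dict(conn.execute(
    "select name, type from sqlite_master where name like 'base_cases%'"
))
print(kinds)
```

The same SELECT produces a view or a table; only the wrapper changes, which is why switching a model's materialization does not require rewriting its SQL.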

All the code that dbt compiles and runs can be found in the dbt target directory.

This term can be ambiguous to a new dbt user. This is because in dbt we use it interchangeably to mean two different things. As I used it above, I meant the directory within your dbt project where dbt commands write their output. If you look in this directory, you will see the compiled and run directories where I found the code I showed above.

Now that you know what dbt is doing under the hood, you can look in this directory to see what will be executed in the database. When you need to do some debugging, you should be able to take code directly from the compiled directory and run it on your database.

This is the other meaning for target. It refers to where dbt will create/materialize the objects in your database.

Again, dbt first compiles your model code and creates the files in the compiled directory. It then wraps the compiled code with the specified materialization and saves the resulting code in the run directory. Finally, it executes that code in your database target. It is the final file in the run directory that is executed in your database.

The image above is the code that runs in my Snowflake instance.

But how does dbt know which database target to use? You told it when you set up your dbt profile which is normally stored in a folder called .dbt in your computer's home folder (dbt Cloud and Datacoves both abstract this complexity for you).

When you start using dbt, you learn of a file called profiles.yml. This file has your connection information to the database and should be kept secret, as it typically contains your username and password.

This file is called profiles, plural, because you can have more than one profile which you eventually realize is where the target database is defined. Here is a case where you can argue that a better name for this file is targets.yml, but you will learn later why the name profiles.yml was probably chosen and why this name makes sense.

Notice above that I have two different dbt targets defined below the word outputs, dev and prd. dbt can only work on one target at a time so if you want to run dbt against two different databases you can specify them here. Just copy the dev target, give it a new name, and change some of the parameters.

Think of the word outputs on line 3 above as targets. Notice the line target: dev on line 2; this tells dbt which target to use as your default. In my case, unless I specify otherwise, dbt will use the dev target as my default connection. Hence it will replace the Jinja ref macro with my development database.

How would you use the other target? You simply pass the target parameter to the dbt command, like this:

dbt run --target prd or dbt run -t prd
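Here is a Python sketch of the target selection just described. The dict mirrors the profiles.yml layout from the example: a profile holding a default `target` and a set of `outputs`. The database and schema values are placeholders. The `ref` function is a deliberate simplification that ignores custom schemas and the other configuration dbt applies.

```python
from typing import Any, Dict, Optional

# A Python dict mirroring the profiles.yml structure described above.
# Values are placeholders; a real file also holds account, user, password, etc.
PROFILES: Dict[str, Any] = {
    "default": {
        "target": "dev",  # used when no --target/-t is passed
        "outputs": {
            "dev": {"type": "snowflake", "database": "DEV_DB", "schema": "ANALYTICS"},
            "prd": {"type": "snowflake", "database": "PRD_DB", "schema": "ANALYTICS"},
        },
    }
}


def resolve_target(profile: Dict[str, Any], cli_target: Optional[str] = None) -> Dict[str, Any]:
    """Pick the output named on the command line, else the profile's default target."""
    name = cli_target or profile["target"]
    outputs = profile["outputs"]
    if name not in outputs:
        raise KeyError(f"target '{name}' is not defined under outputs")
    return outputs[name]


def ref(model_name: str, target: Dict[str, Any]) -> str:
    """Simplified ref(): qualify a model with the target's database and schema."""
    return f"{target['database']}.{target['schema']}.{model_name}"


# dbt run                  -> default target (dev)
print(ref("orders", resolve_target(PROFILES["default"])))  # DEV_DB.ANALYTICS.orders
# dbt run --target prd     -> prd target
print(ref("orders", resolve_target(PROFILES["default"], "prd")))  # PRD_DB.ANALYTICS.orders
```

Because only the target changes, the same model code builds in development or production without edits.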

What is that default: thing on the first line of my profiles.yml file?

Well, you see, that’s the name of your dbt profile, which by default is, well, default.

The dbt project is what is created when you create a project via the dbt init command. It includes all of the folders you typically associate with a dbt project and includes a configuration file called dbt_project.yml. If you look at your dbt_project.yml file, you will find something similar to this.

In line 10 you can see which profile dbt will look for in your profiles.yml file. If I change that line and try to run dbt, I will get an error.

NOTE: For those paying close attention, you may have noticed that I used -s and not -m when selecting a specific model to run. -s (short for --select) is the newer, preferred way to select what dbt will run.

So now you see why profiles.yml is called profiles.yml and not targets.yml: you can have multiple profiles in the file. In practice, I think people normally have only one profile, but nothing prevents you from creating more, and it can be handy if you have multiple dbt projects, each with different connection information.

Those smart folks at Fishtown Analytics built in this flexibility for a very specific use case. You see, they were originally an analytics consulting company and developed dbt to help them do their work more efficiently. You can imagine that they were working with multiple clients whose project timelines overlapped. By having multiple profiles, they could point each client's dbt project to a different profile in profiles.yml, each holding that client's database connection information. Something like this.

Now that I have a profile called company_a in my profiles.yml that matches what I defined in my dbt_project.yml, dbt will run correctly.
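Here is a minimal sketch of how the `profile:` key in dbt_project.yml selects one of several profiles. The client profiles, the database names, and `load_profile` are hypothetical. The error text only approximates the message dbt prints when it cannot find a matching profile.

```python
from typing import Any, Dict

# Hypothetical parsed contents of profiles.yml with one profile per client.
PROFILES: Dict[str, Any] = {
    "company_a": {"target": "dev", "outputs": {"dev": {"database": "COMPANY_A_DEV"}}},
    "company_b": {"target": "dev", "outputs": {"dev": {"database": "COMPANY_B_DEV"}}},
}


def load_profile(project_config: Dict[str, Any], profiles: Dict[str, Any]) -> Dict[str, Any]:
    """Return the profile named by the `profile:` key of dbt_project.yml."""
    name = project_config["profile"]
    if name not in profiles:
        # dbt stops with an error in this situation; this wording is our approximation.
        raise LookupError(f"Could not find profile named '{name}'")
    return profiles[name]


# Parsed dbt_project.yml for client A's project (hypothetical).
project_a = {"name": "client_a_project", "profile": "company_a"}
print(load_profile(project_a, PROFILES)["outputs"]["dev"]["database"])  # COMPANY_A_DEV

# A project still pointing at the old profile name fails to load.
try:
    load_profile({"name": "client_a_project", "profile": "default"}, PROFILES)
except LookupError as err:
    print(err)  # Could not find profile named 'default'
```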

There is a ton of stuff to learn in your dbt journey and starting out with a solid foundation can help you better communicate and quickly progress through the learning curve.

Fishtown Analytics, now dbt Labs, created dbt to meet a real need they had, and some of their shared vocabulary made it into the names we now use in the community. Those of us who have made it past the initial learning curve sometimes forget how daunting all the terminology can be for a newcomer.

There is a wealth of information you can find in the dbt documentation and our own dbt cheat sheet, but it takes some time to get used to all the new terms and understand how it's all connected. So next time you come across a newbie, think about the term that you are about to use and the mental model they will have when you tell them to update the seed. We need to take our new dbt seeds (people) and mature them into strong trees.

In 3 Core Pillars to a Data-Driven Culture, I discussed the reasons why decision makers don’t trust analytics. I then outlined the alignment and change management aspects of any solution. Once you know what you want, how do you deliver it? The cloud revolution has brought a new set of challenges for organizations that have nothing to do with delivering solutions. The main problem is that people are faced with a Cheesecake Factory menu, and most people would be better served with Omakase.

For those who may not be aware, The Cheesecake Factory menu has 23 pages and over 250 items to choose from. There are obviously people who want the variety and there is certainly nothing wrong with that, but my best meals have been where I have left the decision to the chef.

In a Japanese restaurant, Omakase is a meal consisting of dishes selected by the chef; it literally means “I'll leave it up to you.”

How does this relate to the analytics landscape? Well, there is a gold rush in the analytics space. There is a lot of investment and there are literally hundreds of tools to choose from. I have been following this development over the last five years and if anything, the introduction of tools has accelerated.

Most people are where I was back in 2016. While I had been doing work in this space for many years, the cloud and big data space was all new to me. There was a lot I needed to learn, and I was always questioning whether I was making the right decision. I know many people today who do POC after POC to see which tool will work best. I know, because I did the same thing.

Contrast this process with my experience learning a web development framework called Ruby on Rails. When I started learning Rails in 2009, I was focused on what I was trying to build, not on the set of tools and libraries needed to create a modern web application. That’s because Rails is Omakase.

When you select Omakase in Rails, you are trusting many people with years of experience and training to share that knowledge with you. Not only does this help you get going faster, but it also brings you into a community of like-minded people, so when you run into problems, there are people ready to help. Below I present my opinionated view of a three-course meal data stack that can serve most people, along with the rationale behind it. This solution may not be perfect for everyone, but neither is Rails.

You are hungry to get going and start doing analysis, but we need to start off slowly. You want to get the data, but where do you start? Well, there are a few things to consider:

· Where is the data coming from?

· Is it structured into columns and rows, or is it semi-structured (JSON)?

· Is it coming in at high velocity?

· How much data are you expecting?

What I find is that many people want to over-engineer a solution or focus on optimizing for one dimension, usually cost, since that is simple to grasp. The problem is that if you focus only on cost, you are giving up something else, usually a better user experience. You don’t have a lot of time to evaluate solutions and build extract and load scripts, so let me make this simple. If you start with Snowflake as your database and Fivetran as your Extract and Load solution, you’ll be fine. Yes, there are reasons not to choose those solutions, but you probably don’t need to worry about them, especially if you are just starting out and you are not Apple.

Why Snowflake, you ask? Well, I have used Redshift, MS SQL Server, Databricks, Hadoop, Teradata, and others, but when I started using Snowflake, I felt like a weight was lifted. It “just worked.” Do you think you will need to mask some data at some point? They have dynamic data masking. Do you want to be able to scale compute and storage independently? They separate compute from storage too. Do you like waiting for data vendors to extract data from their system and then having to import it on your side? Or do you need to collaborate with partners and send them data? Well, Snowflake has a way for companies to share data securely. Gone are the days of moving data around; now you can securely grant access to groups within or outside your organization. Simple, elegant. What about enriching your data with external data sources? Well, they have a data marketplace too, and it is bound to grow. Security is well thought out too, and you can tell they are focused on the user experience because they do things to improve analyst happiness, like MATCH_RECOGNIZE. Oh, and they also handle structured and semi-structured data amazingly well, all without having to tweak endless knobs. With one solution, I have been able to eliminate the need to answer the questions above, because Snowflake can very likely handle your use case regardless of the answers. I can go on and on, but trust me, you’ll be satisfied with your Snowflake appetizer. If it’s good enough for Warren Buffett, it’s good enough for me.

But what about Fivetran, you say? Well, you have better things to do than replicate data from Google Analytics, Salesforce, Square, Concur, Workday, Google Ads, and so on. Here’s the full list of current connectors Fivetran supports. Just set it and forget it. No one will give you a medal for mapping data from standard data sources to Snowflake. So just do the simple thing, and let’s get to the main dish.

Now that we have all our data sources in Snowflake, what do we do? Well, I haven’t met anyone who doesn’t want some level of data quality, documentation, and lineage for impact analysis, all done in a collaborative way that builds trust in the process.

I’ve got you covered. Just use dbt. Yup, that’s it: a single tool that can do documentation, lineage, data quality, and more. dbt is a key component in our DataOps process because, like Snowflake, it just works. It was developed by people who were analysts themselves and appreciated software development best practices like DRY (Don't Repeat Yourself). They knew that SQL is the great common denominator and that all it needed was some tooling around it. It’s hard enough finding good analytics engineers, let alone ones who know Python. Leave the Python to data science and first build a solid foundation for your transformation process. Don’t worry, I didn’t forget about your ambition to create great machine learning models; Snowflake has you covered there as well, so check out Snowpark.

You will need a little more than dbt to schedule your runs and bring some order to what would otherwise become chaos, but dbt will get you a long way there. If you want to know how we solve this with Datacoves, reach out, and we’ll share our knowledge in our free 1-hour consultation.

This three-course meal is quickly coming to an end, but I couldn’t let you go home before dessert. If you need dashboards but also want self-service, you can’t go wrong with Looker. I am not the only chef saying this; have a look at this.

One big reason for choosing Looker, in addition to the above, is that version control is part of the process. If you want things that are documented, reused, and follow software development best practices, then you need to have everything in version control. You can no longer depend on the secret recipe that one of your colleagues keeps on their laptop. People get promoted, move to other companies, forget… and you need a data stack that is not brittle. So choose your dessert wisely.

There are a lot of decisions to be made when creating a great meal. You need to know your guests’ dietary needs, what you have available, and how to turn raw ingredients into a delicious plate. When it comes to data, the options and permutations are endless, and most people need to get to delivering solutions so decision makers can improve business results. While no solution is perfect, in my experience there are certain ingredients that, when put together well, enable users to start building quickly. If you want to deliver analytics your decision makers can trust, just go Omakase.

In our previous article on dbt tests, we talked about the importance of testing data and how dbt, a tool developed by dbt Labs, helps data practitioners validate the integrity of their data. In that article, we covered the various packages in the dbt ecosystem that can be used to run a variety of tests on data. Many people have legacy ETL processes and are unable to move to dbt quickly, but they can still leverage the power of dbt and, by doing so, gradually begin the transition to it. In this article, I’ll discuss how you can use dbt to test and document your data even if you are not using dbt for transformation.
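dbt's built-in `not_null` and `unique` tests work by running a query that selects the rows violating the rule. The test passes when that query returns nothing. Because such a query only reads a table, it does not matter which tool loaded the data. The Python sketch below imitates the idea with an in-memory SQLite table that stands in for a table loaded by a legacy ETL tool. The SQL is our own simplified version, not the exact SQL dbt generates. In a dbt project, you would declare these tests on a source in a YAML file instead.

```python
import sqlite3


def not_null_sql(table: str, column: str) -> str:
    # Failing rows: the column is null.
    return f"select * from {table} where {column} is null"


def unique_sql(table: str, column: str) -> str:
    # Failing rows: non-null values that appear more than once.
    return (
        f"select {column}, count(*) as n from {table} "
        f"where {column} is not null group by {column} having count(*) > 1"
    )


def run_test(conn: sqlite3.Connection, sql: str) -> int:
    """Return the number of failing rows; zero means the test passes."""
    return len(conn.execute(sql).fetchall())


# Stand-in for a table that a legacy ETL tool (not dbt) already loaded.
conn = sqlite3.connect(":memory:")
conn.execute("create table customers (customer_id integer, email text)")
conn.executemany(
    "insert into customers values (?, ?)",
    [(1, "a@example.com"), (2, None), (2, "b@example.com")],
)

for name, sql in [
    ("not_null(customer_id)", not_null_sql("customers", "customer_id")),
    ("unique(customer_id)", unique_sql("customers", "customer_id")),
    ("not_null(email)", not_null_sql("customers", "email")),
]:
    failures = run_test(conn, sql)
    print(f"{name}: {'PASS' if failures == 0 else f'FAIL ({failures})'}")
# Prints PASS for not_null(customer_id) and FAIL (1) for the other two tests.
```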

Ideally, we can prevent erroneous data from ever reaching our decision makers and this is what dbt was created to do. dbt allows us to embed software engineering best practices into data transformation. It is the “T” in ELT (Extract, Load, and Transform) and it also helps capture documentation, testing, and lineage. Since dbt uses SQL as the transformation language, we can also add governance and collaboration via DataOps, but that’s a topic for another post.

I often talk to people who find dbt very appealing but have a lot invested in existing tools like Talend, Informatica, SSIS, Python, etc. They often have gaps in their processes around documentation and data quality. While other tools exist, I believe dbt is a good alternative, and by leveraging dbt to fill the gaps in your current data processes, you open the door to incrementally moving your transformations to dbt.

Eventually dbt can be fully leveraged as part of the modern data workflow to produce value from data in an agile way. The automated and flexible nature of dbt allows data experts to focus more on exploring data to find insights.

The term ELT can be confusing; some people hear ELT and ETL and think they are fundamentally the same thing. This is muddied by marketers who try to appeal to potential customers by suggesting their tool can do it all. The way I define ELT is by making sure that data is loaded from the source without any filters or transformations. This is EL (Extract and Load). We keep all rows and all columns, and data is replicated even if there is no current need for it. While this may seem wasteful at first, it allows analytics and data engineers to react quickly to business needs. Have you ever needed to answer a question only to find that the field you needed was never imported into the data warehouse? This is common, especially with traditional thinking, where storing data was costly or companies had limited resources because their data warehouses coupled compute with storage. Today, warehouses like Snowflake have removed this constraint, so we can load all the data and keep it synchronized with the sources.

Another aspect of modern EL solutions is that they make loading and synchronizing data simple. Tools like Fivetran and Airbyte let users load data by selecting pre-built connectors for a variety of sources and choosing the destination where the data should land. Gone are the days of creating tables in the target data warehouse and dealing with changes when sources add or remove columns. The new way of working helps users set it and forget it.
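The EL behavior described above can be illustrated with a toy loader. It copies every row and column without filters and absorbs a new source column without manual table changes. This is not how Fivetran or Airbyte work internally. SQLite stands in for the warehouse, and `load_all` and `existing_columns` are hypothetical names. Table and column names are interpolated directly into SQL, which is acceptable only for trusted, illustrative input.

```python
import sqlite3
from typing import Any, Dict, List


def existing_columns(conn: sqlite3.Connection, table: str) -> List[str]:
    """Column names in table order; empty if the table does not exist yet."""
    # PRAGMA table_info returns one row per column; index 1 holds the name.
    return [row[1] for row in conn.execute(f'pragma table_info("{table}")')]


def load_all(conn: sqlite3.Connection, table: str, records: List[Dict[str, Any]]) -> None:
    """Copy every record and every field as-is: no row filters, no column selection."""
    if not records:
        return
    source_cols = sorted({col for record in records for col in record})
    current = existing_columns(conn, table)
    if not current:
        # First load: create the table with whatever columns the source sent.
        col_ddl = ", ".join(f'"{c}"' for c in source_cols)
        conn.execute(f'create table "{table}" ({col_ddl})')
    else:
        # Later loads: add new source columns instead of dropping them.
        for col in source_cols:
            if col not in current:
                conn.execute(f'alter table "{table}" add column "{col}"')
    for record in records:
        cols = list(record)
        col_list = ", ".join(f'"{c}"' for c in cols)
        placeholders = ", ".join("?" for _ in cols)
        conn.execute(
            f'insert into "{table}" ({col_list}) values ({placeholders})',
            [record[c] for c in cols],
        )
    conn.commit()


conn = sqlite3.connect(":memory:")
load_all(conn, "raw_orders", [{"id": 1, "amount": 10.0}, {"id": 2, "amount": 5.5}])
# The source later adds a column; the loader absorbs it with no manual DDL.
load_all(conn, "raw_orders", [{"id": 3, "amount": 7.0, "currency": "USD"}])
print(existing_columns(conn, "raw_orders"))  # ['amount', 'id', 'currency']
```

In this sketch, older rows simply hold NULL in the new column, and a column the source stops sending arrives as NULL in new rows, so nothing has to be rebuilt by hand.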